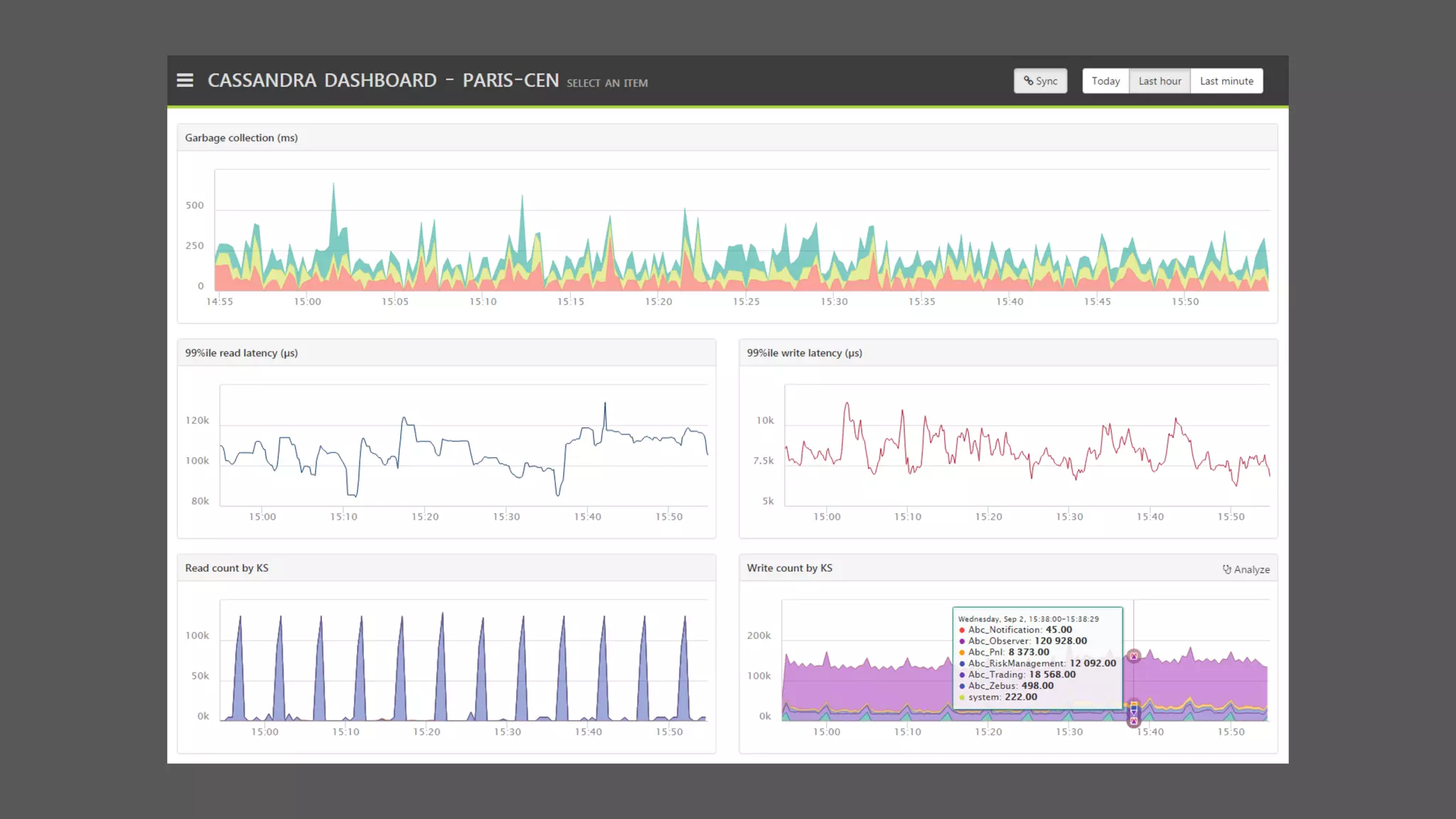

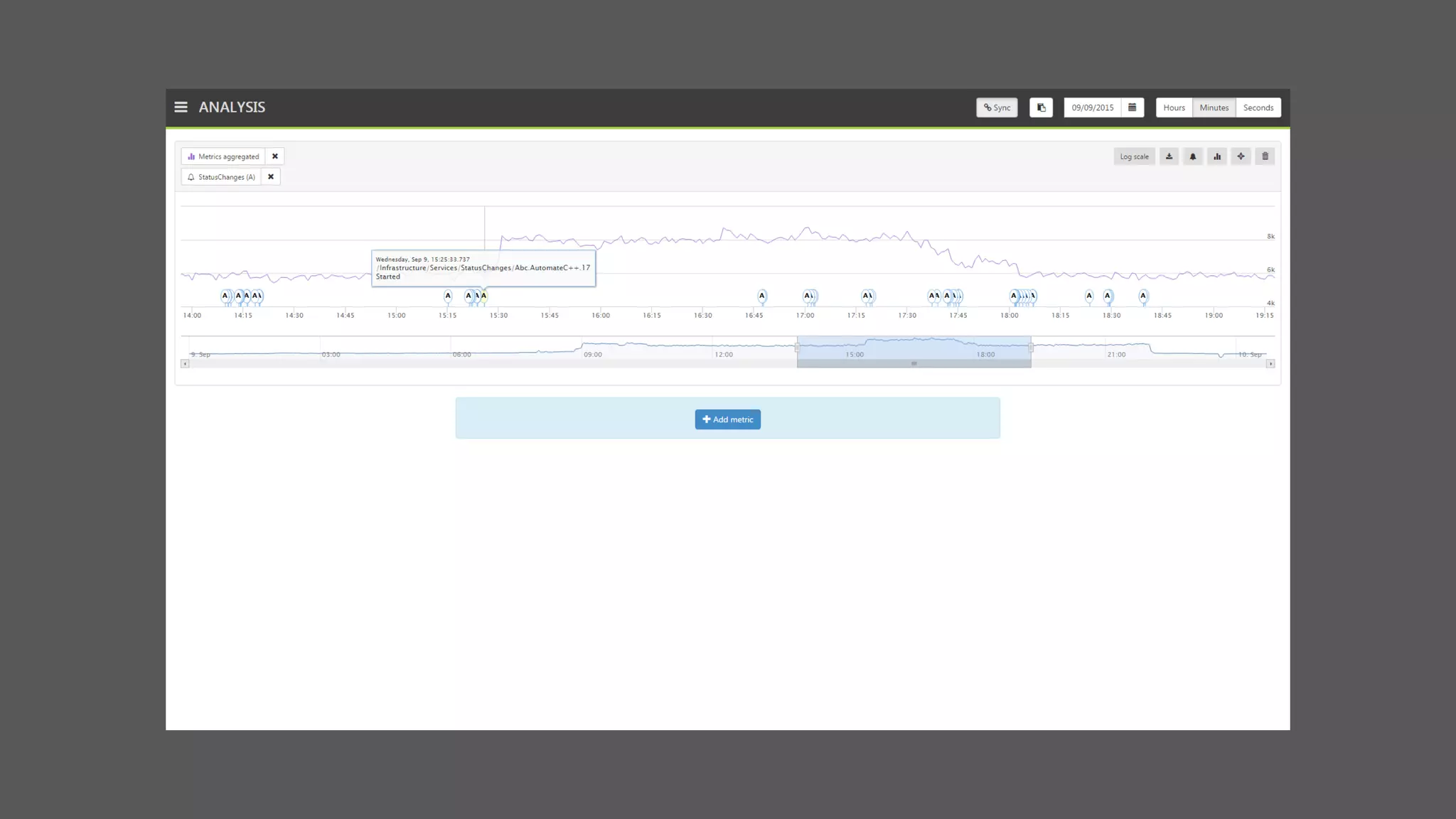

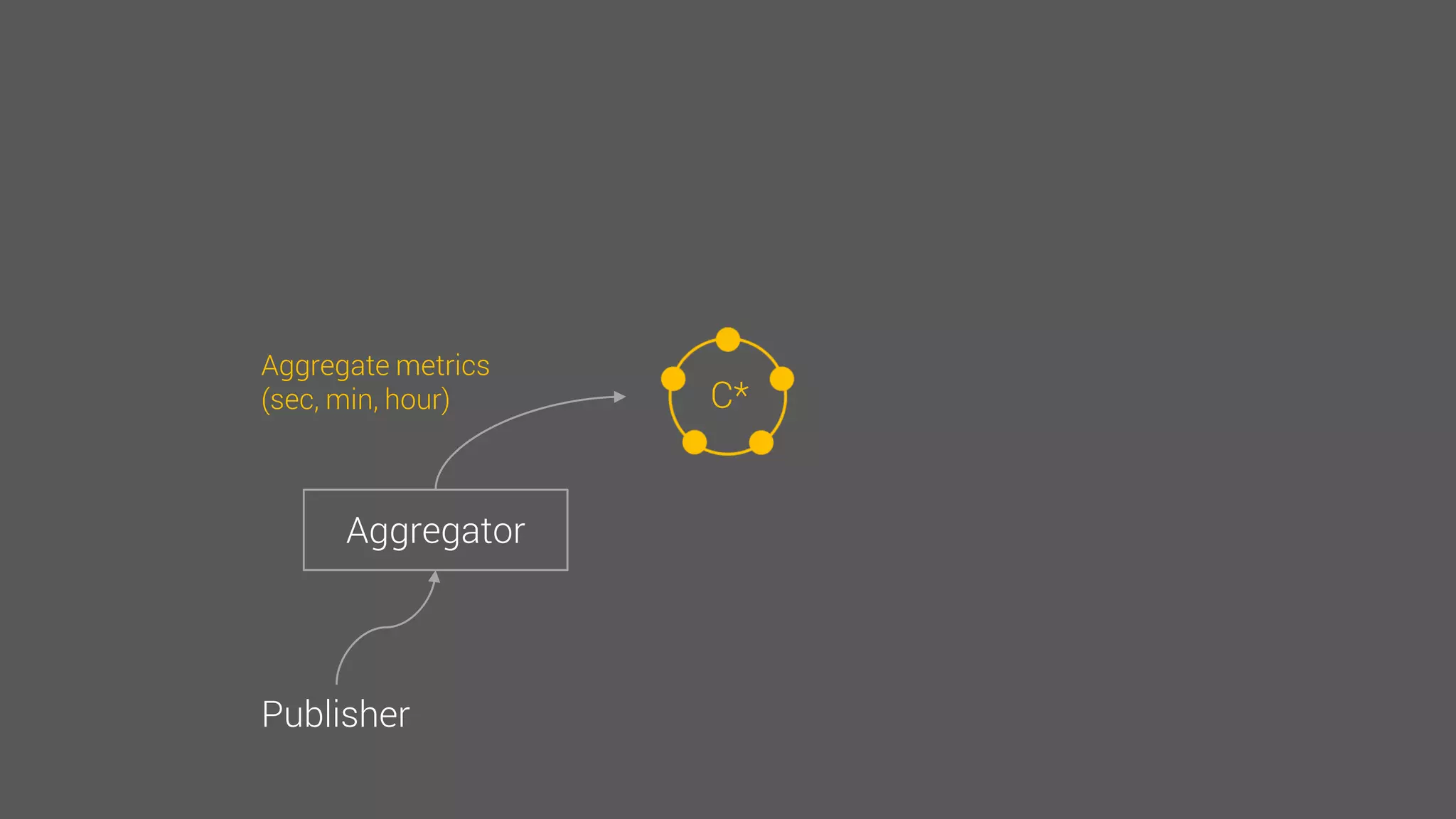

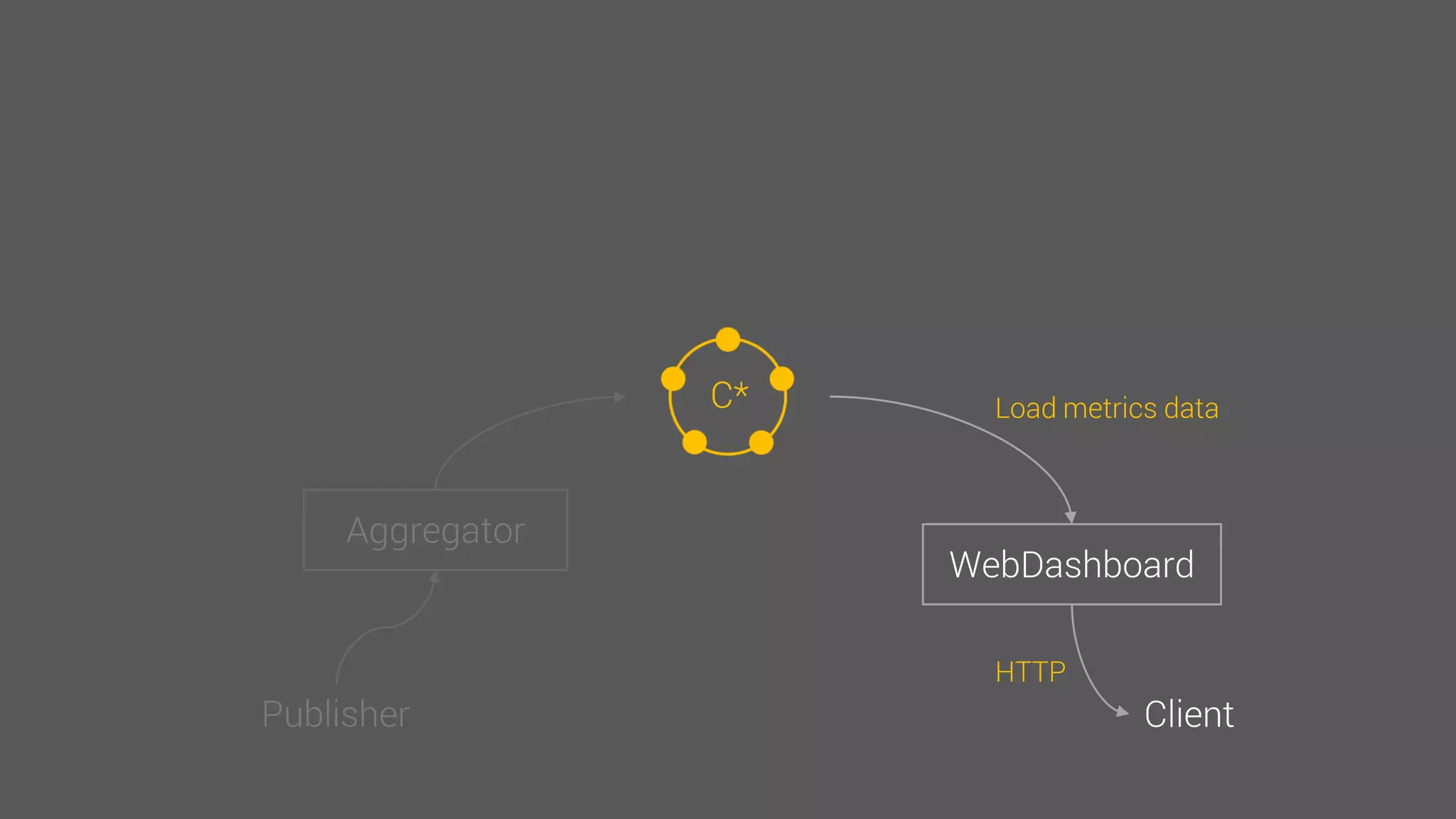

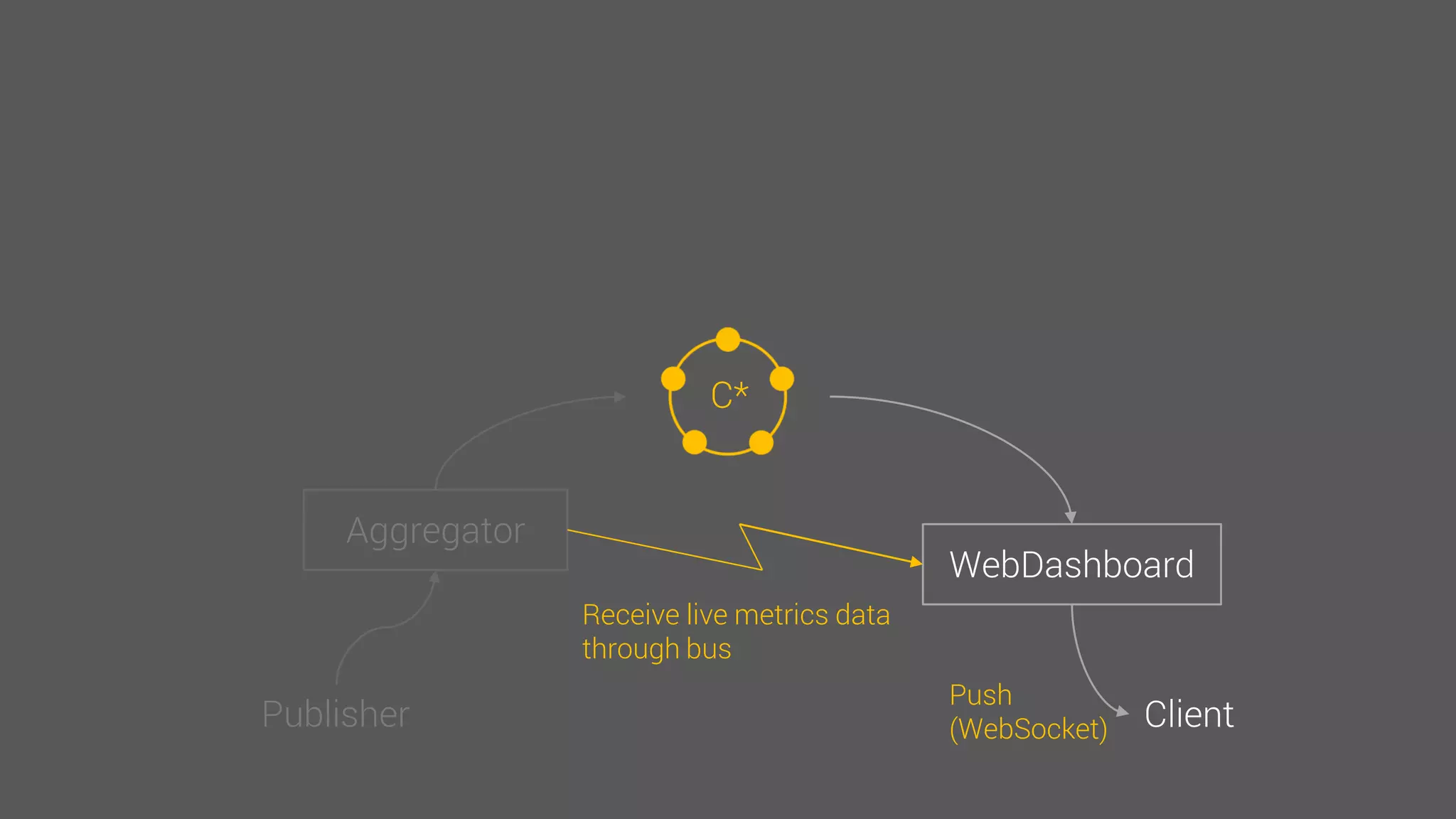

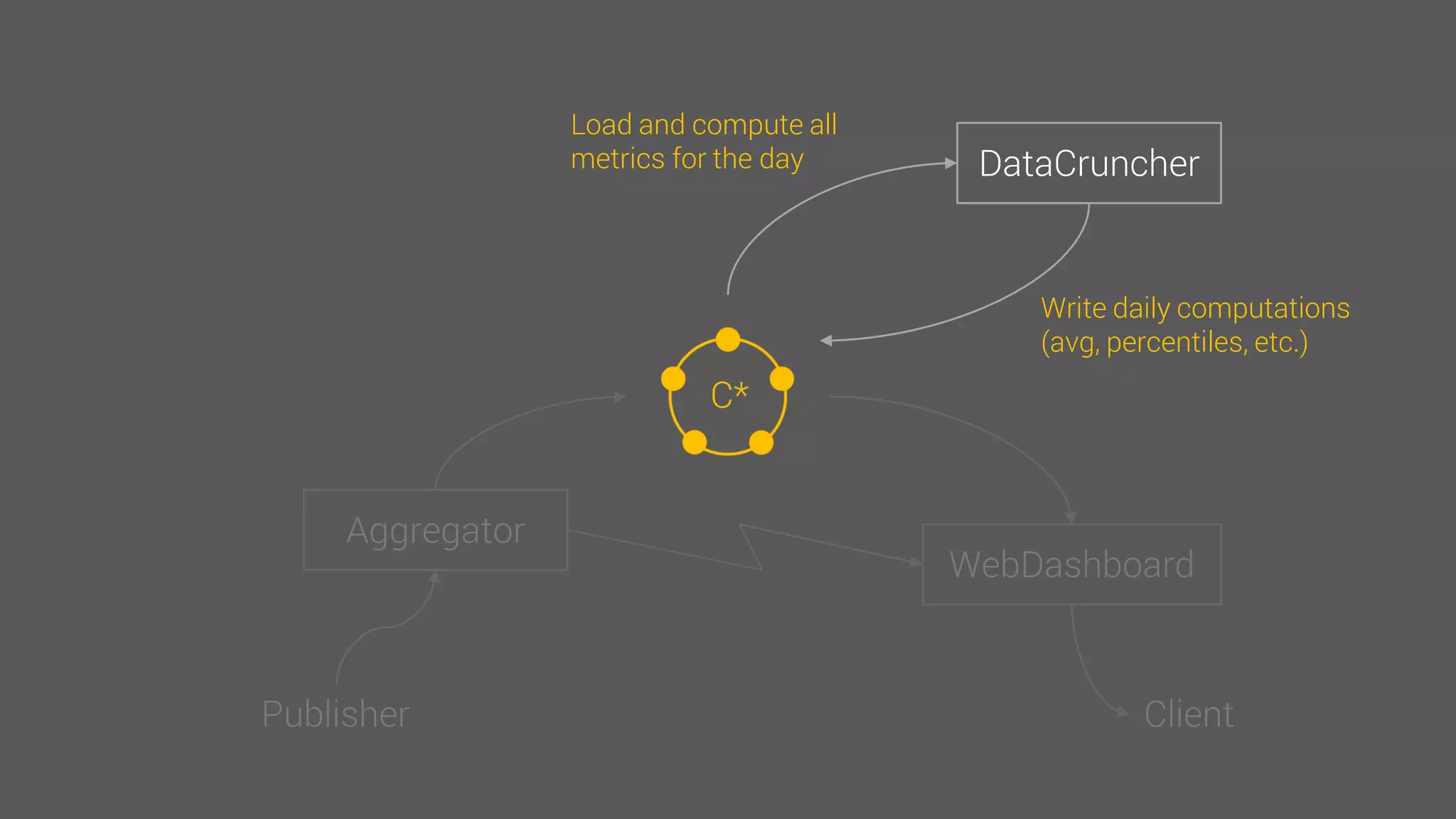

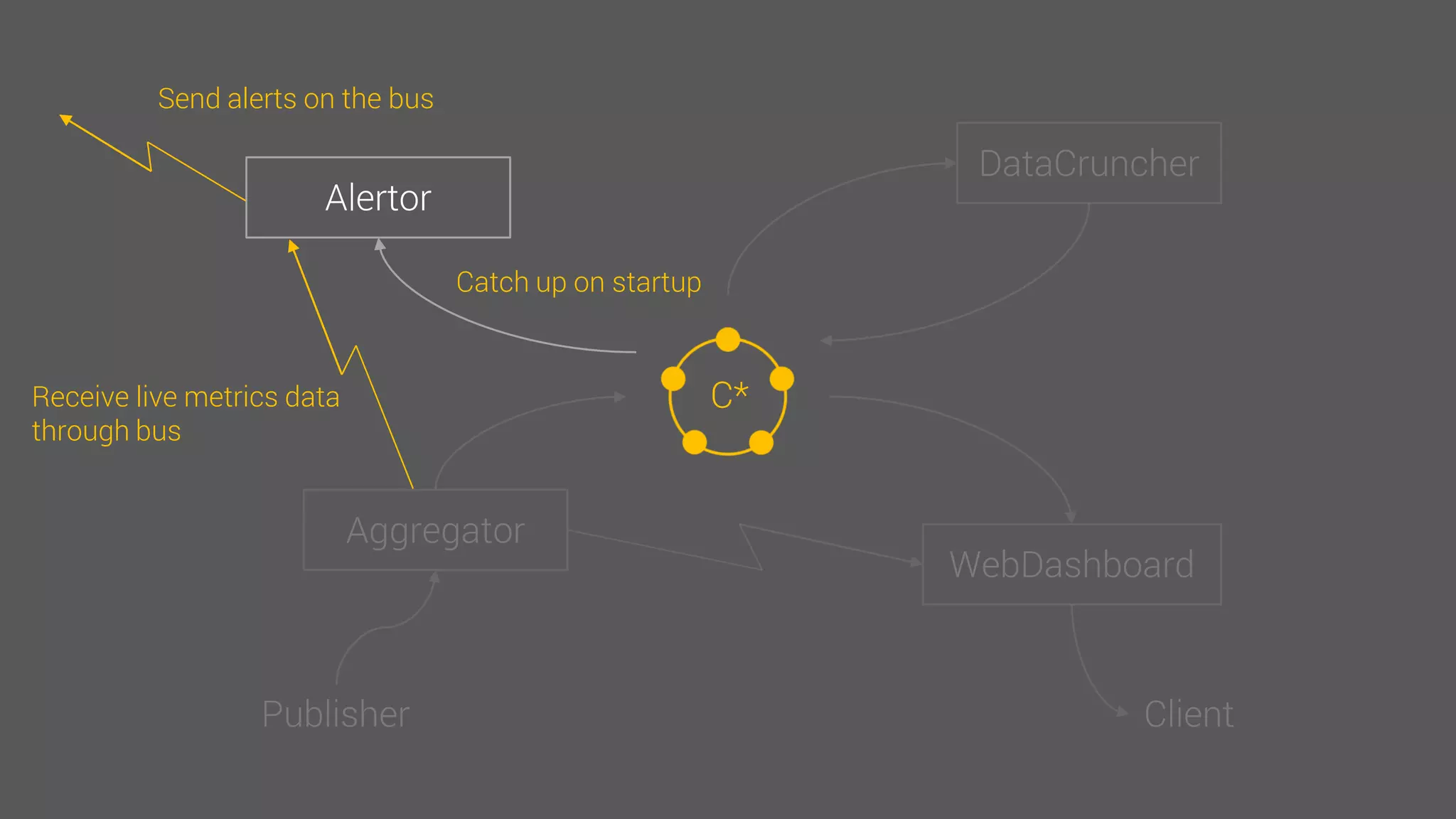

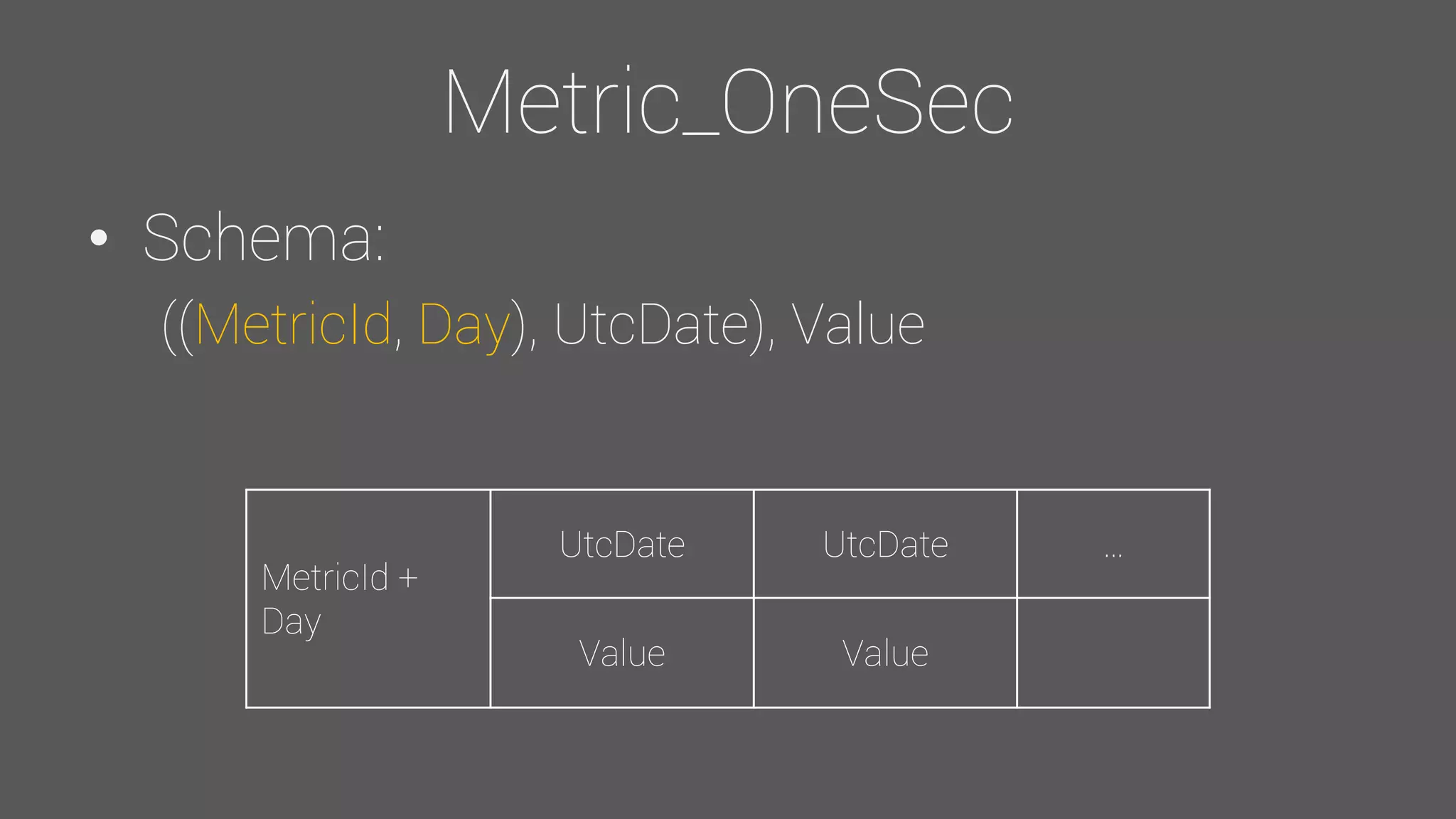

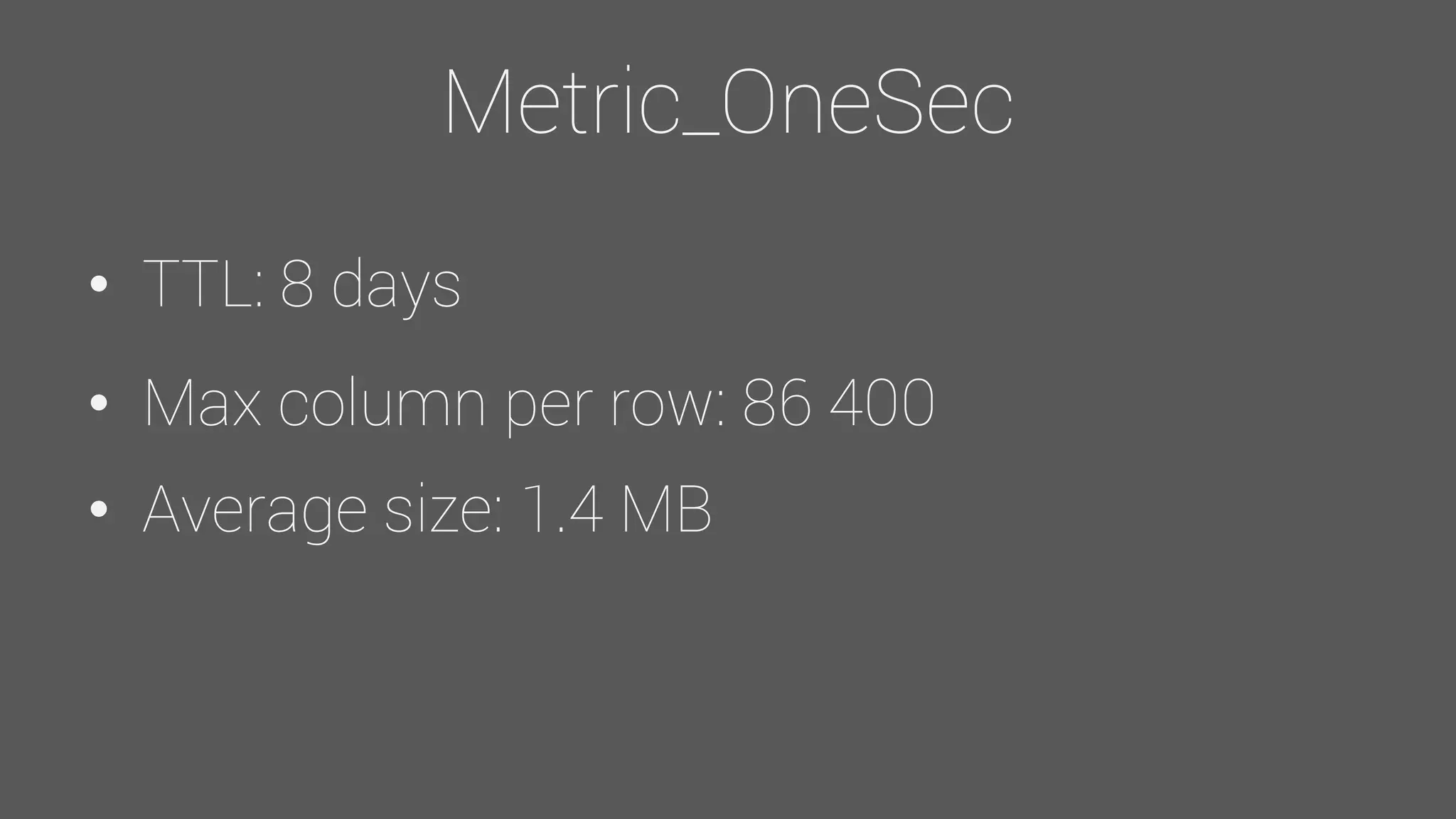

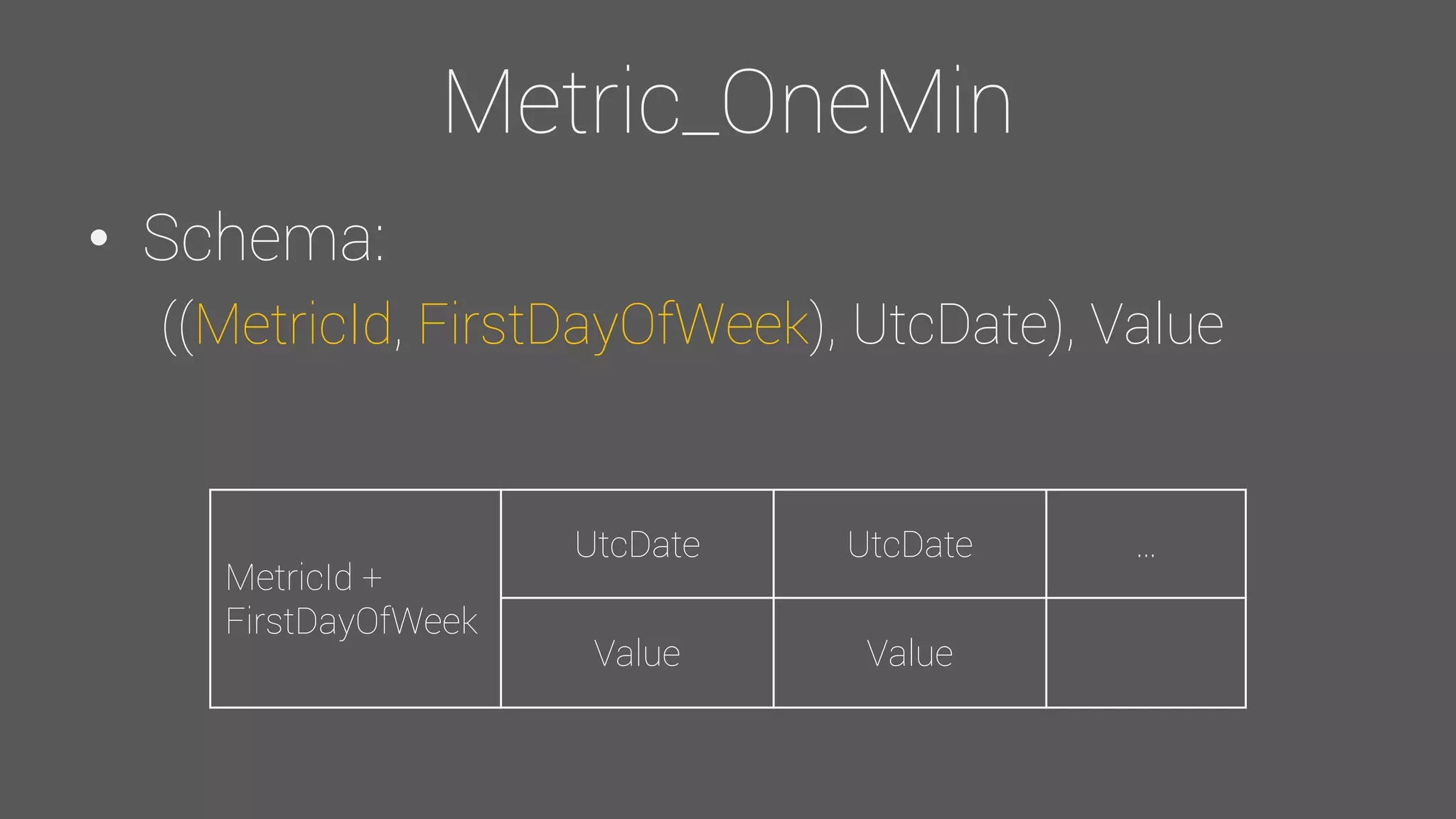

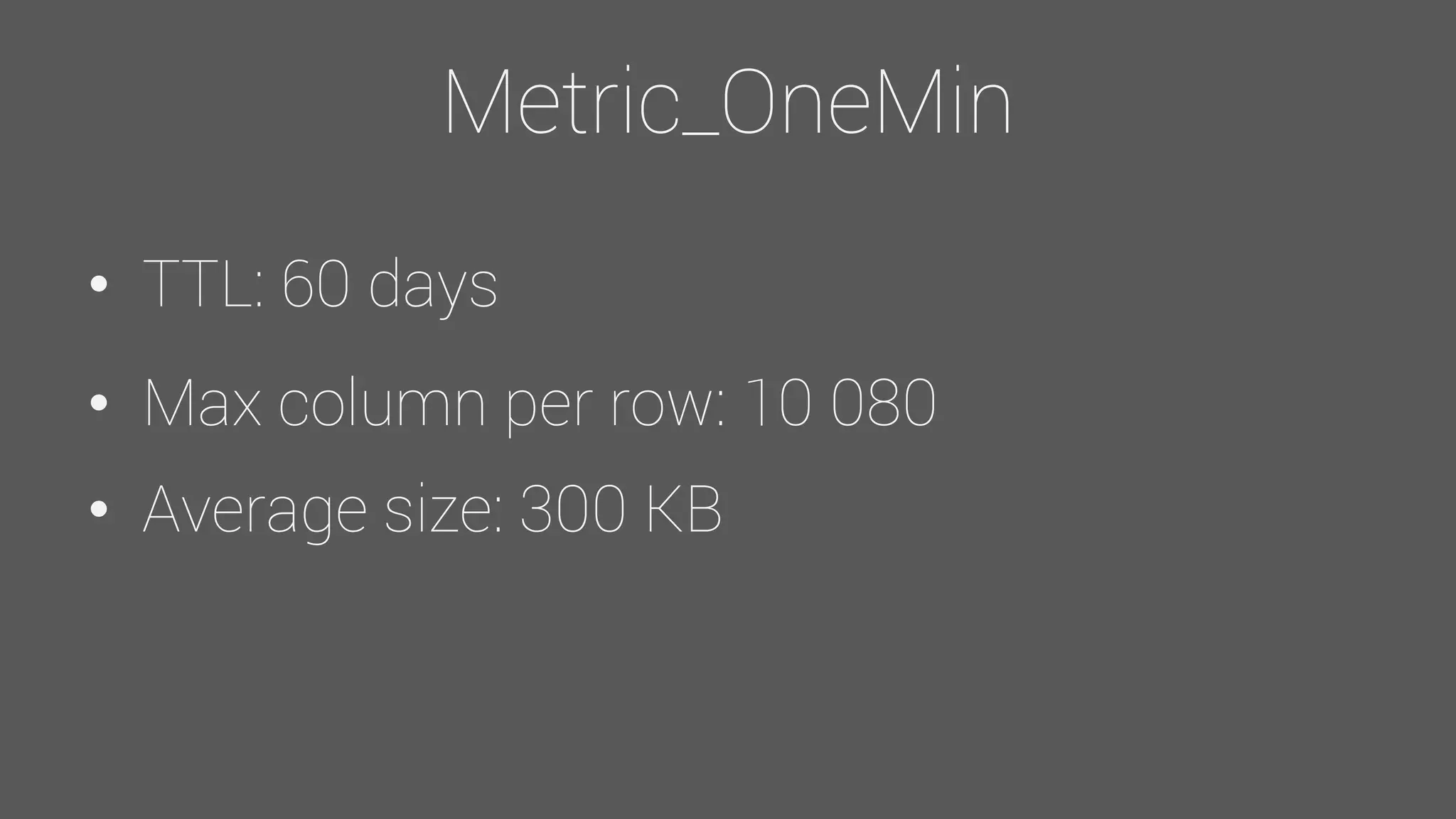

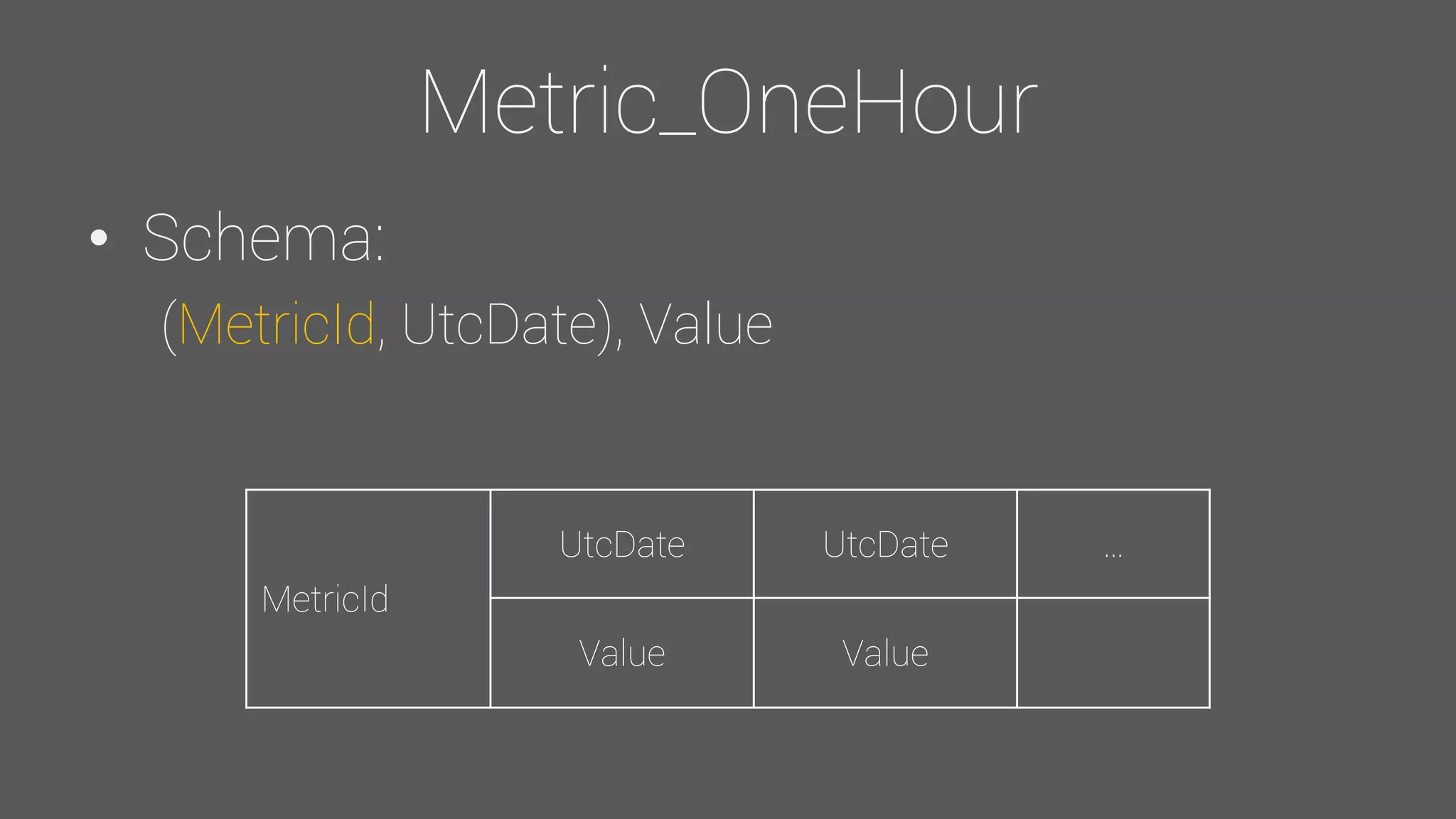

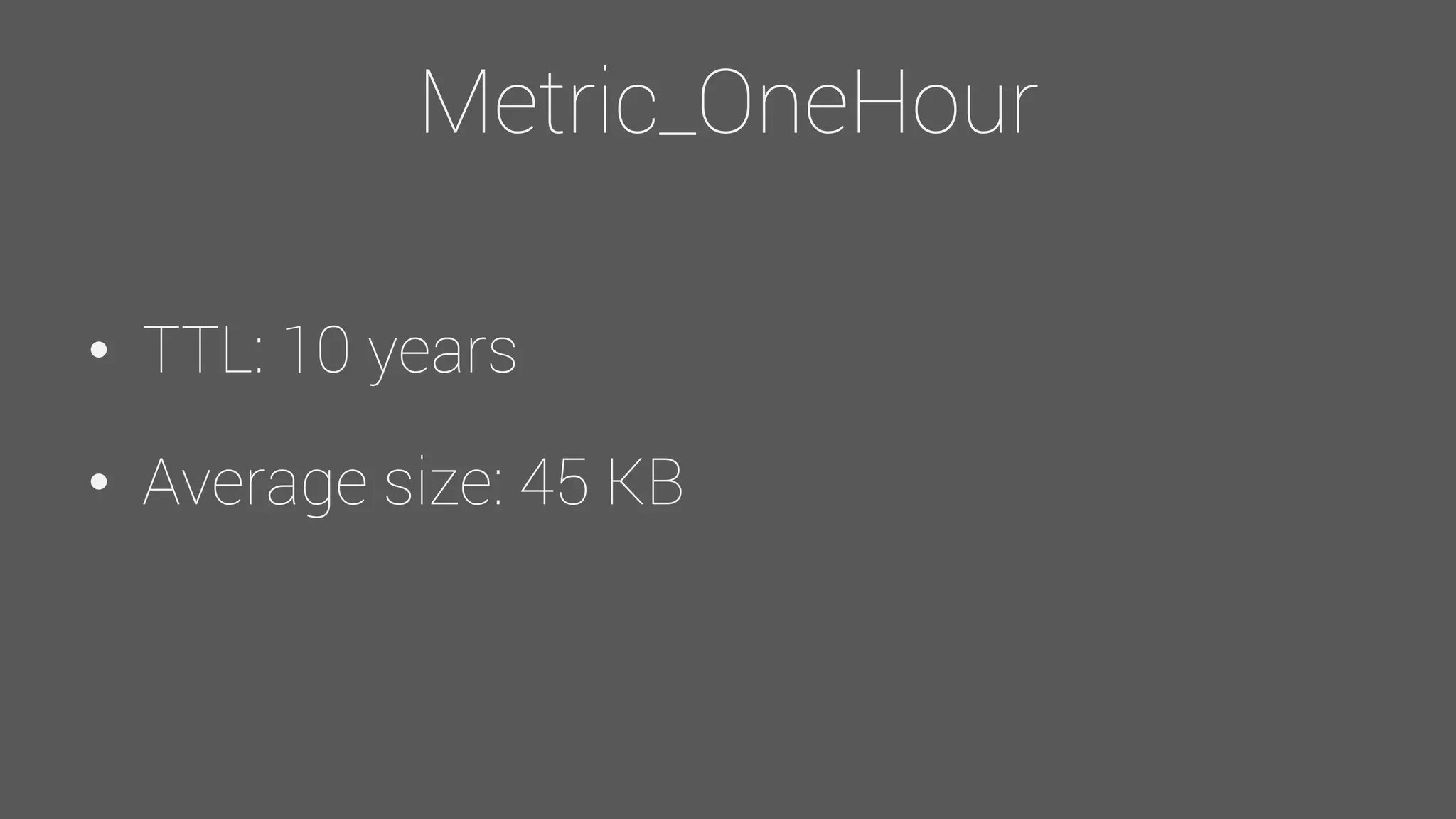

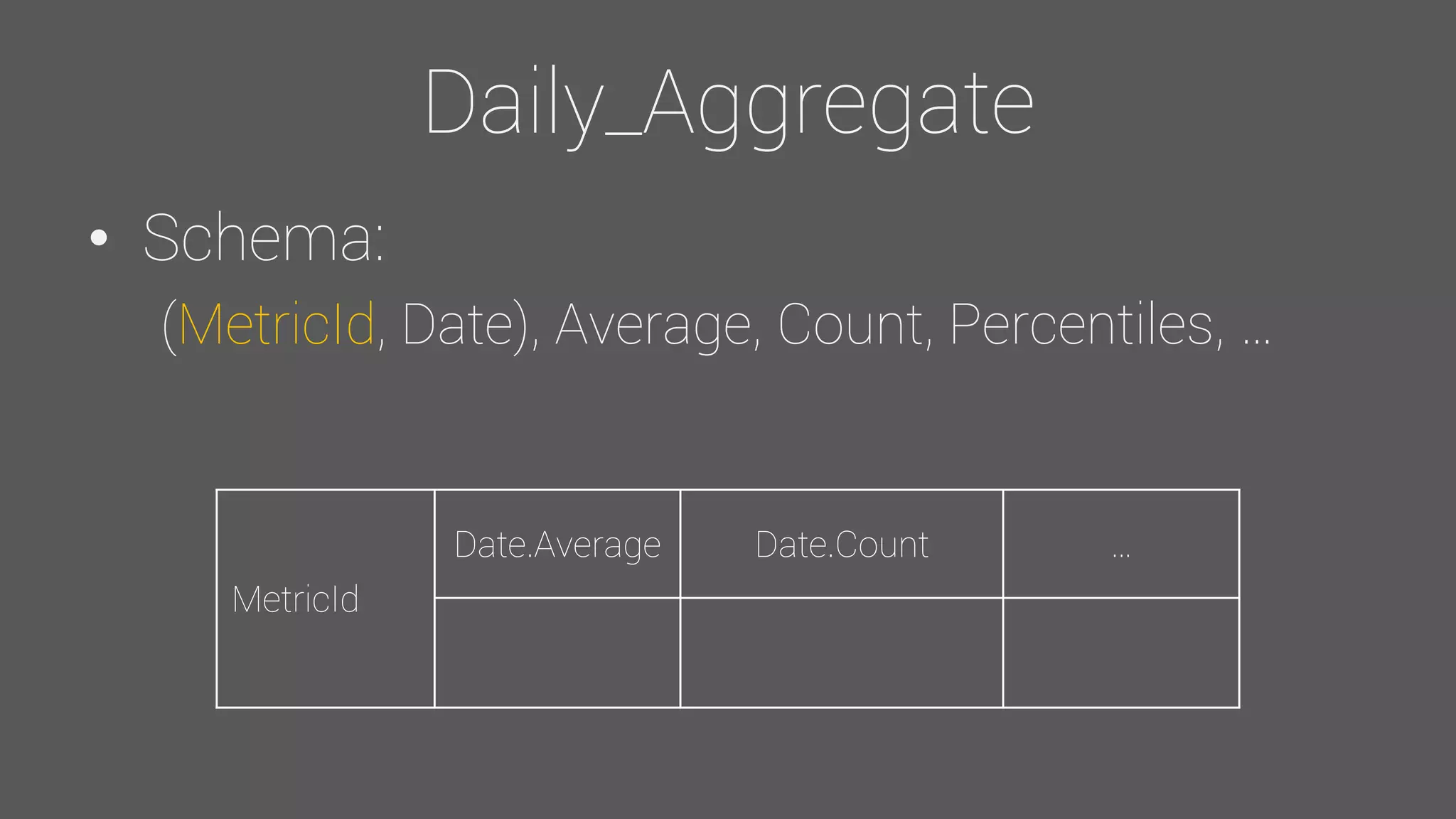

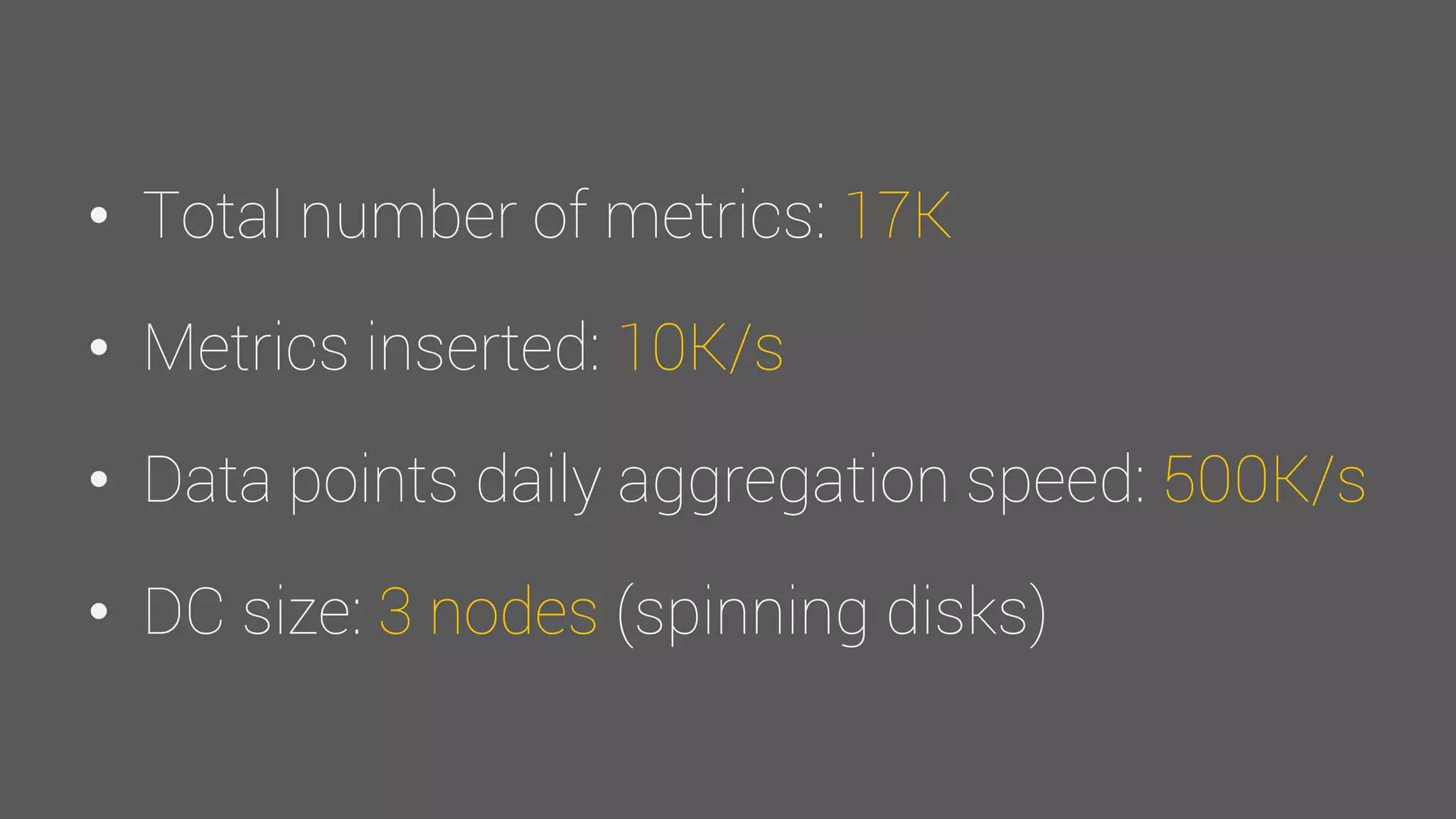

The document provides an overview of the Observer time-series application developed by Kévin Lovato, detailing its architecture, key features, and the CQL schema it uses for data management. It covers metrics aggregation, alerting mechanisms, schema best practices, and performance optimization strategies. It also outlines future plans, including a transition to SSDs and the use of different data storage configurations.