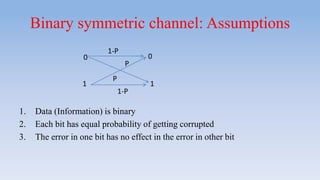

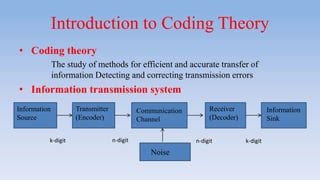

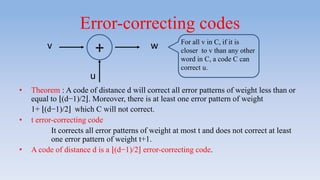

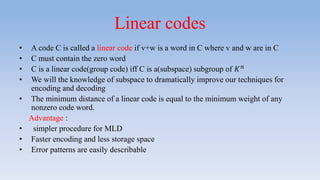

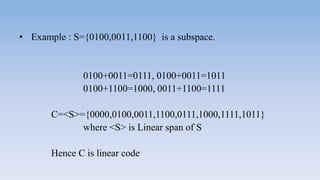

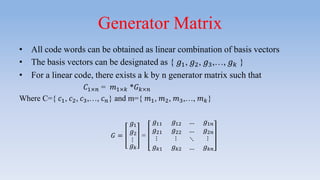

This document provides an introduction to error correcting codes. It discusses key concepts such as channel capacity, error detection and correction, information rate, weight and distance, maximum likelihood decoding, and linear codes. Error correcting codes add redundant bits to messages to facilitate detecting and correcting errors that may occur during transmission or storage. Linear codes allow for simpler encoding and decoding procedures compared to non-linear codes. The generator and parity check matrices are important for encoding and decoding messages using linear codes.

![Block Codes in Systematic Form

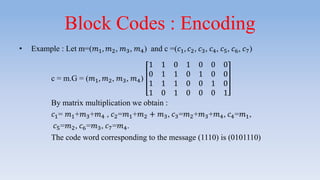

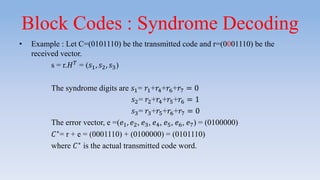

• In this form, the code word consists of the k message bits followed by (n-k) parity check bits.

• The rate or efficiency of this code is R = k/n.

• The generator matrix in systematic form is:

$G_{k\times n} = [\, I_{k\times k} \mid P_{k\times (n-k)} \,]$

Code word: $c = mG = [\, m \mid mP \,]$](https://image.slidesharecdn.com/presentation1-190704112947/85/linear-codes-and-cyclic-codes-27-320.jpg)
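
The systematic encoding above can be sketched in a few lines of Python. This is a minimal illustration, assuming a binary (7,4) code; the particular matrix `P` and the helper names `generator_matrix` and `encode` are hypothetical choices for the example, not taken from the slides.

```python
# Illustrative parity sub-matrix P (k x (n-k)) for a binary (7,4) code.
# This specific P is an assumption made for the example.
P = [
    [1, 1, 0],
    [0, 1, 1],
    [1, 1, 1],
    [1, 0, 1],
]
K = len(P)            # number of message bits
N = K + len(P[0])     # code word length


def generator_matrix(p):
    """Build G = [I_k | P] row by row."""
    k = len(p)
    return [[1 if i == j else 0 for j in range(k)] + list(p[i]) for i in range(k)]


def encode(message, g):
    """Compute c = m G with arithmetic over GF(2)."""
    n = len(g[0])
    return [sum(message[i] * g[i][j] for i in range(len(g))) % 2 for j in range(n)]


G = generator_matrix(P)
codeword = encode([1, 0, 1, 1], G)
print(codeword)   # [1, 0, 1, 1, 1, 0, 0]
print(K / N)      # rate R = k/n
```

The first k entries of the code word reproduce the message unchanged, which is what makes the form systematic; the remaining n-k entries are the parity bits mP.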

![Parity Check Matrix

• When G is systematic, $G = [\, I_k \mid P \,]$, the parity check matrix H of a binary code follows directly:

$H_{(n-k)\times n} = [\, P^T_{(n-k)\times k} \mid I_{(n-k)\times(n-k)} \,]$

• The parity check matrix H of a code with generator matrix G is an (n-k)-by-n matrix satisfying:

$H_{(n-k)\times n} \, G^T_{n\times k} = 0$

• Every code word therefore satisfies (n-k) parity check equations:

$c_{1\times n} \, H^T_{n\times(n-k)} = m_{1\times k} \, G_{k\times n} \, H^T_{n\times(n-k)} = 0$](https://image.slidesharecdn.com/presentation1-190704112947/85/linear-codes-and-cyclic-codes-29-320.jpg)
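
As a rough check of the parity check relations, the sketch below builds H from a systematic generator matrix G = [I_k | P] over GF(2) and computes syndromes. The matrix `P` and the function names `parity_check_matrix` and `syndrome` are assumptions introduced for illustration only.

```python
# Illustrative parity sub-matrix P (k x (n-k)); an assumption for this example.
P = [
    [1, 1, 0],
    [0, 1, 1],
    [1, 1, 1],
    [1, 0, 1],
]


def parity_check_matrix(p):
    """Build H = [P^T | I_{n-k}] for a binary code with G = [I_k | P]."""
    pt = [list(col) for col in zip(*p)]   # transpose of P
    r = len(pt)                           # r = n - k
    return [pt[i] + [1 if i == j else 0 for j in range(r)] for i in range(r)]


def syndrome(word, h):
    """Compute H * word^T over GF(2); all zeros means every parity check passes."""
    return [sum(a * b for a, b in zip(row, word)) % 2 for row in h]


H = parity_check_matrix(P)
G = [[1 if i == j else 0 for j in range(4)] + P[i] for i in range(4)]

# Every row of G is a code word, so H G^T = 0.
rows_ok = all(syndrome(row, H) == [0, 0, 0] for row in G)

codeword = [1, 0, 1, 1, 1, 0, 0]   # m = 1011 encoded with G
received = codeword[:]
received[2] ^= 1                   # flip one bit to simulate a channel error

print(rows_ok)                     # True
print(syndrome(codeword, H))       # [0, 0, 0]
print(syndrome(received, H))       # [1, 1, 1], the column of H at the error position
```

A nonzero syndrome signals that at least one of the n-k parity check equations failed; for a single-bit error it equals the column of H at the flipped position.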

![Parity check matrices for cyclic codes

• Let C be a cyclic [n, k]-code with generator polynomial $g(x)$ of degree n-k. By the last theorem, $g(x)$ is a factor of $x^n - 1$. Hence $x^n - 1 = g(x)h(x)$ for some $h(x)$ of degree k, called the check polynomial of C.

• Theorem: Suppose C is a cyclic [n, k]-code with check polynomial $h(x) = h_0 + h_1 x + \cdots + h_k x^k$. Then a parity-check matrix for C is the (n-k)-by-n matrix

$$
H = \begin{pmatrix}
h_k & h_{k-1} & \cdots & h_0 & 0 & \cdots & 0 \\
0 & h_k & h_{k-1} & \cdots & h_0 & \cdots & 0 \\
\vdots & \ddots & \ddots & & \ddots & \ddots & \vdots \\
0 & \cdots & 0 & h_k & h_{k-1} & \cdots & h_0
\end{pmatrix}
$$](https://image.slidesharecdn.com/presentation1-190704112947/85/linear-codes-and-cyclic-codes-39-320.jpg)
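
The theorem can be tried out on a small case. The sketch below assumes the binary cyclic [7, 4]-code with generator polynomial g(x) = 1 + x + x^3, whose check polynomial is h(x) = 1 + x + x^2 + x^4; the function names are hypothetical, and polynomials are stored as coefficient lists with the constant term first.

```python
def poly_mul_gf2(a, b):
    """Multiply two polynomials over GF(2) (coefficient lists, lowest degree first)."""
    result = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            result[i + j] ^= ai & bj
    return result


def check_polynomial_matrix(h, n):
    """Rows are right shifts of (h_k, ..., h_0, 0, ..., 0); the result is (n-k) x n."""
    k = len(h) - 1
    first = list(reversed(h)) + [0] * (n - k - 1)
    return [[0] * r + first[: n - r] for r in range(n - k)]


def syndrome(word, h_matrix):
    """Compute H * word^T over GF(2)."""
    return [sum(a * b for a, b in zip(row, word)) % 2 for row in h_matrix]


n = 7
g = [1, 1, 0, 1]       # g(x) = 1 + x + x^3 (assumed example)
h = [1, 1, 1, 0, 1]    # h(x) = 1 + x + x^2 + x^4

product = poly_mul_gf2(g, h)          # x^7 + 1, which equals x^7 - 1 over GF(2)
H = check_polynomial_matrix(h, n)

# g(x) and all of its cyclic shifts are code words, so each has a zero syndrome.
base = g + [0] * (n - len(g))
shifts = [base[-s:] + base[:-s] if s else base[:] for s in range(n)]
all_zero = all(syndrome(c, H) == [0, 0, 0] for c in shifts)

print(product)    # [1, 0, 0, 0, 0, 0, 0, 1]
for row in H:
    print(row)
print(all_zero)   # True
```

Each row of H picks out one coefficient of c(x)h(x) in the range x^k to x^(n-1), and those coefficients vanish for every code word because c(x)h(x) is a multiple of x^n - 1.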

![Basic Definitions of Posets

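• $P = ([n], \le_P)$ is a partial order (or poset) on $[n]$, where $[n] = \{1, 2, \ldots, n\}$ (an n-set).

• Given $u = (u_1, \ldots, u_n) \in F_2^n$, the support of u is the set $\operatorname{supp}(u) = \{ i \in [n] : u_i \ne 0 \}$.

• The P-distance of u and v is the cardinality $d_P(u, v) = |\langle \operatorname{supp}(u - v) \rangle|$, where $\langle A \rangle$ denotes the smallest ideal of P containing A.

• A poset code is a linear subspace C of the metric space $(F_2^n, d_P)$.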

• Example: Let $P = ([3], \le_P)$ be the usual chain order and $Q = ([2], \le_Q)$ be the antichain order. If [3]×[2], then the R-sum is the Y order:

Hasse diagram of the Y order with nodes 1, 2, 3, 4 (4-3) and 5 (5-3)](https://image.slidesharecdn.com/presentation1-190704112947/85/linear-codes-and-cyclic-codes-40-320.jpg)
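
A small sketch of the poset distance on the Y order may help. It assumes the standard poset metric, in which the distance is the size of the smallest ideal (down-set) containing supp(u - v), and it assumes the Y order is the chain 1 < 2 < 3 with 4 and 5 both directly above 3. The `COVERS` table and function names are hypothetical.

```python
# Assumed Y order on {1, ..., 5}: 1 < 2 < 3, with 4 and 5 both directly above 3.
# COVERS maps each element to the elements immediately below it.
COVERS = {1: [], 2: [1], 3: [2], 4: [3], 5: [3]}

# Antichain on the same set: no two elements are comparable.
ANTICHAIN = {i: [] for i in range(1, 6)}


def support(u):
    """Positions (1-based) where the vector is nonzero."""
    return {i + 1 for i, ui in enumerate(u) if ui != 0}


def ideal(subset, covers):
    """Smallest down-set containing subset: add everything below each element."""
    seen = set()
    stack = list(subset)
    while stack:
        x = stack.pop()
        if x not in seen:
            seen.add(x)
            stack.extend(covers[x])
    return seen


def poset_distance(u, v, covers):
    """d_P(u, v) = size of the ideal generated by supp(u - v), over GF(2)."""
    diff = [(a - b) % 2 for a, b in zip(u, v)]
    return len(ideal(support(diff), covers))


zero = [0, 0, 0, 0, 0]
u = [0, 0, 0, 1, 0]
v = [0, 0, 0, 0, 1]

print(poset_distance(u, zero, COVERS))   # 4: the ideal generated by {4} is {1, 2, 3, 4}
print(poset_distance(u, v, COVERS))      # 5: the ideal generated by {4, 5} is the whole set
print(poset_distance(u, v, ANTICHAIN))   # 2: on an antichain this is the Hamming distance
```

On an antichain every subset is already an ideal, so the poset distance reduces to the Hamming distance; the Y order instead penalises differences at positions 4 and 5 more heavily because they sit on top of the chain.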