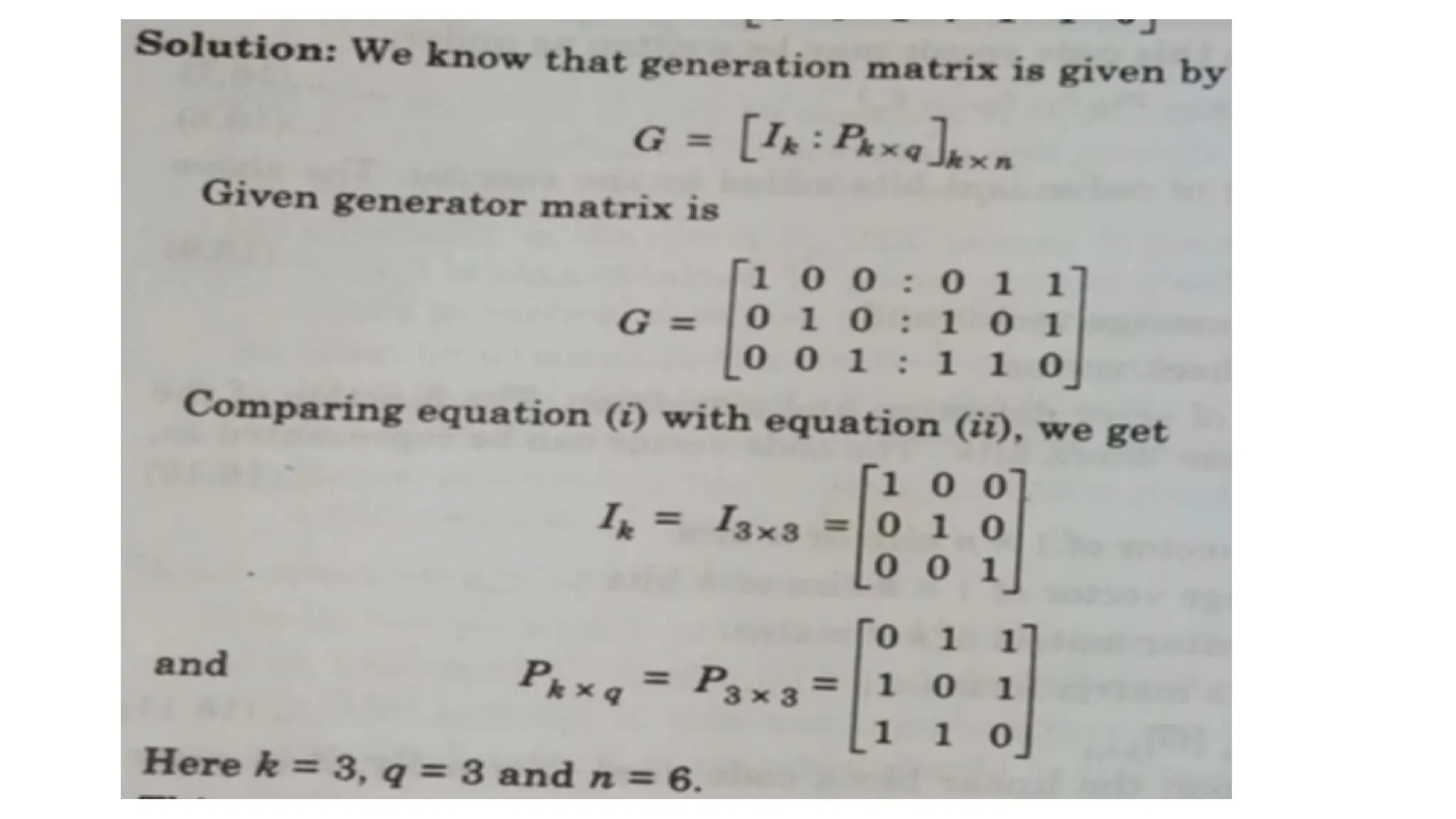

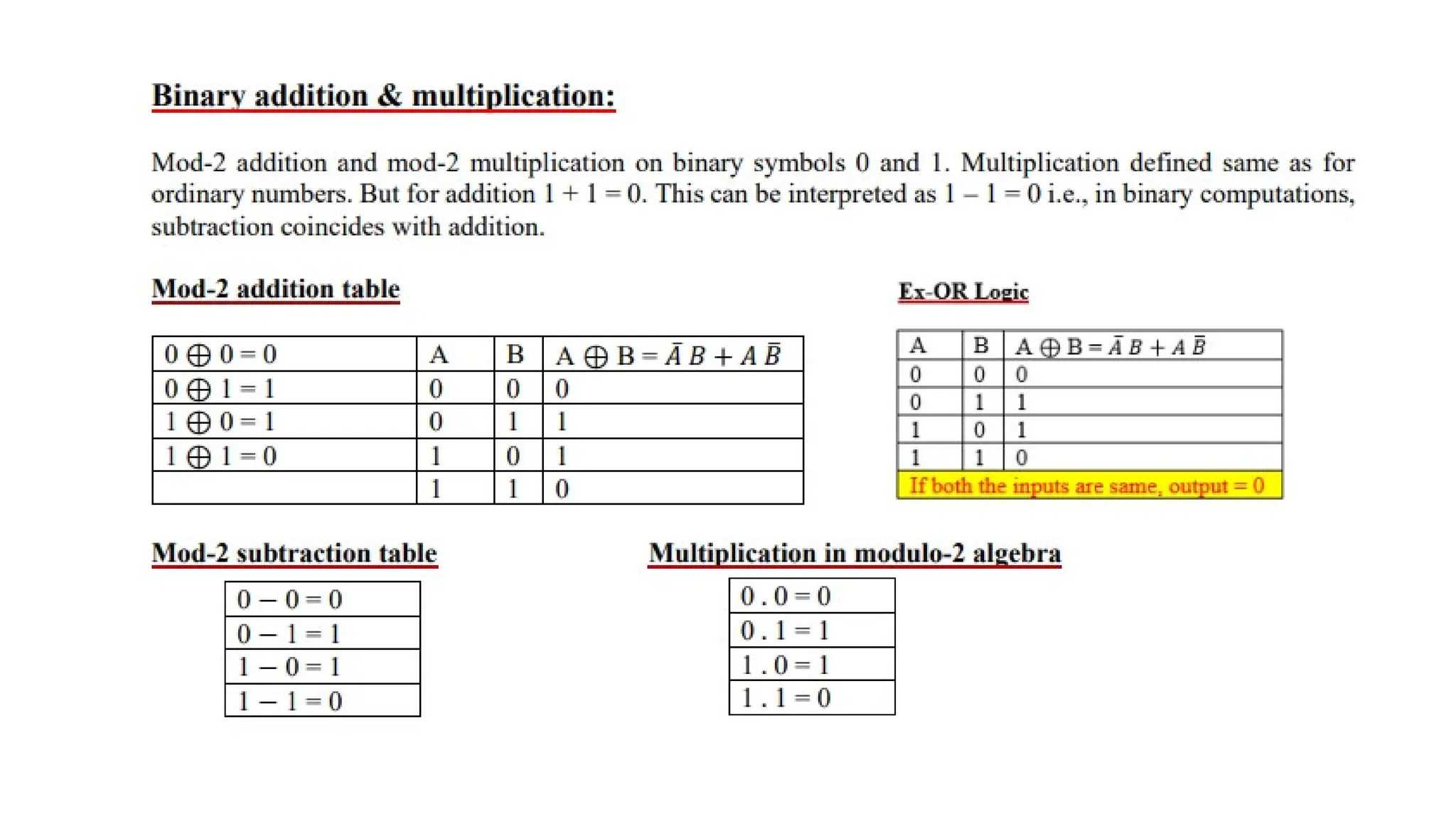

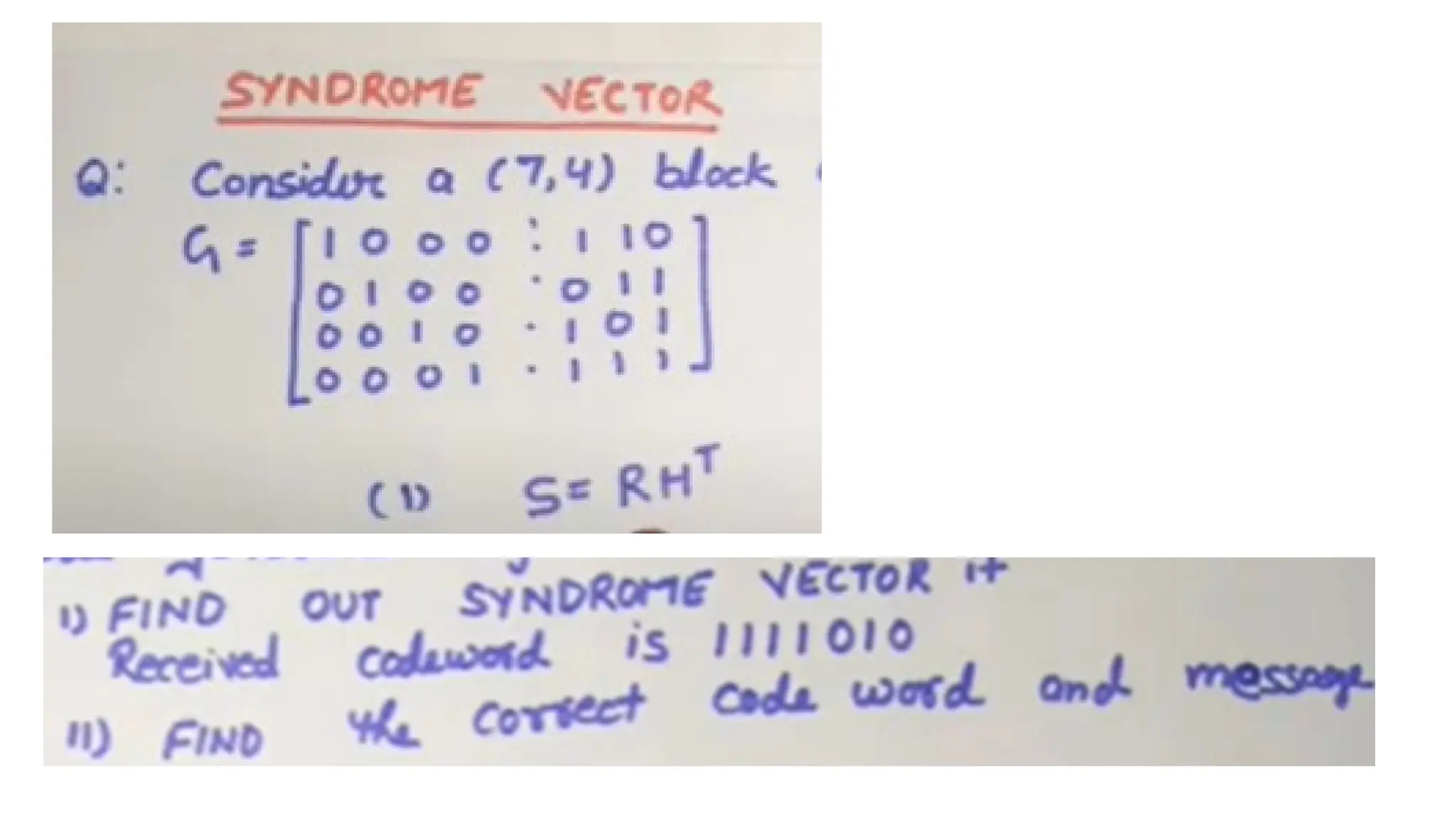

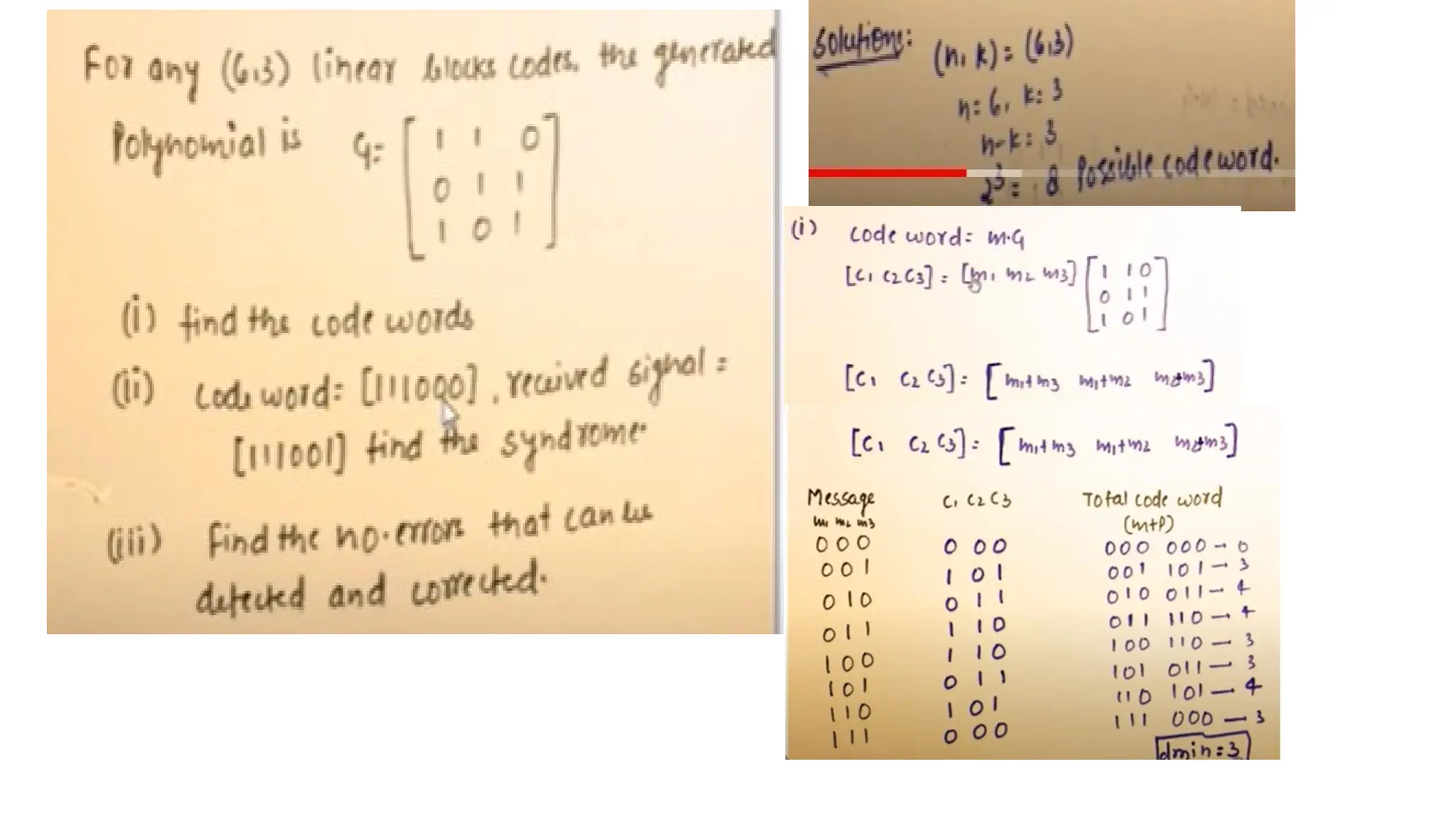

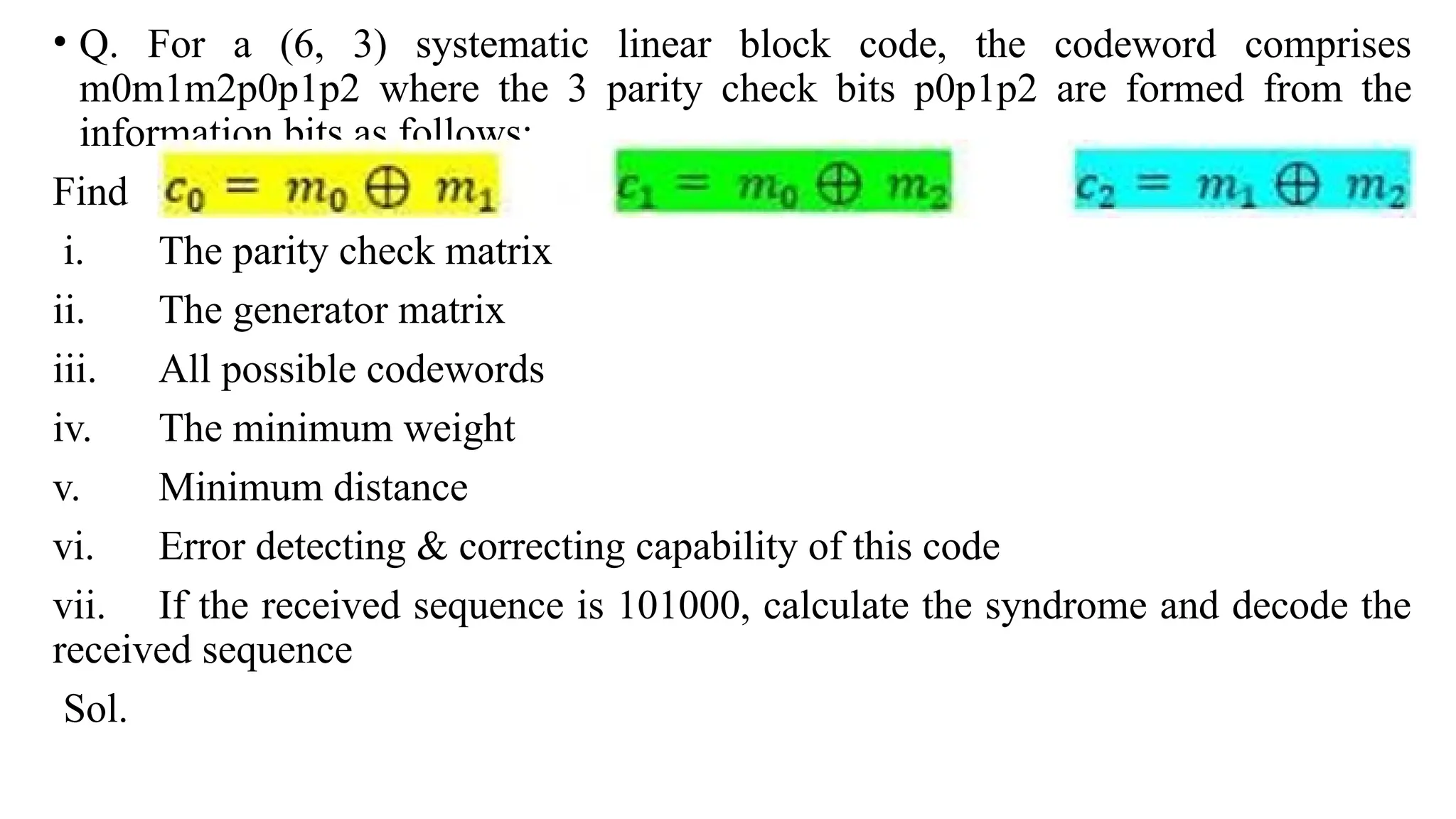

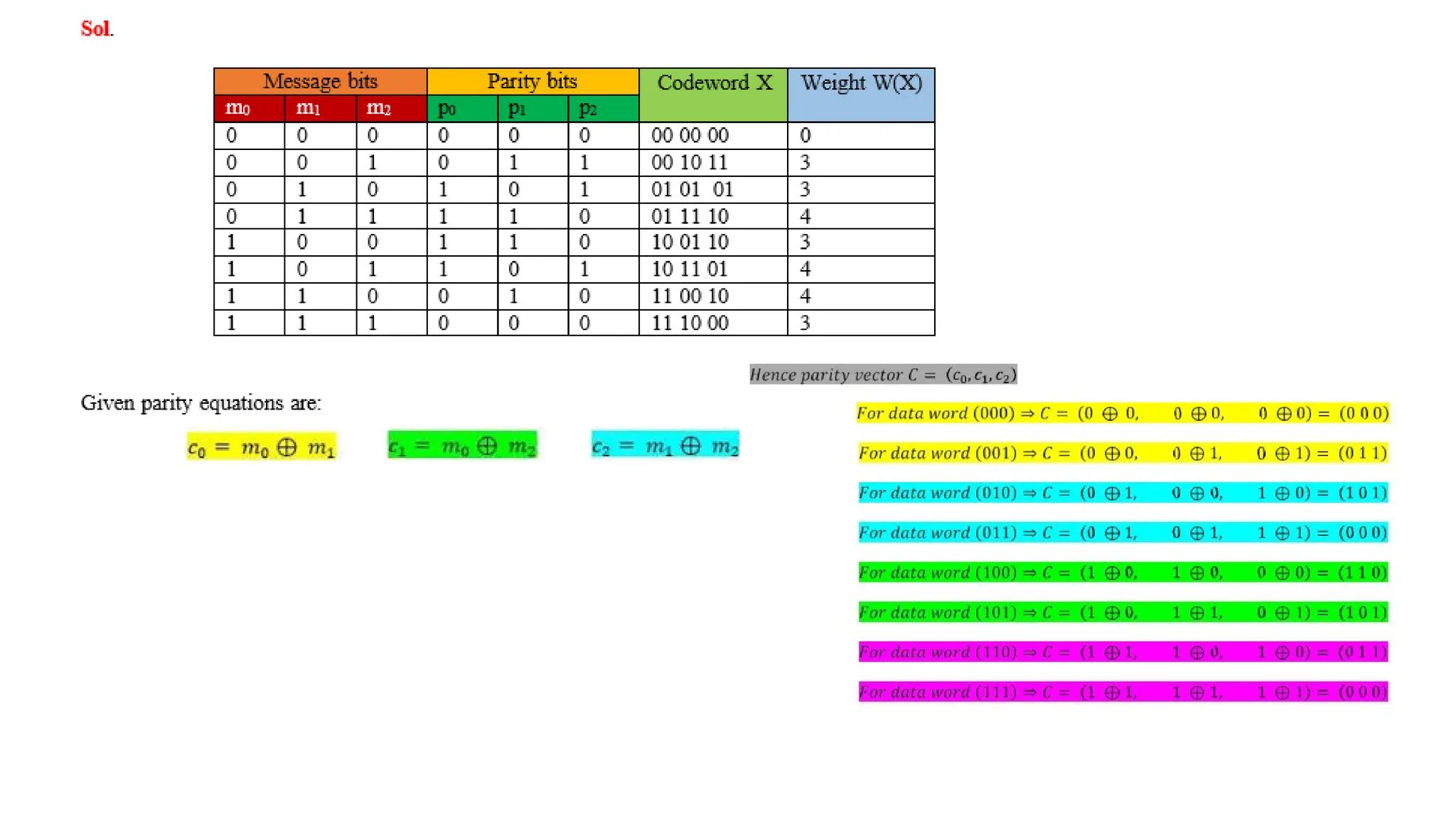

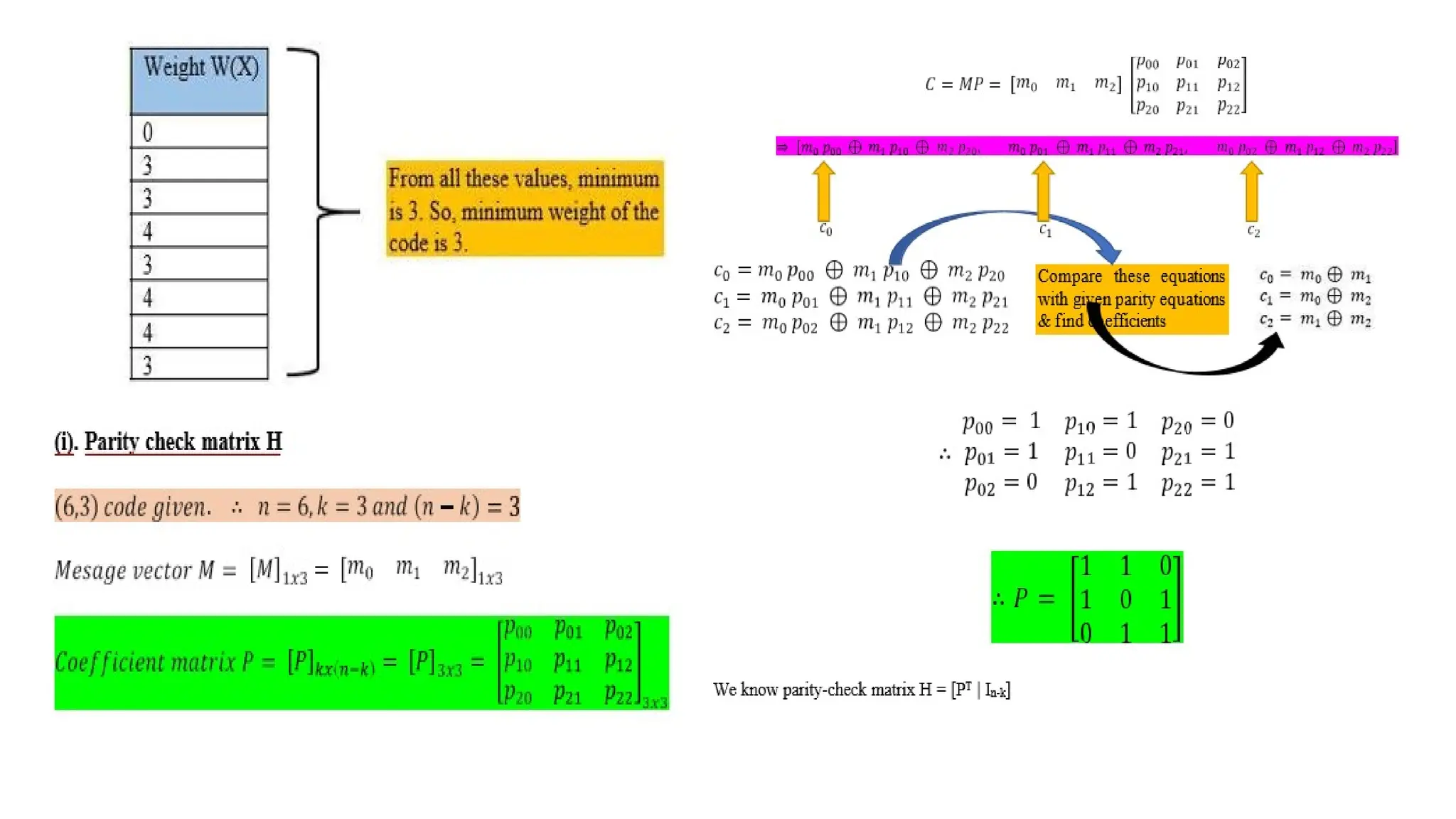

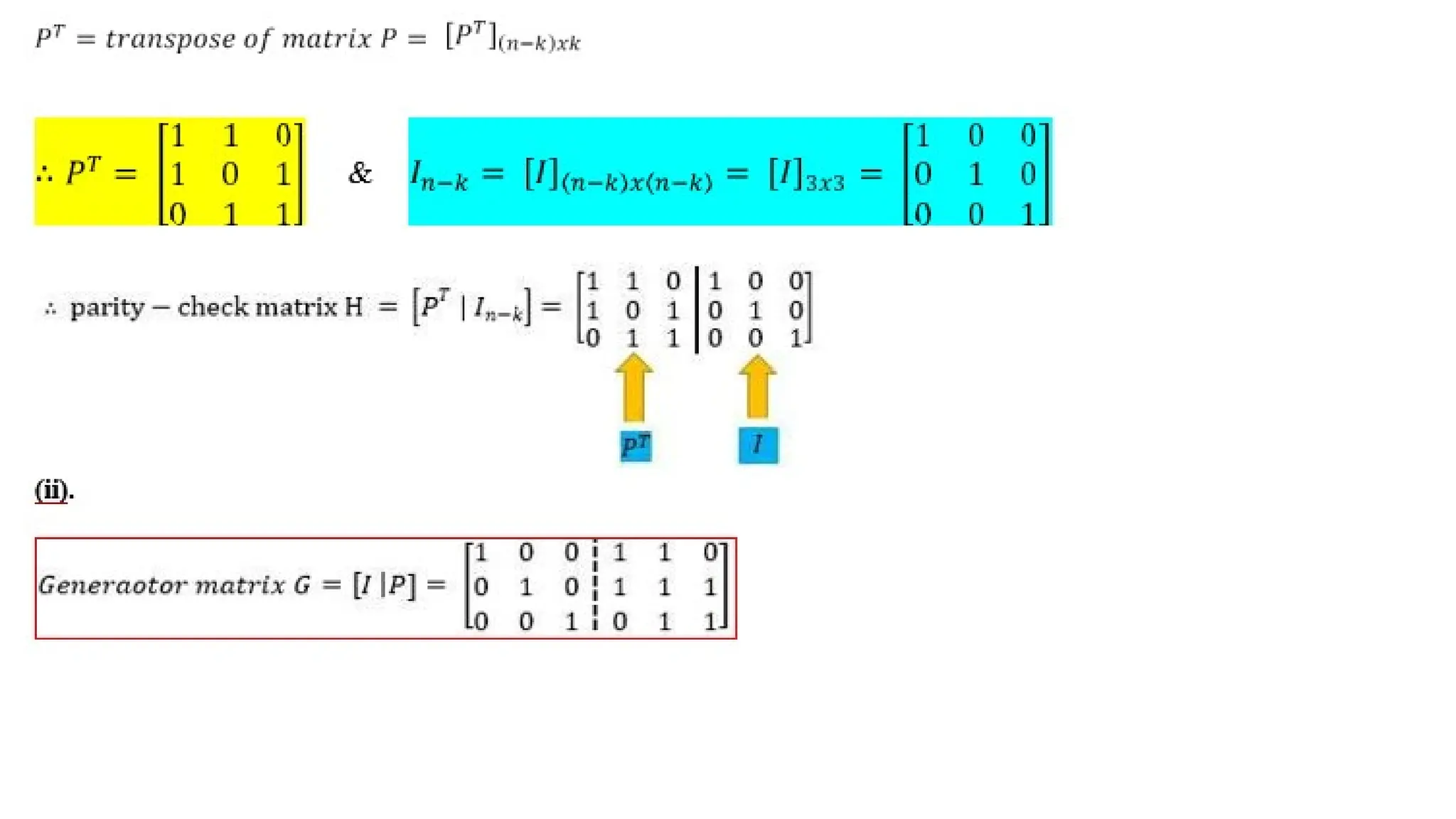

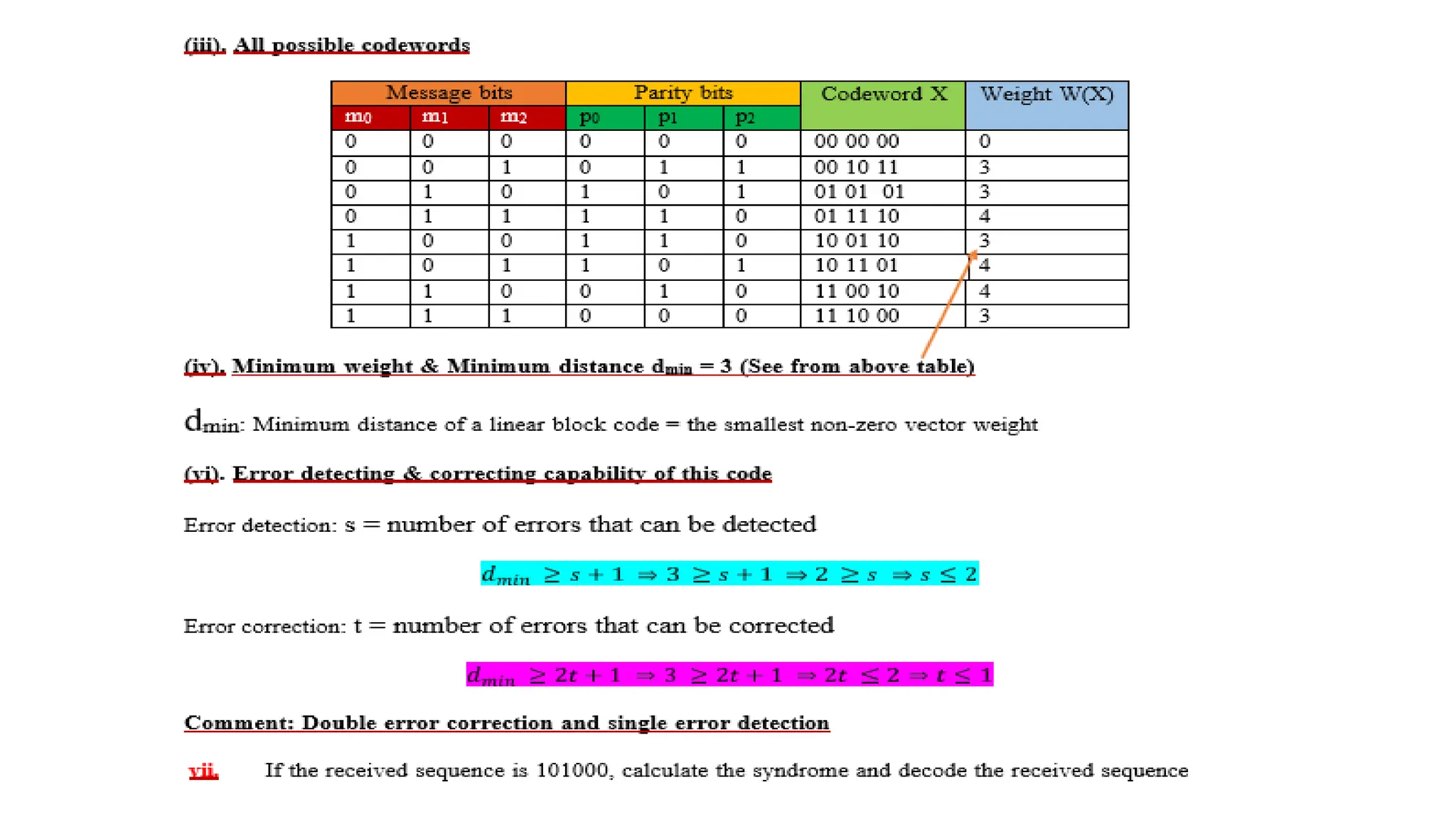

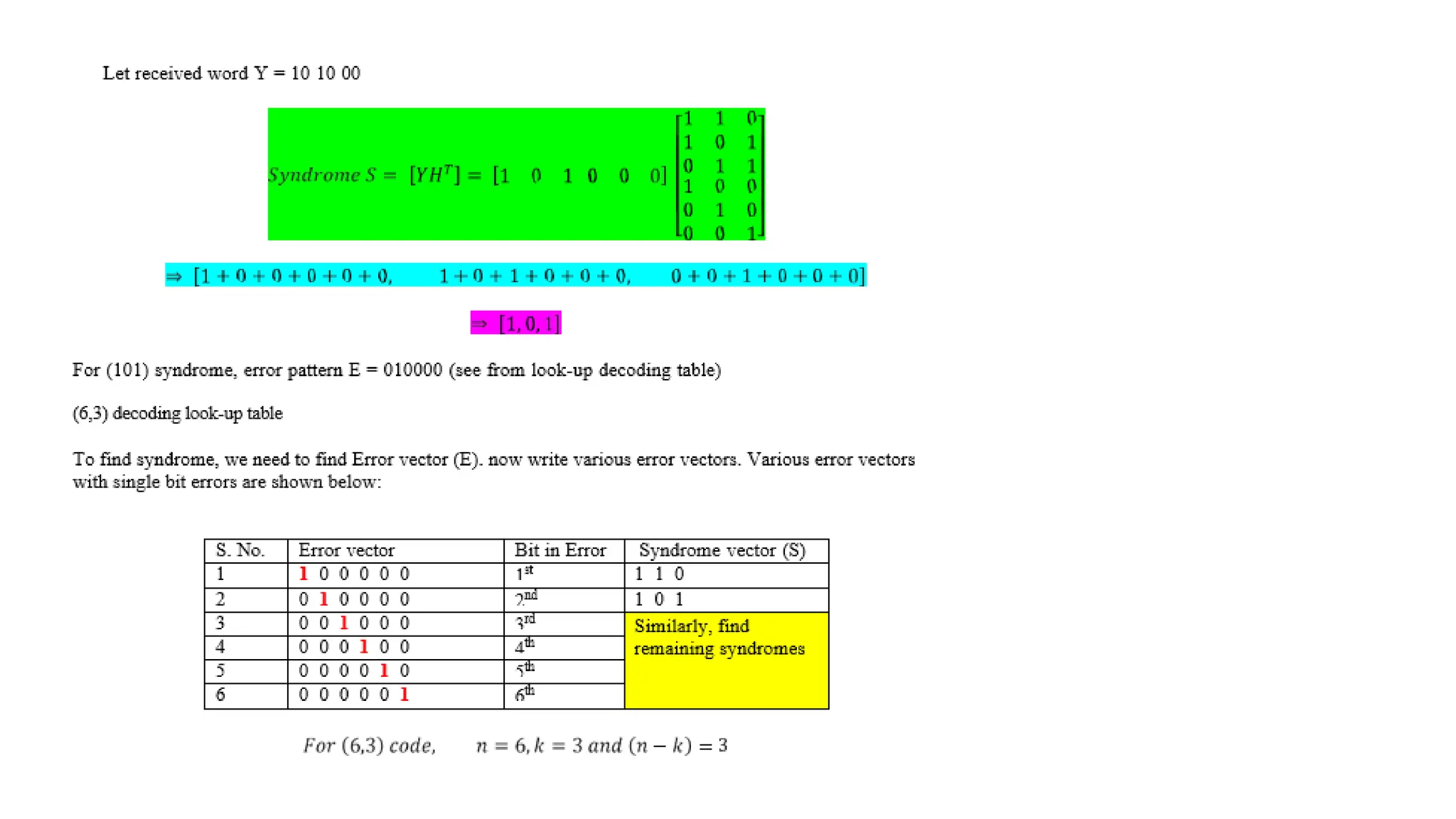

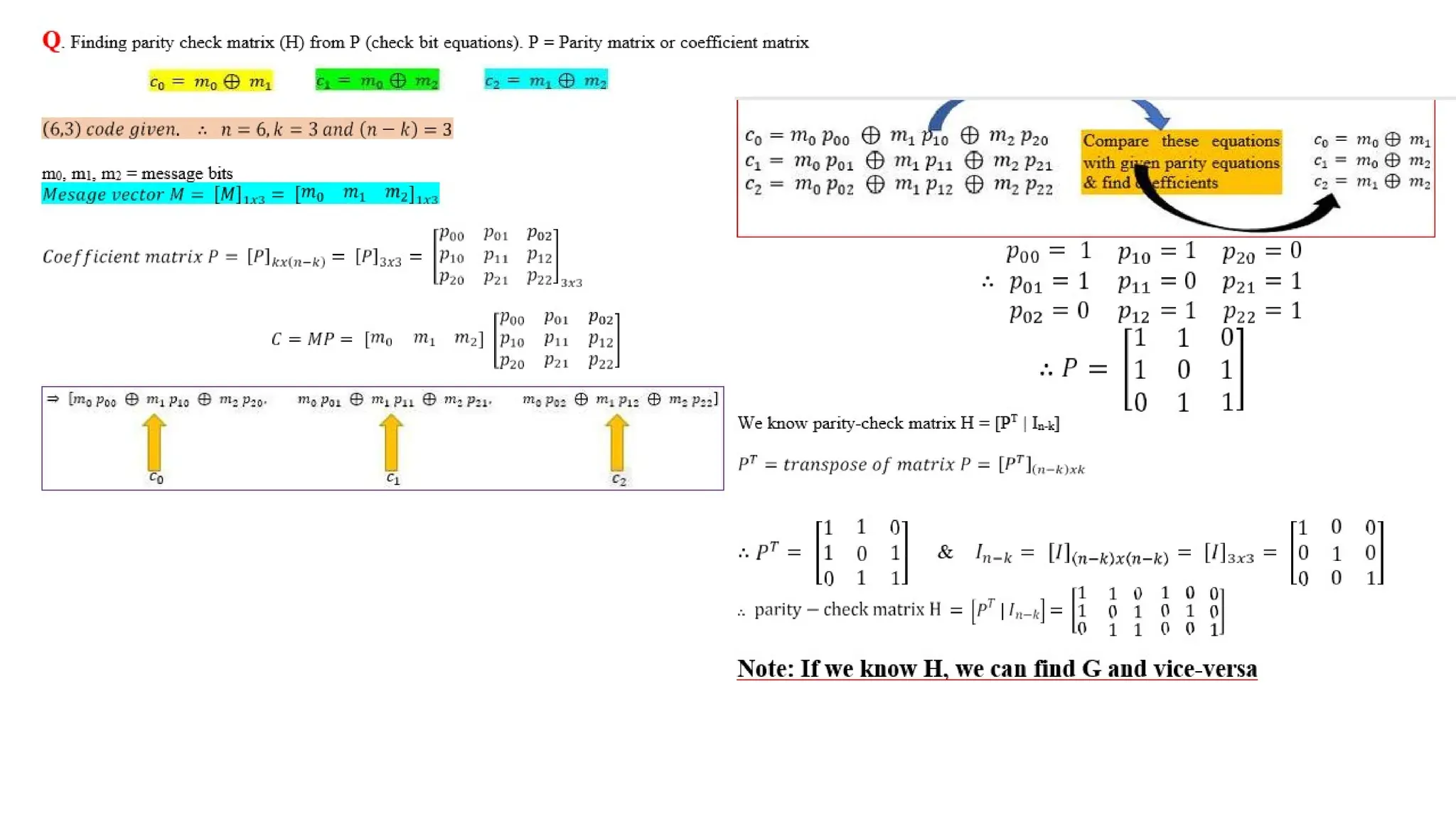

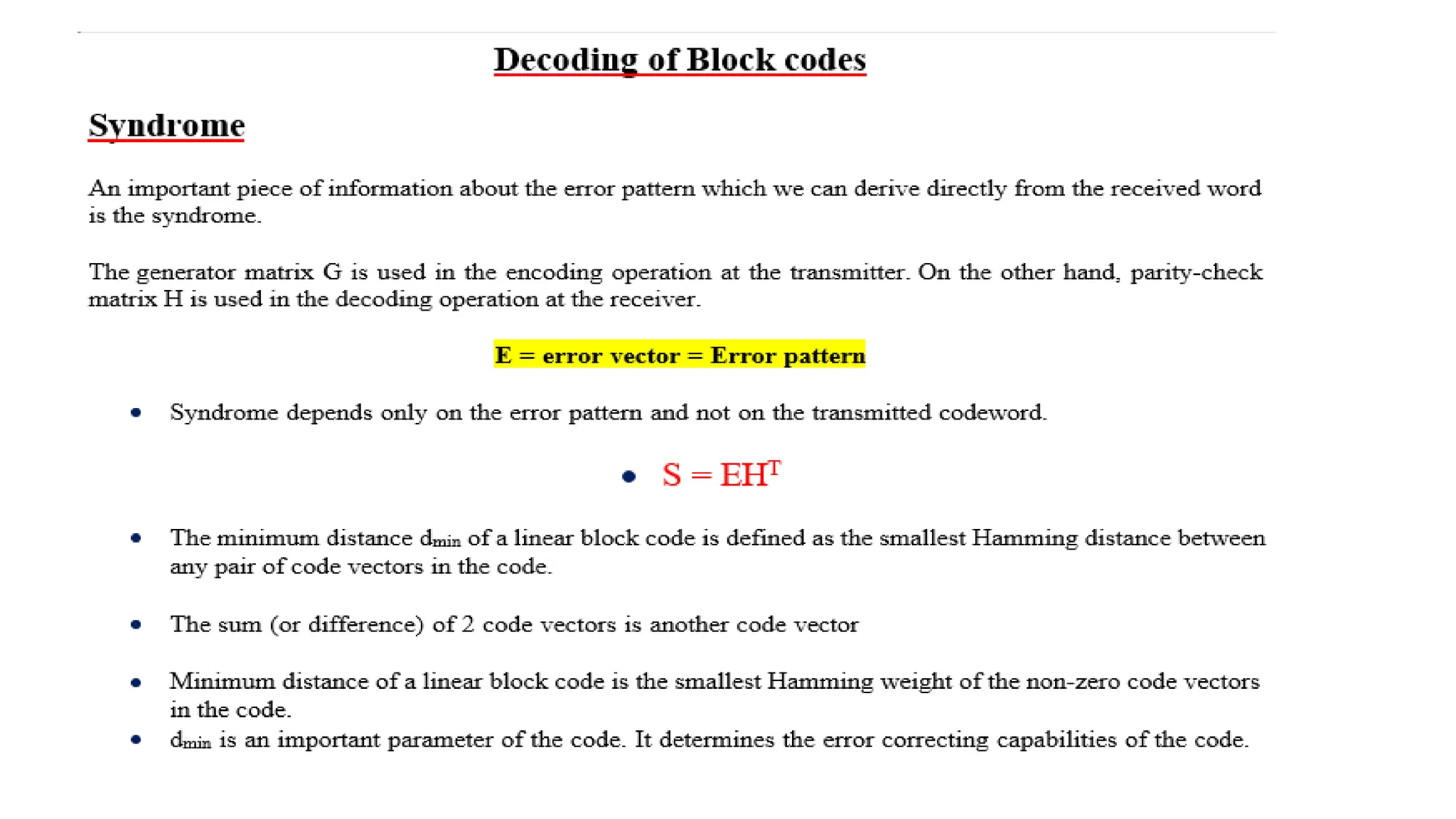

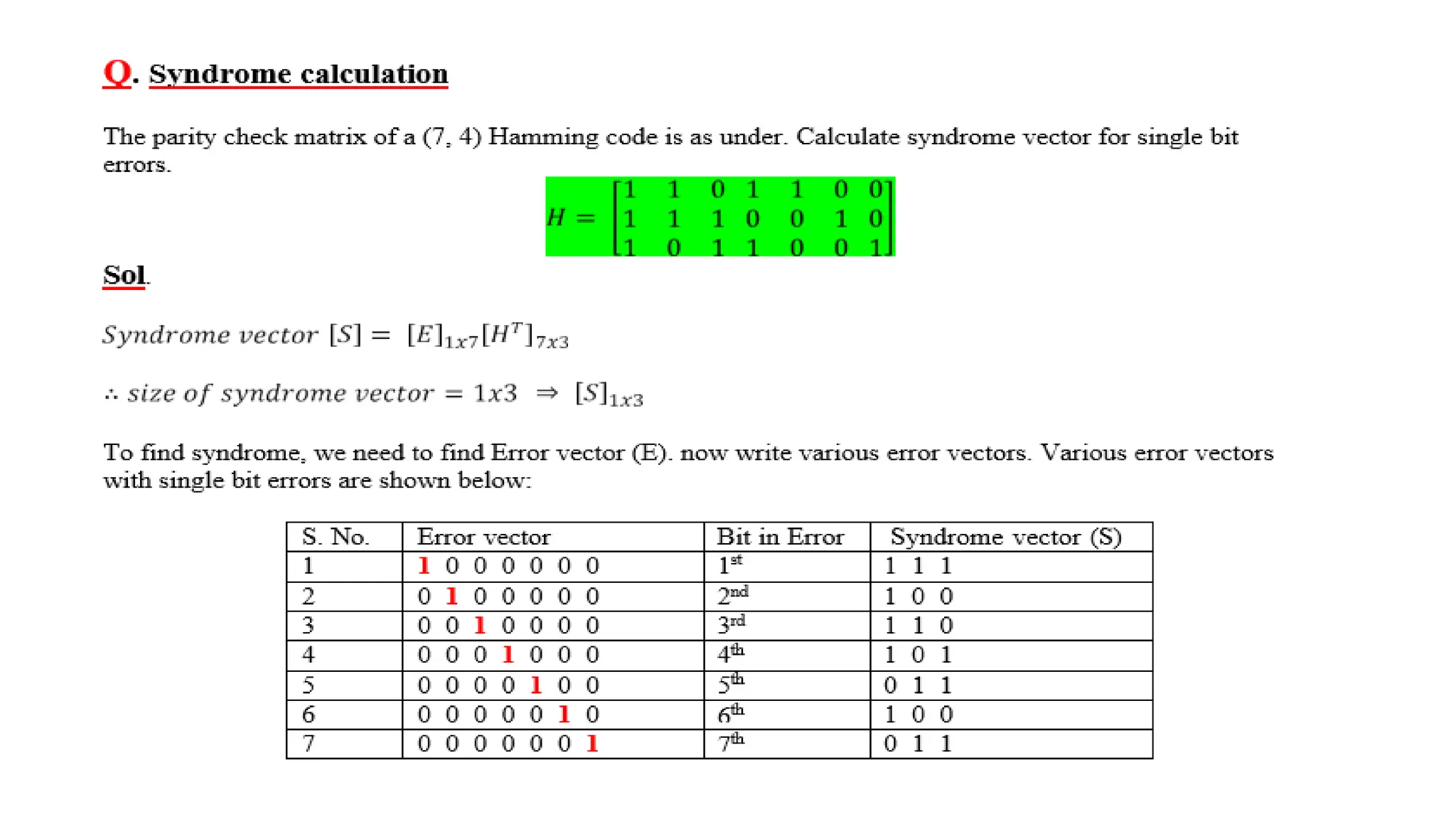

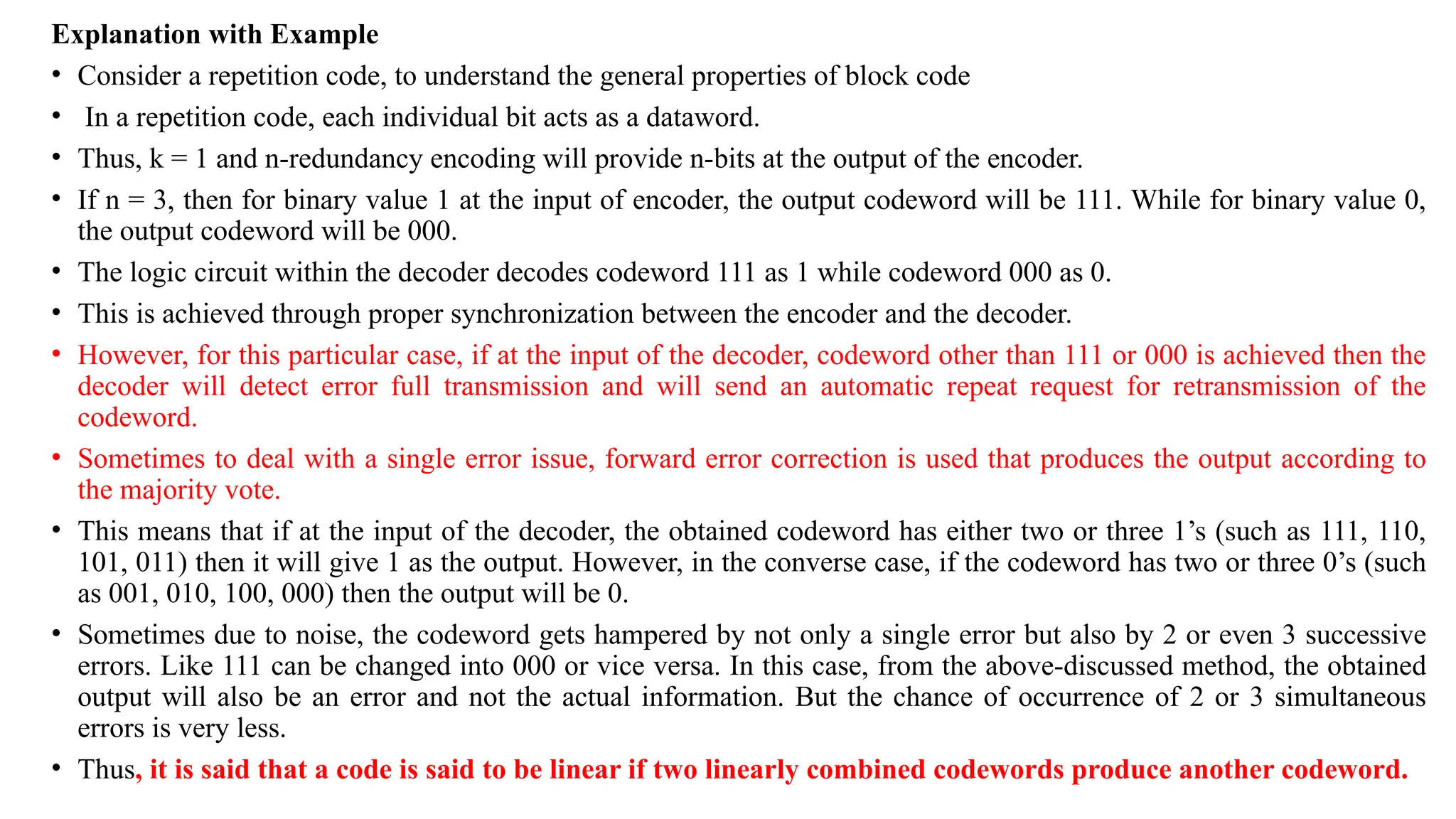

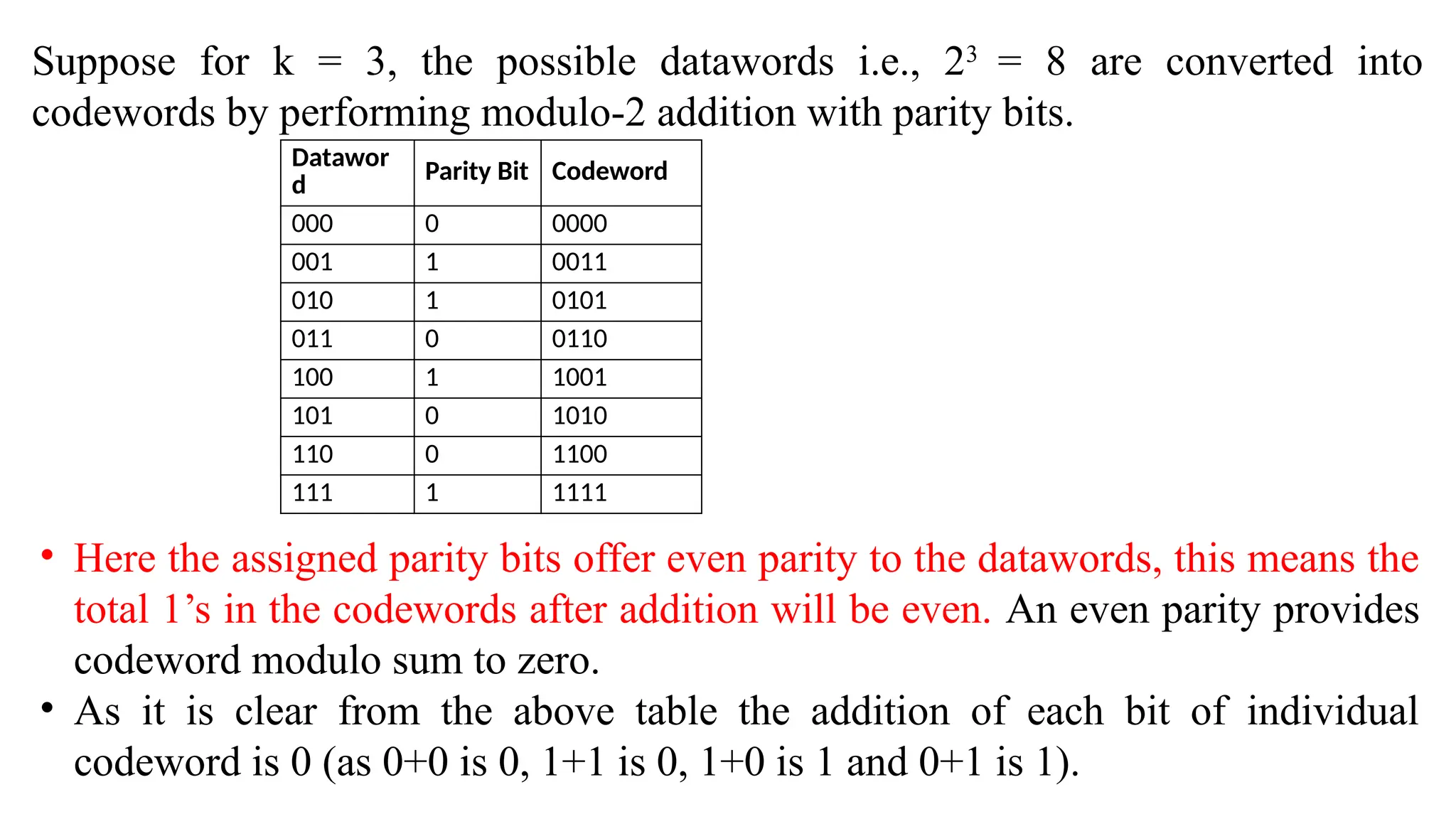

The document discusses linear block codes, including their generation, capabilities, and syndrome calculations, focusing on error-correcting codes that detect and correct transmission errors in digital signals. It explains the structure of linear block codes, the encoding and decoding process, and introduces key concepts like codewords, datawords, code rates, and Hamming distance. Additionally, it outlines methods for error detection and correction, including the use of parity check matrices and the syndrome vector for identifying errors in transmitted messages.

![Linear Encoding of Block Codes

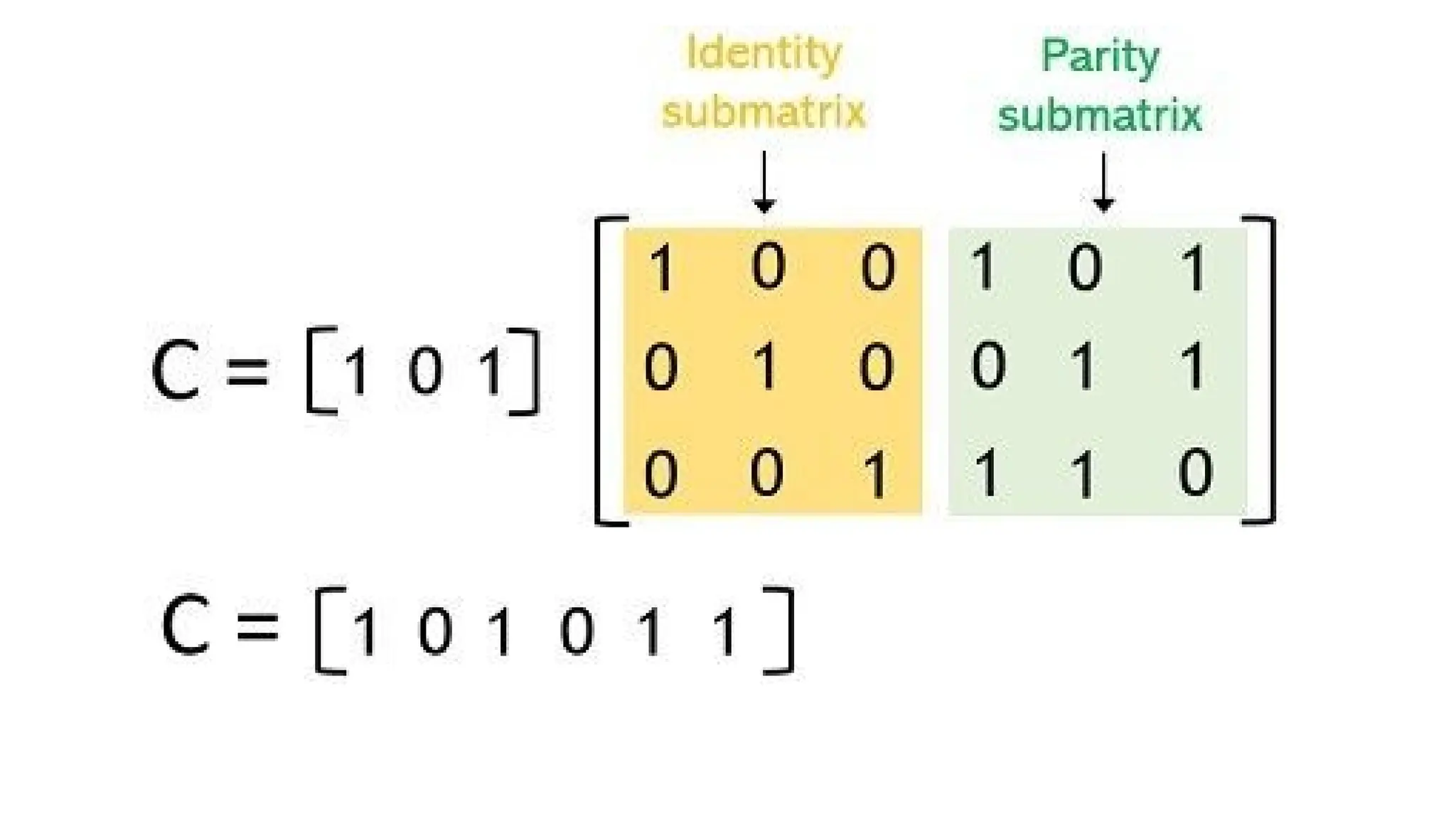

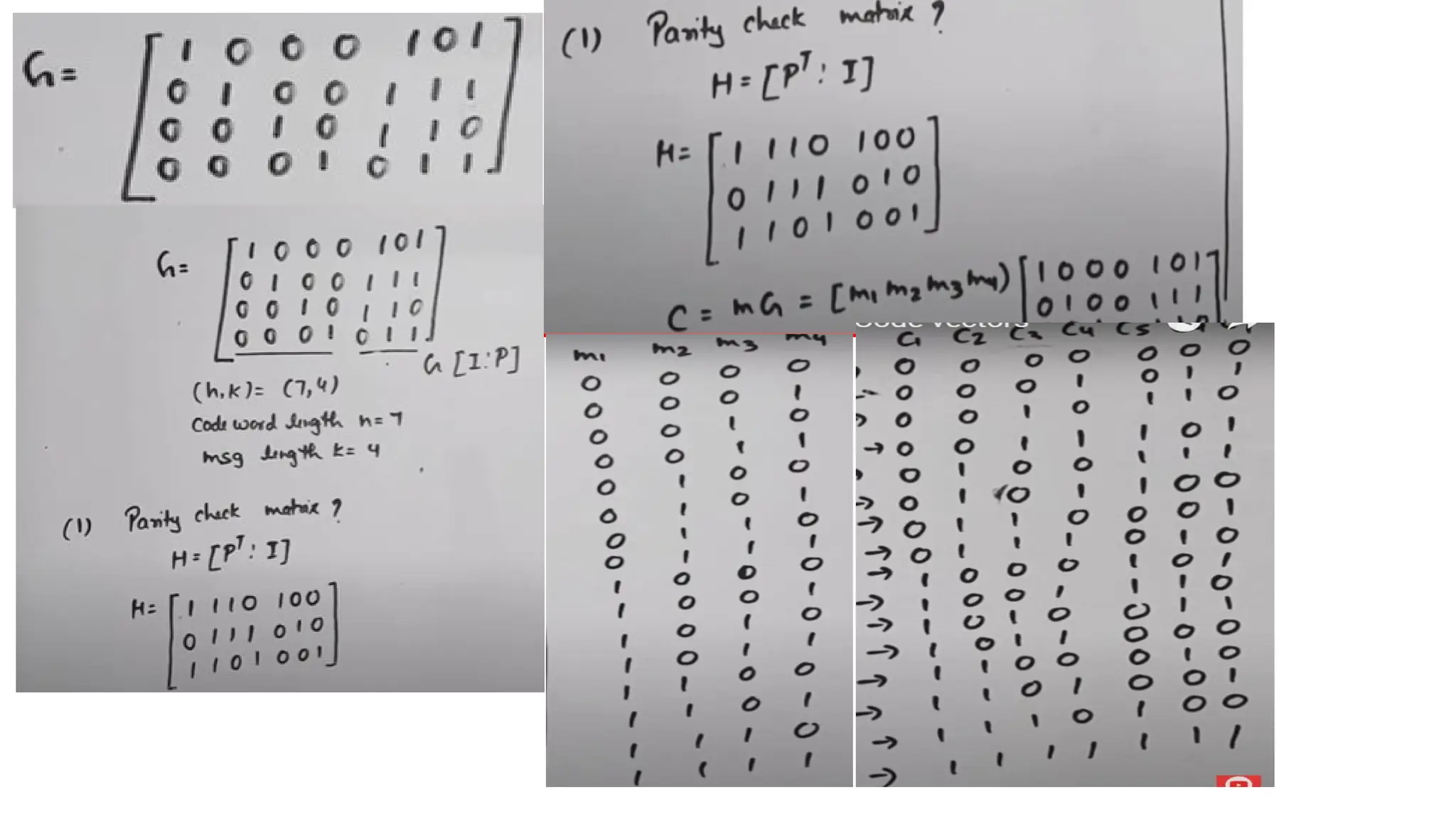

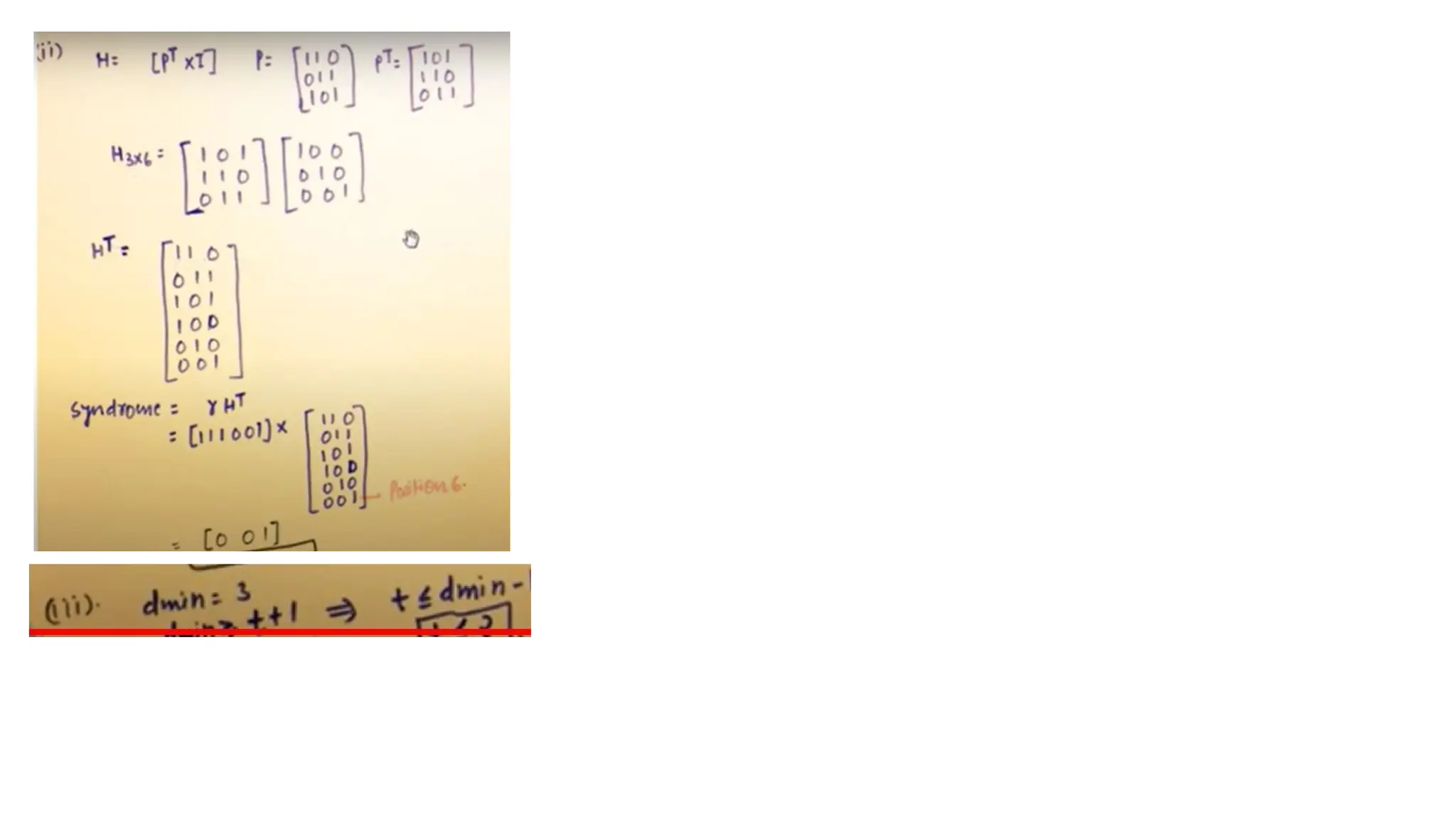

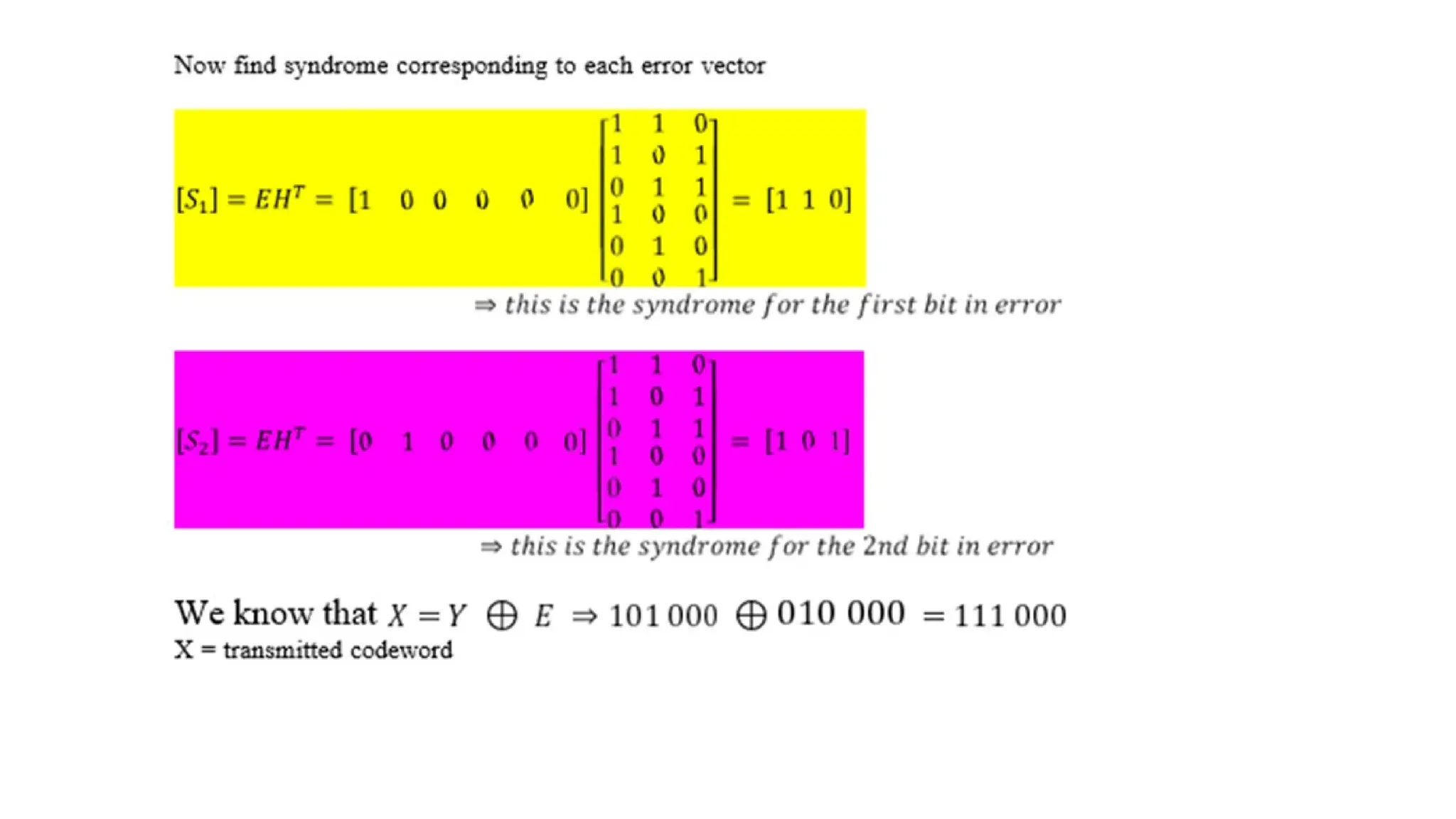

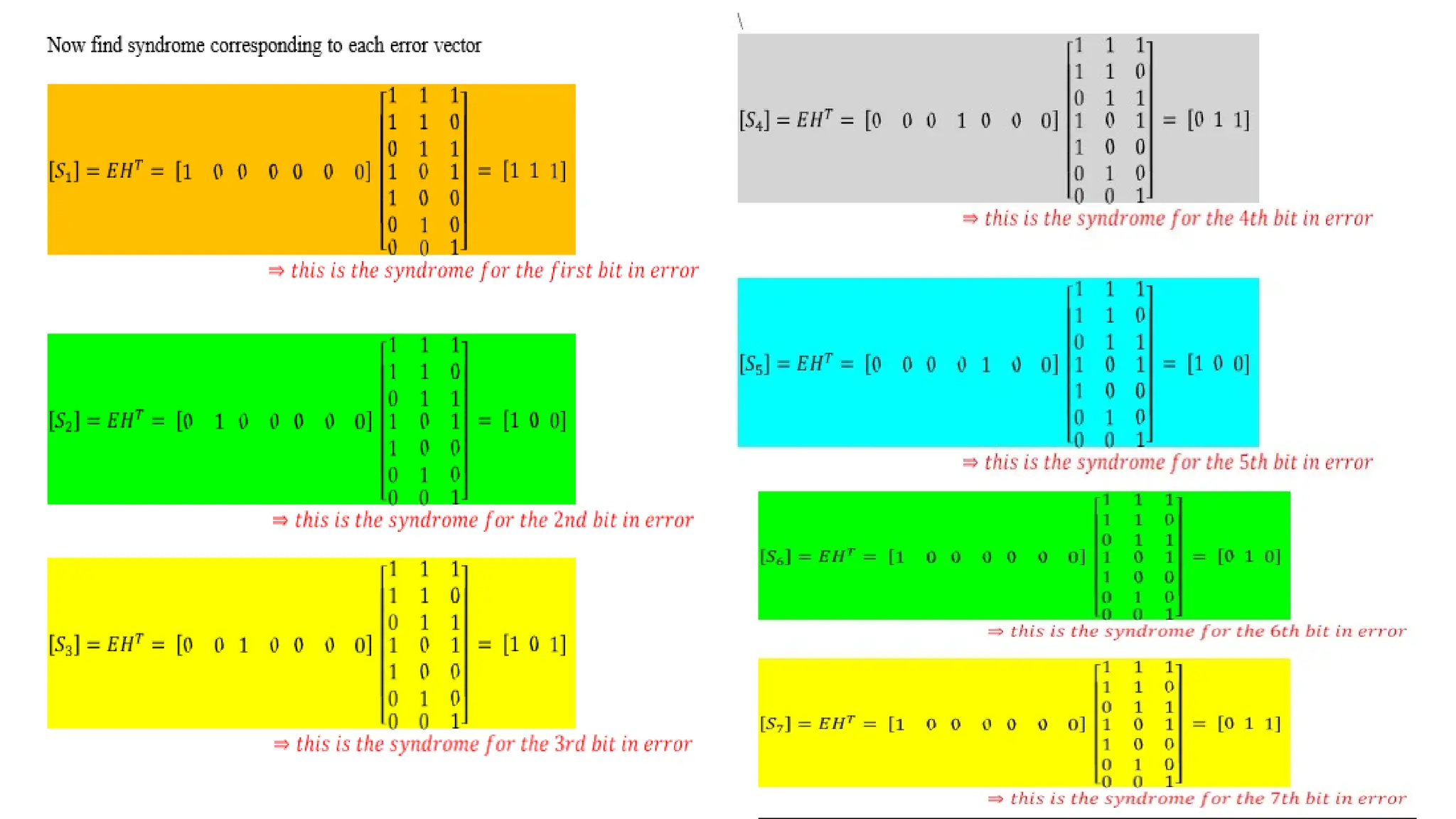

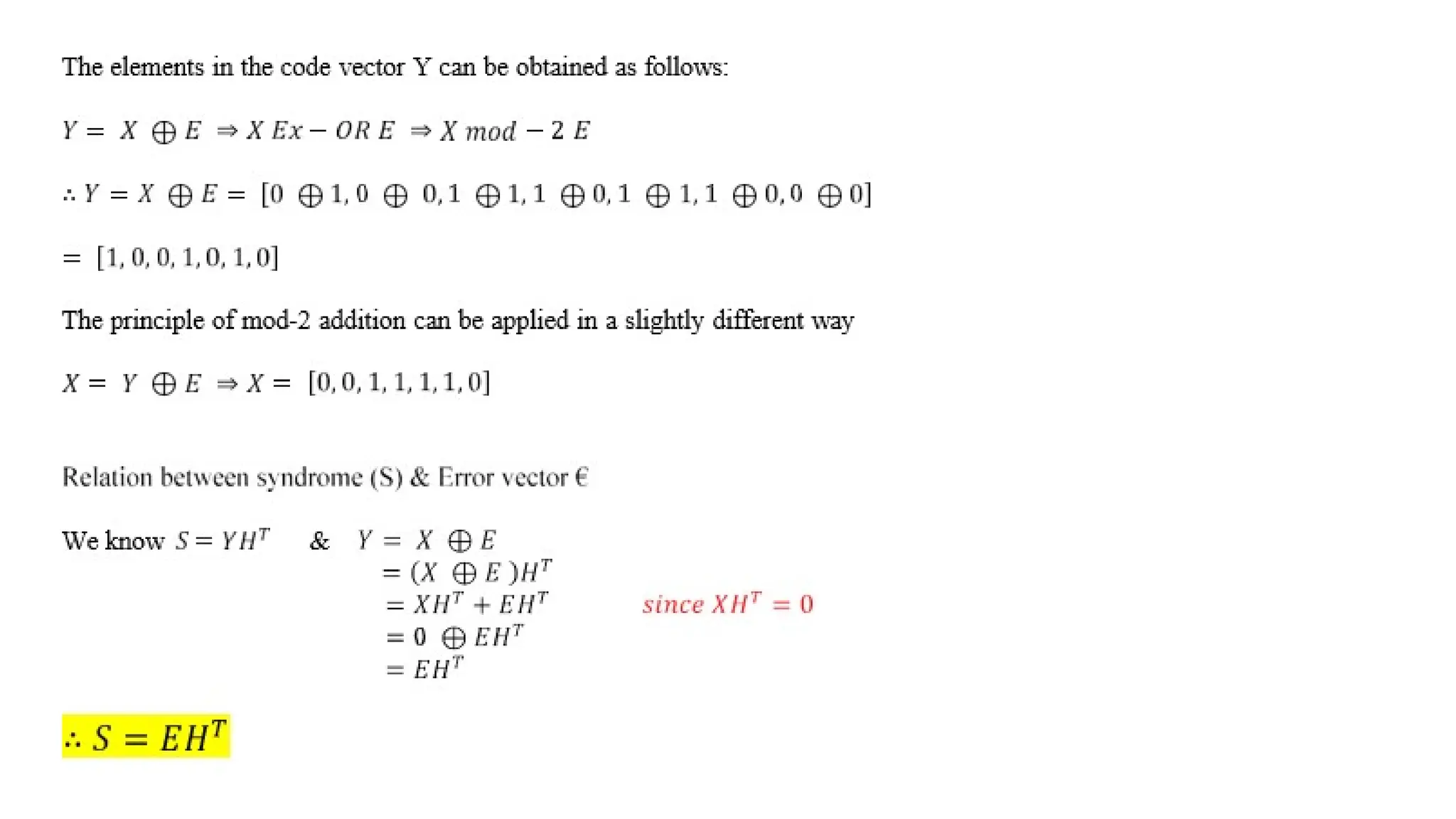

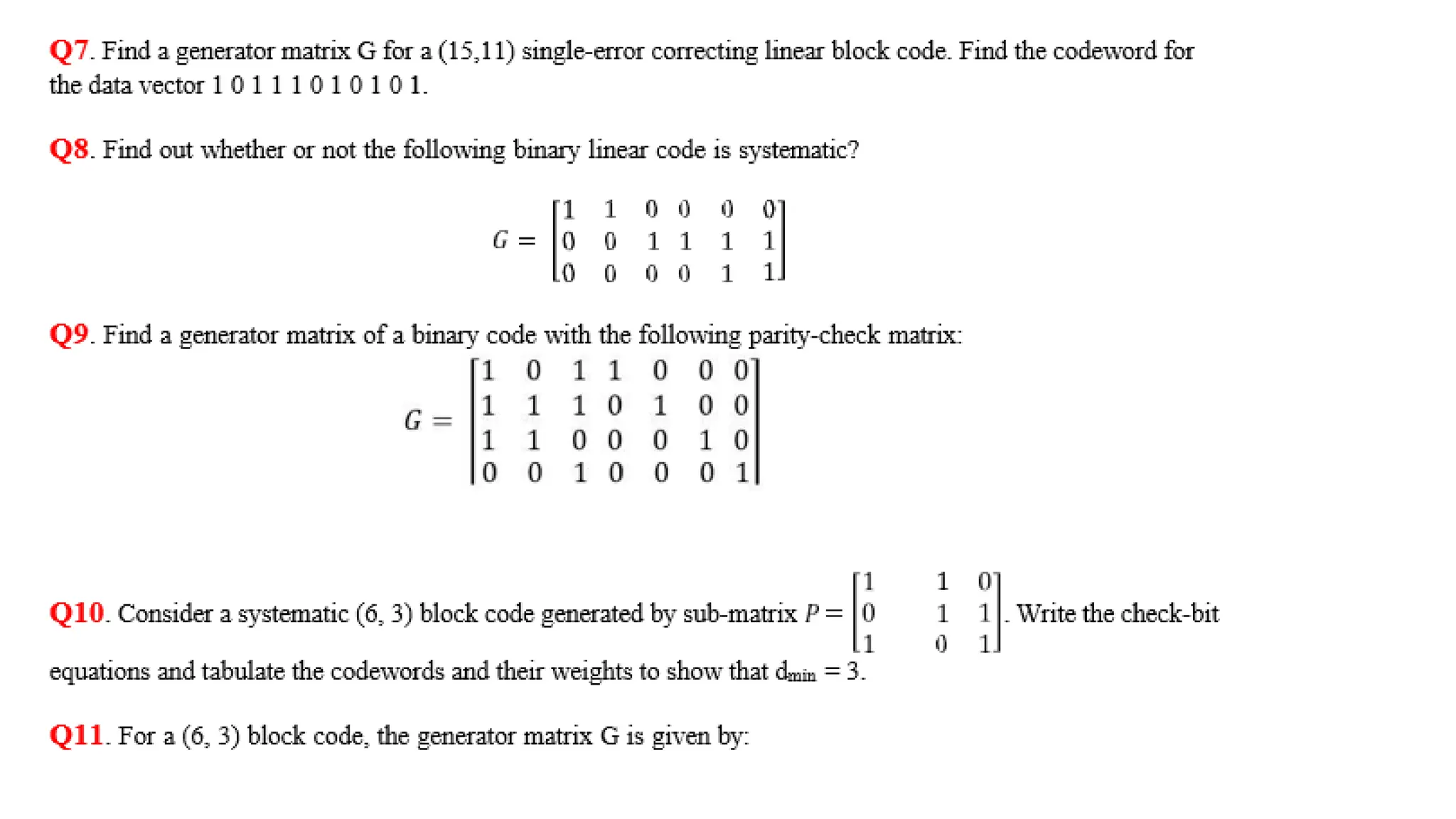

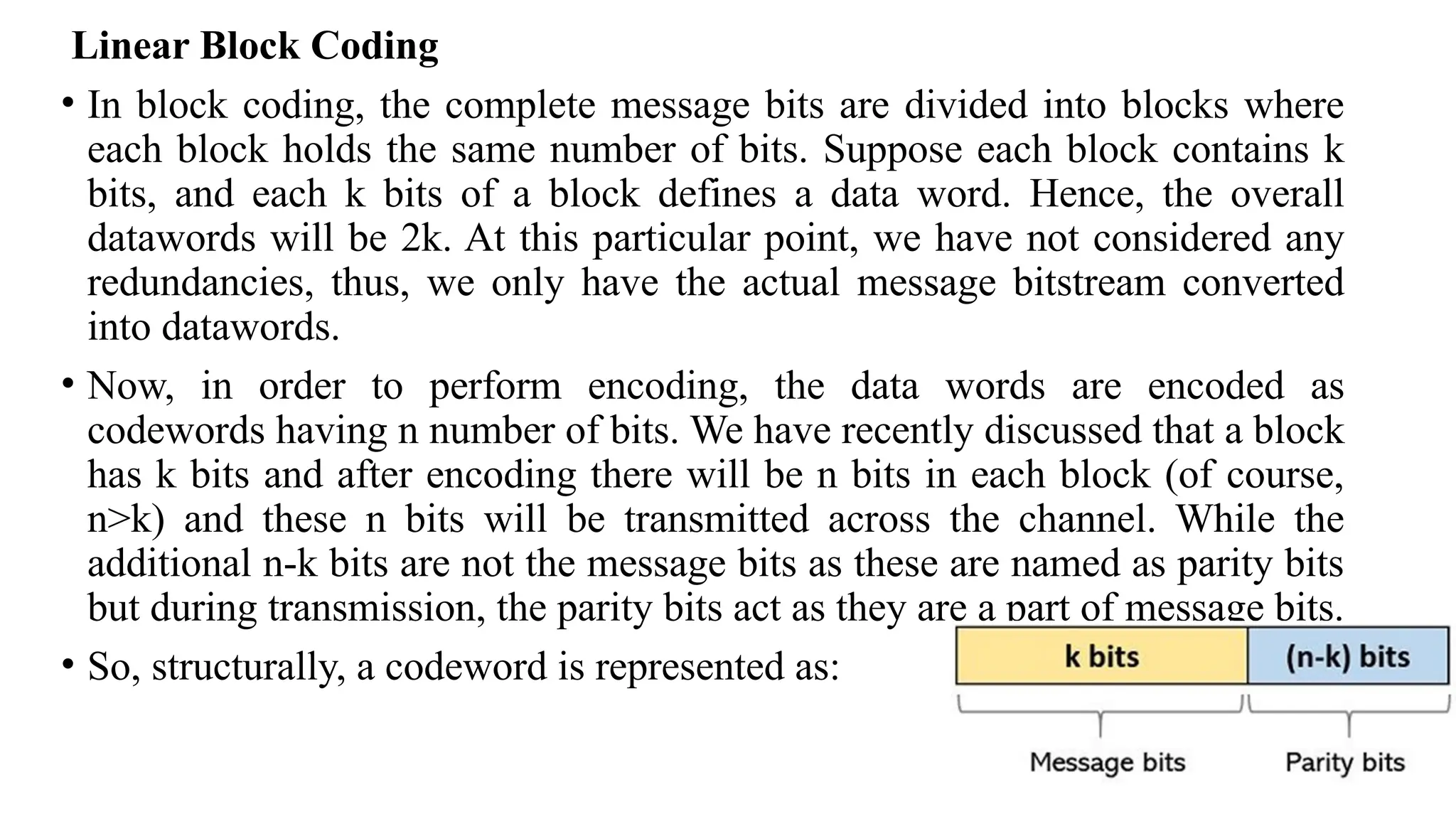

• Now, consider matrices to understand more about linear block codes.

• Suppose, we have a dataword, 101, represented by row vector d. d = [101]

• The codeword for this with the even parity approach discussed above will be 1010, given by row

vector c. c = [1010]

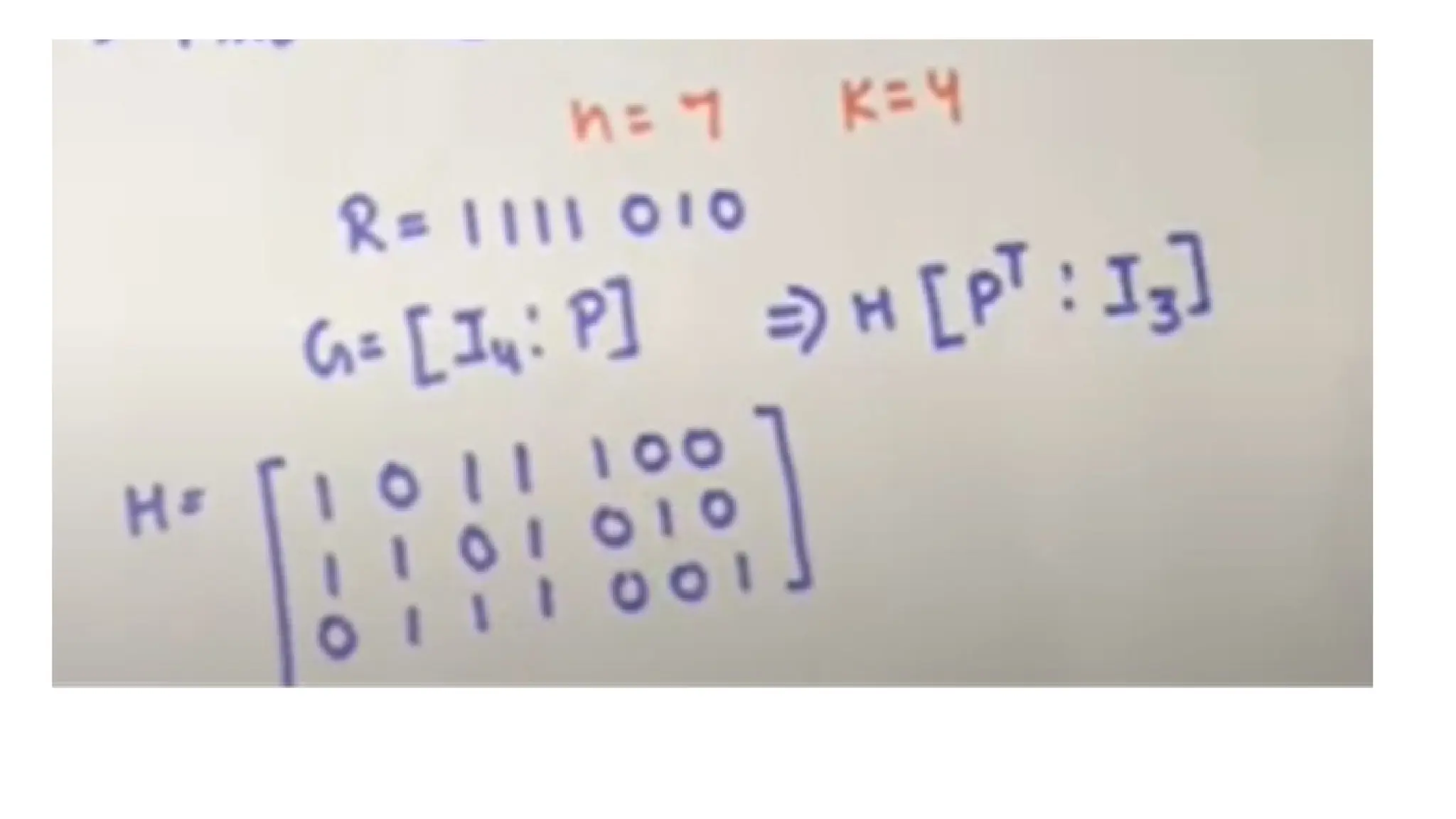

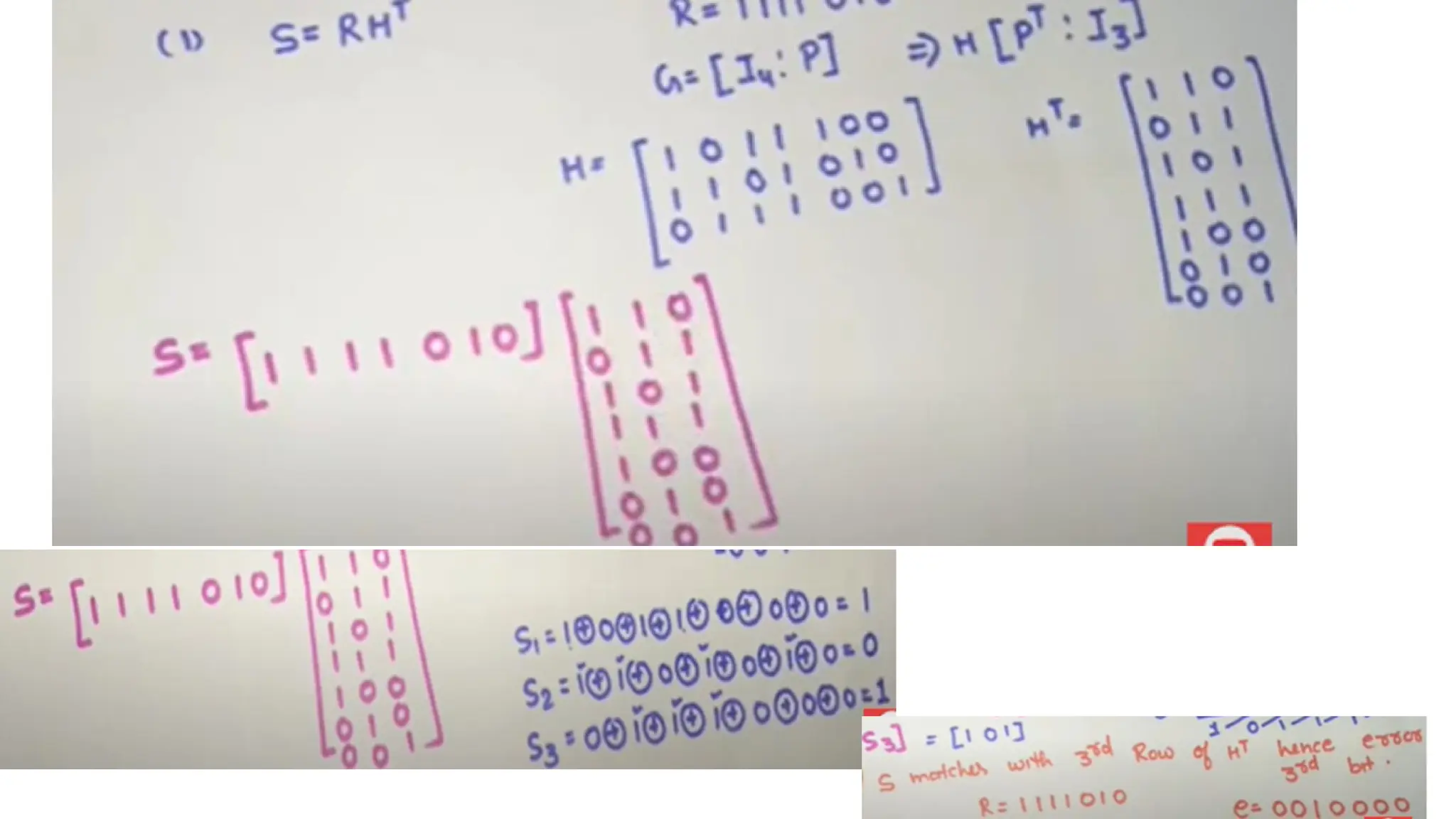

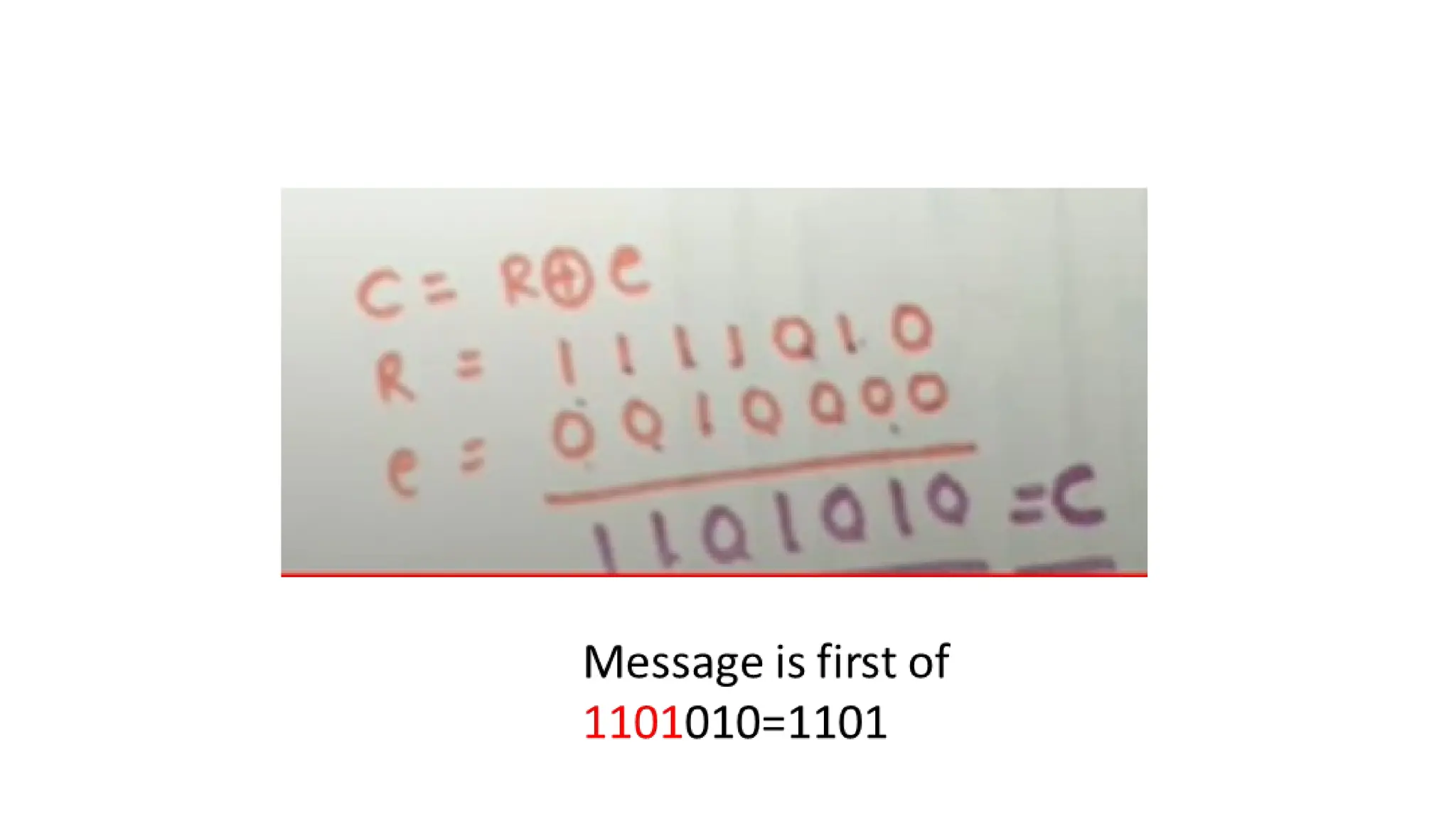

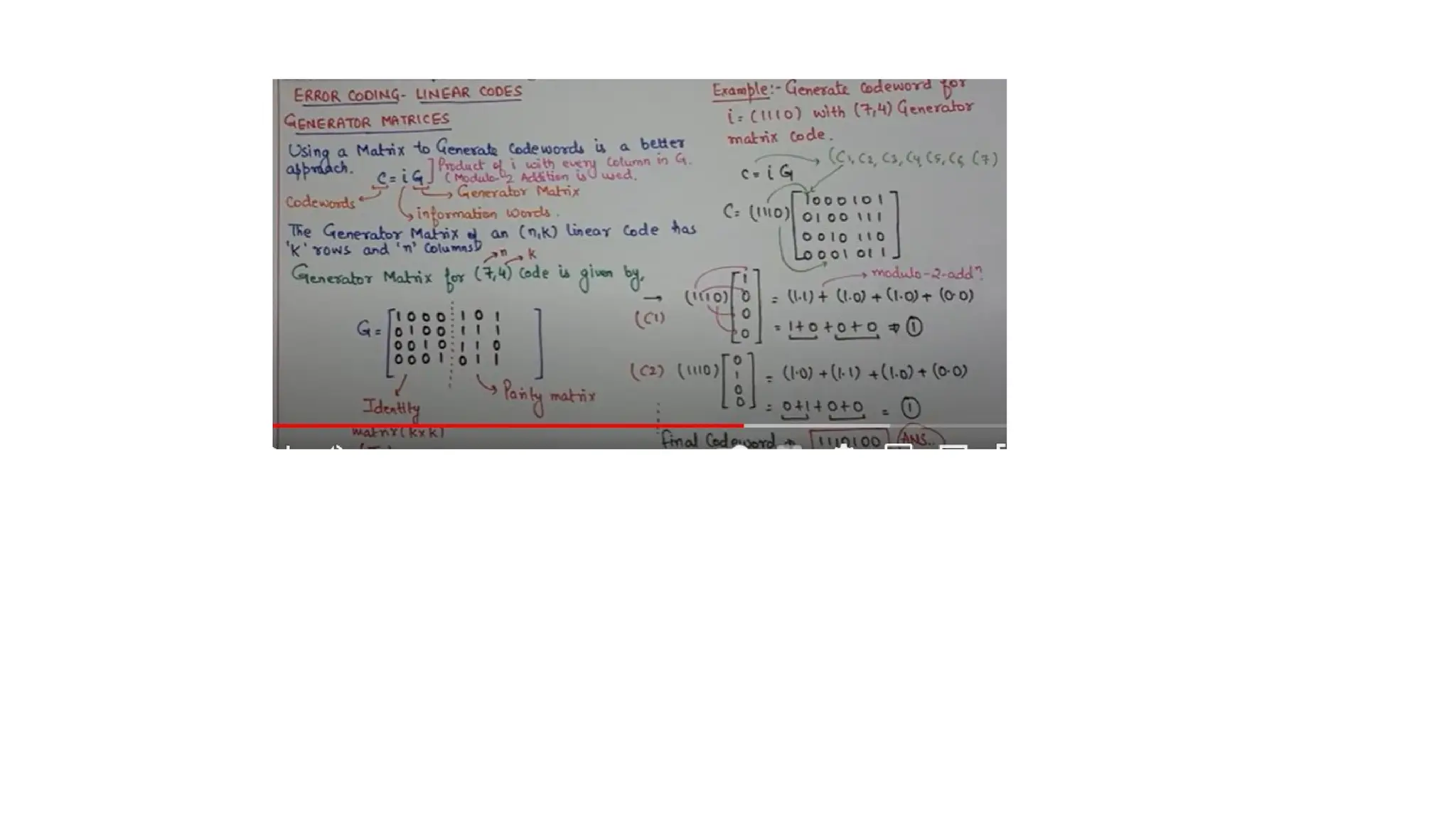

• Generally, generator matrix, G is used to produce codeword from dataword. The relation between c, d

and G is given as: c = dG

• For a code (6,3), there will be 6 bits in codeword and 3 in the dataword.

• In a systematic code, the most common arrangement has dataword at the beginning of the

codeword. To get this, identity submatrix is used in conjunction with parity submatrix and

using the relation d.G, we can have

• It is clearly shown that

dataword is present as the

first 3 bits of the obtained

codeword while the rest 3 are

the parity bits.](https://image.slidesharecdn.com/linearblockcode-241114081459-4eaabc18/75/linear-block-code-pptxjdkdidjdjdkdkidndndjdj-11-2048.jpg)
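The relation c = dG can be checked on the even-parity example with a short Python sketch. It assumes that the (4,3) even-parity code uses the systematic generator matrix G = [I_3 | p] with p = [1 1 1]^T (the slides' own matrix is not reproduced here), and the names `encode` and `G_PARITY` are hypothetical. Multiplying d = [1 0 1] by this G, with all sums taken modulo 2, yields the codeword 1010.

```python
from itertools import product

# Minimal sketch: linear encoding c = dG over GF(2) (arithmetic modulo 2).
# Assumption: G_PARITY is the systematic generator matrix of the (4,3)
# single even-parity code, i.e. G = [I_3 | p] with p = [1, 1, 1]^T.
G_PARITY = [
    [1, 0, 0, 1],
    [0, 1, 0, 1],
    [0, 0, 1, 1],
]


def encode(d, G):
    """Return the codeword c = dG, computing each bit modulo 2."""
    n = len(G[0])
    # Bit j of c is the mod-2 sum of d[i] * G[i][j] over all rows i.
    return [sum(d[i] * G[i][j] for i in range(len(d))) % 2 for j in range(n)]


c = encode([1, 0, 1], G_PARITY)
print(c)  # [1, 0, 1, 0]

# Every codeword of this code has an even number of 1s.
for bits in product([0, 1], repeat=3):
    assert sum(encode(list(bits), G_PARITY)) % 2 == 0
```

Because every row of this G has even weight, and a mod-2 sum of even-weight vectors also has even weight, every codeword produced by c = dG satisfies the even-parity rule.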
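A systematic (6,3) encoder can be sketched the same way. The parity submatrix `P` below is hypothetical and chosen only for illustration, as are the helper names `systematic_generator` and `encode`. Any 3×3 binary P gives a systematic code, because the identity part of G = [I_3 | P] copies the dataword unchanged into the first 3 bits of c = dG.

```python
from itertools import product

# Minimal sketch of a systematic (6,3) linear block code, G = [I_3 | P].
# Assumption: P below is a hypothetical parity submatrix chosen only for
# illustration; it is not taken from the slides.
K, N = 3, 6
P = [
    [1, 1, 0],
    [0, 1, 1],
    [1, 0, 1],
]


def systematic_generator(P):
    """Build G = [I_k | P]: a k x k identity followed by the parity submatrix."""
    k = len(P)
    return [[1 if i == j else 0 for j in range(k)] + P[i] for i in range(k)]


def encode(d, G):
    """Return the codeword c = dG over GF(2)."""
    return [sum(di * row[j] for di, row in zip(d, G)) % 2 for j in range(len(G[0]))]


G = systematic_generator(P)
c = encode([1, 0, 1], G)
print(c)      # [1, 0, 1, 0, 1, 1]
print(K / N)  # code rate k/n = 0.5

# Systematic property: the first K bits of every codeword are the dataword.
for bits in product([0, 1], repeat=K):
    assert encode(list(bits), G)[:K] == list(bits)
```

With this particular P, the dataword 101 encodes to 101011: the first 3 bits are the dataword and the last 3 are parity bits. The code rate is k/n = 3/6 = 0.5 regardless of which P is chosen.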