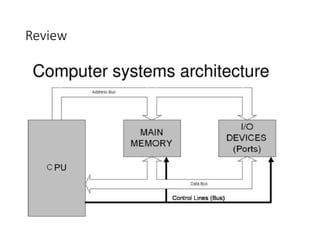

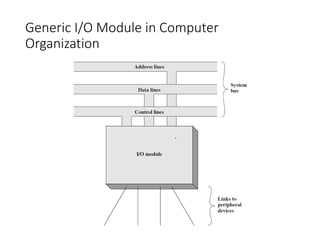

This document discusses techniques for transferring data between the CPU and I/O devices, including programmed I/O, interrupt-driven I/O, and direct memory access (DMA). It describes the basic functioning of an I/O module and compares programmed I/O with interrupt-driven I/O. It then details DMA, which transfers data at high speed directly between memory and I/O devices without the CPU having to move each word itself. The document also covers I/O interfaces, asynchronous data transfer methods such as handshaking, and serial transmission techniques.