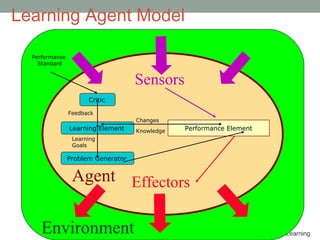

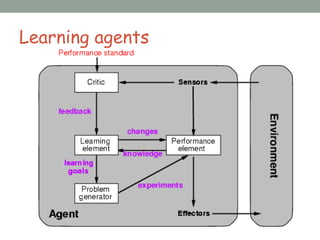

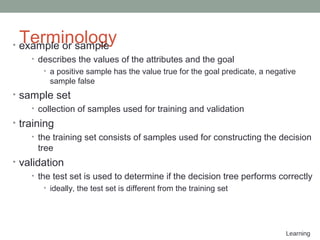

Learning agents improve their performance over time through experience. A learning agent has four main components: a performance element that selects actions, a learning element that modifies the performance element to improve it, a critic that gives feedback on how well the agent is doing, and a problem generator that suggests exploratory actions. There are three main forms of learning: supervised learning uses labeled feedback from an external source, unsupervised learning finds patterns without any feedback, and reinforcement learning relies on rewards that may arrive only after a sequence of actions. Learning agents can use decision trees to learn concepts from the attributes of examples and to make predictions about new cases.
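To make the decision-tree idea concrete, here is a minimal sketch of supervised learning over attribute-valued examples. It assumes an ID3-style learner that picks the attribute with the highest information gain at each node, which is one common choice and not necessarily the method the slides present. The function names (`entropy`, `information_gain`, `learn_tree`, `predict`) and the toy "should the agent wait?" data are hypothetical, made up for illustration.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(examples, attribute, target):
    """Expected reduction in entropy from splitting the examples on `attribute`."""
    before = entropy([e[target] for e in examples])
    after = 0.0
    for value in {e[attribute] for e in examples}:
        subset = [e[target] for e in examples if e[attribute] == value]
        after += len(subset) / len(examples) * entropy(subset)
    return before - after


def learn_tree(examples, attributes, target):
    """Build a tree recursively; leaves are labels, inner nodes are dicts."""
    labels = [e[target] for e in examples]
    majority = Counter(labels).most_common(1)[0][0]
    # Stop when the examples agree on a label or no attributes remain.
    if len(set(labels)) == 1 or not attributes:
        return majority
    best = max(attributes, key=lambda a: information_gain(examples, a, target))
    remaining = [a for a in attributes if a != best]
    branches = {}
    for value in {e[best] for e in examples}:
        subset = [e for e in examples if e[best] == value]
        branches[value] = learn_tree(subset, remaining, target)
    return {"attribute": best, "branches": branches, "default": majority}


def predict(tree, example):
    """Follow branches until a leaf; fall back to the node's majority label."""
    while isinstance(tree, dict):
        tree = tree["branches"].get(example[tree["attribute"]], tree["default"])
    return tree


# Hypothetical training data: the agent should wait only if it is dry and it is hungry.
examples = [
    {"raining": "yes", "hungry": "yes", "wait": "no"},
    {"raining": "yes", "hungry": "no", "wait": "no"},
    {"raining": "no", "hungry": "yes", "wait": "yes"},
    {"raining": "no", "hungry": "no", "wait": "no"},
]
tree = learn_tree(examples, ["raining", "hungry"], "wait")
predictions = [predict(tree, e) for e in examples]
```

In terms of the four components, `learn_tree` plays the role of the learning element, the labeled `wait` column stands in for the external feedback of supervised learning, and `predict` is what a performance element would call when choosing an action.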