
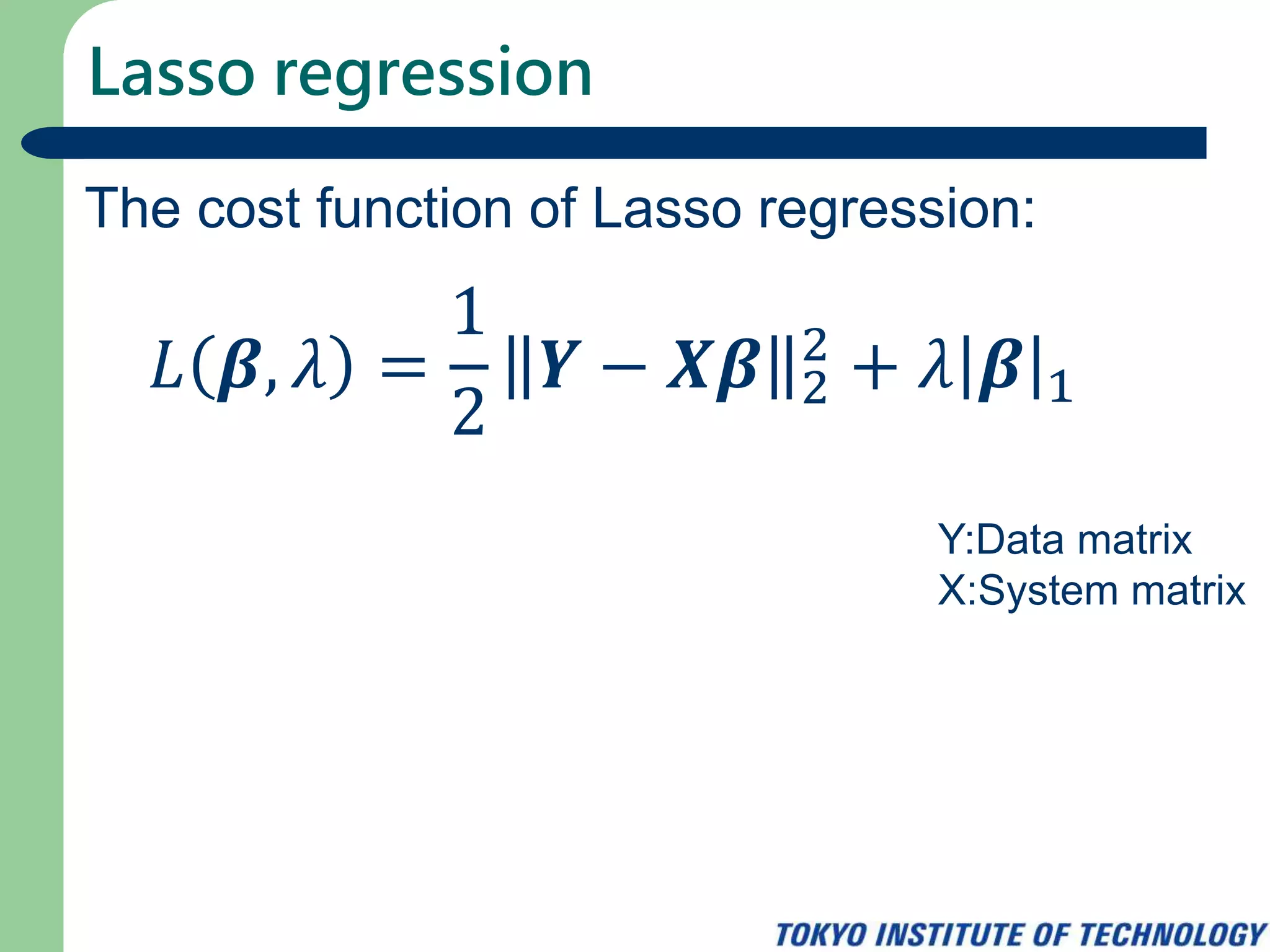

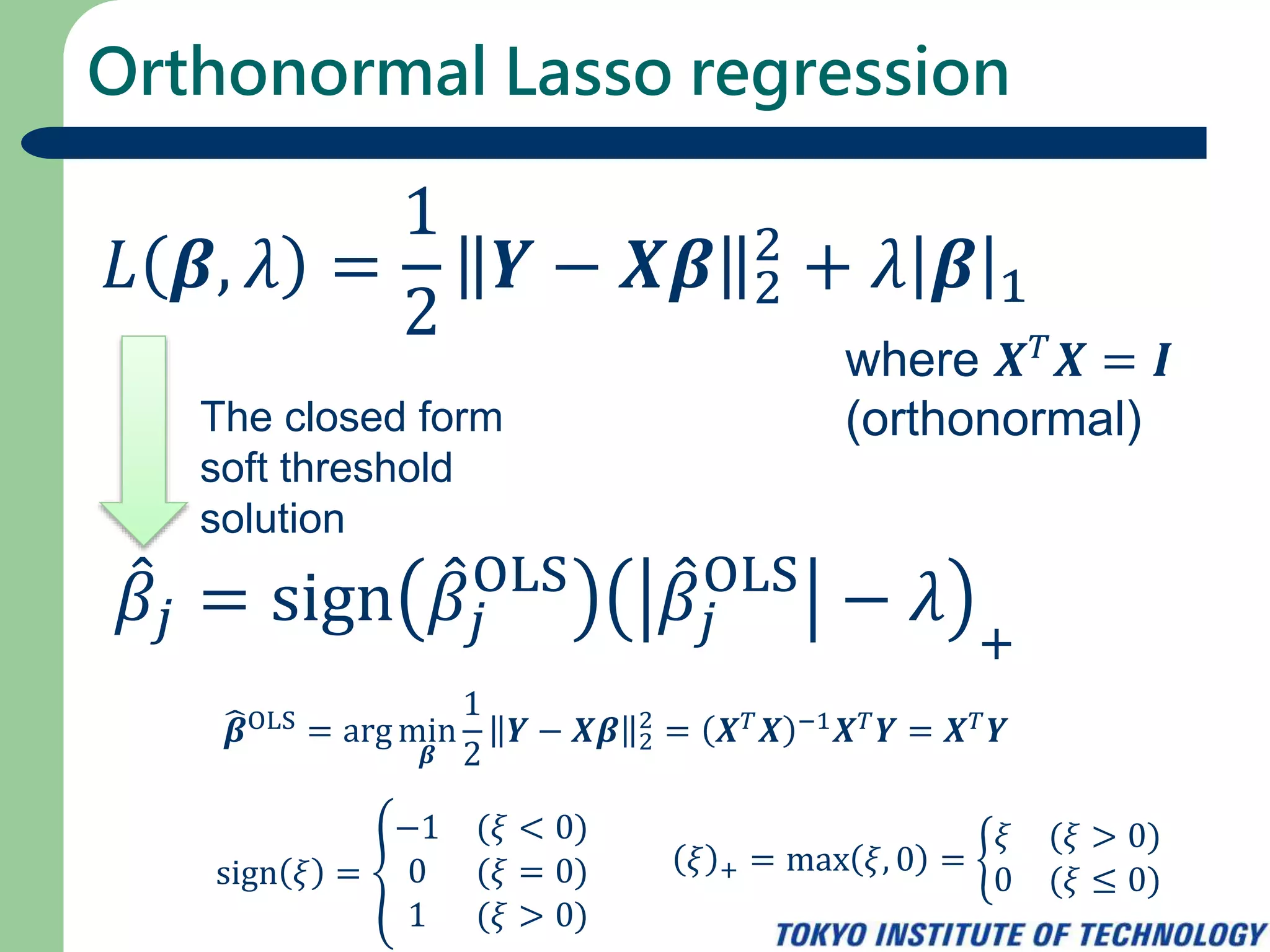

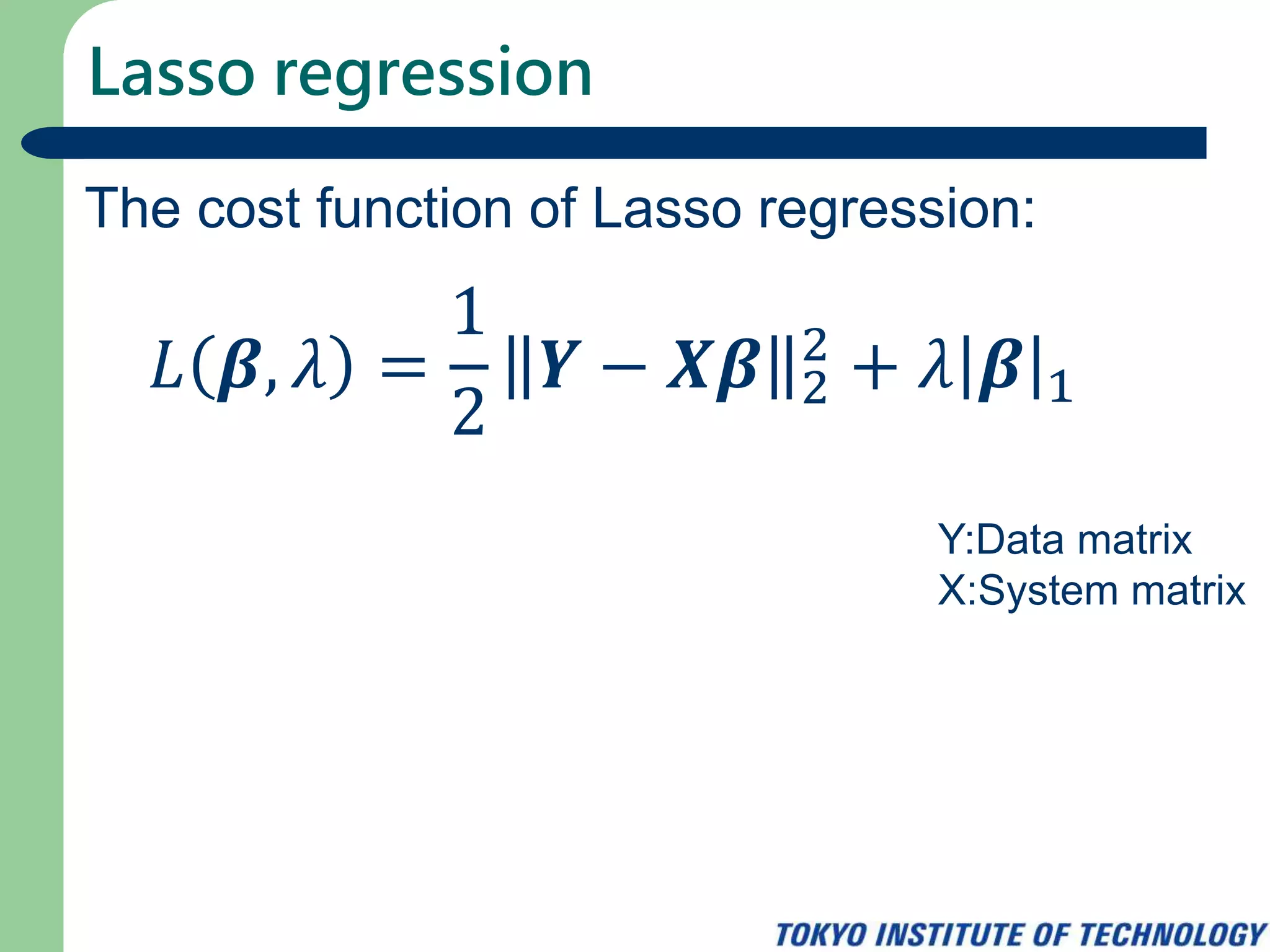

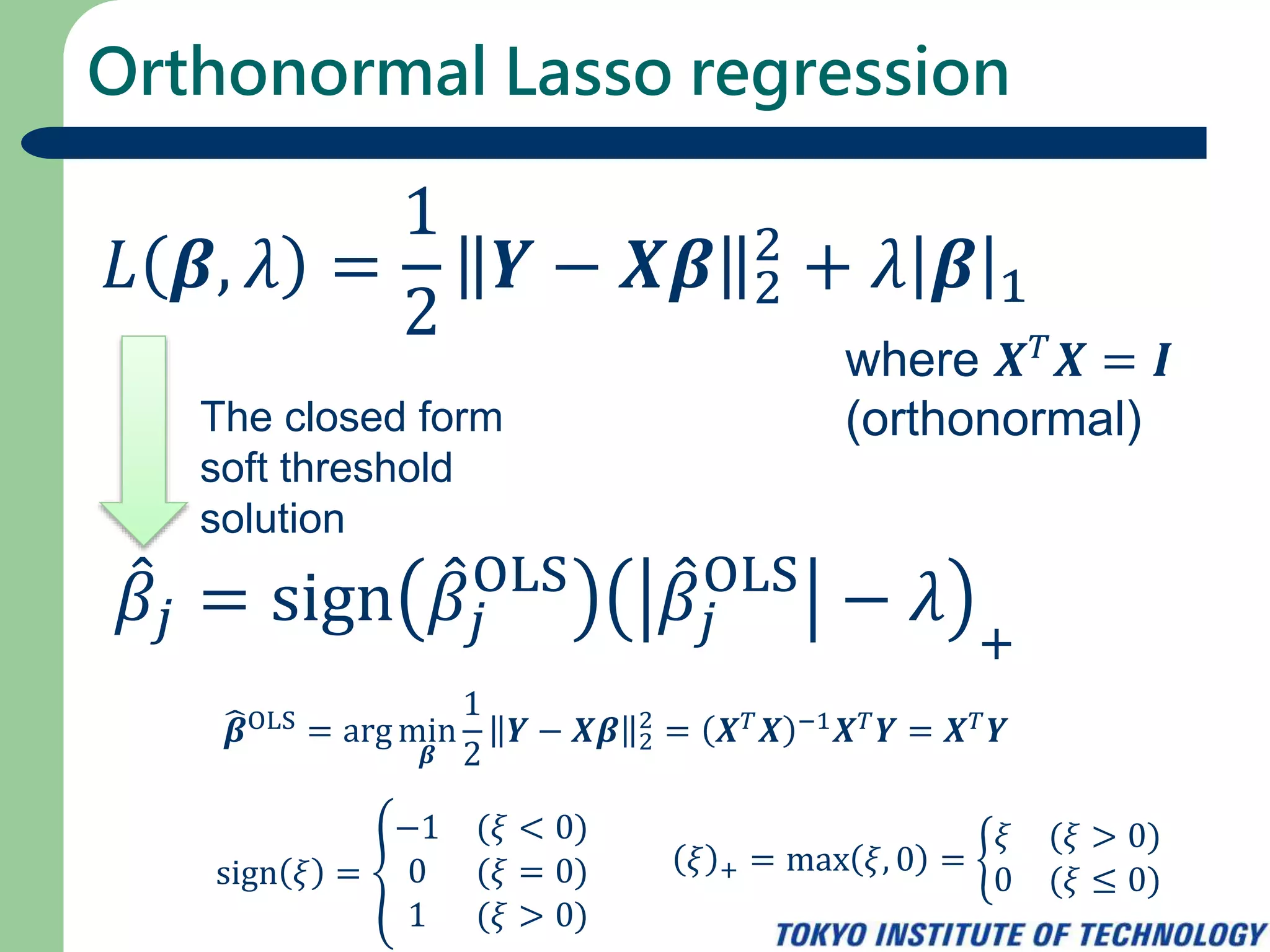

This document derives the closed-form soft-threshold solution for Lasso regression. It begins by defining the cost function for Lasso regression and for Lasso regression with an orthonormal design matrix. It then works through the derivation step by step, treating the cost function element-wise. The derivation splits into three cases according to how the ordinary least squares (OLS) estimate compares with the threshold: less than it, equal to it, or greater than it. The soft-threshold solution is given for each case. The final solution is written compactly as the sign of the OLS estimate times its soft-thresholded magnitude, that is, the magnitude reduced by the threshold and clipped at zero.
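The element-wise derivation can be sketched as follows. This is a sketch under an assumed normalization, not necessarily the one used on the slides. It takes the cost as $\tfrac12\lVert y - X\beta\rVert_2^2 + \lambda\lVert\beta\rVert_1$ with $X^\top X = I$, so the threshold is $\lambda$ itself. Without the factor $\tfrac12$, the threshold becomes $\lambda/2$. Because $X^\top X = I$, the cost separates into one scalar problem per coefficient. Each problem is then solved by case on the sign of $\beta_j$, which is equivalent to locating $\hat\beta_j^{\mathrm{OLS}}$ relative to $\pm\lambda$.

```latex
% Assumed normalization: L(beta) = (1/2)||y - X beta||_2^2 + lambda ||beta||_1, with X^T X = I
\begin{aligned}
L(\beta) &= \tfrac{1}{2}\lVert y - X\beta\rVert_2^2 + \lambda\lVert\beta\rVert_1 \\
&= \tfrac{1}{2}y^\top y - \beta^\top X^\top y + \tfrac{1}{2}\beta^\top X^\top X\beta + \lambda\lVert\beta\rVert_1 \\
&= \tfrac{1}{2}y^\top y + \sum_{j=1}^{p}\Big(\tfrac{1}{2}\beta_j^2 - \beta_j\hat\beta_j^{\mathrm{OLS}} + \lambda\lvert\beta_j\rvert\Big),
\qquad \hat\beta^{\mathrm{OLS}} = X^\top y .
\end{aligned}

\hat\beta_j =
\begin{cases}
\hat\beta_j^{\mathrm{OLS}} - \lambda, & \hat\beta_j^{\mathrm{OLS}} > \lambda
  \quad (\beta_j > 0:\ \beta_j - \hat\beta_j^{\mathrm{OLS}} + \lambda = 0) \\
0, & \lvert\hat\beta_j^{\mathrm{OLS}}\rvert \le \lambda
  \quad (0 \in -\hat\beta_j^{\mathrm{OLS}} + \lambda[-1,1]) \\
\hat\beta_j^{\mathrm{OLS}} + \lambda, & \hat\beta_j^{\mathrm{OLS}} < -\lambda
  \quad (\beta_j < 0:\ \beta_j - \hat\beta_j^{\mathrm{OLS}} - \lambda = 0)
\end{cases}
\;=\; \operatorname{sign}\big(\hat\beta_j^{\mathrm{OLS}}\big)\big(\lvert\hat\beta_j^{\mathrm{OLS}}\rvert - \lambda\big)_+ .
```

In the middle case, $\beta_j = 0$ is optimal exactly when zero lies in the subdifferential of the scalar cost at $0$. This is why every coefficient whose OLS estimate falls inside $[-\lambda, \lambda]$ is set exactly to zero.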

The presentation introduces Lasso regression, its cost function, and the derivation of its closed-form soft-threshold solution. The result matters for high-dimensional data analysis because it shows how Lasso shrinks small coefficients exactly to zero.
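The closed-form result can be checked numerically on a single coordinate. The sketch below is illustrative only. It assumes the cost $\tfrac12\lVert y - X\beta\rVert_2^2 + \lambda\lVert\beta\rVert_1$ with an orthonormal design, which gives a threshold of exactly `lam`. The function names `soft_threshold`, `coordinate_cost`, and `grid_minimizer` are hypothetical helpers, not part of any library. It compares $\operatorname{sign}(\hat\beta^{\mathrm{OLS}})(\lvert\hat\beta^{\mathrm{OLS}}\rvert - \lambda)_+$ against a brute-force grid search over the element-wise cost.

```python
import math


def soft_threshold(b_ols: float, lam: float) -> float:
    """Lasso coefficient for one coordinate under an orthonormal design.

    Assumes the cost (1/2)||y - X b||^2 + lam * ||b||_1 with X^T X = I,
    so the threshold is lam itself (other scalings change it).
    """
    shrunk = abs(b_ols) - lam
    if shrunk <= 0.0:
        # |b_ols| <= lam: the coefficient is set exactly to zero.
        return 0.0
    # Otherwise: sign(b_ols) * (|b_ols| - lam).
    return math.copysign(shrunk, b_ols)


def coordinate_cost(b: float, b_ols: float, lam: float) -> float:
    # Element-wise cost after dropping terms that do not depend on b.
    return 0.5 * b * b - b * b_ols + lam * abs(b)


def grid_minimizer(b_ols: float, lam: float,
                   lo: float = -5.0, hi: float = 5.0, n: int = 100001) -> float:
    # Brute-force check: evaluate the cost on a fine grid, return the best point.
    step = (hi - lo) / (n - 1)
    grid = (lo + i * step for i in range(n))
    return min(grid, key=lambda b: coordinate_cost(b, b_ols, lam))


if __name__ == "__main__":
    lam = 1.0
    for b_ols in (-2.5, -1.0, -0.3, 0.0, 0.7, 1.0, 3.2):
        closed = soft_threshold(b_ols, lam)
        brute = grid_minimizer(b_ols, lam)
        print(f"b_ols={b_ols:5.2f}  closed-form={closed:6.3f}  grid={brute:6.3f}")
```

Because the scalar cost is strictly convex, the grid minimum should agree with the closed form up to the grid spacing. OLS estimates inside $[-1, 1]$ come out as zero, and those outside are pulled toward zero by exactly `lam`.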