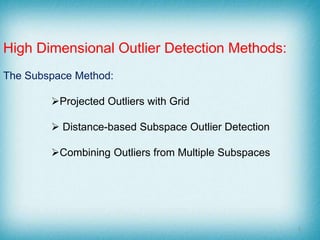

This document discusses outlier detection in high-dimensional data through integrated feature selection algorithms. It defines outliers and lists some applications of outlier detection. It then discusses the challenges of detecting outliers in high-dimensional space caused by the "curse of dimensionality": as the number of dimensions grows, pairwise distances become increasingly similar, so distance-based outlier scores lose their ability to separate outliers from normal points. To address this issue, the document proposes integrating feature selection algorithms with outlier detection methods. It describes the key steps of feature selection: subset generation, subset evaluation, stopping criteria, and result validation. Finally, it argues that filter models are preferable to wrapper models for feature selection in high-dimensional data. Filters evaluate features from intrinsic properties of the data instead of repeatedly running the mining algorithm, which keeps their computational cost lower.
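
The feature selection steps and the filter-versus-wrapper trade-off summarized above can be illustrated with a small sketch. It is not the seminar's method. The kurtosis-based relevance score, the threshold of 1.0, the k-th-nearest-neighbour outlier score and every function name (`kurtosis`, `select_features`, `knn_outlier_scores`) are hypothetical choices made only for illustration. The sketch works as follows:

- Each feature is scored from the data alone (filter evaluation).
- The subset is grown in rank order (subset generation).
- Growth stops once a feature's score falls below the threshold (stopping criterion).
- Outliers are then scored only in the selected subspace.

```python
# Illustrative sketch only: the kurtosis criterion, threshold and all
# function names are hypothetical, not taken from the seminar.
import math
from typing import List, Optional, Sequence

Dataset = List[List[float]]


def kurtosis(values: Sequence[float]) -> float:
    """Population excess kurtosis of one feature.

    Assumed filter criterion: a heavy-tailed feature (high kurtosis)
    is one where a few points sit far from the rest.
    """
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    if m2 == 0:
        return 0.0  # a constant feature cannot reveal outliers
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m4 / m2 ** 2 - 3.0


def select_features(data: Dataset, threshold: float = 1.0,
                    max_features: Optional[int] = None) -> List[int]:
    """Filter-style selection: never calls the outlier detector."""
    n_features = len(data[0])
    columns = [[row[j] for row in data] for j in range(n_features)]
    # Evaluation: each feature is scored from the data alone.
    scores = [kurtosis(col) for col in columns]
    ranked = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    selected: List[int] = []
    # Subset generation: grow the subset one feature at a time, in rank order.
    for j in ranked:
        # Stopping criterion: score below threshold or size limit reached.
        if scores[j] < threshold:
            break
        if max_features is not None and len(selected) >= max_features:
            break
        selected.append(j)
    return selected


def knn_outlier_scores(data: Dataset, features: List[int],
                       k: int = 3) -> List[float]:
    """Distance from each point to its k-th nearest neighbour, computed
    only on the selected features (requires len(data) > k)."""
    projected = [[row[j] for j in features] for row in data]
    scores = []
    for i, p in enumerate(projected):
        dists = sorted(math.dist(p, q)
                       for m, q in enumerate(projected) if m != i)
        scores.append(dists[k - 1])
    return scores


if __name__ == "__main__":
    # Hypothetical toy data: 20 rows; feature 0 holds one planted outlier
    # (row 0), features 1-9 are bounded, evenly spread "noise" dimensions.
    data = [[10.0 if i == 0 else (i % 5) * 0.1]
            + [((i * (j + 3)) % 20) / 20.0 for j in range(1, 10)]
            for i in range(20)]
    features = select_features(data)
    scores = knn_outlier_scores(data, features)
    top = max(range(len(data)), key=lambda i: scores[i])
    print("selected features:", features)  # selected features: [0]
    print("top outlier row:", top)         # top outlier row: 0
```

Because the filter score never consults the outlier detector, `knn_outlier_scores` runs only once, on the final subset. A wrapper model would instead run the detector for every candidate subset, which is where its higher computational cost comes from. Result validation is easy here only because the outlier in row 0 was planted on purpose. On real data it needs labelled examples or domain knowledge.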
