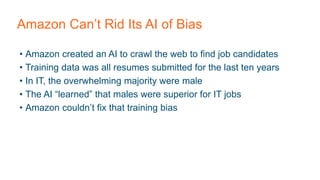

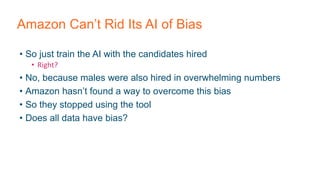

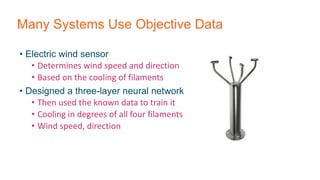

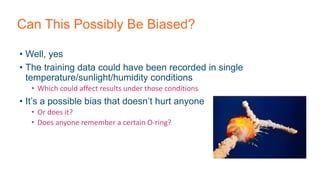

The document discusses how machine learning systems can produce biased results when there are problems in their training data, and gives examples of biases that have emerged in commercial AI systems. It then outlines approaches for testing machine learning systems for potential bias: understanding the training data, defining objective success criteria, and testing with diverse edge cases. Finally, it examines why biases that stem from limitations in the data or from human decisions are difficult to address.
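One way to make "objective success criteria" concrete is to measure performance separately for each group in a labeled test set instead of reporting a single overall score. The Python below is a minimal sketch of that idea. It assumes test results arrive as hypothetical `(group, y_true, y_pred)` tuples and uses an illustrative 0.05 accuracy-gap threshold. Neither the record format, the helper names, nor the threshold comes from the document.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, y_true, y_pred) tuples.

    The tuple format is an illustrative assumption.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        if y_true == y_pred:
            correct[group] += 1
    return {g: correct[g] / total[g] for g in total}


def flag_disparities(per_group, max_gap=0.05):
    """Return groups whose accuracy trails the best group by more than max_gap.

    max_gap is a hypothetical success criterion chosen for illustration.
    """
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > max_gap)


# Toy test set: group "A" is classified correctly 3 of 4 times, group "B" 2 of 4.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 0, 0),
]
per_group = accuracy_by_group(records)
print(per_group)                    # {'A': 0.75, 'B': 0.5}
print(flag_disparities(per_group))  # ['B']
```

Fixing the threshold before running the evaluation is what makes the criterion objective: a group either meets it or it does not. Accuracy is only one possible metric. Depending on the application, per-group false-positive or false-negative rates may matter more, and small groups and edge cases need enough test examples for their scores to be meaningful.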