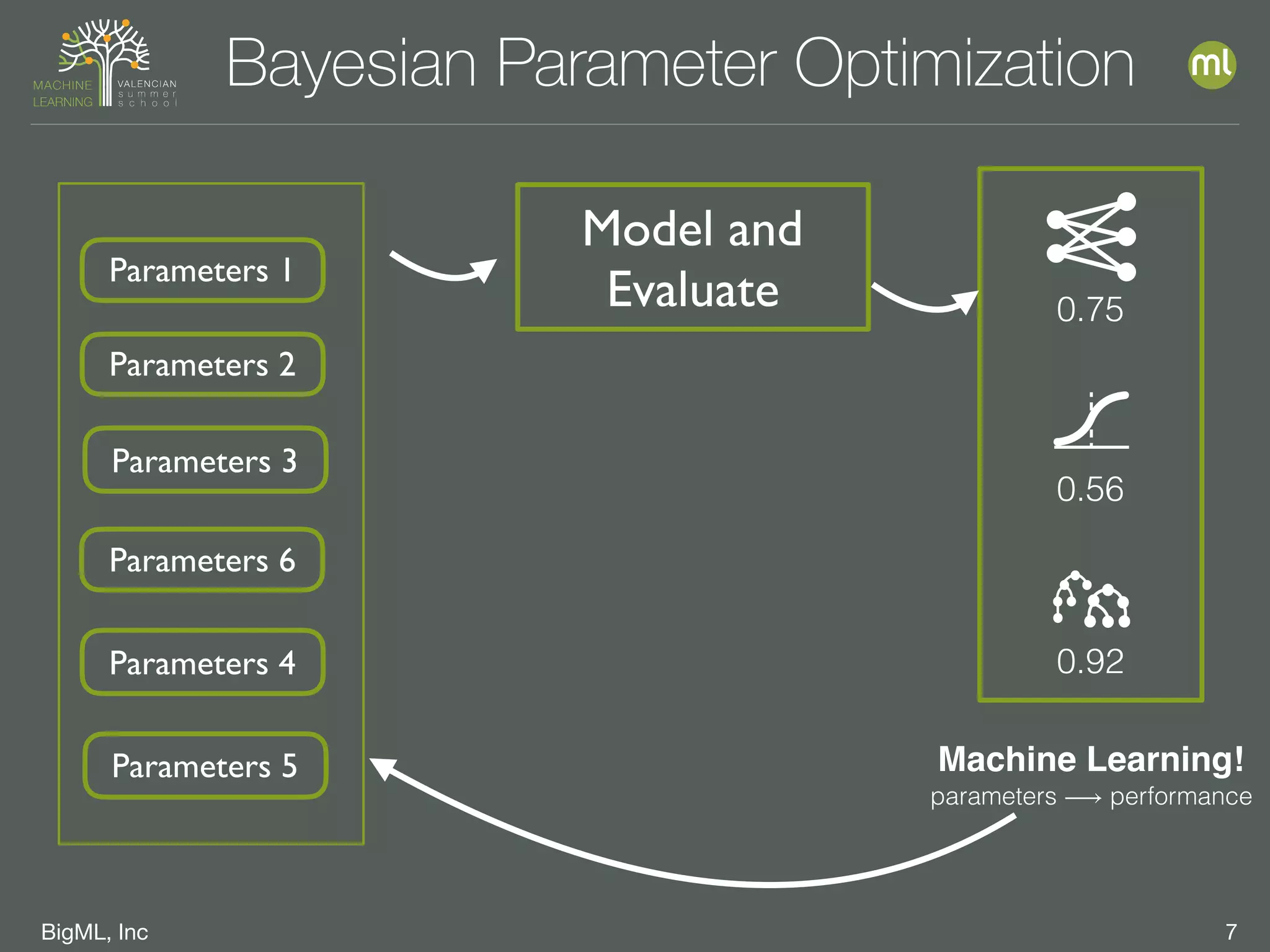

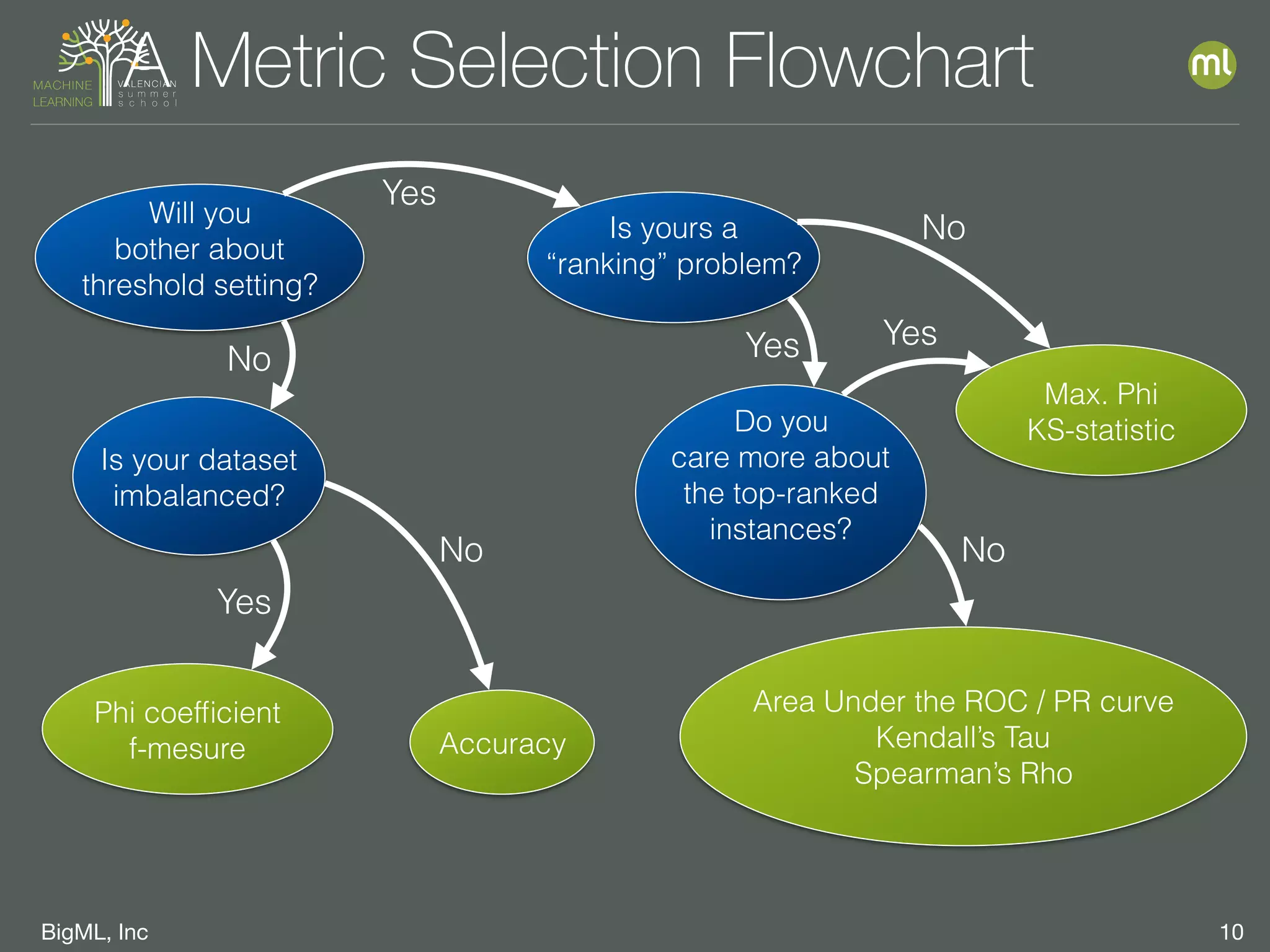

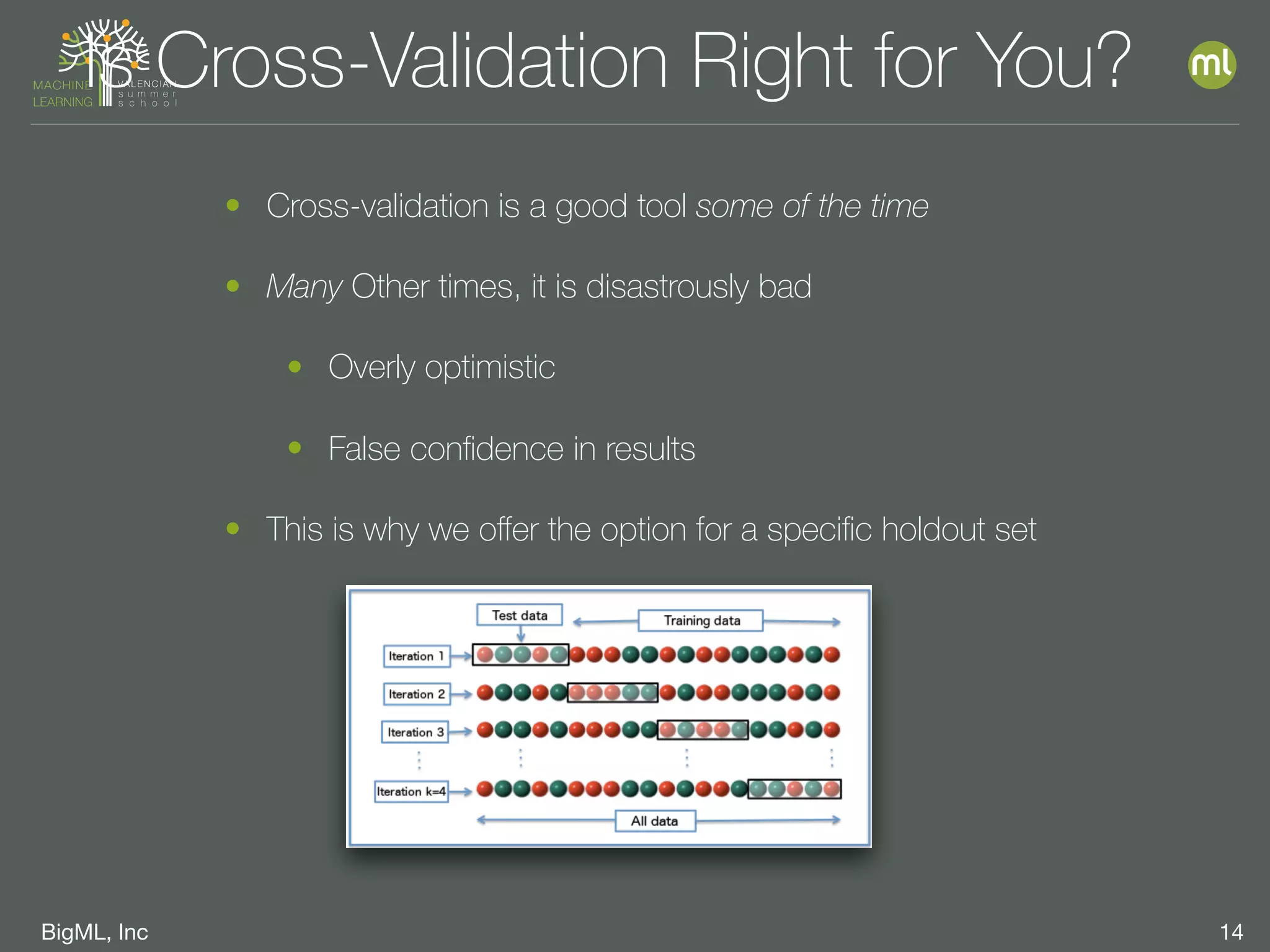

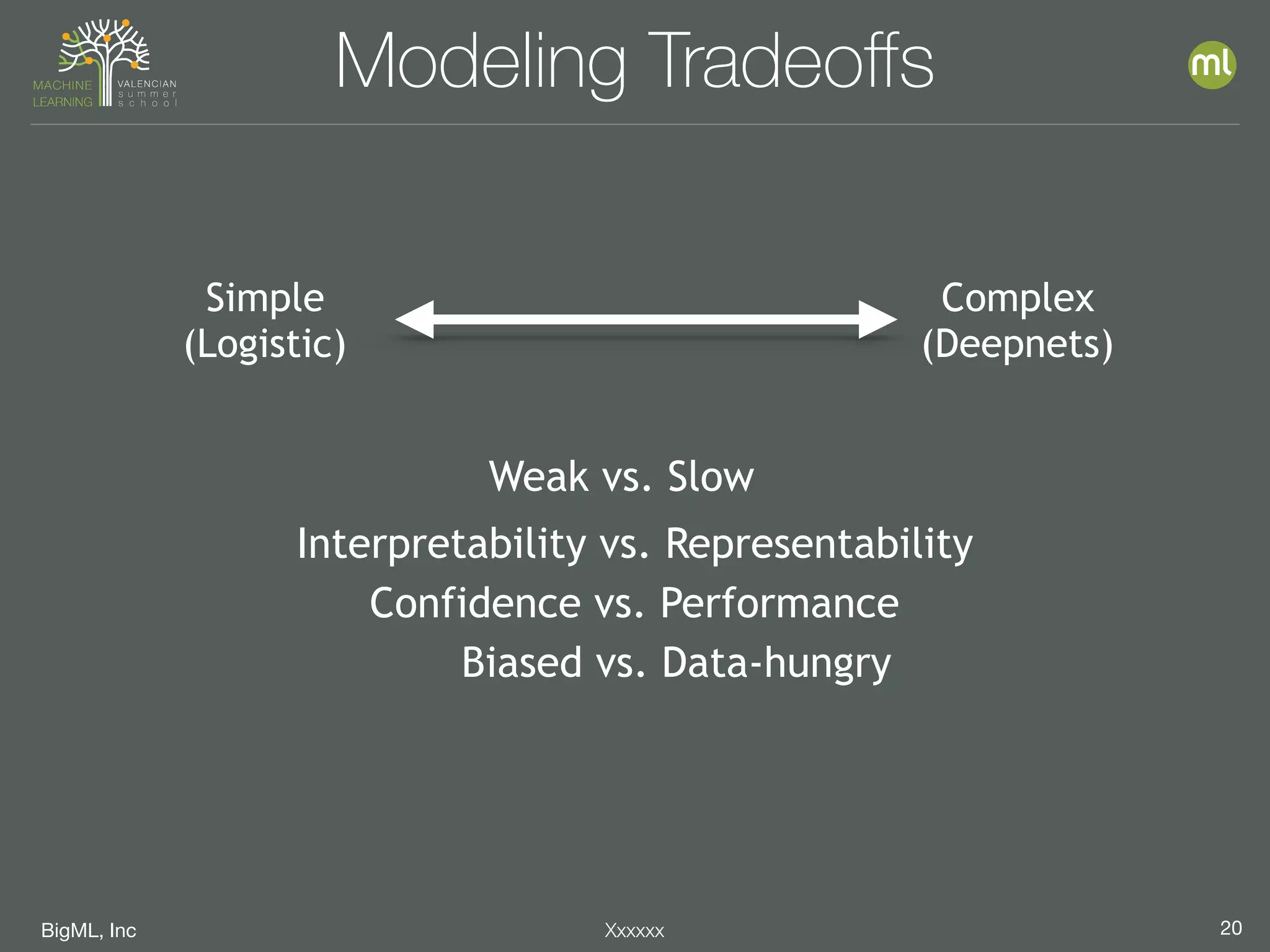

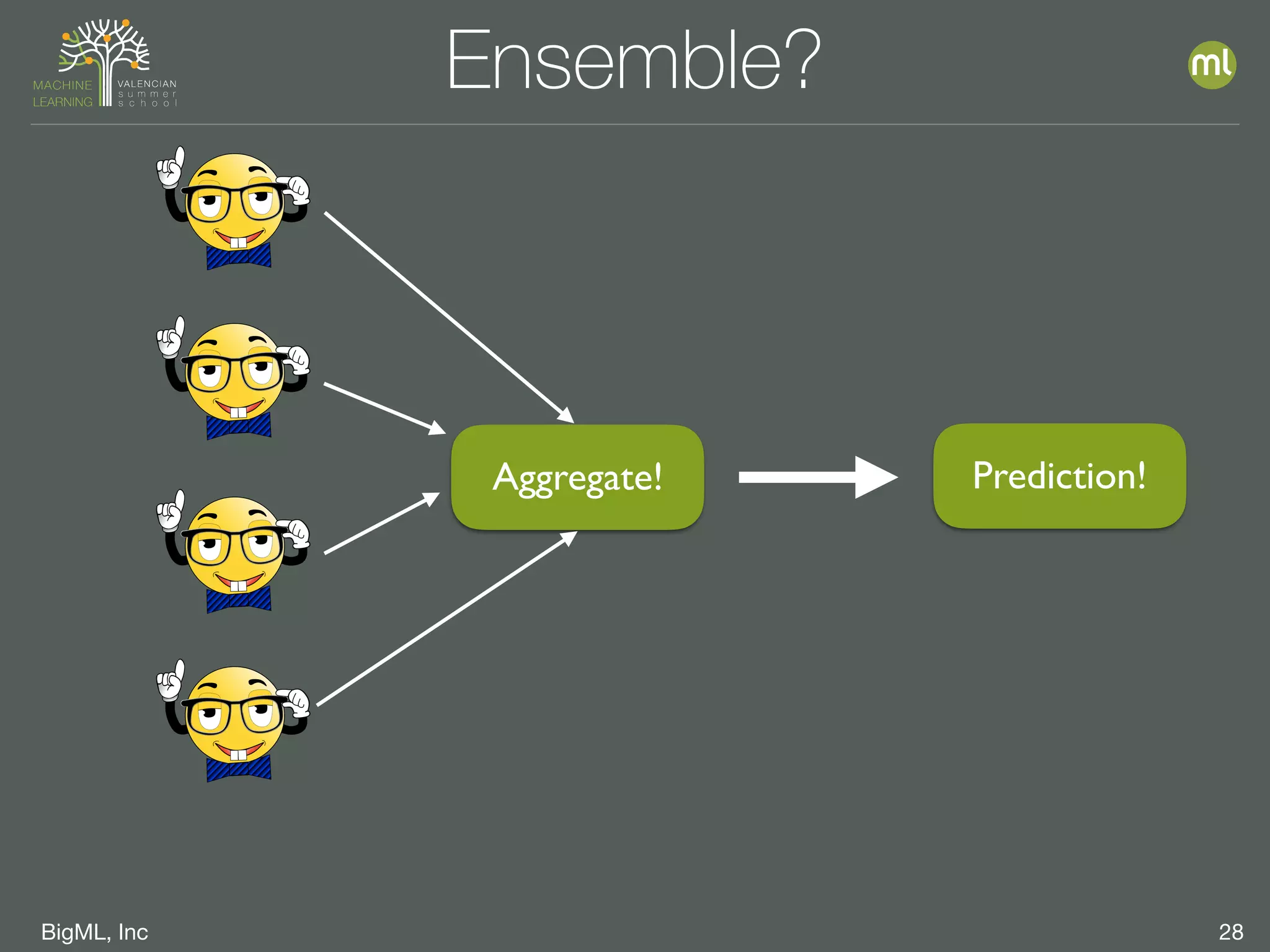

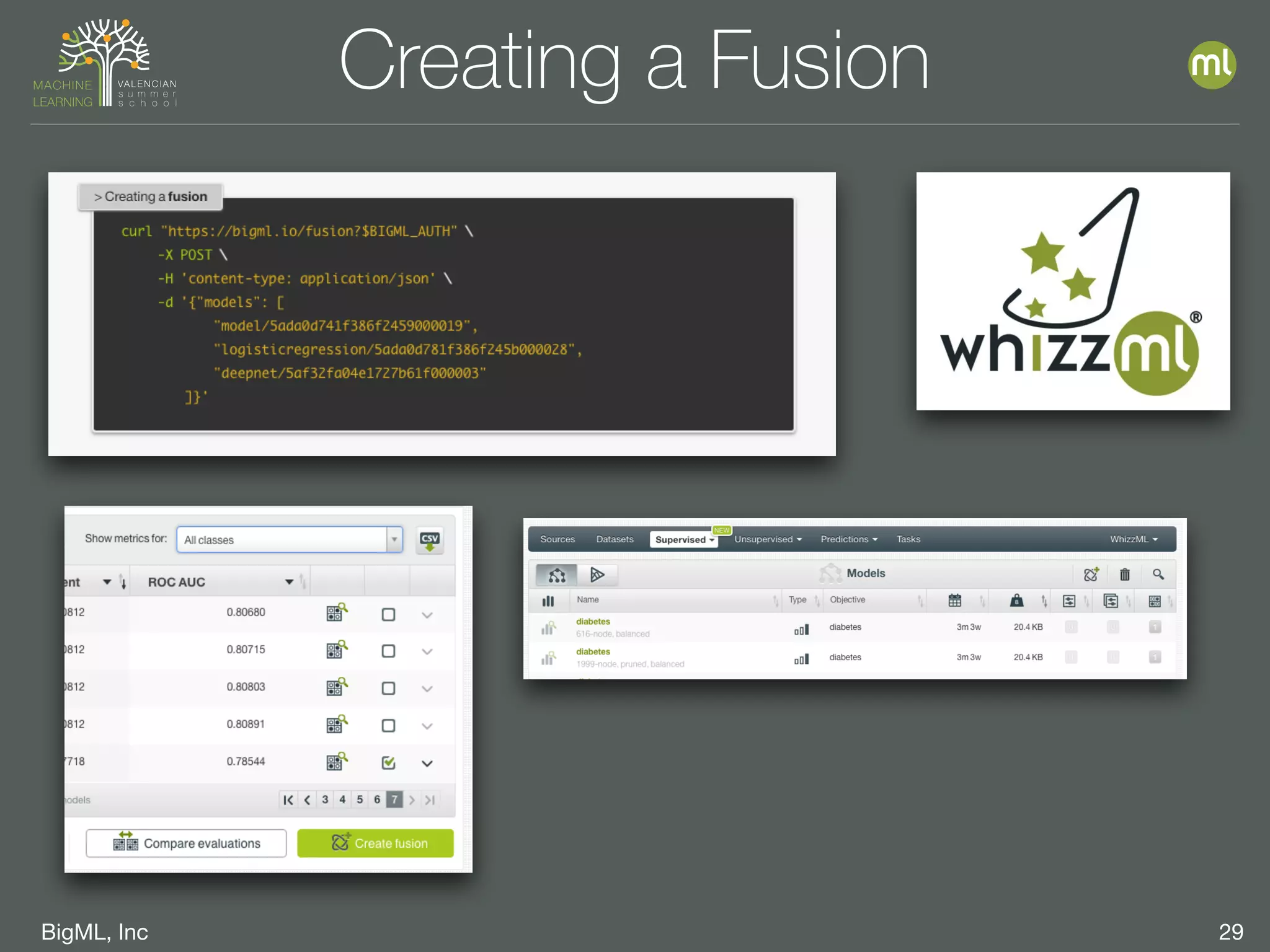

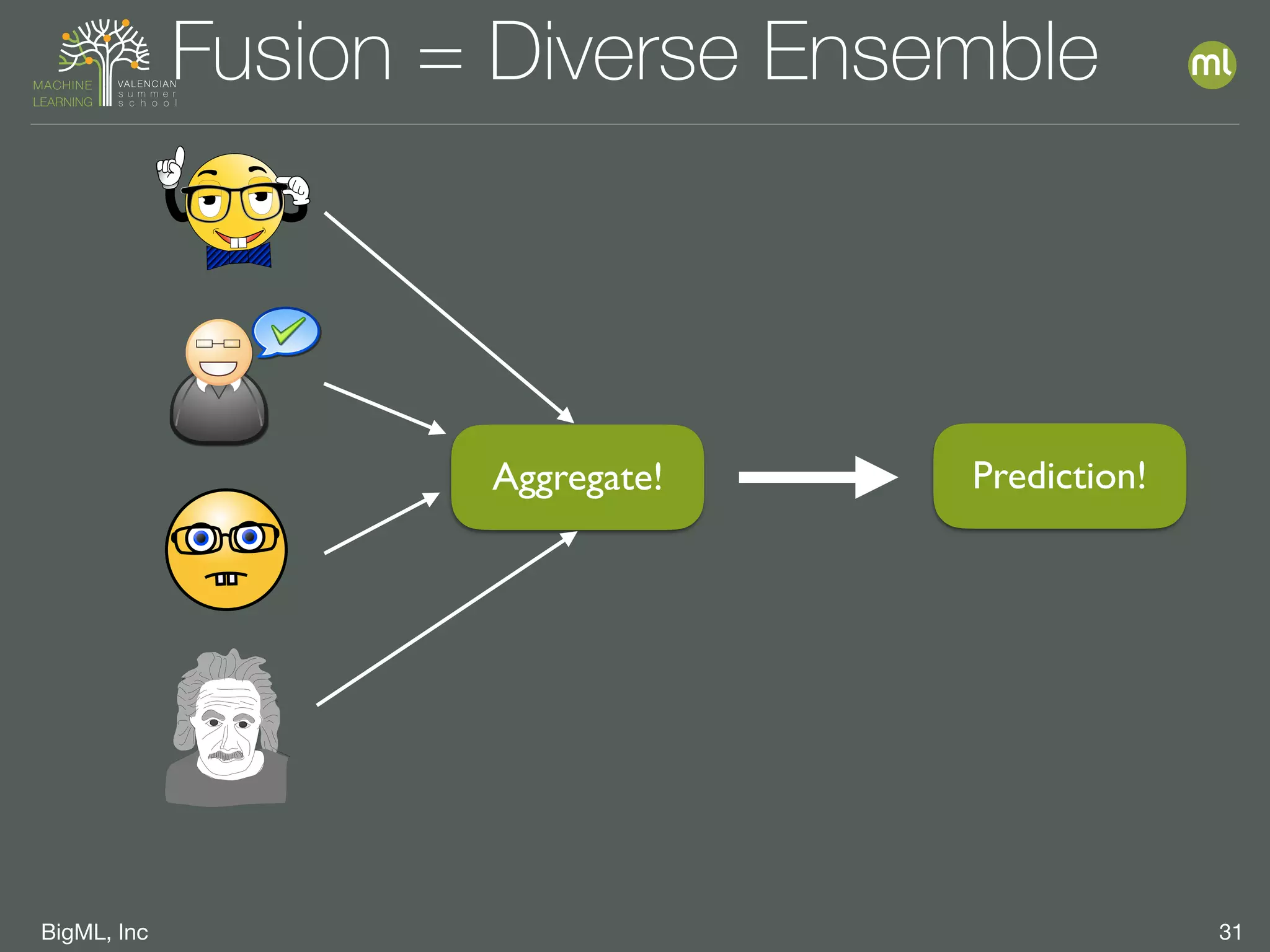

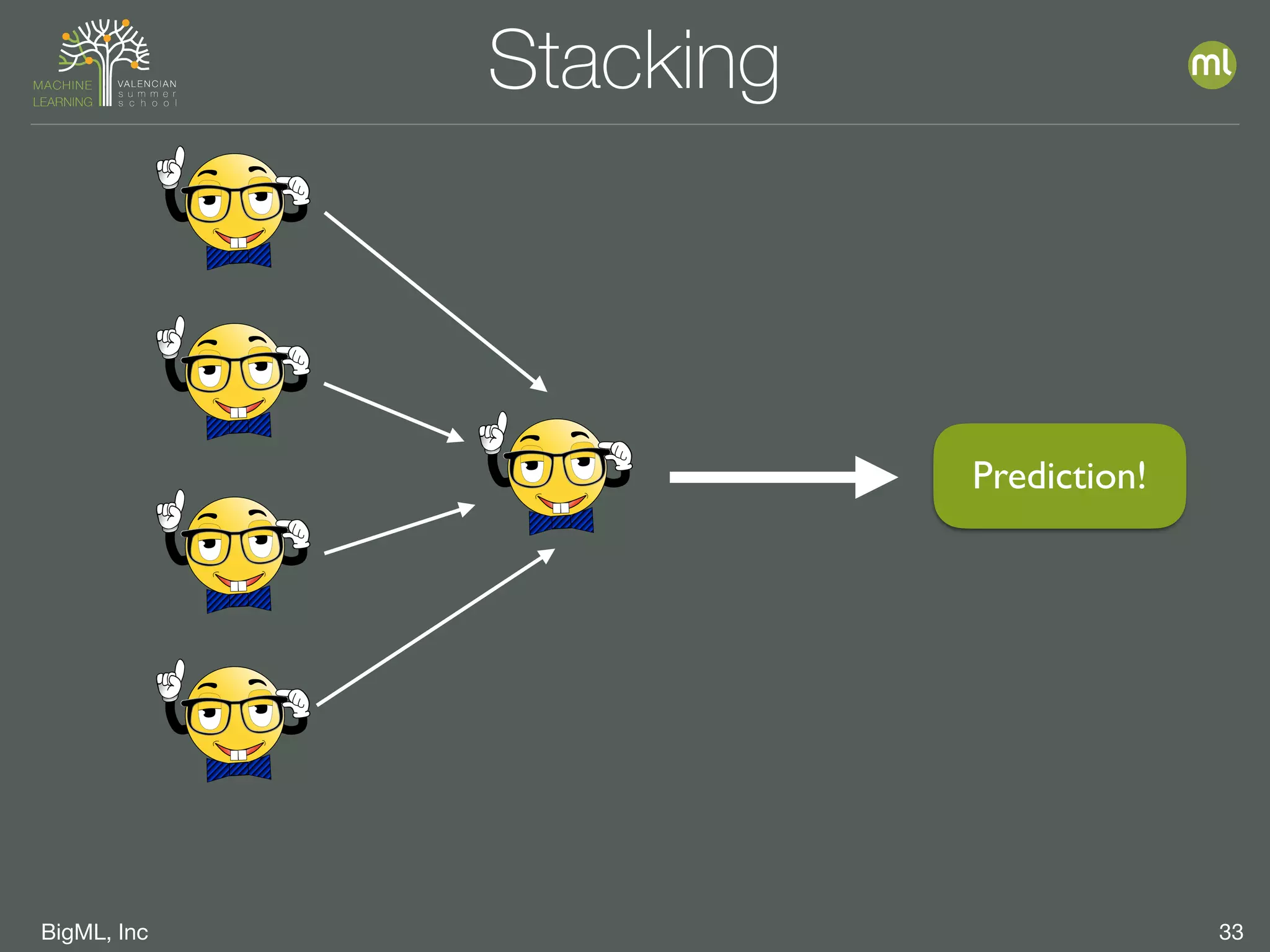

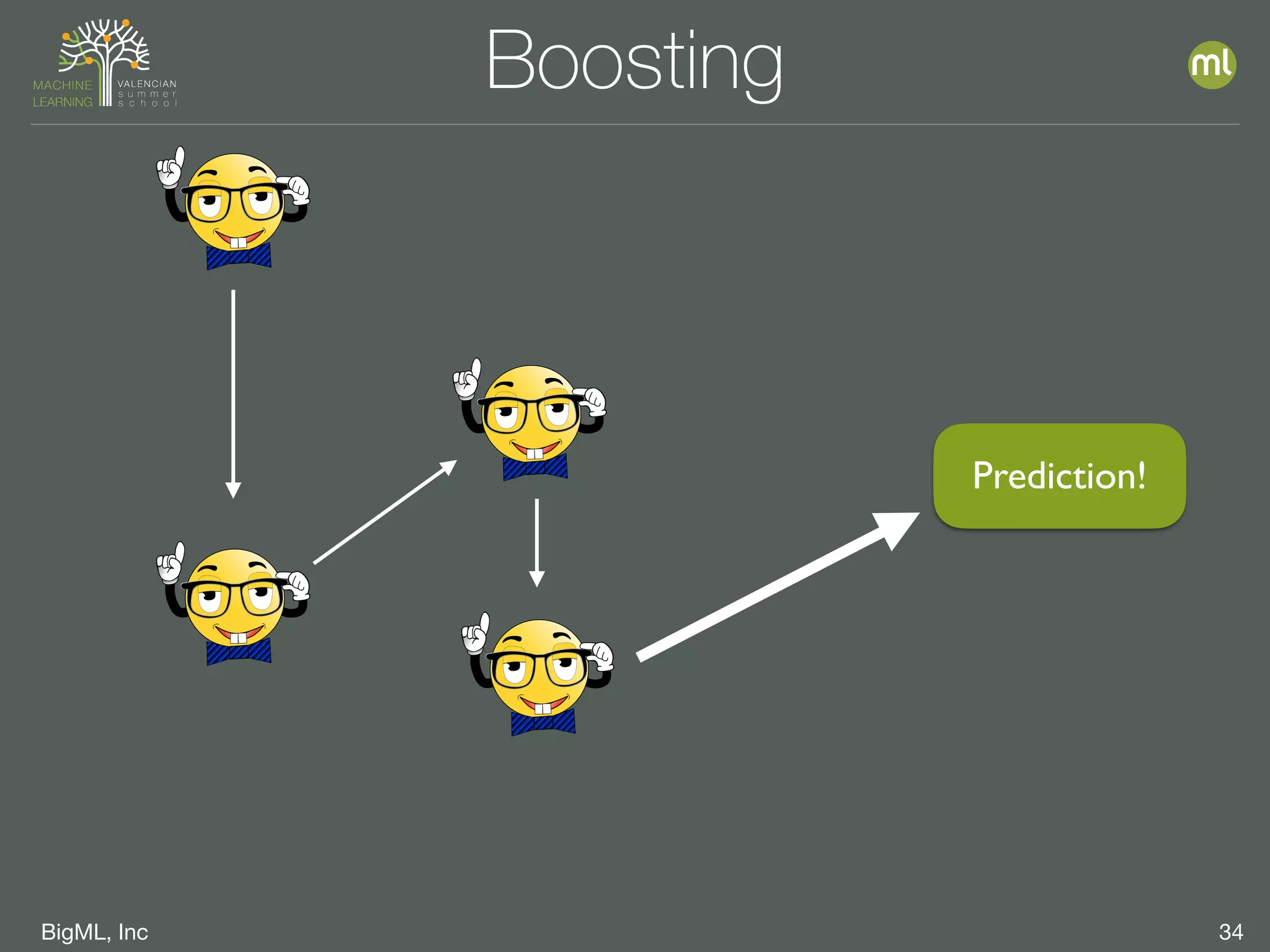

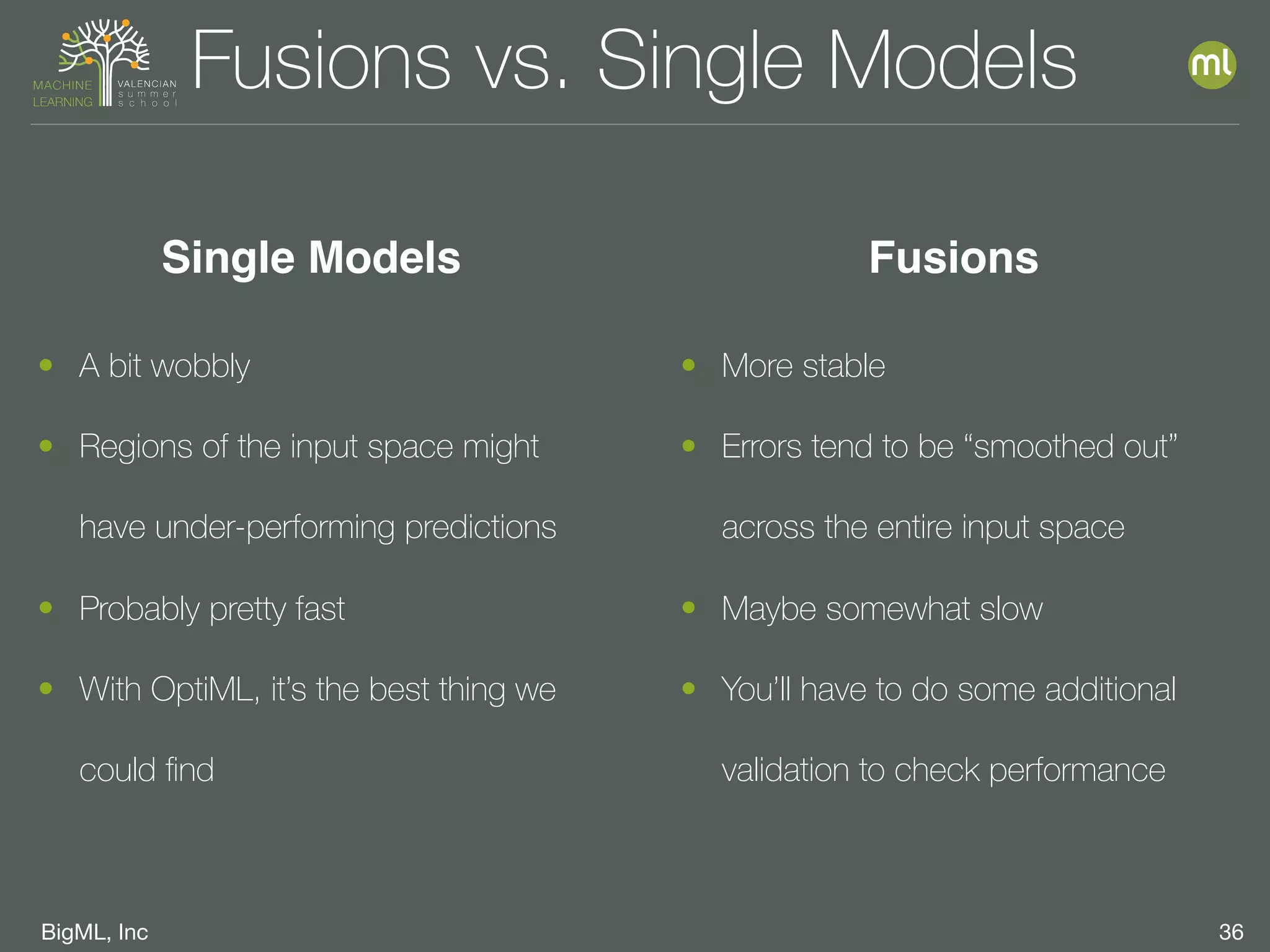

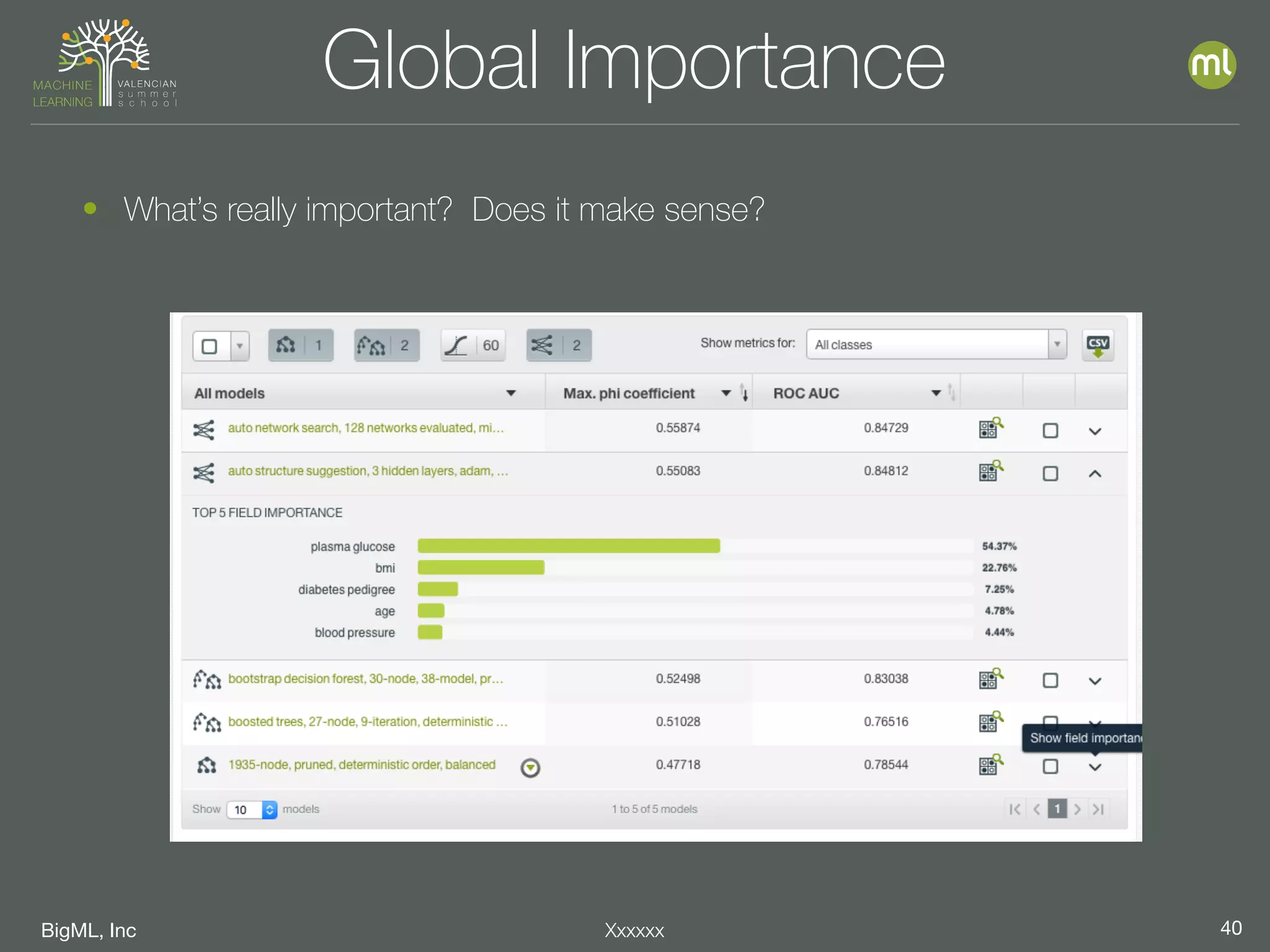

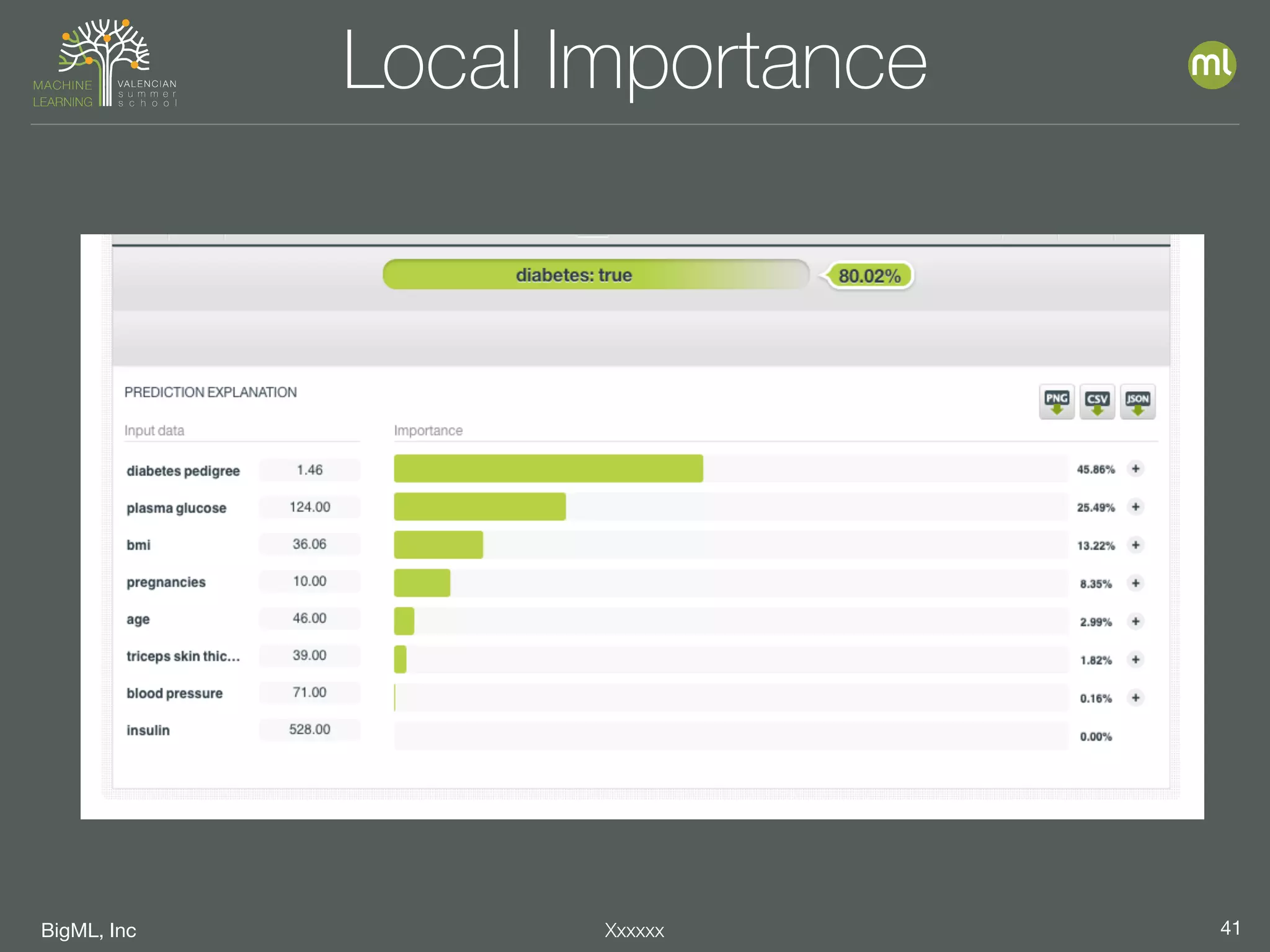

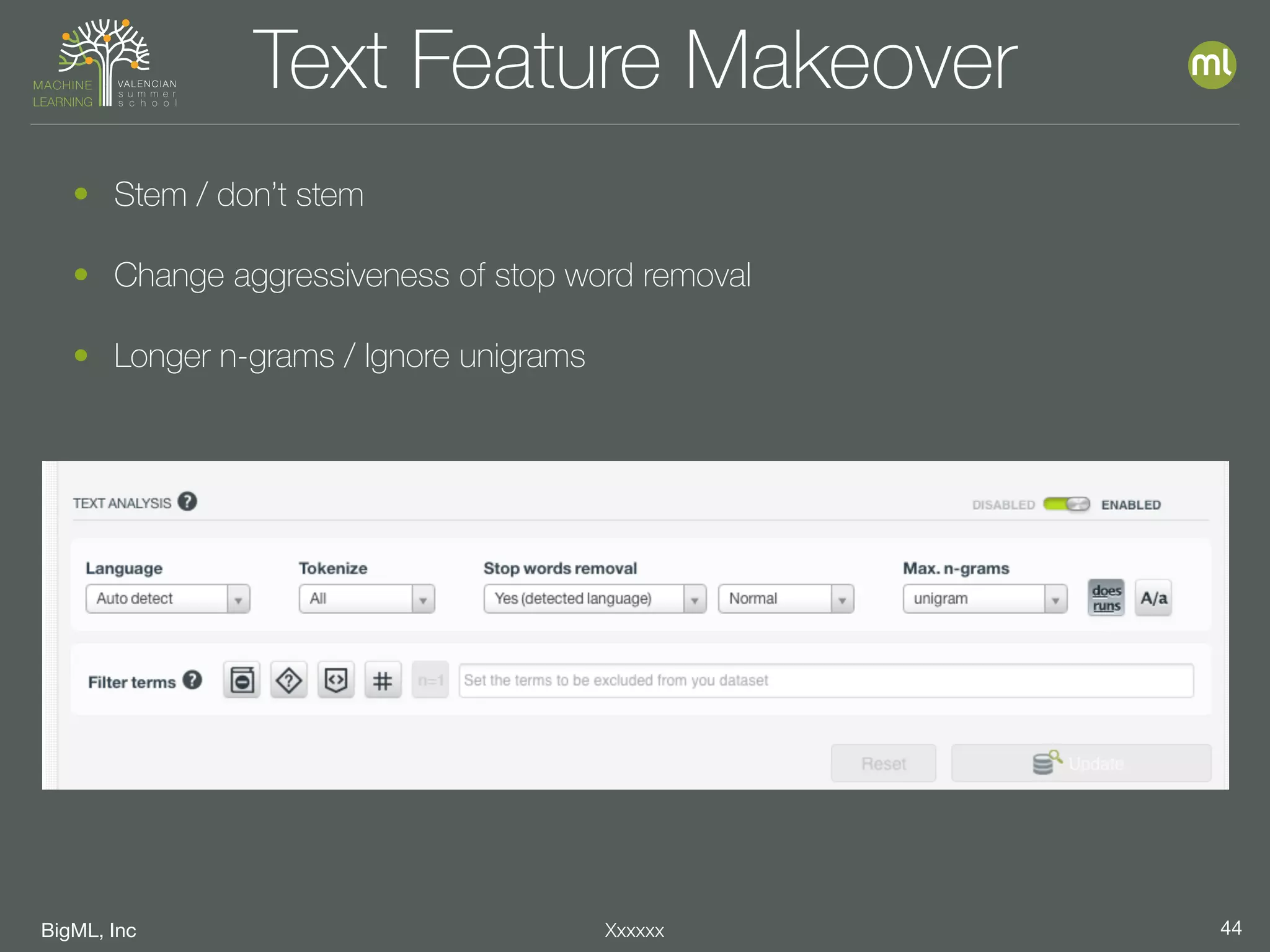

The document discusses parameter optimization and machine learning techniques for tuning models. It covers Bayesian parameter optimization, which uses machine learning to predict the performance of parameter configurations before any model is trained on them. It also discusses the dangers of naive cross-validation and explains how to select the best model by weighing factors beyond raw performance, such as retraining needs and prediction speed. Finally, it advocates building diverse ensembles through techniques such as fusions to improve stability and importance profiles.
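
To make the idea of predicting a configuration's performance before training on it more concrete, here is a minimal sketch of Bayesian parameter optimization in plain Python. It is an illustration under stated assumptions, not the method used in the slides: the surrogate is a small Gaussian process with a squared-exponential kernel, the acquisition rule is an upper confidence bound, and `validation_score` is a hypothetical stand-in for "train a model with this setting and measure it on held-out data". The single tuned parameter (the base-10 log of a learning rate), the search range, and every function name are likewise assumptions made for the example.

```python
import math
import random


def rbf(a, b, length=0.5):
    """Squared-exponential kernel: nearby configurations get similar scores."""
    return math.exp(-((a - b) ** 2) / (2 * length ** 2))


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def gp_predict(xs, zs, x_new, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k = [rbf(a, x_new) for a in xs]
    alpha = solve(K, zs)
    v = solve(K, k)
    mean = sum(ki * ai for ki, ai in zip(k, alpha))
    var = max(1.0 - sum(ki * vi for ki, vi in zip(k, v)), 0.0)
    return mean, math.sqrt(var)


def validation_score(log_lr):
    """Hypothetical objective; in practice this would train and evaluate a model."""
    return -(log_lr + 2.0) ** 2


def bayes_opt(n_init=3, n_iter=10, kappa=2.0, seed=0):
    """Return every evaluated configuration and its score, in evaluation order."""
    rng = random.Random(seed)
    candidates = [-5.0 + 0.1 * i for i in range(51)]  # log10(lr) in [-5, 0]
    xs = rng.sample(candidates, n_init)               # random warm-up points
    ys = [validation_score(x) for x in xs]
    for _ in range(n_iter):
        # Standardize the scores so the zero-mean, unit-variance prior is sensible.
        mu = sum(ys) / len(ys)
        sd = math.sqrt(sum((y - mu) ** 2 for y in ys) / len(ys)) or 1.0
        zs = [(y - mu) / sd for y in ys]

        def ucb(x):
            # Predicted score plus an exploration bonus for uncertain regions.
            mean, std = gp_predict(xs, zs, x)
            return mean + kappa * std

        remaining = [c for c in candidates if c not in xs]
        x_next = max(remaining, key=ucb)  # the surrogate picks the next trial
        xs.append(x_next)
        ys.append(validation_score(x_next))
    return xs, ys


if __name__ == "__main__":
    xs, ys = bayes_opt()
    best_score, best_x = max(zip(ys, xs))
    print("best log10(lr):", best_x, "score:", best_score)
```

The expensive call (`validation_score`) runs only once per iteration. The cheap surrogate is queried for every remaining candidate, which is what lets the search skip configurations it already predicts to be poor.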