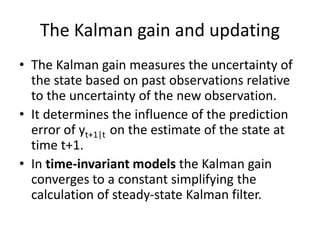

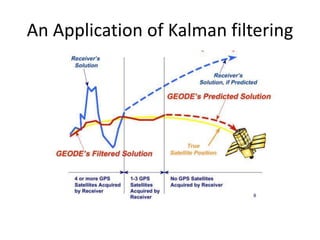

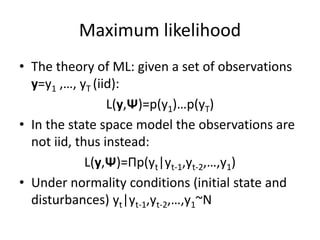

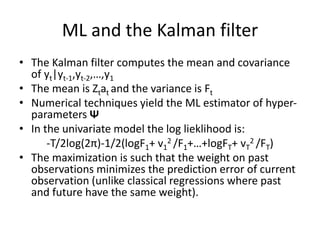

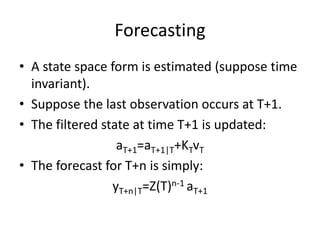

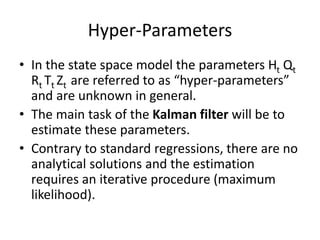

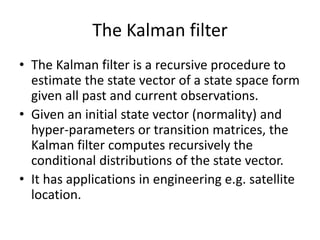

This document provides an overview of forecasting and smoothing using the Kalman filter. It discusses (1) how state space models represent time series using unobserved state variables, (2) how the Kalman filter recursively estimates the state variables using past and current observations, and (3) how maximum likelihood estimation and forecasting/smoothing techniques utilize the Kalman filter outputs. The document uses examples from macroeconomic forecasting to illustrate these concepts.
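As a rough illustration of point (1), the sketch below simulates a local level model, one of the simplest state space models. The choice of model, the function name `simulate_local_level`, and the parameters `sigma_eps` and `sigma_eta` are assumptions made for illustration and do not come from the slides. The unobserved state `alpha` follows a random walk, and the observation `y` is that state plus noise.

```python
import numpy as np

def simulate_local_level(n, sigma_eps, sigma_eta, alpha0=0.0, seed=0):
    """Simulate a local level model (illustrative assumption, not from the slides).

    State equation:        alpha_t = alpha_{t-1} + eta_t,  eta_t ~ N(0, sigma_eta^2)
    Measurement equation:  y_t     = alpha_t + eps_t,      eps_t ~ N(0, sigma_eps^2)
    """
    rng = np.random.default_rng(seed)
    eta = rng.normal(0.0, sigma_eta, size=n)   # state disturbances
    eps = rng.normal(0.0, sigma_eps, size=n)   # measurement noise
    alpha = alpha0 + np.cumsum(eta)            # unobserved state path (random walk)
    y = alpha + eps                            # observed series
    return alpha, y

alpha, y = simulate_local_level(n=100, sigma_eps=1.0, sigma_eta=0.5)
```

In practice only `y` is available; recovering `alpha` from it is the job of the Kalman filter.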

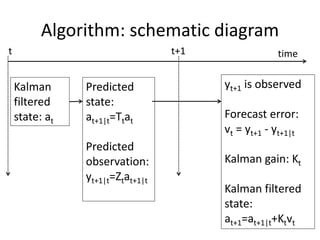

![Period t: initial values

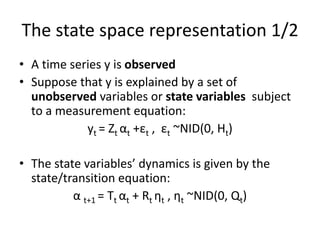

• Let at denote the optimal estimator of αt the

state vector at time t given all past

information including yt i.e. the Kalman

filtered state.

• Let Pt denote var-cov of the estimation error:

Pt=E[(αt- at) (αt- at)’]](https://image.slidesharecdn.com/l11-forecastingandsmoothingusingthekalmanfilter-231223204358-0d4208ac/85/L11-Forecasting-and-Smoothing-using-the-Kalman-Filter-pptx-16-320.jpg)
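The filtered state and its error covariance can be computed with a single measurement update, sketched below. The sketch assumes a linear Gaussian measurement equation $y_t = Z\alpha_t + \varepsilon_t$ with $\mathrm{Var}(\varepsilon_t) = H$. That notation, the function name `kalman_update`, and the inputs `a_pred` and `P_pred` (the state prediction and its covariance before $y_t$ is seen) are assumptions for illustration and are not taken from the slide.

```python
import numpy as np

def kalman_update(a_pred, P_pred, y, Z, H):
    """One Kalman measurement update (sketch under the assumptions stated above).

    a_pred, P_pred: prediction of the state and its covariance before seeing y_t.
    Returns a_t (filtered state) and P_t (covariance of alpha_t - a_t).
    """
    v = y - Z @ a_pred                     # one-step-ahead prediction error
    F = Z @ P_pred @ Z.T + H               # variance of the prediction error
    K = P_pred @ Z.T @ np.linalg.inv(F)    # Kalman gain
    a_filt = a_pred + K @ v                # filtered state a_t
    P_filt = P_pred - K @ Z @ P_pred       # filtered covariance P_t
    return a_filt, P_filt

# Scalar example: prior mean 0 with variance 1, observation 2 with noise variance 1.
a_t, P_t = kalman_update(
    a_pred=np.array([0.0]),
    P_pred=np.array([[1.0]]),
    y=np.array([2.0]),
    Z=np.array([[1.0]]),
    H=np.array([[1.0]]),
)
```

With equal prior and noise variances the gain is 0.5, so the filtered state lands halfway between the prediction and the observation and the variance is halved.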