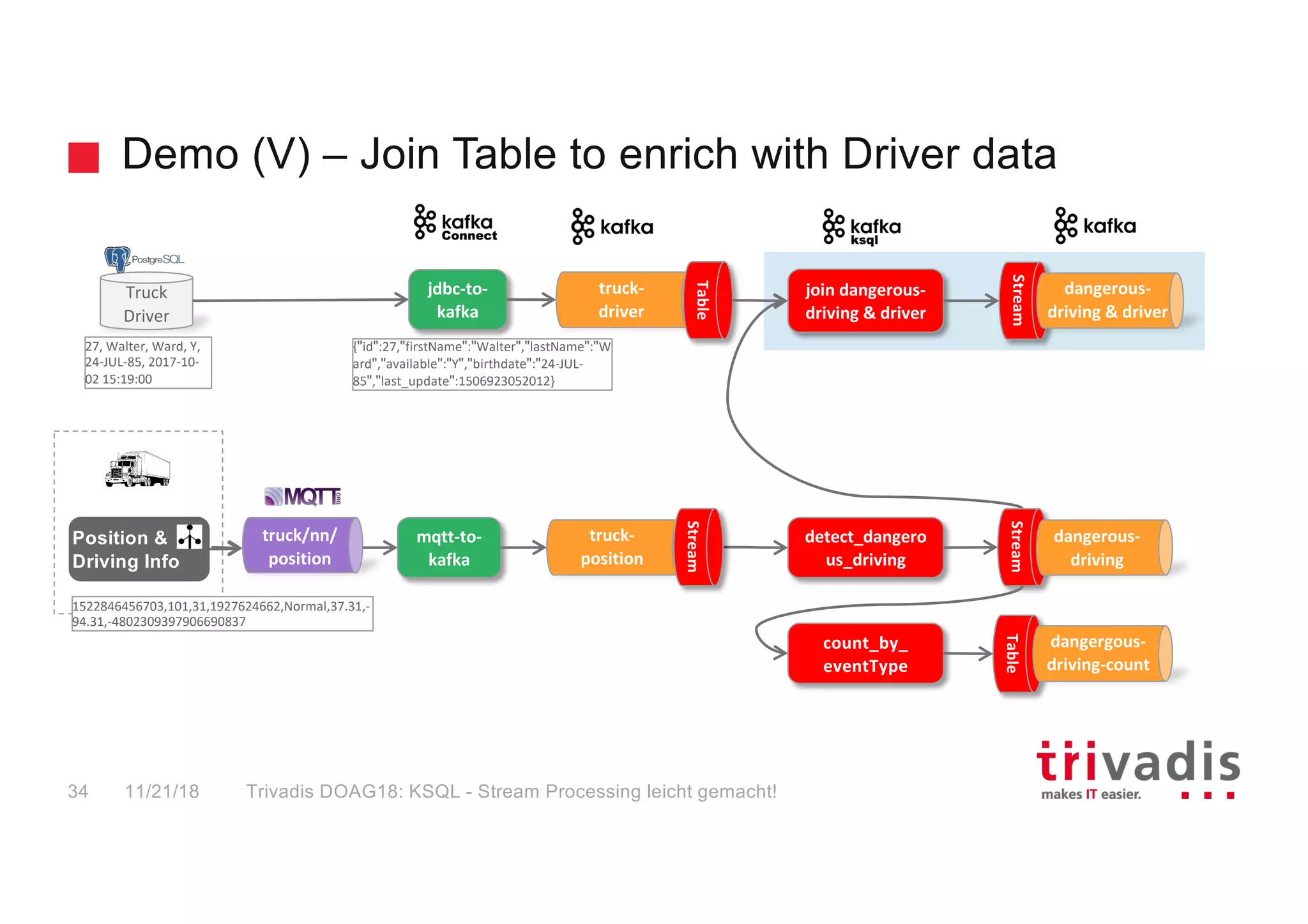

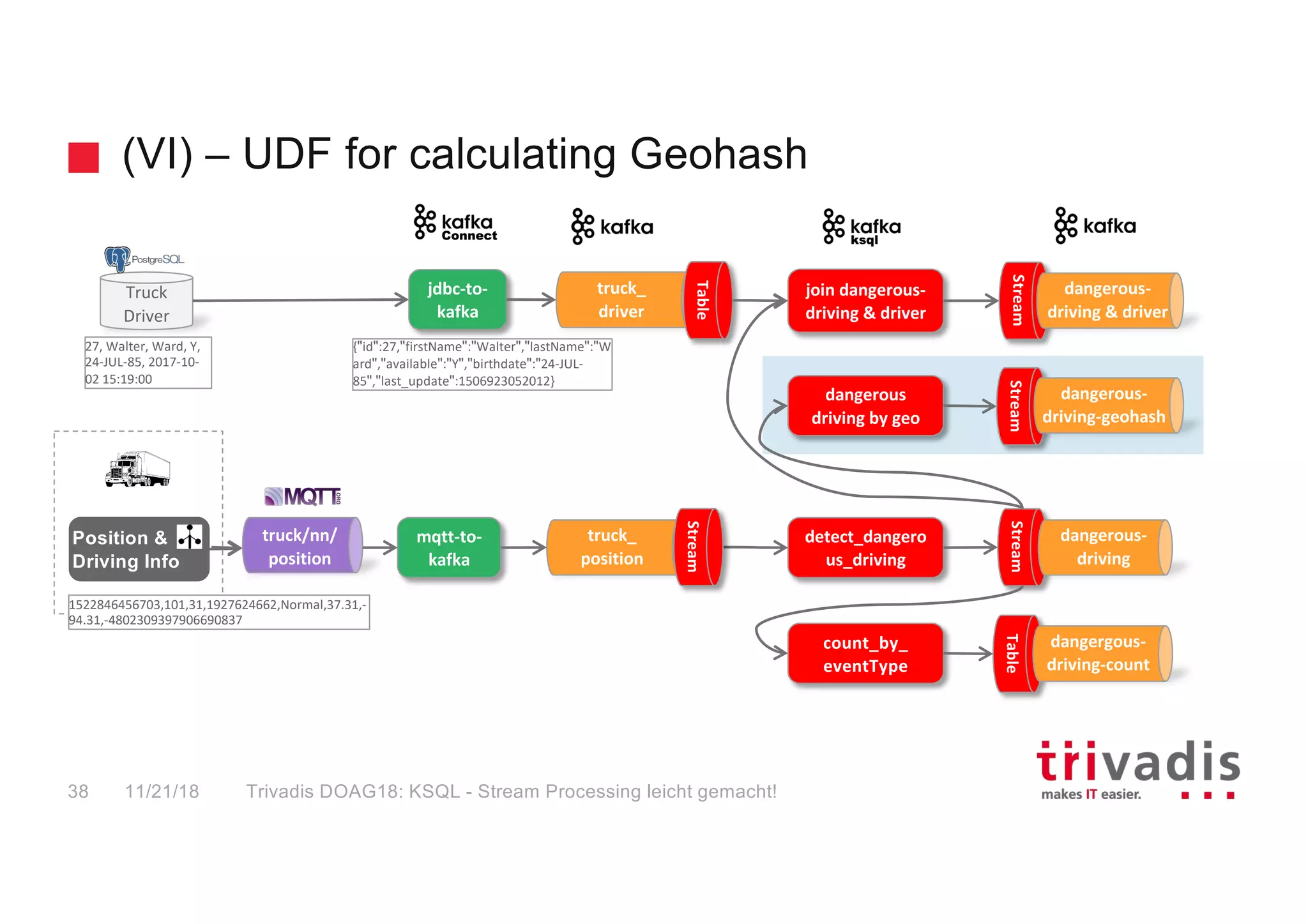

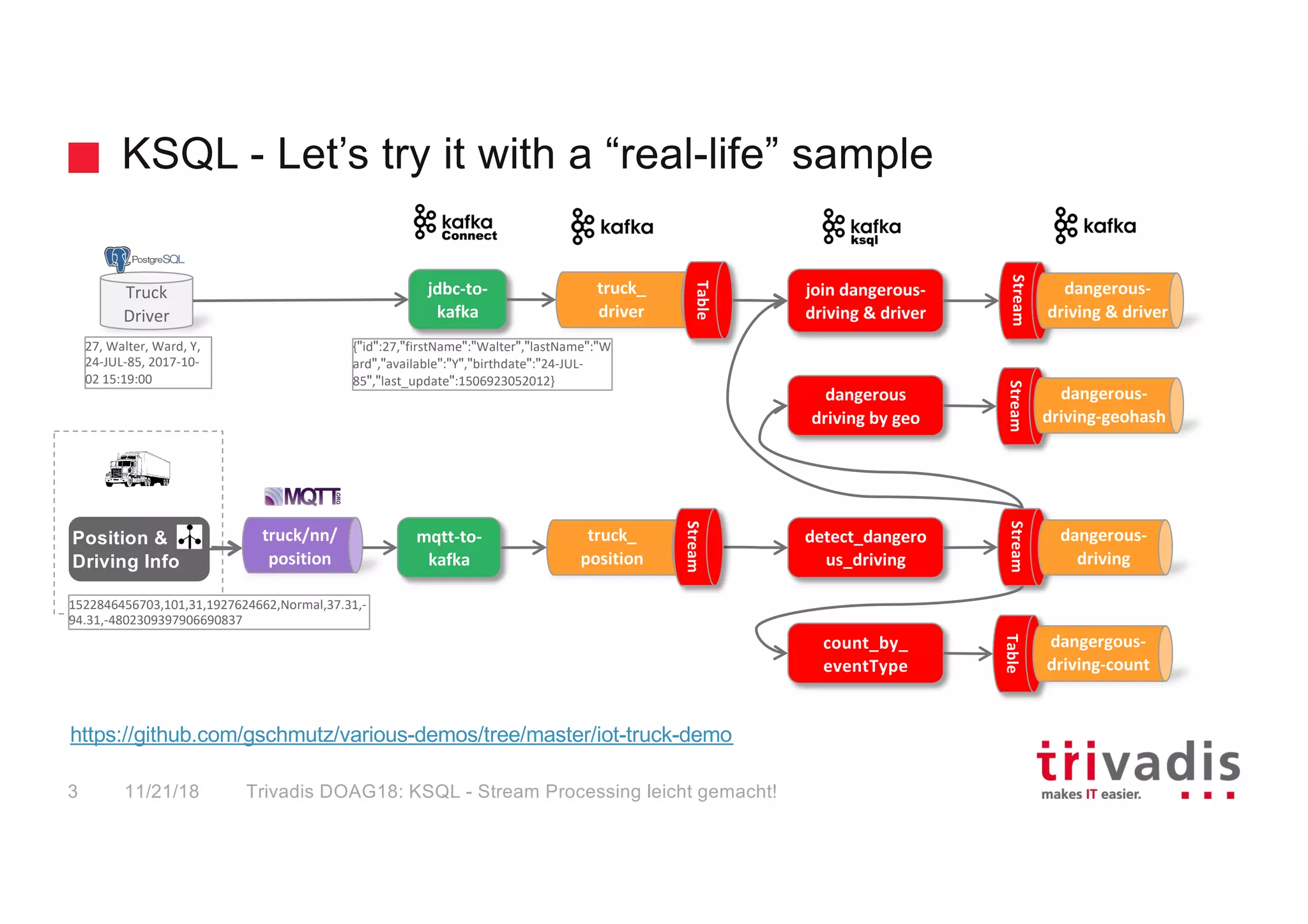

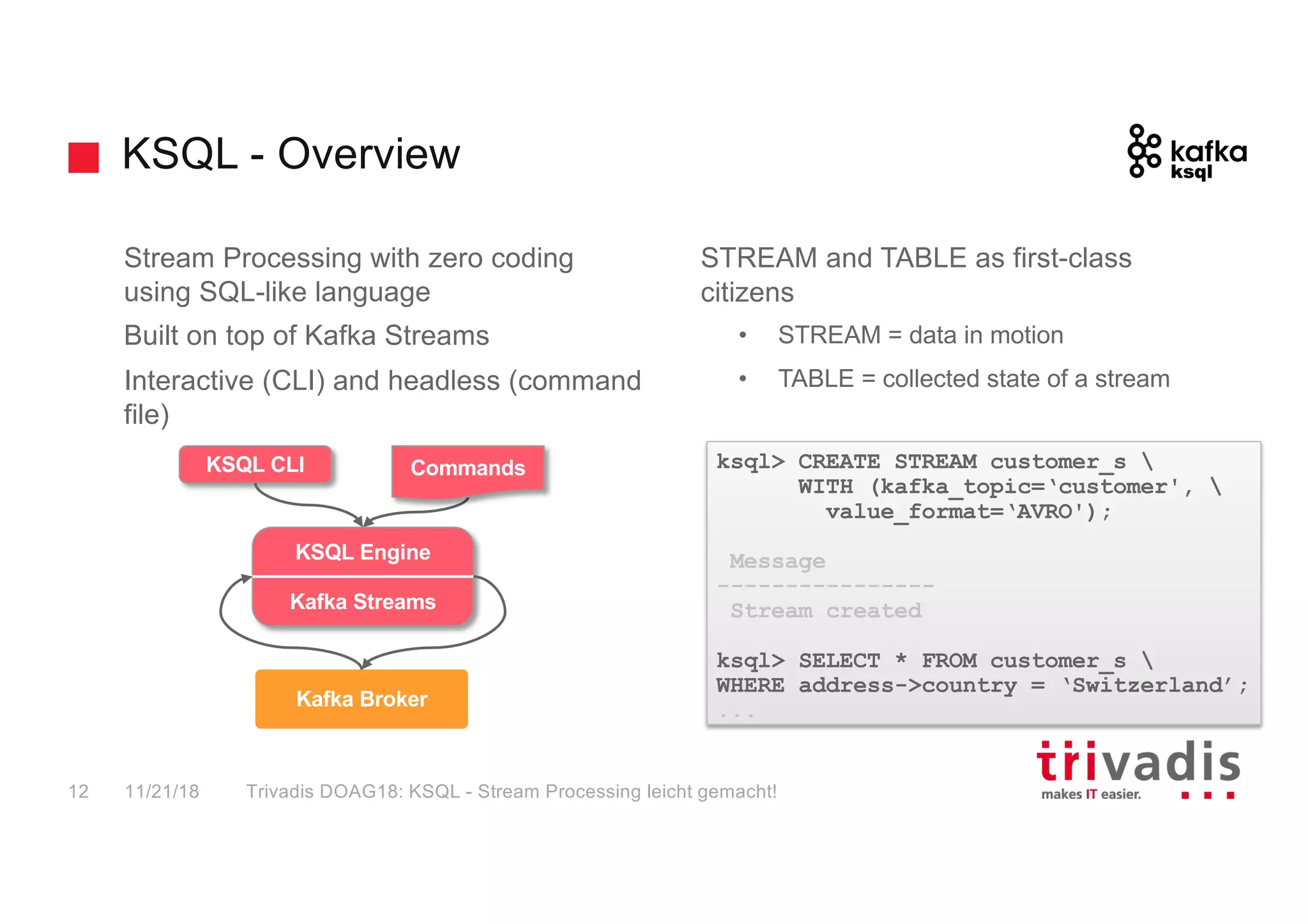

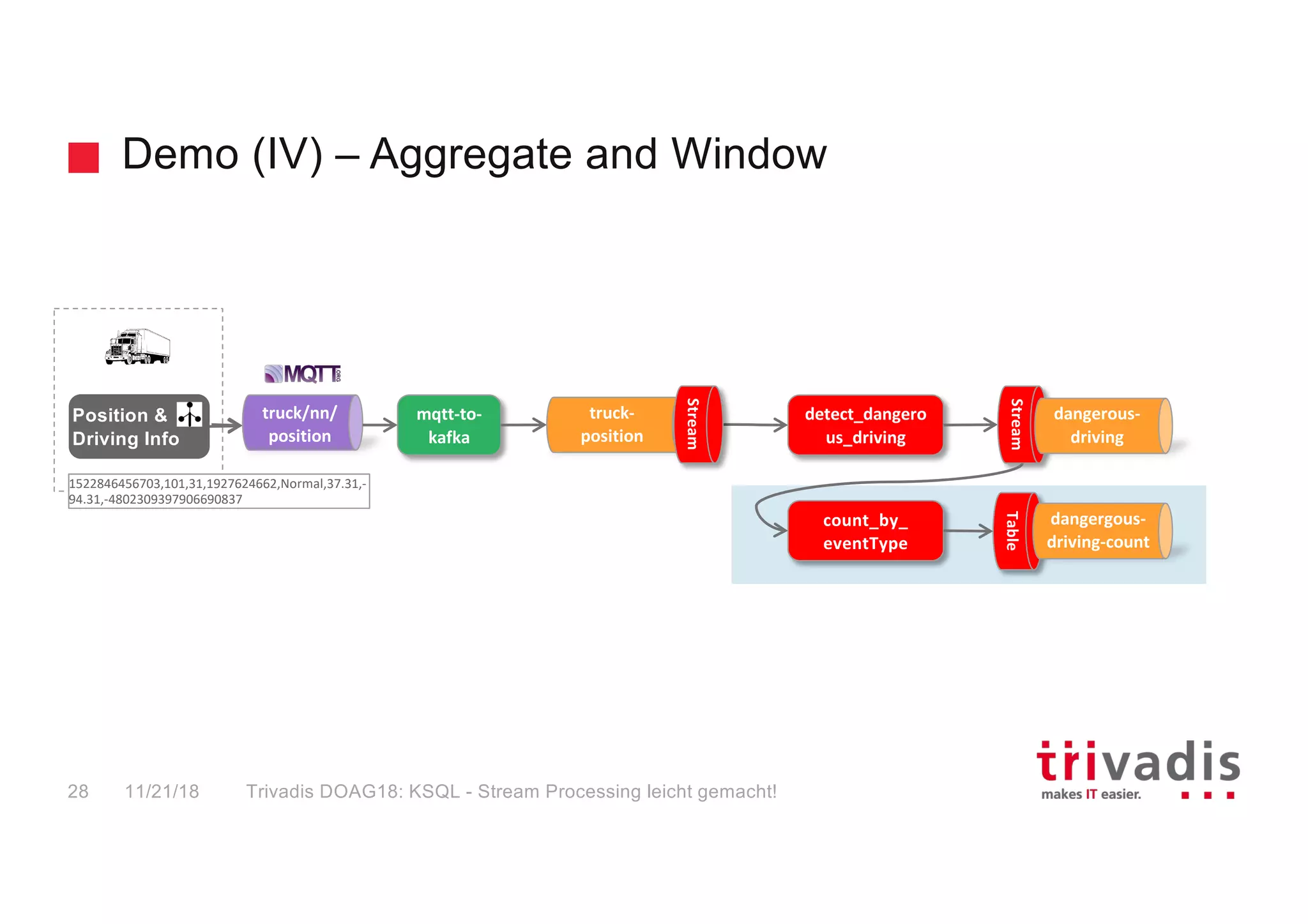

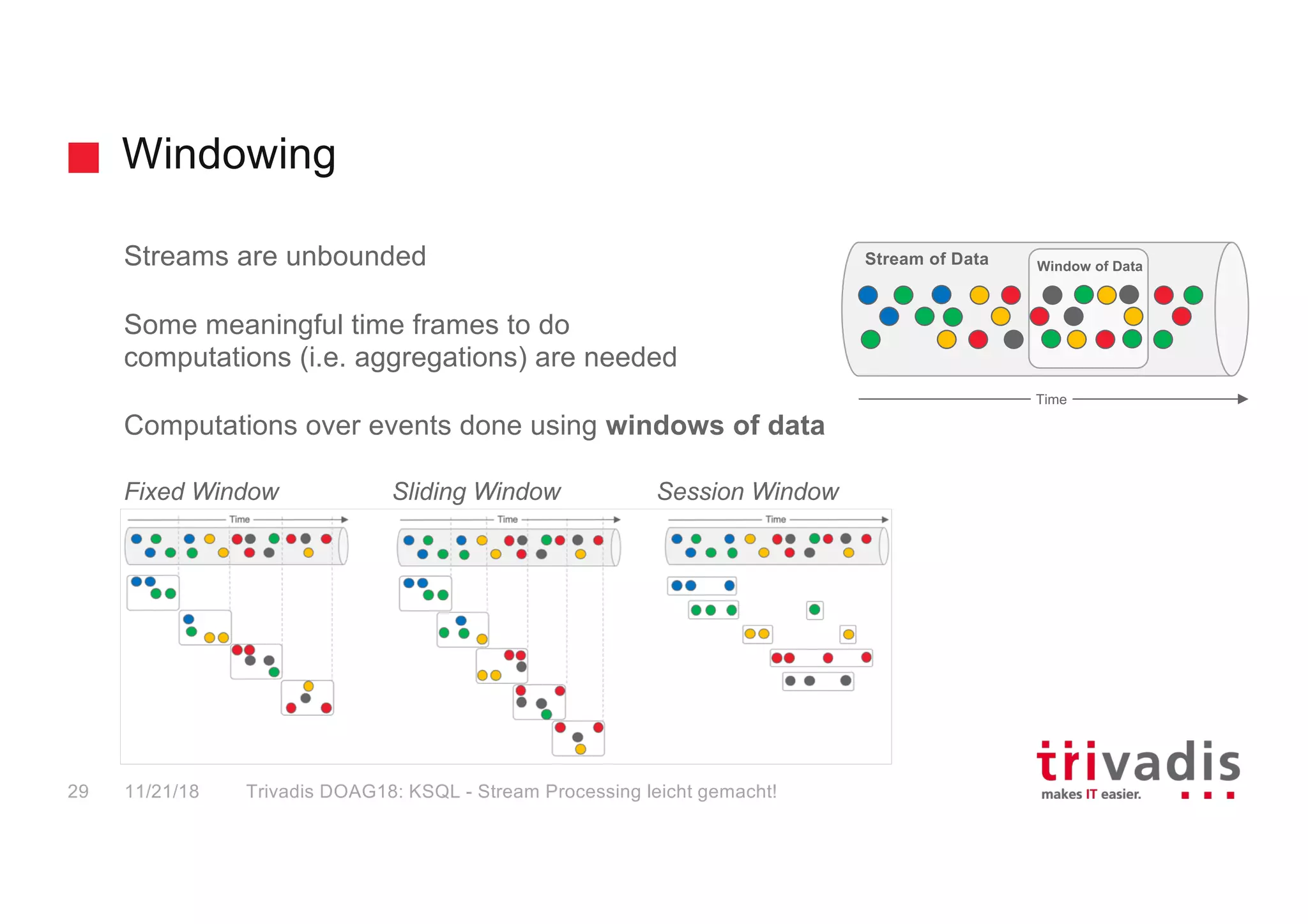

The document presents an overview of KSQL, a streaming SQL engine built on Apache Kafka, designed for real-time data processing without extensive coding. It includes practical demonstrations involving the creation of streams and tables, data ingestion through MQTT, and integrating data from various sources. The speaker, Guido Schmutz, outlines features of KSQL such as windowing, joining streams, and the use of SQL-like syntax for managing streaming data efficiently.

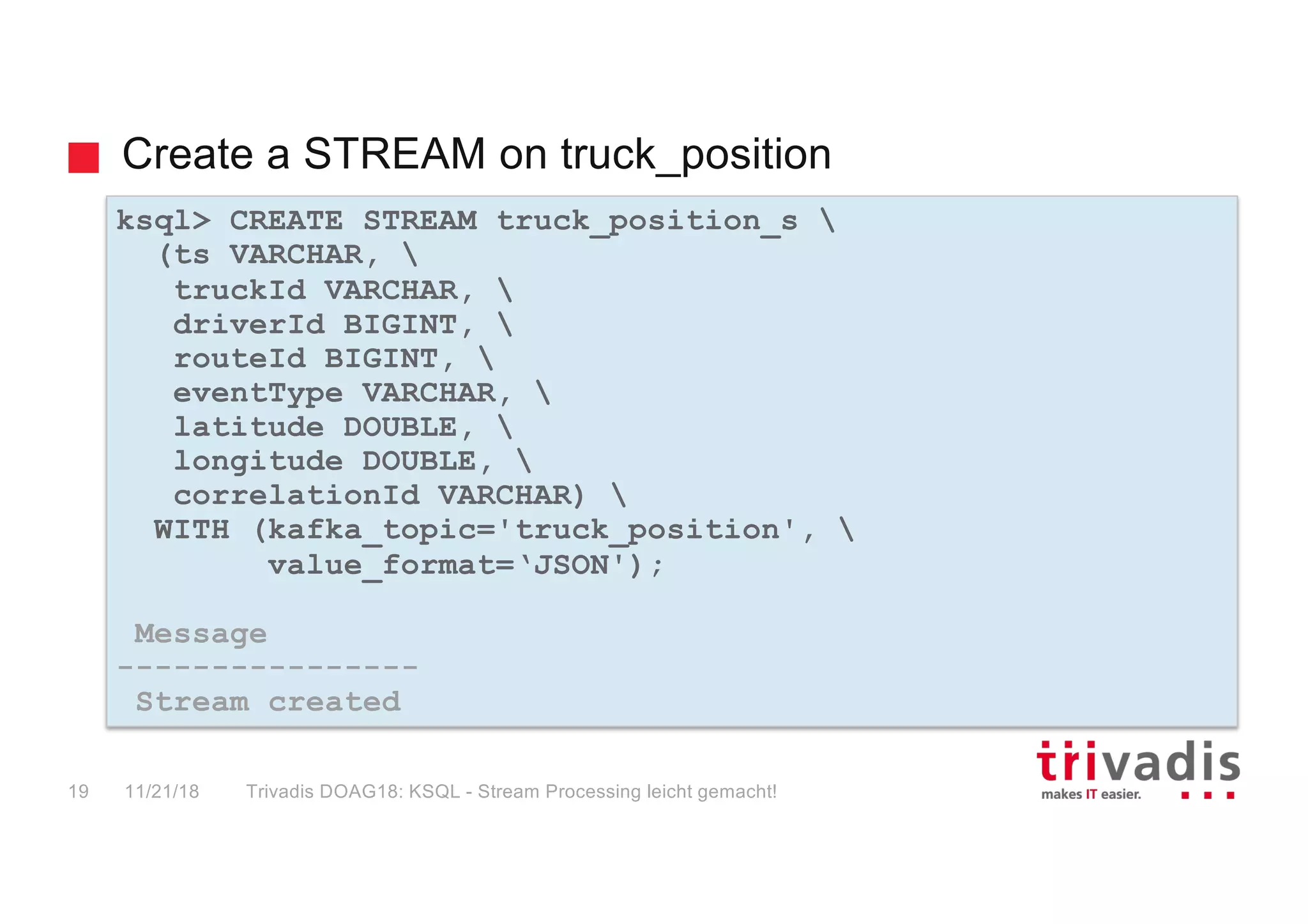

![CREATE STREAM](https://image.slidesharecdn.com/kafka-ksql-v1-181122135807/75/KSQL-Stream-Processing-simplified-16-2048.jpg)

CREATE STREAM

Create a new stream, backed by a Kafka topic, with the specified columns and properties.

Supported column data types:

• `BOOLEAN`, `INTEGER`, `BIGINT`, `DOUBLE`, `VARCHAR` or `STRING`

• `ARRAY<ArrayType>`

• `MAP<VARCHAR, ValueType>`

• `STRUCT<FieldName FieldType, ...>`

Supported serialization formats: CSV (value format `DELIMITED`), JSON, AVRO

KSQL adds the implicit columns `ROWTIME` and `ROWKEY` to every stream.

`CREATE STREAM stream_name ( { column_name data_type } [, ...] )`

`WITH ( property_name = expression [, ...] );`

11/21/18 Trivadis DOAG18: KSQL - Stream Processing leicht gemacht! (KSQL: Stream Processing Made Easy)
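The following is a minimal sketch of the syntax above. It assumes an existing topic `truck_position` that carries JSON-encoded messages. The stream name `truck_position_s` and all column names are hypothetical, chosen only for illustration. `KAFKA_TOPIC` and `VALUE_FORMAT` are the standard KSQL `WITH` properties for the backing topic and its serialization format.

```sql
-- Hypothetical example: register the existing topic 'truck_position'
-- (JSON-encoded values) as a KSQL stream.
CREATE STREAM truck_position_s (
  ts          VARCHAR,
  truckId     VARCHAR,
  driverId    BIGINT,
  eventType   VARCHAR,
  coordinates STRUCT<latitude DOUBLE, longitude DOUBLE>
) WITH (
  KAFKA_TOPIC  = 'truck_position',
  VALUE_FORMAT = 'JSON'
);

-- The implicit columns ROWTIME and ROWKEY can be selected like declared columns.
SELECT ROWTIME, ROWKEY, truckId, eventType
FROM truck_position_s
LIMIT 5;
```

In KSQL 5.x, a field inside a `STRUCT` column is read with the `->` operator, for example `coordinates->latitude`.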

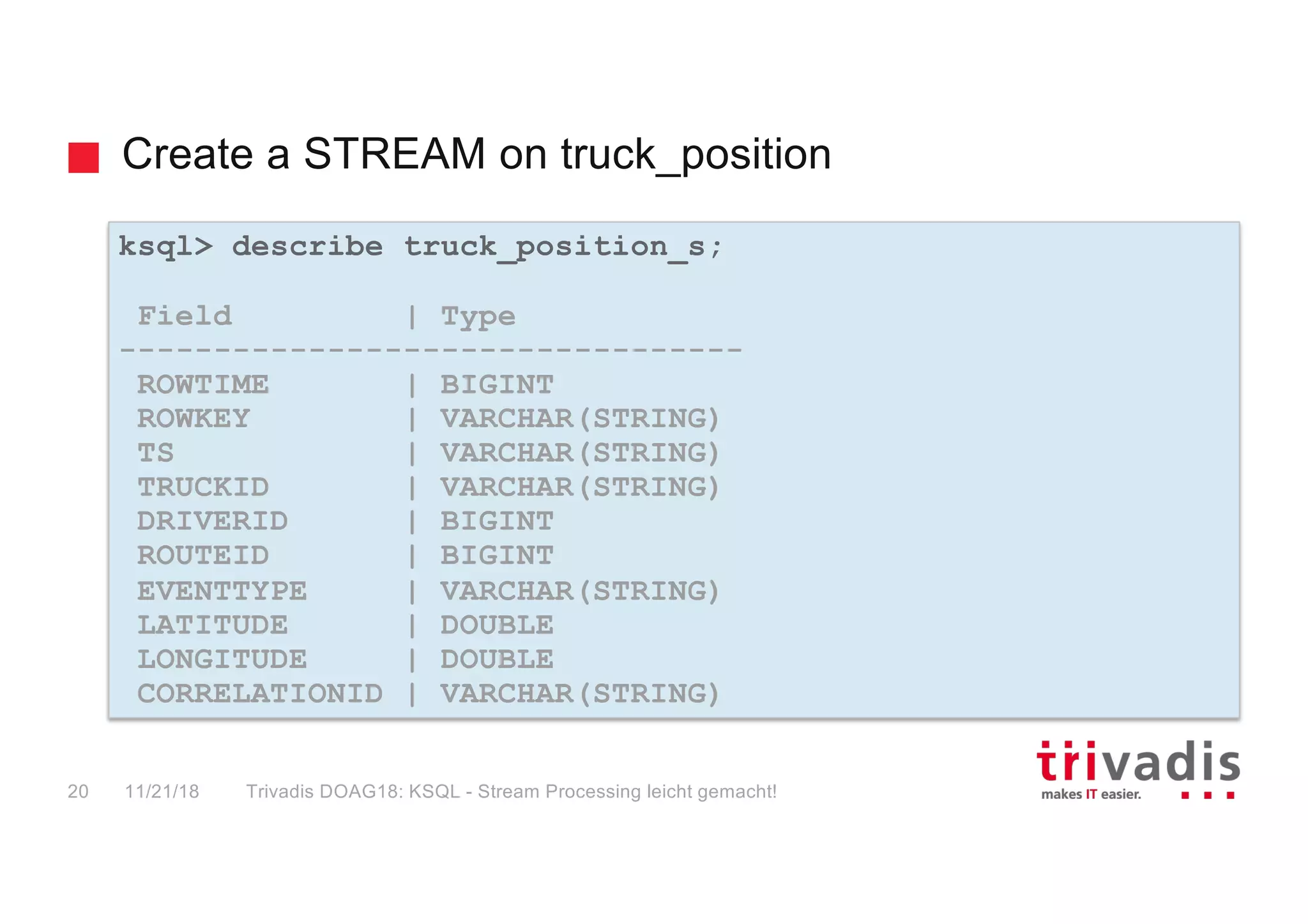

![SELECT](https://image.slidesharecdn.com/kafka-ksql-v1-181122135807/75/KSQL-Stream-Processing-simplified-20-2048.jpg)

SELECT

Selects rows from a KSQL stream or table.

The result of this statement is not persisted in a Kafka topic; it is only printed to the console.

`from_item` is one of the following: `stream_name`, `table_name`

`SELECT select_expr [, ...]`

`FROM from_item`

`[ LEFT JOIN join_table ON join_criteria ]`

`[ WINDOW window_expression ]`

`[ WHERE condition ]`

`[ GROUP BY grouping_expression ]`

`[ HAVING having_expression ]`

`[ LIMIT count ];`
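Below are two hedged examples of such transient queries, both against a hypothetical stream `truck_position_s` that is assumed to have `truckId`, `driverId` and `eventType` columns. The first is a filter using `WHERE` and `LIMIT`. The second is a windowed aggregation using the `WINDOW` and `GROUP BY` clauses. Neither query writes anything back to Kafka.

```sql
-- Optional (KSQL CLI): also read records already in the topic, not only new ones.
SET 'auto.offset.reset' = 'earliest';

-- Filter: print the first 10 events whose type is not 'Normal', then stop.
SELECT truckId, driverId, eventType
FROM truck_position_s
WHERE eventType <> 'Normal'
LIMIT 10;

-- Windowed aggregation: count events per type in 1-minute tumbling windows.
-- Without LIMIT, this query keeps printing updated counts until it is cancelled.
SELECT eventType, COUNT(*)
FROM truck_position_s
WINDOW TUMBLING (SIZE 1 MINUTE)
GROUP BY eventType;
```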

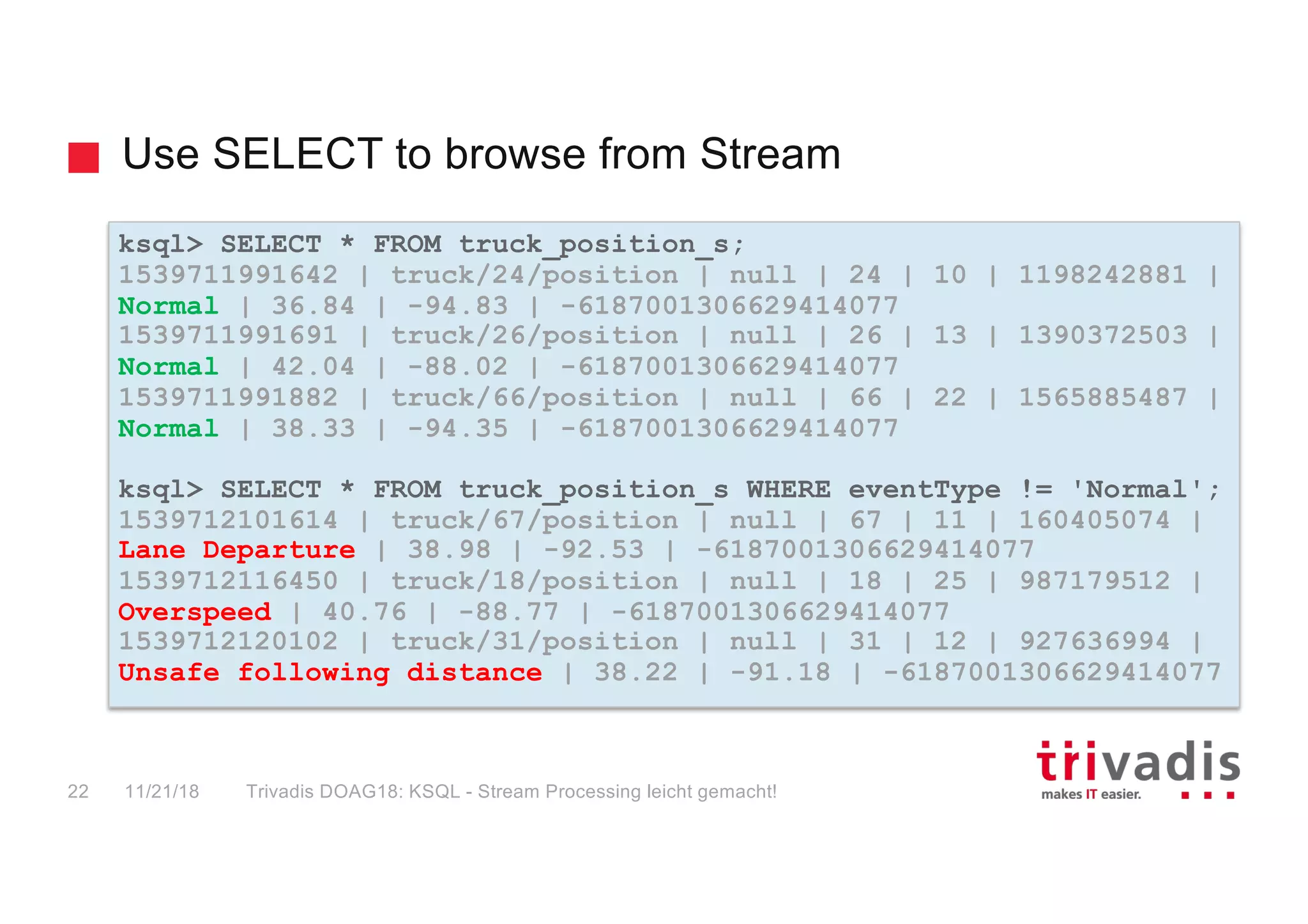

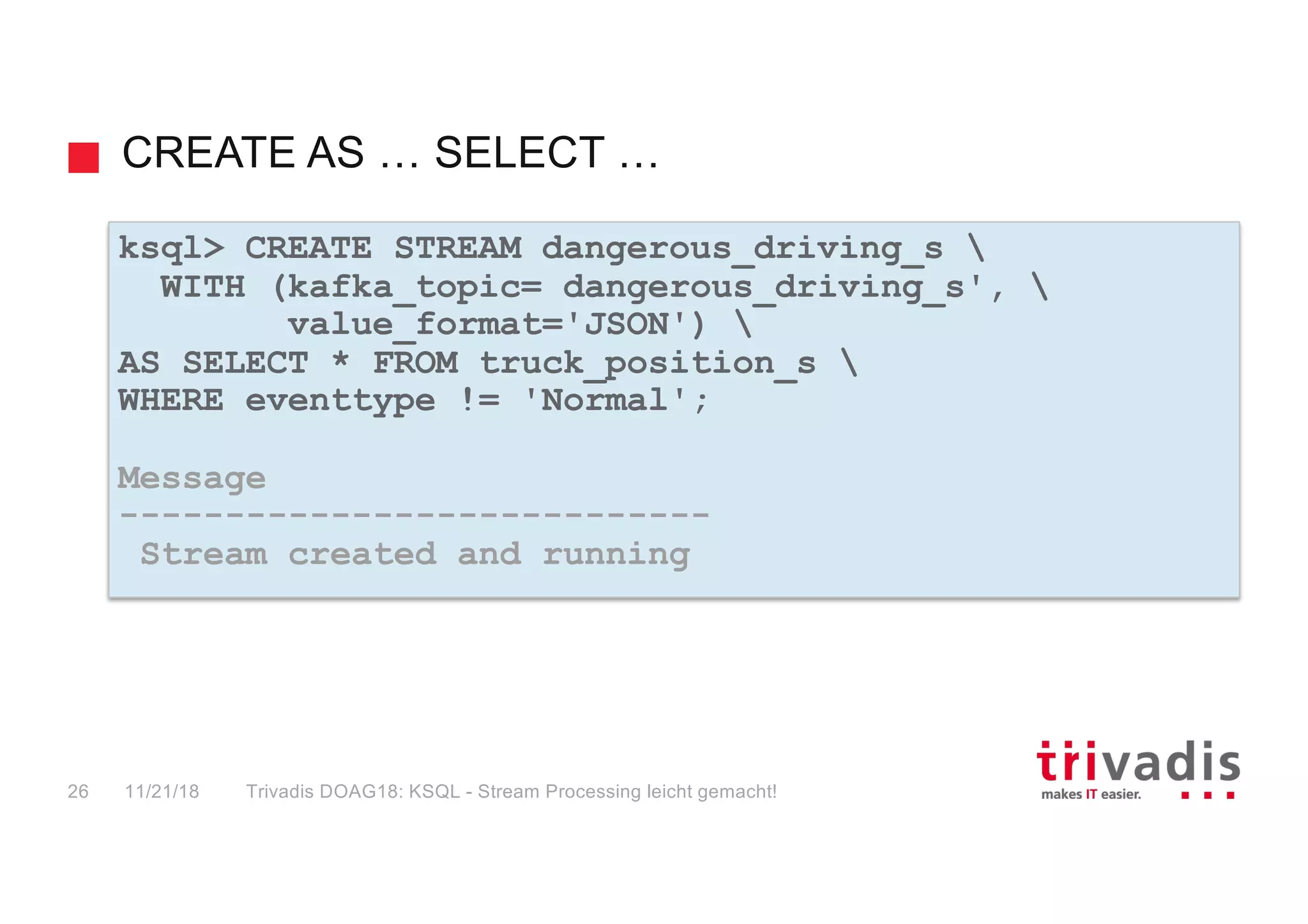

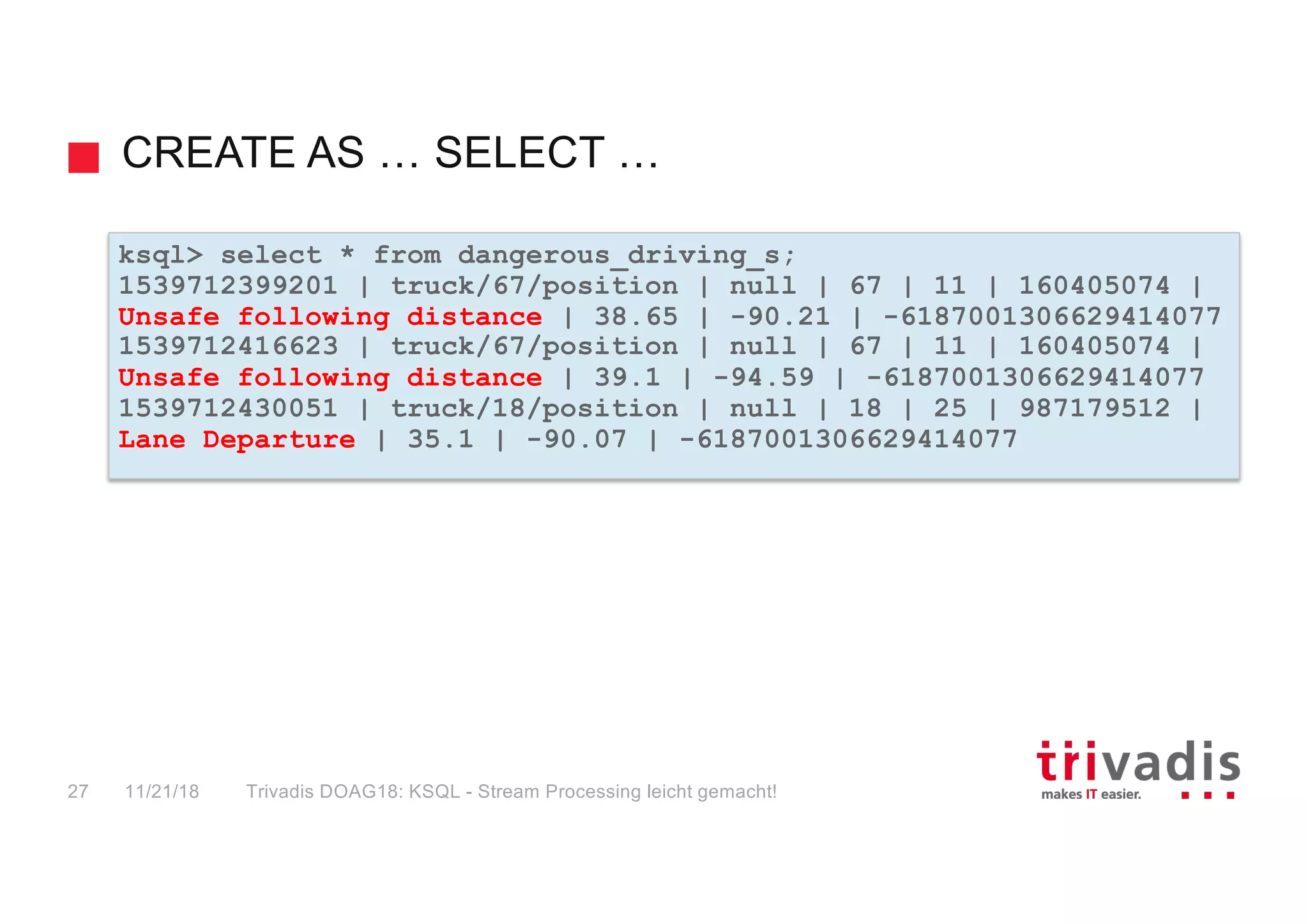

![CREATE STREAM … AS SELECT …](https://image.slidesharecdn.com/kafka-ksql-v1-181122135807/75/KSQL-Stream-Processing-simplified-23-2048.jpg)

CREATE STREAM … AS SELECT …

Create a new KSQL stream along with the corresponding Kafka topic, and continuously write the result of the SELECT query into the stream and its topic.

The WINDOW clause can only be used if the from_item is a stream.

`CREATE STREAM stream_name`

`[WITH ( property_name = expression [, ...] )]`

`AS SELECT select_expr [, ...]`

`FROM from_stream`

`[ LEFT | FULL | INNER ] JOIN [join_table | join_stream]`

`[ WITHIN [(before TIMEUNIT, after TIMEUNIT) | N TIMEUNIT] ]`

`ON join_criteria`

`[ WHERE condition ]`

`[PARTITION BY column_name];`
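The sketch below shows a persistent query under stated assumptions. It reads from a hypothetical stream `truck_position_s` that has `eventType` and `truckId` columns. It keeps only events whose type is not `'Normal'` and re-keys the output with `PARTITION BY`. The new stream `problematic_driving_s` and its topic `problematic_driving` are illustrative names only.

```sql
-- Persistent query: creates the stream problematic_driving_s and a new
-- topic 'problematic_driving' (hypothetical names), filtered and re-keyed.
CREATE STREAM problematic_driving_s
  WITH (KAFKA_TOPIC = 'problematic_driving', VALUE_FORMAT = 'JSON')
AS SELECT *
FROM truck_position_s
WHERE eventType <> 'Normal'
PARTITION BY truckId;
```

Unlike a plain `SELECT`, this query keeps running on the KSQL server after the statement returns. `SHOW QUERIES;` lists it.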

![INSERT INTO … SELECT …](https://image.slidesharecdn.com/kafka-ksql-v1-181122135807/75/KSQL-Stream-Processing-simplified-24-2048.jpg)

INSERT INTO … SELECT …

Stream the result of the SELECT query into an existing stream and its underlying topic.

The schema and partitioning column produced by the query must match the stream's schema and key.

If the schema and partitioning column are incompatible with the stream, the statement returns an error.

`CREATE STREAM stream_name ...;`

`INSERT INTO stream_name`

`SELECT select_expr [, ...]`

`FROM from_stream`

`[ WHERE condition ]`

`[ PARTITION BY column_name ];`
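A common use is merging several sources with the same structure into one stream. The following is a sketch under assumptions. The topics `position_a` and `position_b` and all stream and column names are hypothetical. The combined stream is created from the first source with `CREATE STREAM … AS SELECT`, and the second source is then appended with `INSERT INTO`. Both queries select the same columns and neither uses `PARTITION BY`, so the schema and key should be compatible.

```sql
-- Two hypothetical source streams with identical columns
-- (e.g. two different ingestion channels).
CREATE STREAM position_a_s (truckId VARCHAR, driverId BIGINT, eventType VARCHAR)
  WITH (KAFKA_TOPIC = 'position_a', VALUE_FORMAT = 'JSON');
CREATE STREAM position_b_s (truckId VARCHAR, driverId BIGINT, eventType VARCHAR)
  WITH (KAFKA_TOPIC = 'position_b', VALUE_FORMAT = 'JSON');

-- Create the combined stream (and its topic) from the first source ...
CREATE STREAM position_all_s AS
  SELECT truckId, driverId, eventType FROM position_a_s;

-- ... then continuously append the second source with the same column list.
INSERT INTO position_all_s
  SELECT truckId, driverId, eventType FROM position_b_s;
```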

![CREATE TABLE](https://image.slidesharecdn.com/kafka-ksql-v1-181122135807/75/KSQL-Stream-Processing-simplified-29-2048.jpg)

CREATE TABLE

Create a new table with the specified columns and properties.

Supports the same data types as CREATE STREAM.

KSQL adds the implicit columns `ROWTIME` and `ROWKEY` to every table as well.

KSQL currently has the following requirements for creating a table from a Kafka topic:

• the message key must also be present as a field/column in the Kafka message value

• the message key must be in `VARCHAR` (aka `STRING`) format

`CREATE TABLE table_name ( { column_name data_type } [, ...] )`

`WITH ( property_name = expression [, ...] );`
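A minimal sketch of a table that satisfies both requirements above. It assumes a hypothetical topic `truck_driver` whose message key is the driver id as a string, with the same id repeated as the field `id` in the JSON value. The `KEY` property tells KSQL which value column mirrors the message key. The table and column names are illustrative.

```sql
-- Hypothetical topic 'truck_driver': key = driver id (string),
-- and the same id is repeated as field "id" in the JSON value.
CREATE TABLE driver_t (
  id         VARCHAR,
  first_name VARCHAR,
  last_name  VARCHAR
) WITH (
  KAFKA_TOPIC  = 'truck_driver',
  VALUE_FORMAT = 'JSON',
  KEY          = 'id'
);

-- Each row holds the latest value per key.
SELECT ROWKEY, first_name, last_name
FROM driver_t
LIMIT 5;
```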