This document summarizes the Dirichlet Process with Mixed Random Measures (DP-MRM) topic model. DP-MRM is a nonparametric, supervised topic model that does not require specifying the number of topics in advance. It places a Dirichlet process prior over label-specific random measures, with each measure representing the topics for a label. The generative process samples document-topic distributions from these random measures. Inference is done using a Chinese restaurant franchise process. Experiments show DP-MRM can automatically learn label-topic correspondences without manual specification.
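To show why a Chinese restaurant franchise does not need the number of topics in advance, here is a minimal sketch of the plain HDP franchise used as a prior. It is not DP-MRM's label-specific variant, which is not reproduced here. The function name and parameters (`alpha` for table creation, `gamma` for dish creation) are hypothetical. Each document is a restaurant, each word is a customer, and each dish is a topic shared across restaurants. New dishes appear as data grows.

```python
import random


def chinese_restaurant_franchise(doc_lengths, alpha, gamma, seed=0):
    """Simulate topic assignments under a Chinese restaurant franchise (HDP prior).

    Each document is a restaurant and each word is a customer. A customer sits at an
    existing table with probability proportional to its occupancy, or opens a new table
    with probability proportional to alpha. A new table orders a dish (topic) from the
    franchise-wide menu: an existing dish with probability proportional to the number of
    tables already serving it, or a new dish with probability proportional to gamma.
    """
    rng = random.Random(seed)
    dish_tables = []  # dish_tables[k]: tables across all restaurants serving dish k
    docs = []
    for n_words in doc_lengths:
        table_sizes = []  # customers seated at each table of this restaurant
        table_dish = []   # dish served at each table of this restaurant
        topics = []
        for _ in range(n_words):
            seat_weights = table_sizes + [alpha]
            t = rng.choices(range(len(seat_weights)), weights=seat_weights)[0]
            if t == len(table_sizes):  # new table: order a dish from the franchise menu
                dish_weights = dish_tables + [gamma]
                k = rng.choices(range(len(dish_weights)), weights=dish_weights)[0]
                if k == len(dish_tables):
                    dish_tables.append(0)  # a brand-new topic
                dish_tables[k] += 1
                table_sizes.append(0)
                table_dish.append(k)
            table_sizes[t] += 1
            topics.append(table_dish[t])
        docs.append(topics)
    return docs, len(dish_tables)


docs, n_topics = chinese_restaurant_franchise([50, 80, 30], alpha=1.0, gamma=1.5)
print("topics used:", n_topics)
```

The number of topics is an output of the simulation rather than an input. It tends to grow with the number of words and with `gamma`.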

![[Kim+ ICML2012] Dirichlet Process with Mixed Random Measures: A Nonparametric Topic Model for Labeled Data
2012/07/28
Nakatani Shuyo @ Cybozu Labs, Inc
twitter: @shuyo](https://image.slidesharecdn.com/dp-mrmkimicml2012-120727233419-phpapp01/75/Kim-ICML2012-Dirichlet-Process-with-Mixed-Random-Measures-A-Nonparametric-Topic-Model-for-Labeled-Data-1-2048.jpg)

![LDA (Latent Dirichlet Allocation) [Blei+ 03]
• Unsupervised topic model
– Each word has an unobserved topic
• Parametric
– The number of topics K is given in advance
via Wikipedia](https://image.slidesharecdn.com/dp-mrmkimicml2012-120727233419-phpapp01/85/Kim-ICML2012-Dirichlet-Process-with-Mixed-Random-Measures-A-Nonparametric-Topic-Model-for-Labeled-Data-2-320.jpg)
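The "parametric" point can be seen in a toy version of LDA's generative process: `K` must be fixed before any topic or word is drawn. This is a minimal sketch with hypothetical names (`generate_lda_corpus`, `sample_dirichlet`) and hypothetical default hyperparameters. Dirichlet draws are made by normalizing Gamma variates.

```python
import random


def sample_dirichlet(rng, alphas):
    """Draw from Dirichlet(alphas) by normalising independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [x / total for x in draws]


def generate_lda_corpus(n_docs, doc_len, K, V, alpha=0.5, eta=0.1, seed=0):
    """Generate a toy corpus of (topic, word) pairs from the LDA generative process.

    K (the number of topics) is a fixed input: this is what makes LDA parametric.
    """
    rng = random.Random(seed)
    # beta_k ~ Dir(eta): one word distribution per topic
    beta = [sample_dirichlet(rng, [eta] * V) for _ in range(K)]
    corpus = []
    for _ in range(n_docs):
        theta = sample_dirichlet(rng, [alpha] * K)  # theta_d ~ Dir(alpha)
        doc = []
        for _ in range(doc_len):
            z = rng.choices(range(K), weights=theta)[0]    # z_i ~ Multi(theta_d), unobserved
            w = rng.choices(range(V), weights=beta[z])[0]  # w_i ~ Multi(beta_{z_i})
            doc.append((z, w))
        corpus.append(doc)
    return corpus


corpus = generate_lda_corpus(n_docs=4, doc_len=30, K=3, V=10)
print(corpus[0][:5])
```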

![Labeled LDA [Ramage+ 09]
• Supervised topic model
– Each document has one or more observed labels
• Parametric
via [Ramage+ 09]](https://image.slidesharecdn.com/dp-mrmkimicml2012-120727233419-phpapp01/85/Kim-ICML2012-Dirichlet-Process-with-Mixed-Random-Measures-A-Nonparametric-Topic-Model-for-Labeled-Data-3-320.jpg)

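![Generative Process for L-LDA](https://image.slidesharecdn.com/dp-mrmkimicml2012-120727233419-phpapp01/85/Kim-ICML2012-Dirichlet-Process-with-Mixed-Random-Measures-A-Nonparametric-Topic-Model-for-Labeled-Data-4-320.jpg)

Generative process for L-LDA (via [Ramage+ 09]):

- $\boldsymbol{\beta}_k \sim \mathrm{Dir}(\boldsymbol{\eta})$
- $\Lambda^{(d)}_k \sim \mathrm{Bernoulli}(\Phi_k)$: the topics corresponding to the observed labels of document $d$
- $\boldsymbol{\theta}^{(d)} \sim \mathrm{Dir}(\boldsymbol{\alpha}^{(d)})$, where $\boldsymbol{\alpha}^{(d)} = \{\alpha_k \mid \Lambda^{(d)}_k = 1\}$ (parameters restricted to the labeled topics)
- $z^{(d)}_i \sim \mathrm{Multi}(\boldsymbol{\theta}^{(d)})$
- $w_i \sim \mathrm{Multi}(\boldsymbol{\beta}_{z^{(d)}_i})$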
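The generative steps for L-LDA can be sketched for a single document whose labels are already observed. Because the labels are treated as given, $\Lambda^{(d)}$ is not sampled from $\mathrm{Bernoulli}(\Phi_k)$ here. This is a minimal sketch with hypothetical names (`generate_llda_document`, `sample_dirichlet`). It assumes one topic per label and at least one label per document. The key difference from LDA is that $\boldsymbol{\theta}^{(d)}$ and $z^{(d)}_i$ only range over the labeled topics.

```python
import random


def sample_dirichlet(rng, alphas):
    """Draw from Dirichlet(alphas) by normalising independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [x / total for x in draws]


def generate_llda_document(rng, beta, labels, alpha, doc_len):
    """Generate one document from the L-LDA process, given its observed labels.

    beta   : list of K word distributions (one topic per label)
    labels : indices k with Lambda_k^(d) = 1 (assumed non-empty)
    alpha  : list of K Dirichlet parameters alpha_k
    """
    allowed = sorted(labels)
    # alpha^(d) = {alpha_k | Lambda_k^(d) = 1}: theta_d covers only the labeled topics
    theta = sample_dirichlet(rng, [alpha[k] for k in allowed])
    doc = []
    for _ in range(doc_len):
        z = rng.choices(allowed, weights=theta)[0]                 # z_i ~ Multi(theta_d)
        w = rng.choices(range(len(beta[z])), weights=beta[z])[0]   # w_i ~ Multi(beta_{z_i})
        doc.append((z, w))
    return doc


rng = random.Random(0)
K, V = 3, 5
beta = [sample_dirichlet(rng, [0.1] * V) for _ in range(K)]  # beta_k ~ Dir(eta)
doc = generate_llda_document(rng, beta, labels=[0, 2], alpha=[0.5] * K, doc_len=20)
print(doc[:5])
```

Every sampled topic is 0 or 2, so a word can only be explained by a topic tied to one of the document's labels.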

![Pros/Cons of L-LDA
• Pros
– Easy to implement
• Cons
– The label-topic correspondence must be specified manually
– Performance depends on that correspondence
via [Ramage+ 09]
※) My implementation is here: https://github.com/shuyo/iir/blob/master/lda/llda.py](https://image.slidesharecdn.com/dp-mrmkimicml2012-120727233419-phpapp01/85/Kim-ICML2012-Dirichlet-Process-with-Mixed-Random-Measures-A-Nonparametric-Topic-Model-for-Labeled-Data-5-320.jpg)
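"Easy to implement" can be illustrated with a collapsed Gibbs sampler. It is the usual LDA conditional $p(z_i = k \mid \cdot) \propto (n_{dk} + \alpha)\,(n_{kw} + \eta) / (n_k + V\eta)$, evaluated only over the document's labels. This is a minimal sketch with hypothetical names and symmetric `alpha`/`eta`. It is not the linked `llda.py`.

```python
import random


def llda_gibbs(docs, doc_labels, K, V, alpha=0.1, eta=0.01, iters=50, seed=0):
    """Collapsed Gibbs sampler for L-LDA (minimal sketch).

    docs       : list of documents, each a list of word ids in range(V)
    doc_labels : list of non-empty label lists; topic k is allowed in document d
                 only if k is in doc_labels[d]
    Returns (topic-word counts n_kw, topic assignments z).
    """
    rng = random.Random(seed)
    n_dk = [[0] * K for _ in docs]
    n_kw = [[0] * V for _ in range(K)]
    n_k = [0] * K
    z = []
    # Initialise every token with a random topic drawn from its document's labels
    for d, doc in enumerate(docs):
        zd = []
        for w in doc:
            k = rng.choice(doc_labels[d])
            zd.append(k)
            n_dk[d][k] += 1
            n_kw[k][w] += 1
            n_k[k] += 1
        z.append(zd)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            labels = doc_labels[d]
            for i, w in enumerate(doc):
                k = z[d][i]
                # Remove the current token from the counts
                n_dk[d][k] -= 1
                n_kw[k][w] -= 1
                n_k[k] -= 1
                # LDA's conditional, restricted to the labeled topics
                weights = [(n_dk[d][j] + alpha) * (n_kw[j][w] + eta) / (n_k[j] + V * eta)
                           for j in labels]
                k = rng.choices(labels, weights=weights)[0]
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][w] += 1
                n_k[k] += 1
    return n_kw, z


docs = [[0, 0, 1], [2, 3, 3], [0, 1, 2, 3]]
doc_labels = [[0], [1], [0, 1]]
n_kw, z = llda_gibbs(docs, doc_labels, K=2, V=4)
print(n_kw)
```

The restriction is the only change from an LDA sampler. It also explains the listed con: the sampler cannot do better than the fixed one-topic-per-label mapping it is given.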

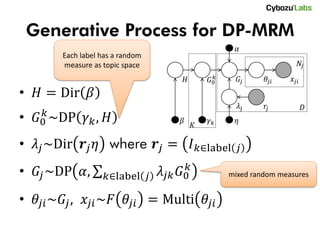

![DP-MRM [Kim+ 12]
– Dirichlet Process with Mixed Random Measures
• Supervised topic model
• Nonparametric
– K is not the number of topics but the number of labels
(graphical model with nodes H, G_0k, G_j, θ_ji, x_ji, α, β, γ_k, η, λ_j, r_j and plates N_j, D, K)](https://image.slidesharecdn.com/dp-mrmkimicml2012-120727233419-phpapp01/85/Kim-ICML2012-Dirichlet-Process-with-Mixed-Random-Measures-A-Nonparametric-Topic-Model-for-Labeled-Data-6-320.jpg)
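To make "K is the number of labels, not topics" concrete, here is a hedged sketch of mixed random measures built with truncated stick-breaking. Each label gets its own measure $G_{0k} \sim \mathrm{DP}(\cdot, H)$, which can hold many topics. A document measure is then drawn from a DP whose base is a mixture of the measures of that document's labels only. This follows our reading of the model and is not the paper's exact specification. The mixing-weight prior, the choice of $H$ as a symmetric Dirichlet over words, the truncation level, and all names (`label_measures`, `document_measure`, `label_conc`, `doc_conc`, `mix_conc`) are assumptions.

```python
import random


def sample_dirichlet(rng, alphas):
    """Draw from Dirichlet(alphas) by normalising independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [x / total for x in draws]


def stick_breaking(rng, concentration, base_sampler, truncation):
    """Truncated stick-breaking draw of G ~ DP(concentration, base).

    Returns (weights, atoms); the last stick takes all remaining mass,
    so the weights sum to 1.
    """
    weights, atoms, remaining = [], [], 1.0
    for t in range(truncation):
        v = 1.0 if t == truncation - 1 else rng.betavariate(1.0, concentration)
        weights.append(remaining * v)
        atoms.append(base_sampler(rng))
        remaining *= 1.0 - v
    return weights, atoms


def label_measures(rng, n_labels, label_conc, V, truncation=20):
    """One random measure G_0k per label; atoms are topics (word distributions).

    H is assumed to be a symmetric Dirichlet(0.1) over a V-word vocabulary.
    """
    def draw_topic(r):
        return sample_dirichlet(r, [0.1] * V)
    return [stick_breaking(rng, label_conc, draw_topic, truncation) for _ in range(n_labels)]


def document_measure(rng, labels, measures, doc_conc, mix_conc=1.0, truncation=20):
    """G_j ~ DP(doc_conc, sum_k pi_jk G_0k), mixing only the document's labels.

    pi_j ~ Dir(mix_conc, ..., mix_conc) is a hypothetical prior on the mixing weights.
    Atoms are (label, topic index) pairs, so each topic traces back to a label.
    """
    pi = sample_dirichlet(rng, [mix_conc] * len(labels))

    def mixed_base(r):
        k = r.choices(labels, weights=pi)[0]         # pick a label by pi_j
        w, _ = measures[k]
        t = r.choices(range(len(w)), weights=w)[0]  # pick one of that label's topics from G_0k
        return (k, t)

    return stick_breaking(rng, doc_conc, mixed_base, truncation)


rng = random.Random(0)
measures = label_measures(rng, n_labels=3, label_conc=1.0, V=10)
weights, atoms = document_measure(rng, [0, 2], measures, doc_conc=1.0)
print(atoms[:5])
```

The number of topics per label is not fixed: a larger `label_conc` spreads mass over more atoms of $G_{0k}$. The label set still restricts which label measures a document can draw from.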

![Experiments
• DP-MRM infers probabilistic label-topic correspondences automatically.
via [Kim+ 12]](https://image.slidesharecdn.com/dp-mrmkimicml2012-120727233419-phpapp01/85/Kim-ICML2012-Dirichlet-Process-with-Mixed-Random-Measures-A-Nonparametric-Topic-Model-for-Labeled-Data-12-320.jpg)

![via [Kim+ 12]
• L-LDA can also be applied to single-labeled documents by assigning a common second label to every document.](https://image.slidesharecdn.com/dp-mrmkimicml2012-120727233419-phpapp01/85/Kim-ICML2012-Dirichlet-Process-with-Mixed-Random-Measures-A-Nonparametric-Topic-Model-for-Labeled-Data-13-320.jpg)

![References
• [Kim+ ICML2012] Dirichlet Process with Mixed Random Measures: A Nonparametric Topic Model for Labeled Data
• [Ramage+ EMNLP2009] Labeled LDA: A supervised topic model for credit attribution in multi-labeled corpora
• [Blei+ 2003] Latent Dirichlet Allocation](https://image.slidesharecdn.com/dp-mrmkimicml2012-120727233419-phpapp01/85/Kim-ICML2012-Dirichlet-Process-with-Mixed-Random-Measures-A-Nonparametric-Topic-Model-for-Labeled-Data-14-320.jpg)