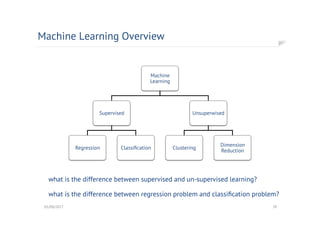

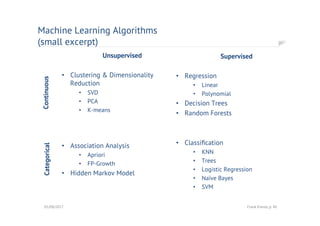

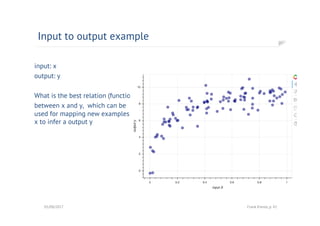

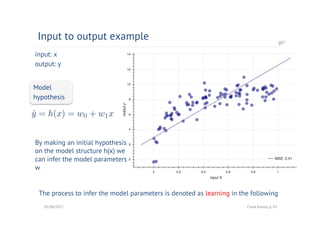

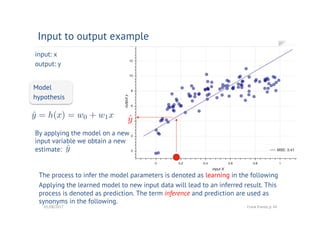

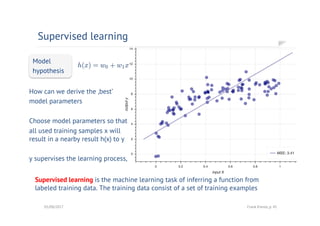

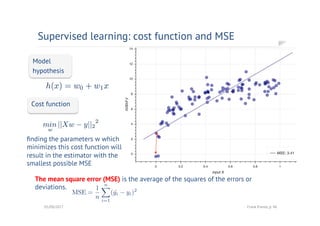

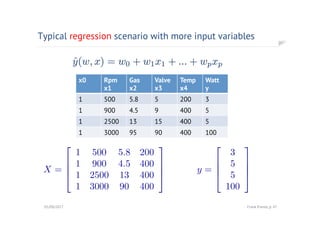

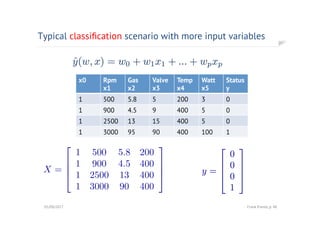

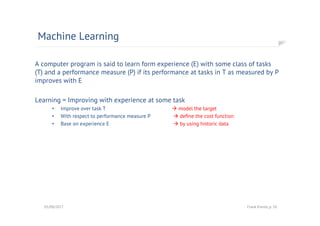

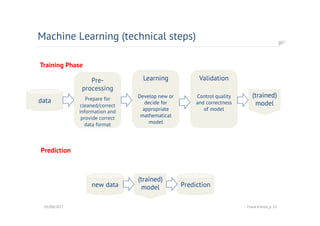

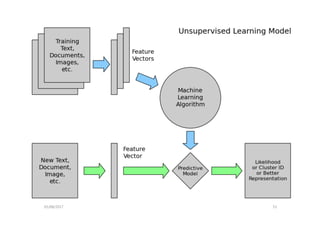

The document provides an overview of data science, focusing on machine learning and artificial intelligence concepts. It explains the distinction between supervised and unsupervised learning, various machine learning algorithms, and the processes of model inference and prediction. Additionally, it discusses practical applications such as spam filtering, fraud detection, and customer segmentation, along with the importance of data processing methods like batch and stream processing.