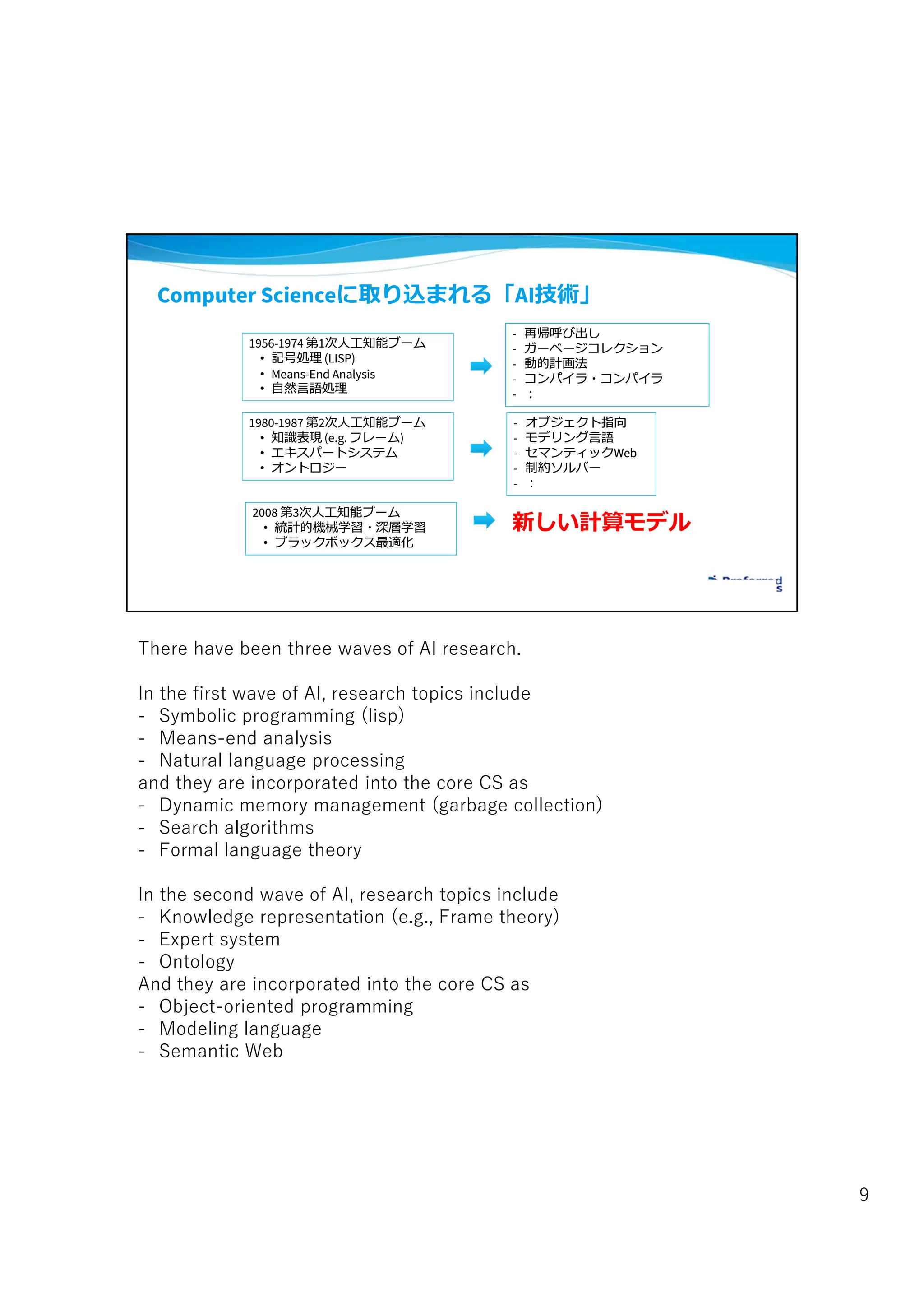

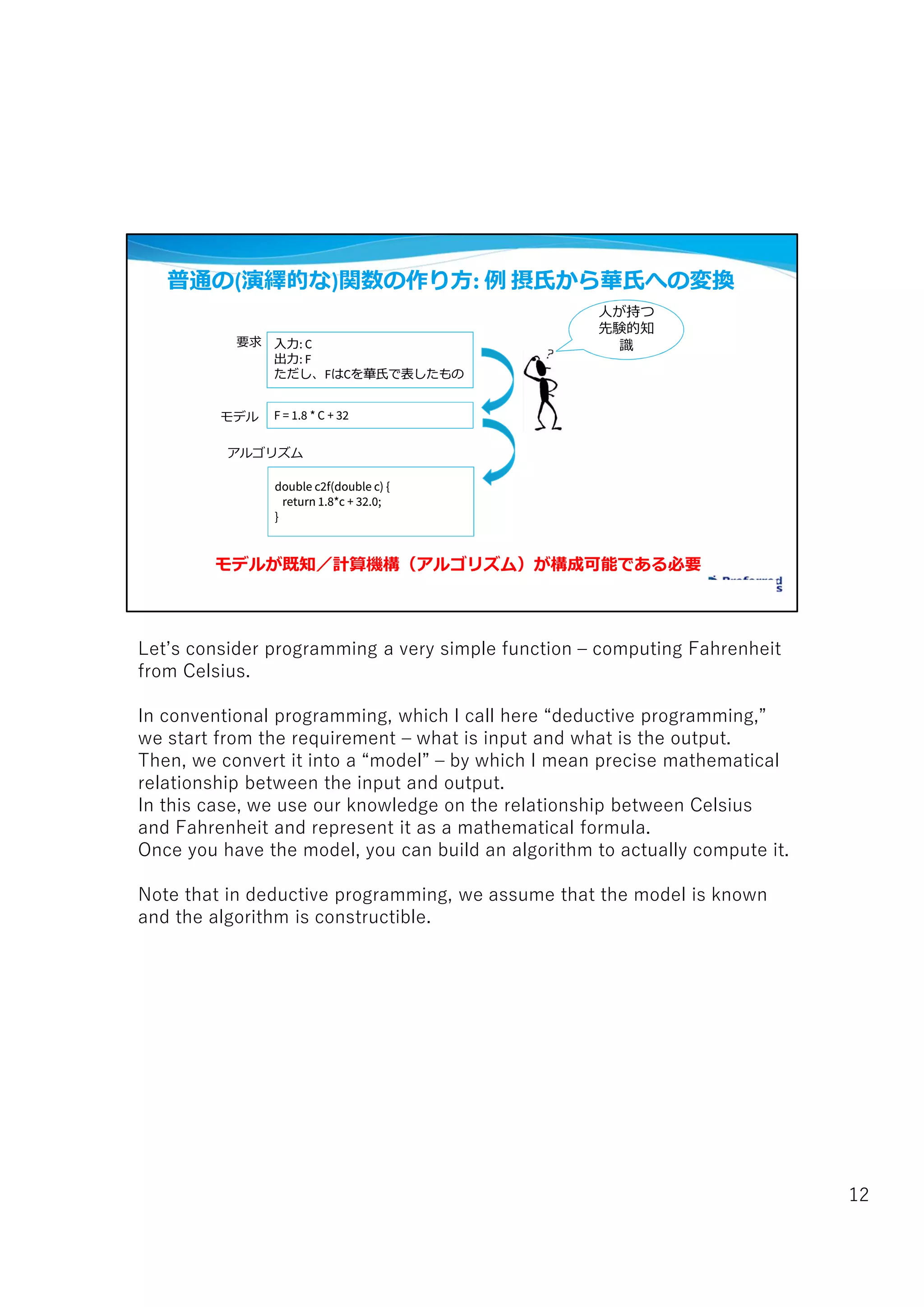

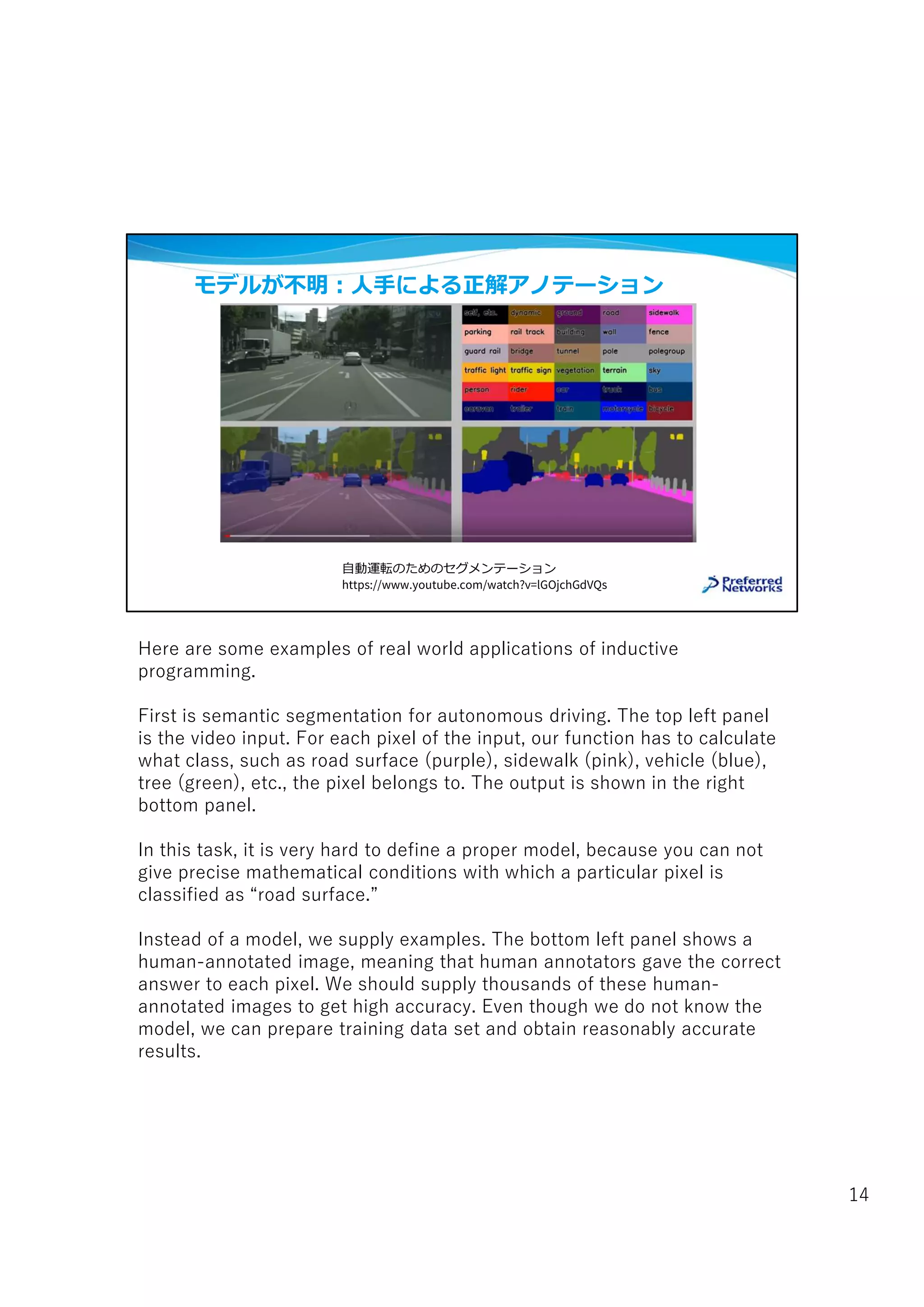

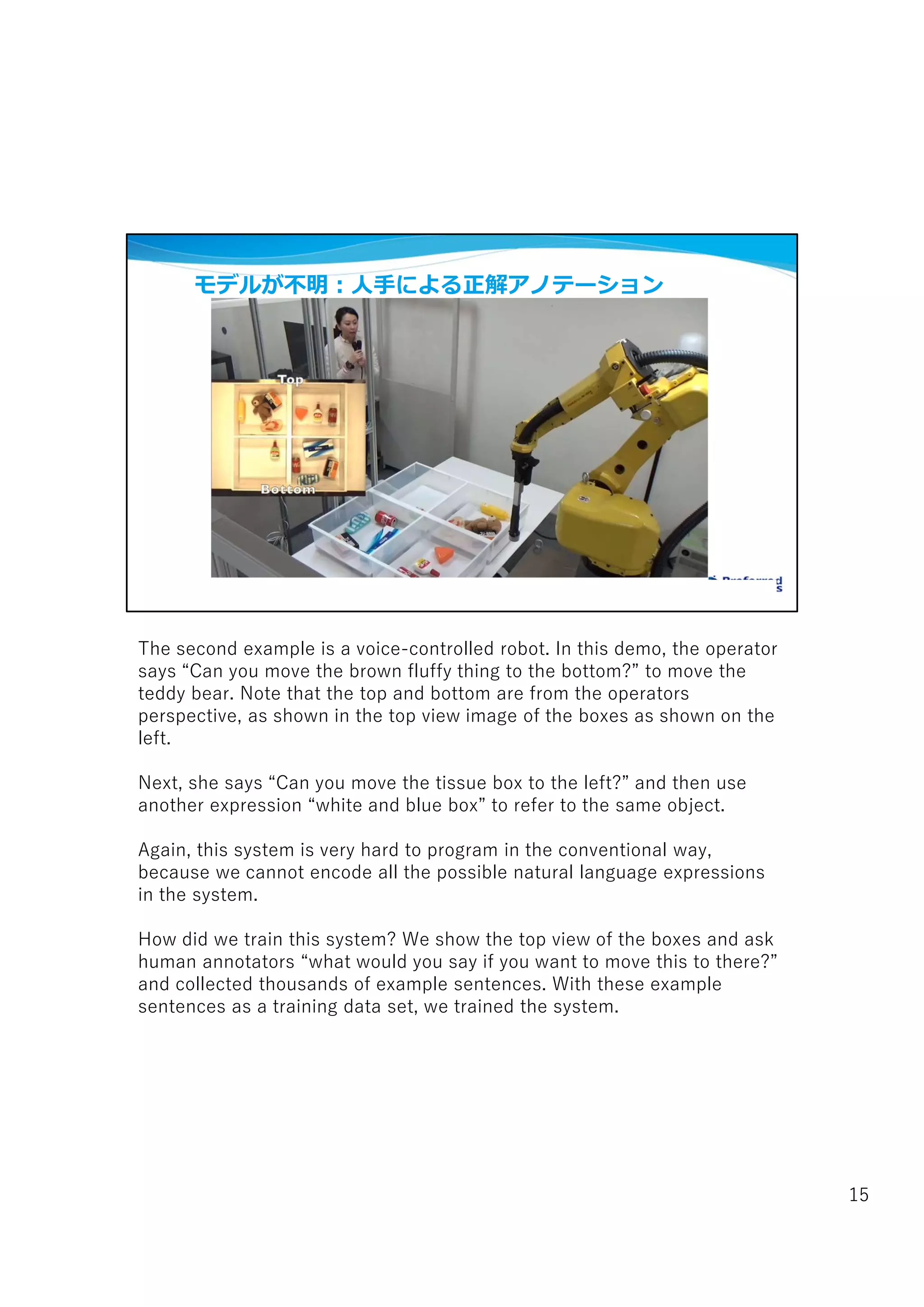

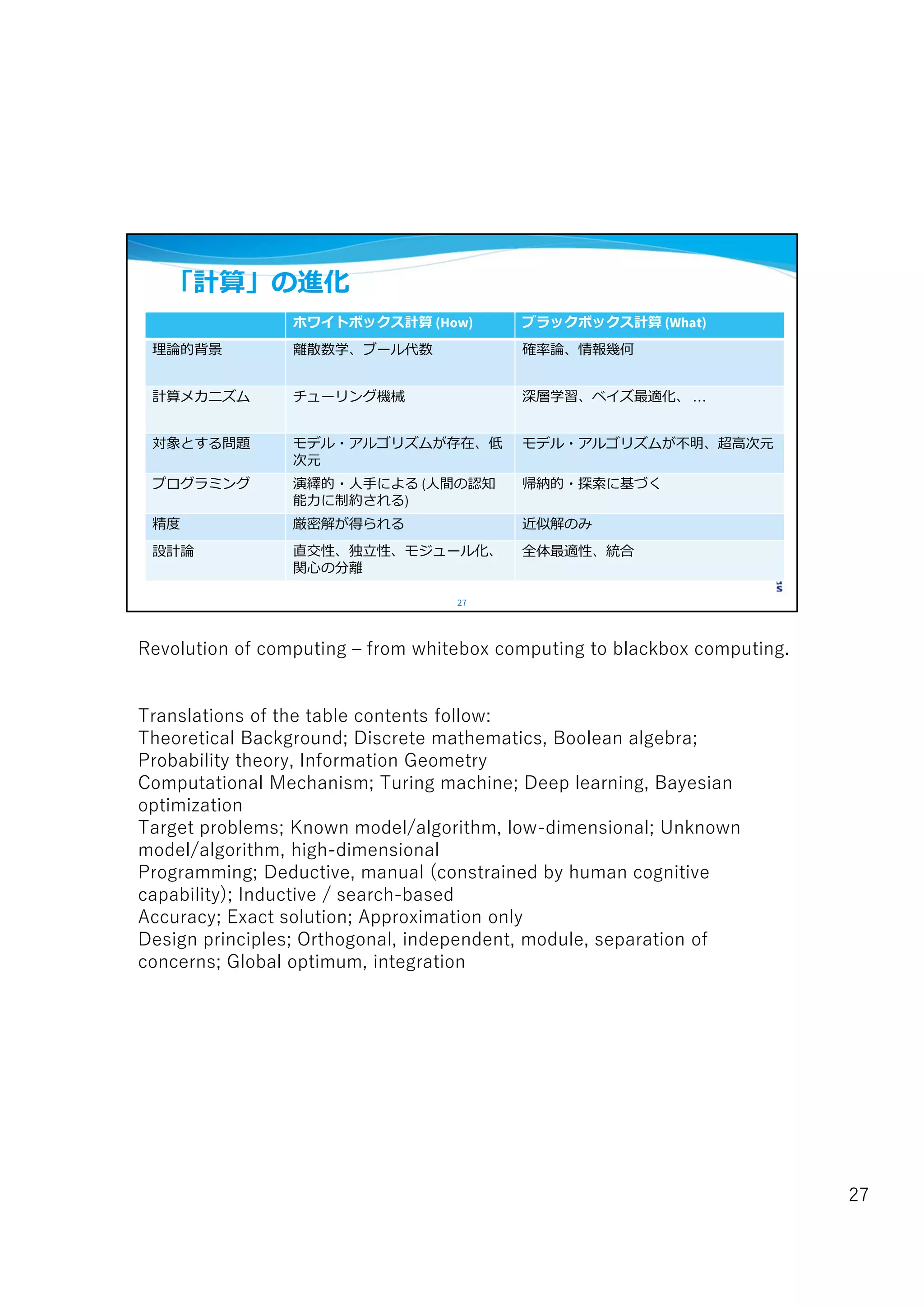

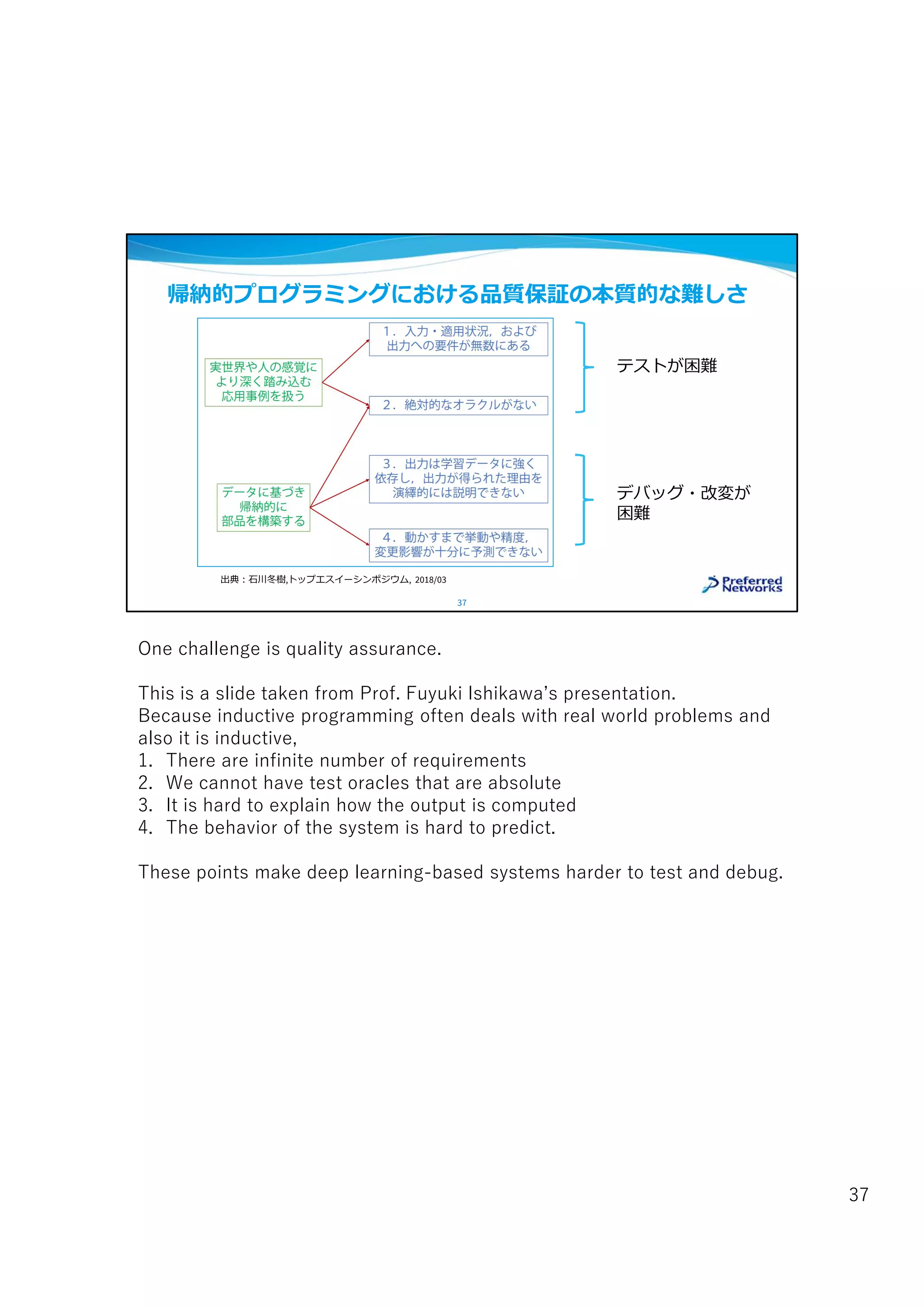

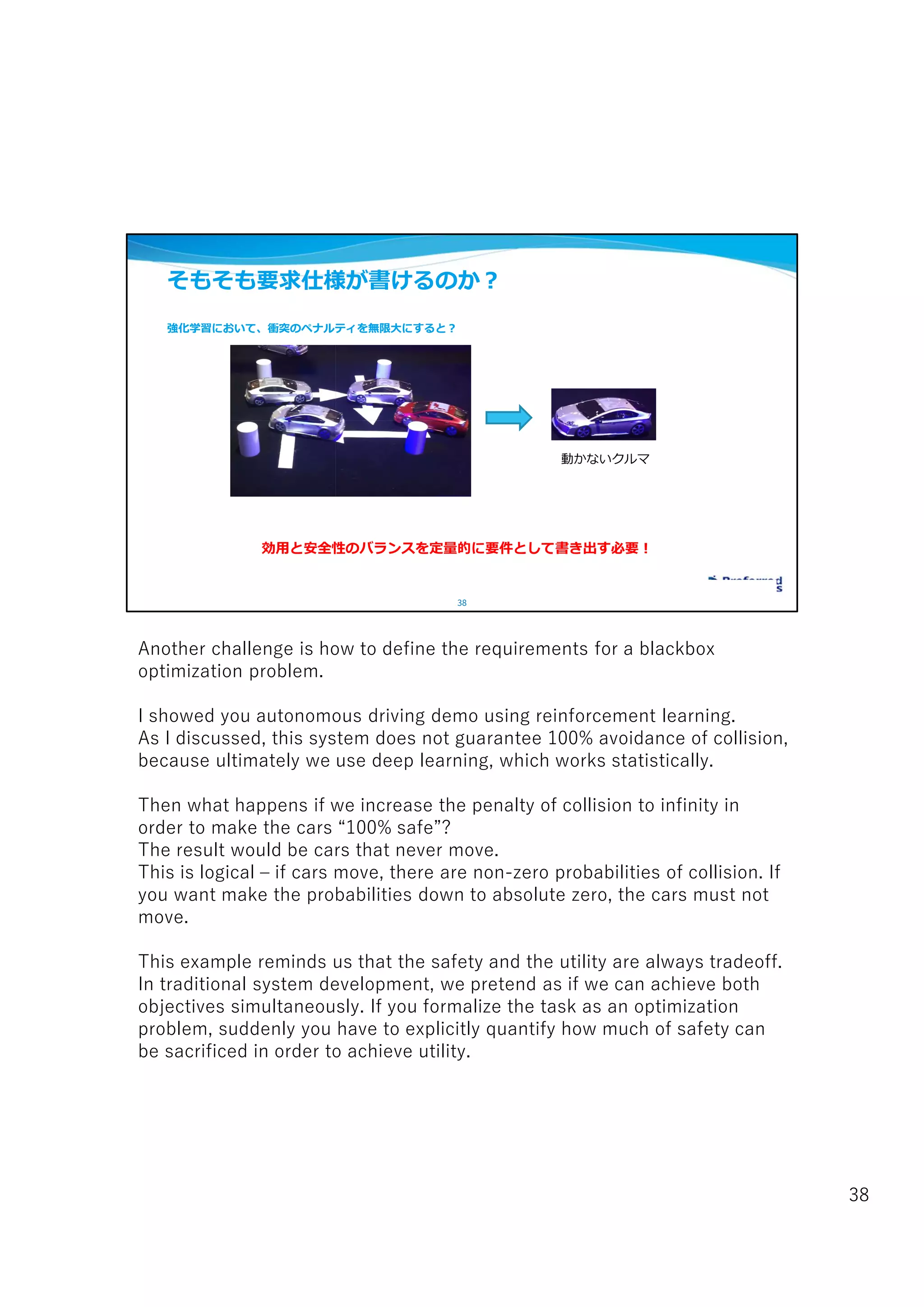

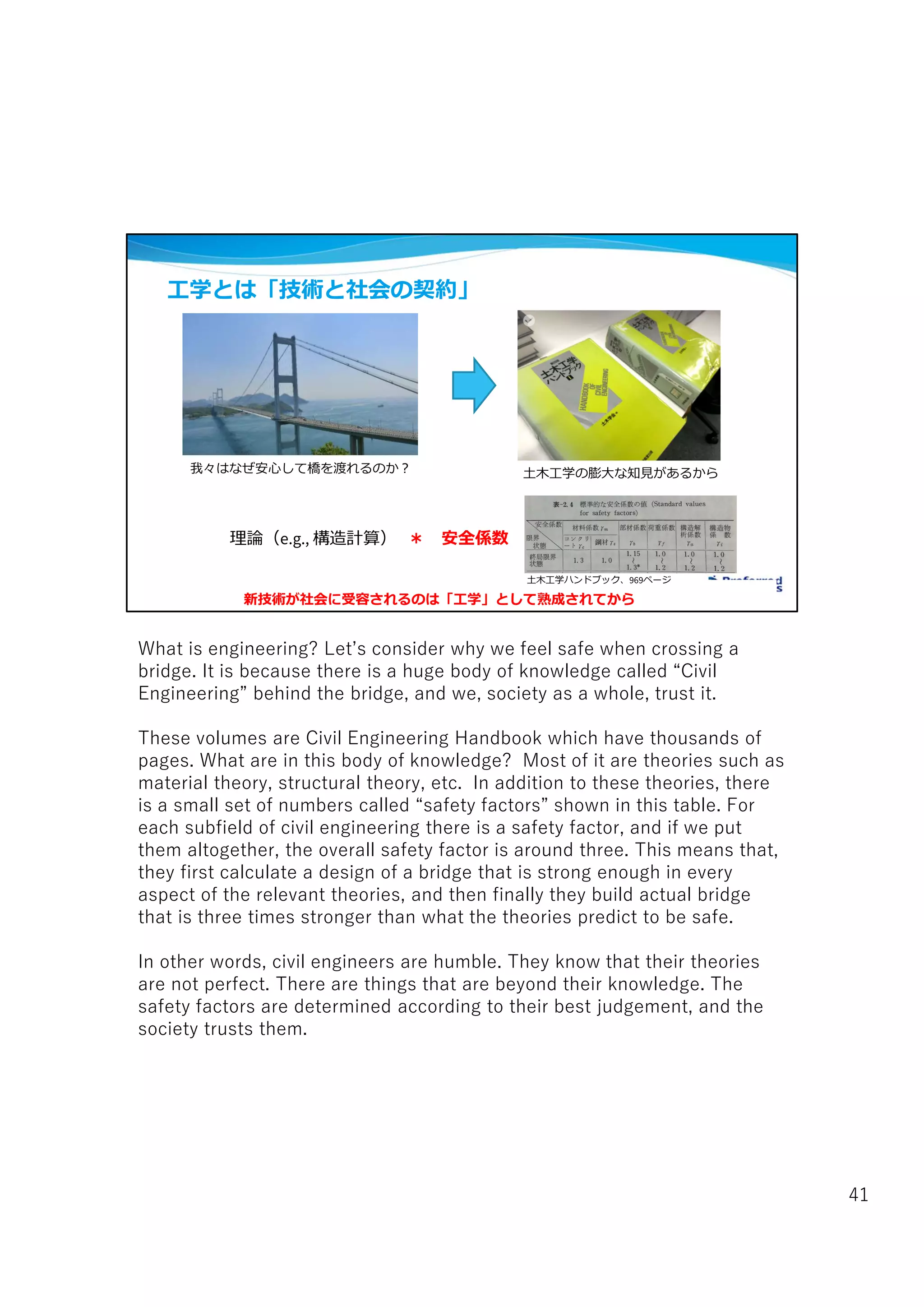

The document discusses the varying interpretations of 'artificial intelligence' (AI) and their implications for computer science, science, and engineering. It outlines three interpretations: AI as a field of study; AI as a sophisticated technology that is often misrepresented; and AI as an emerging frontier within computer science that drives new models of computation such as deep learning. The speaker emphasizes the confusion surrounding AI terminology and AI's evolving role in technological advancement, and highlights both the limitations and the potential future of AI applications.