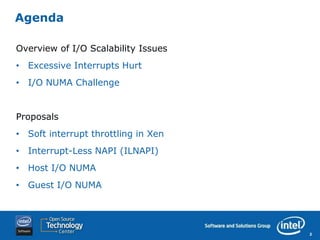

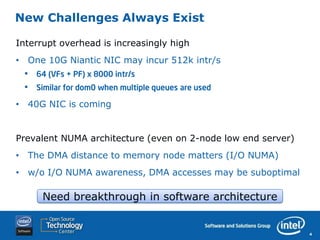

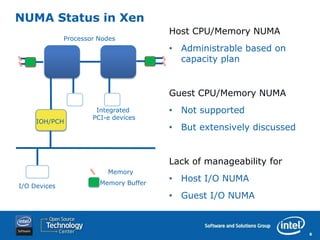

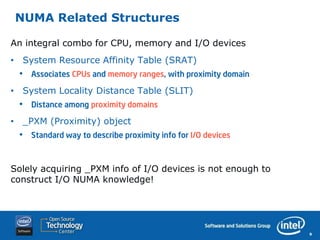

Excessive interrupts can hurt I/O scalability in Xen. To reduce interrupt overhead, the slides propose software interrupt throttling and interrupt-less NAPI. For NUMA, they propose exposing NUMA information to Xen so that host I/O becomes NUMA-aware, and making guest I/O NUMA-aware by constructing _PXM methods and extending device assignment policies.
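The idea behind software interrupt throttling can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the mechanism used in Xen or in the slides: the class `InterruptThrottle`, its `min_interval_us` parameter, and the timestamps are all hypothetical names chosen for illustration. Events that arrive too soon after the last delivered interrupt are coalesced instead of raising a new one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InterruptThrottle:
    """Hypothetical model: deliver at most one interrupt per min_interval_us."""

    min_interval_us: int                 # assumed minimum gap between interrupts
    last_fire_us: Optional[int] = None   # time of the last delivered interrupt
    pending: int = 0                     # events coalesced since that interrupt
    delivered: int = 0                   # interrupts actually delivered

    def on_event(self, now_us: int) -> bool:
        """Record one I/O event; return True if an interrupt is delivered now."""
        self.pending += 1
        first = self.last_fire_us is None
        if first or now_us - self.last_fire_us >= self.min_interval_us:
            # Enough time has passed: deliver one interrupt covering all
            # pending events, then start a new coalescing window.
            self.last_fire_us = now_us
            self.pending = 0
            self.delivered += 1
            return True
        # Too soon: keep the event pending and suppress the interrupt.
        return False


if __name__ == "__main__":
    throttle = InterruptThrottle(min_interval_us=100)
    # One event every 10 us for 1 ms, i.e. 100 events in total.
    for ts in range(0, 1000, 10):
        throttle.on_event(ts)
    print(throttle.delivered)  # 10 interrupts for 100 events
```

In this model the events left in `pending` after the last interrupt are never flushed. A real throttle would need a timer or a polling pass (as in NAPI-style processing) to pick them up, which the sketch leaves out.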