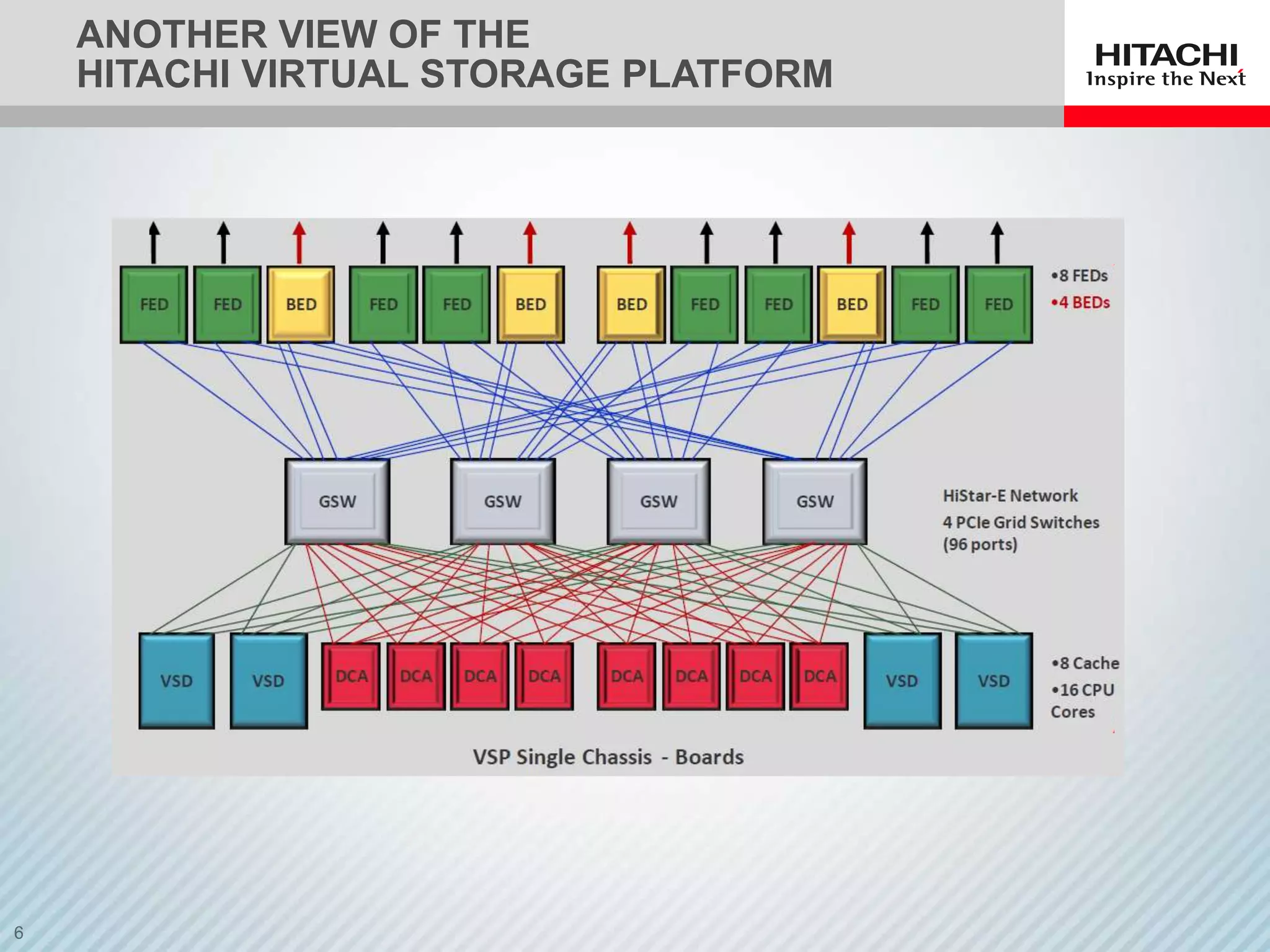

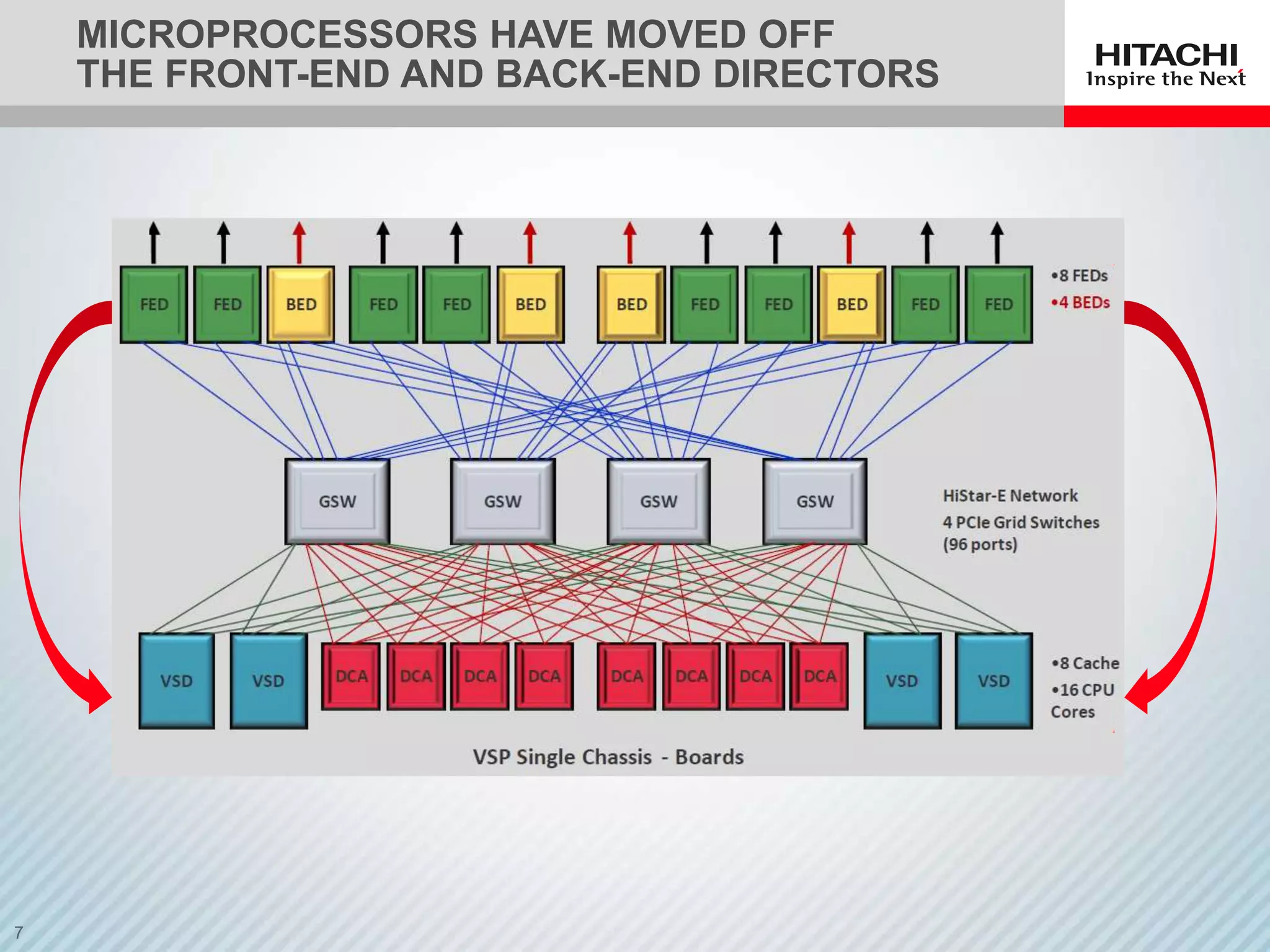

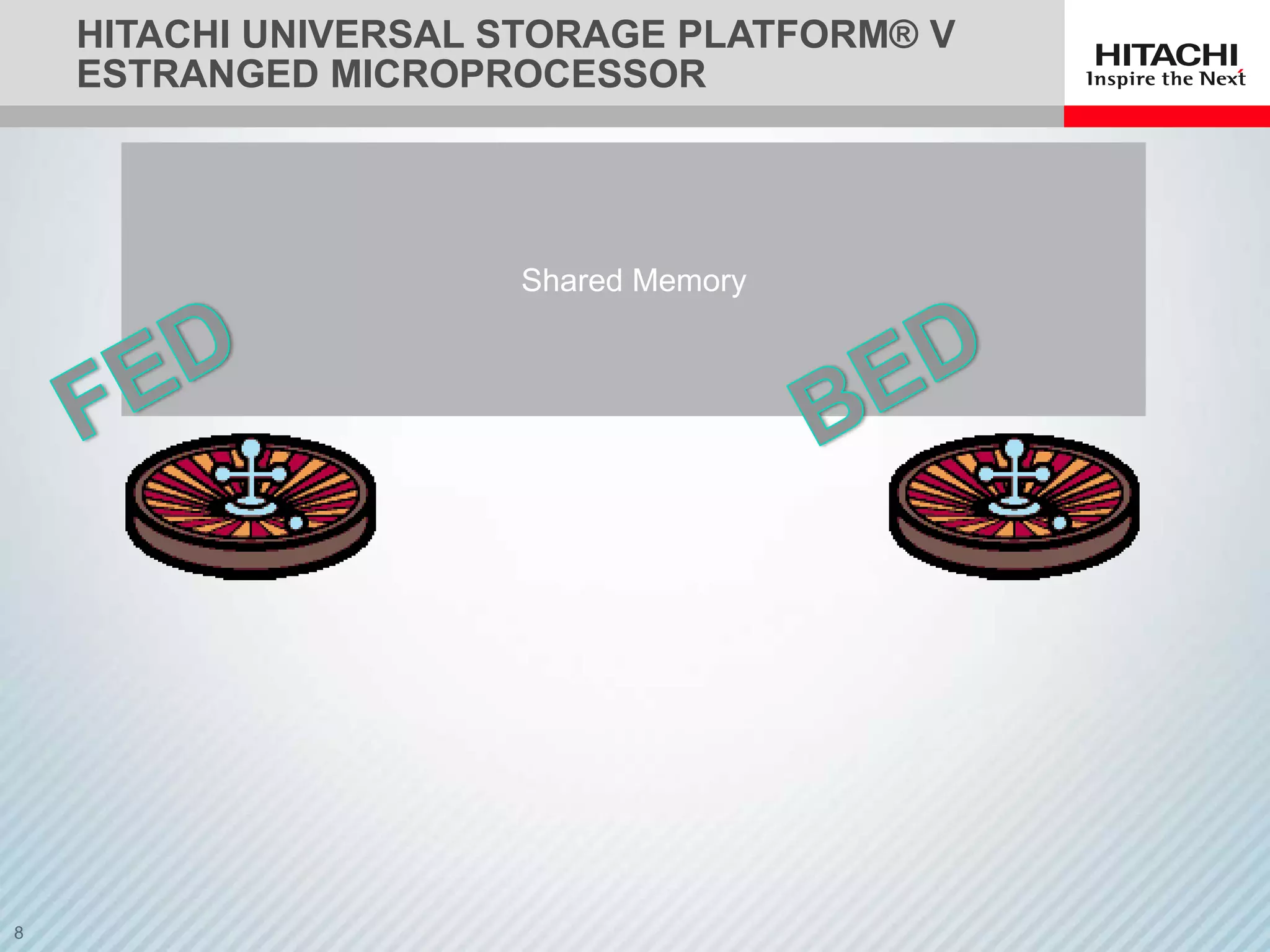

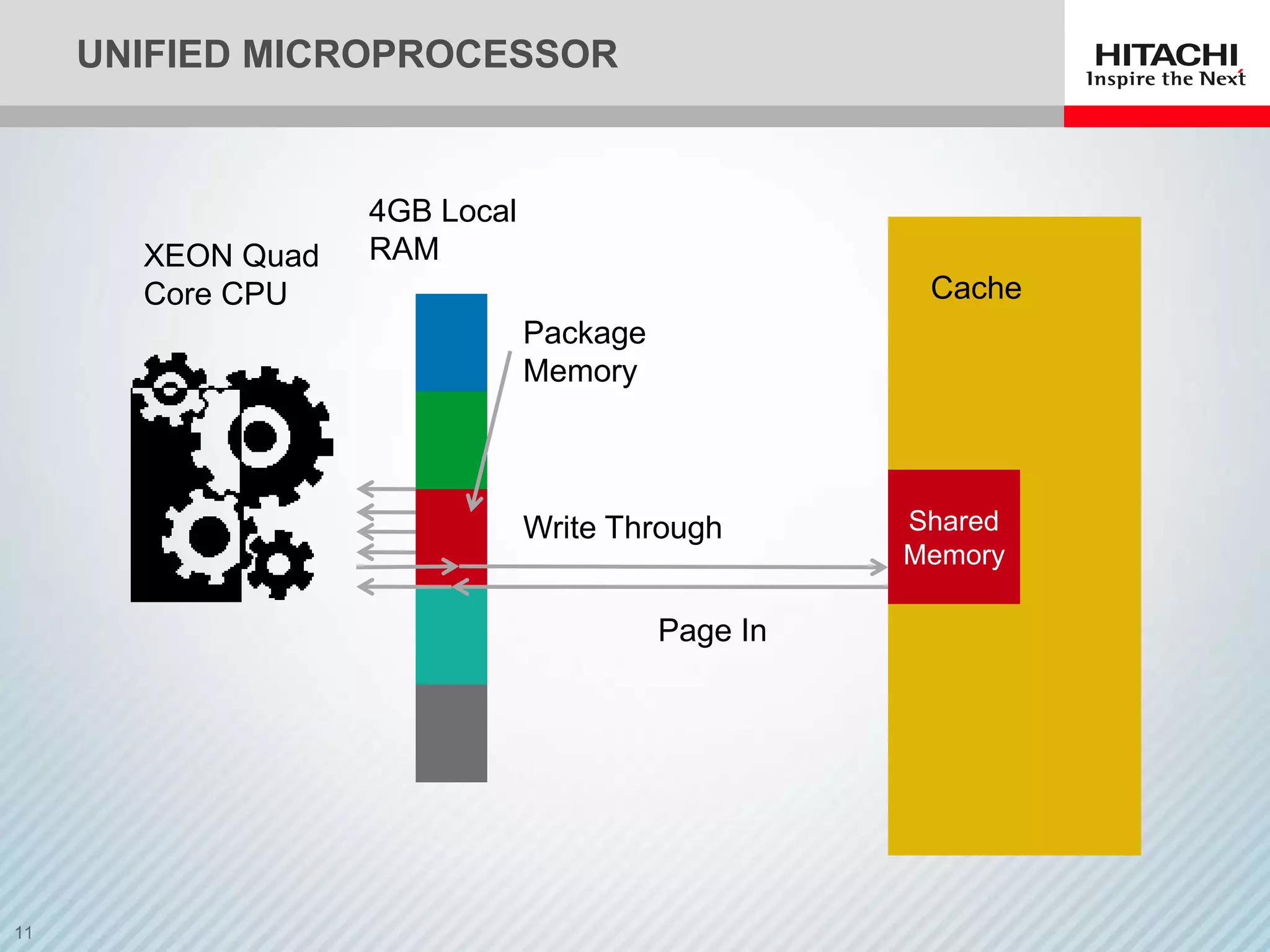

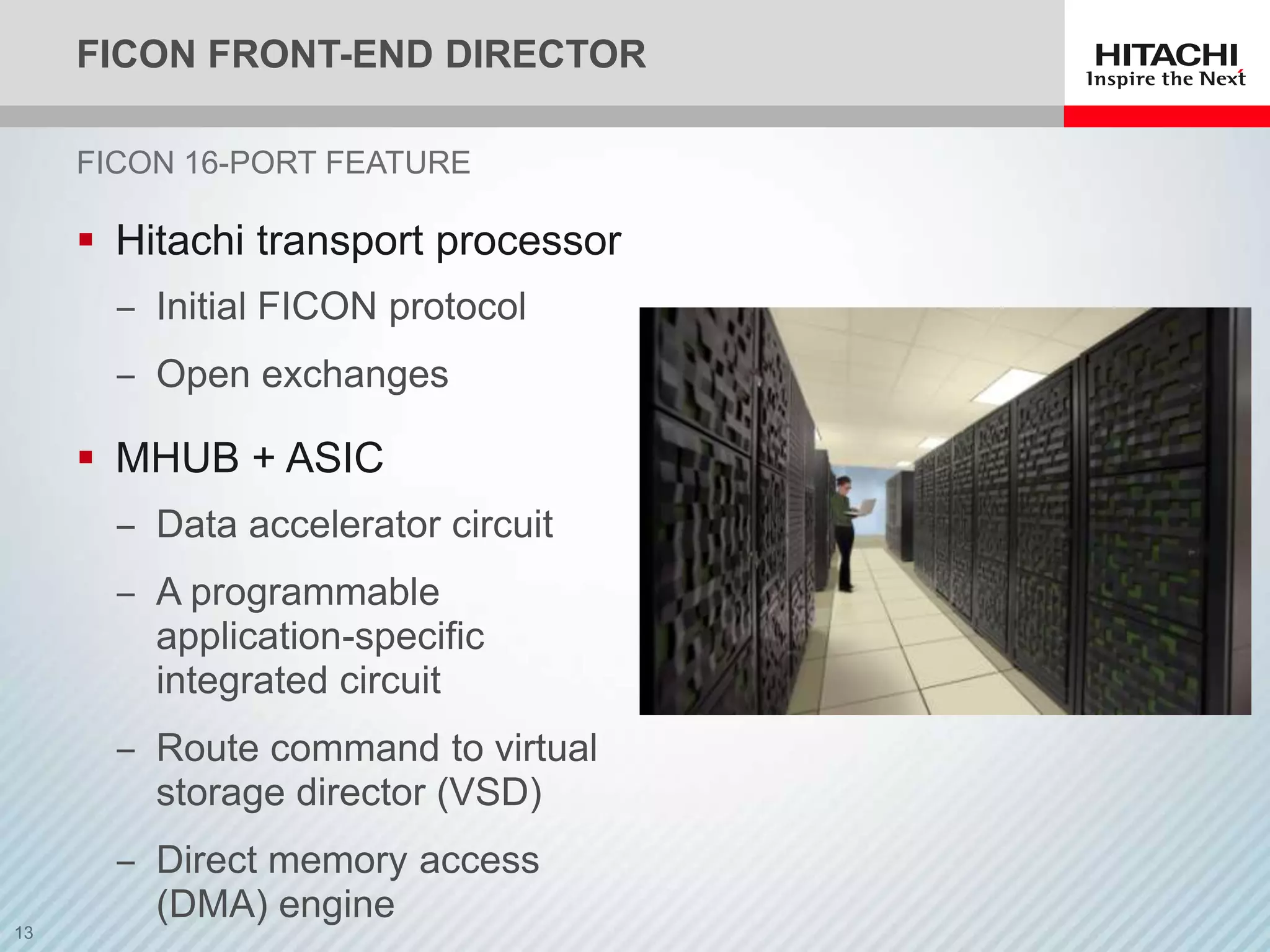

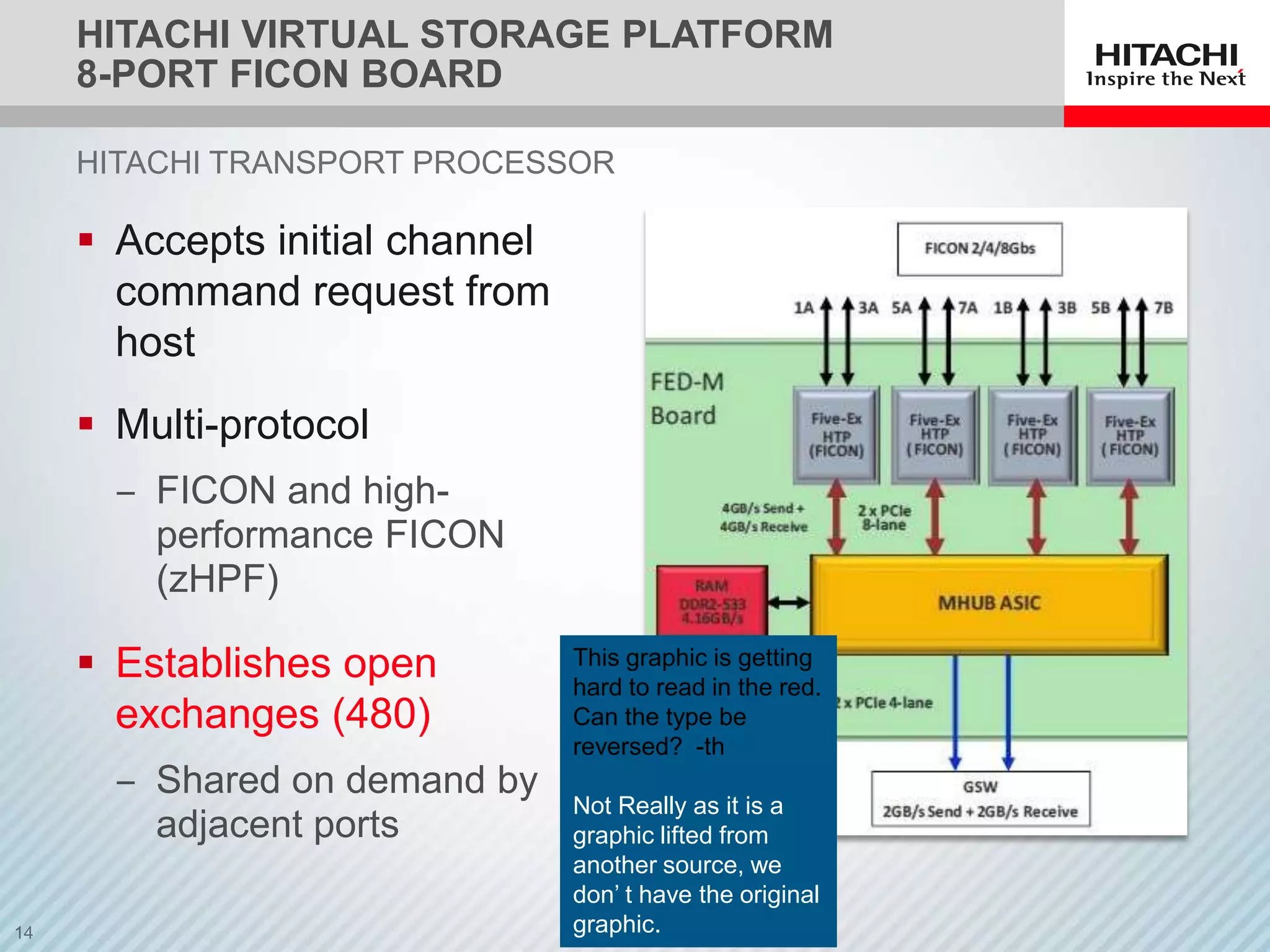

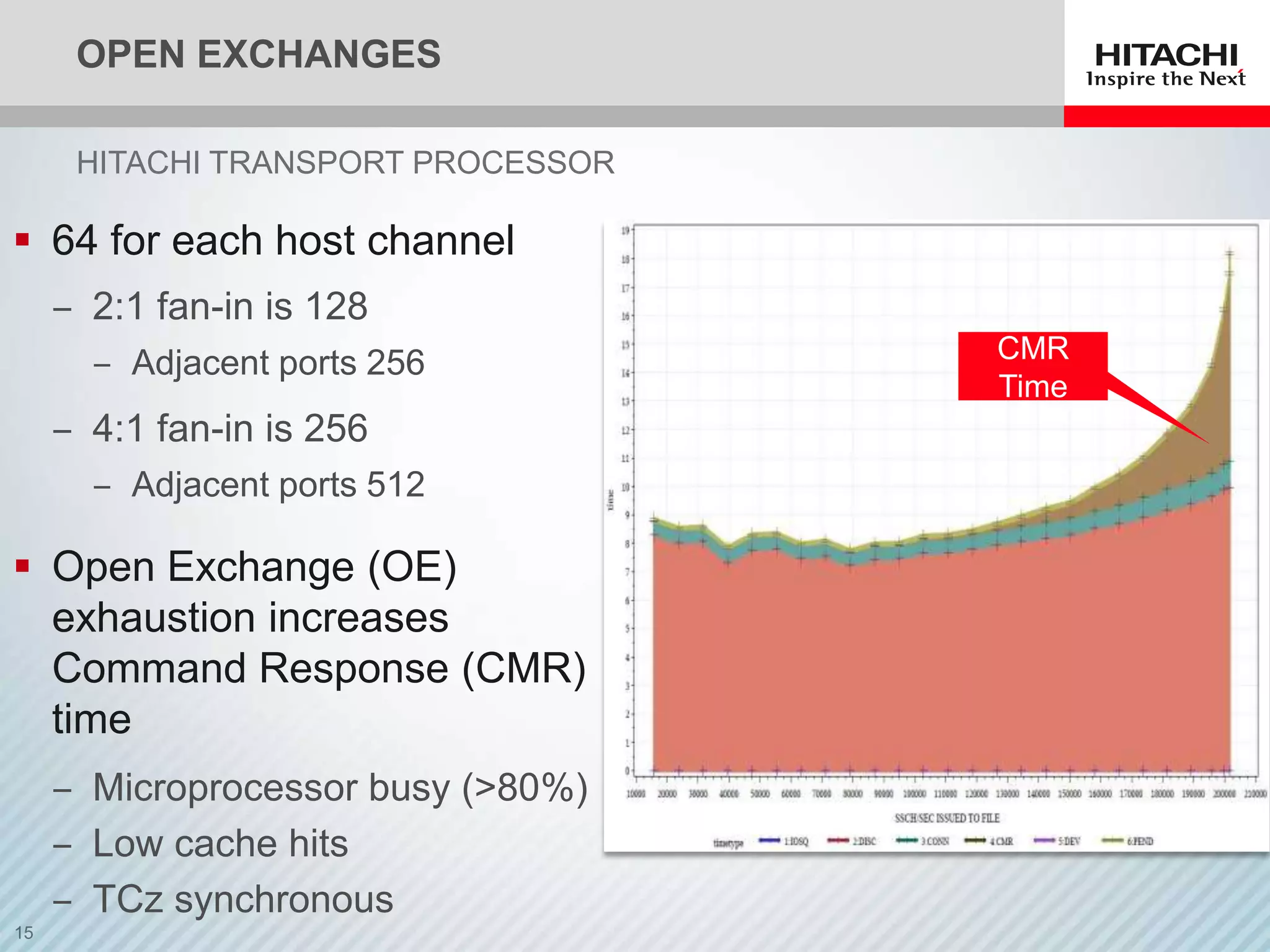

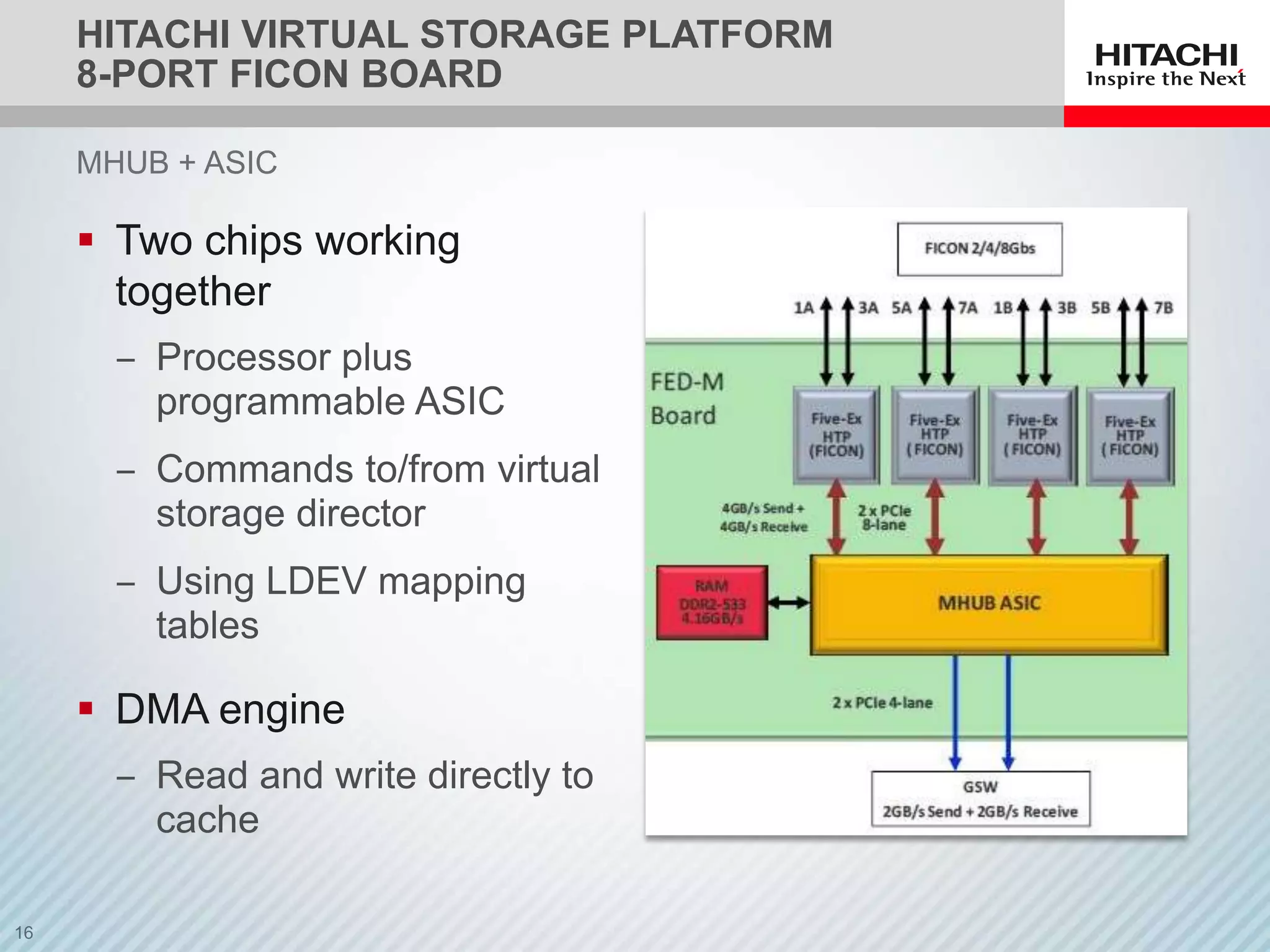

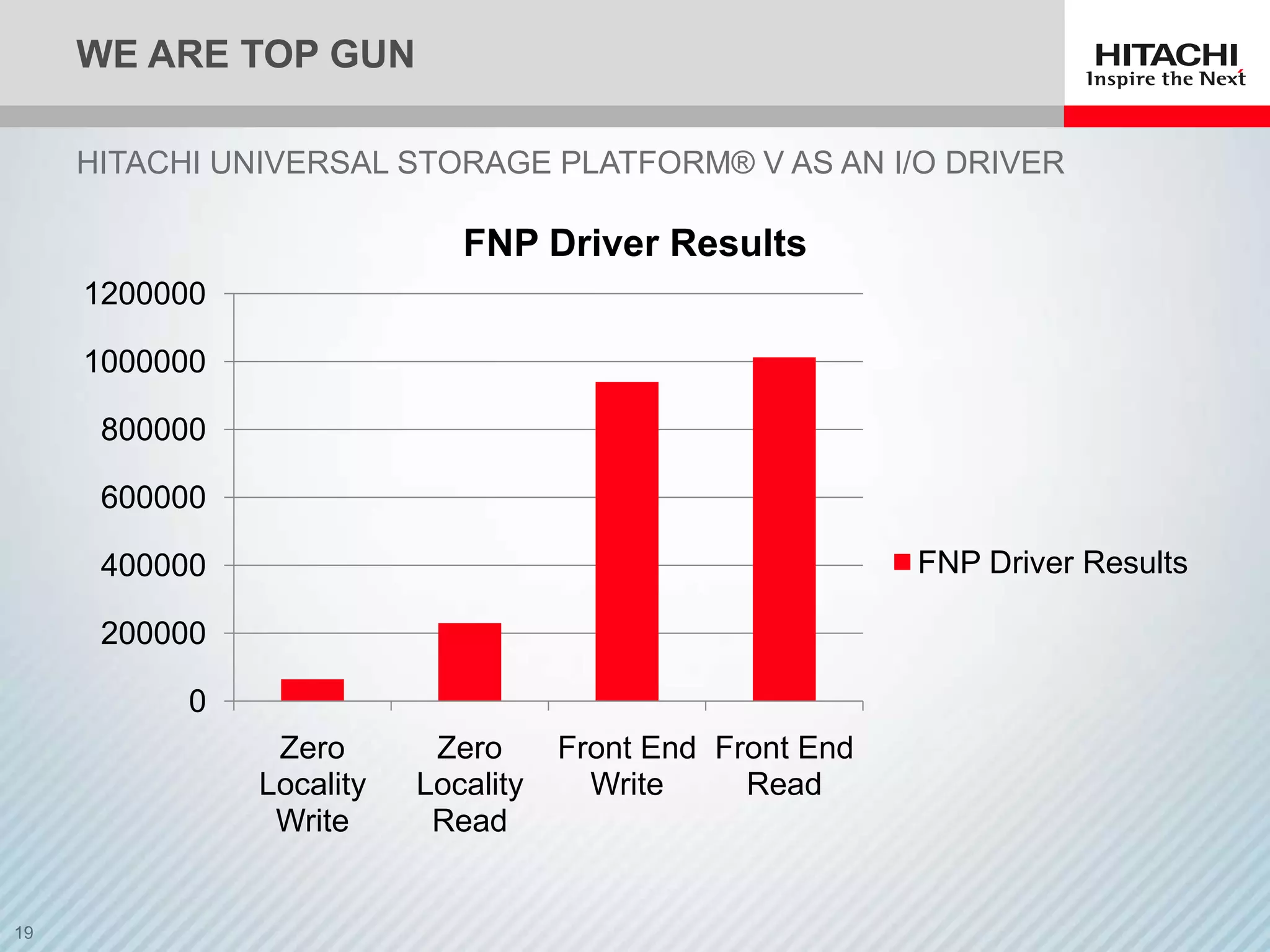

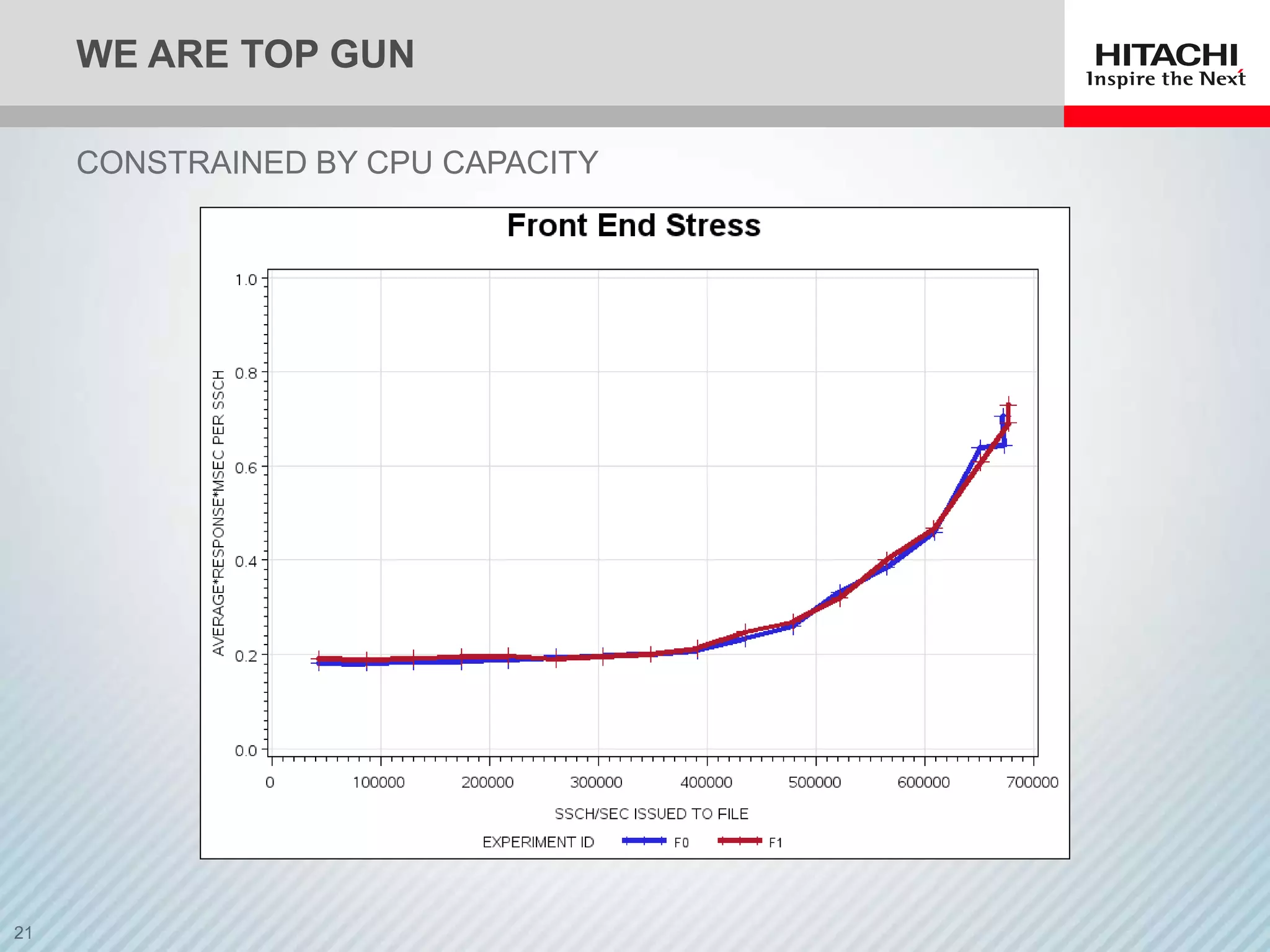

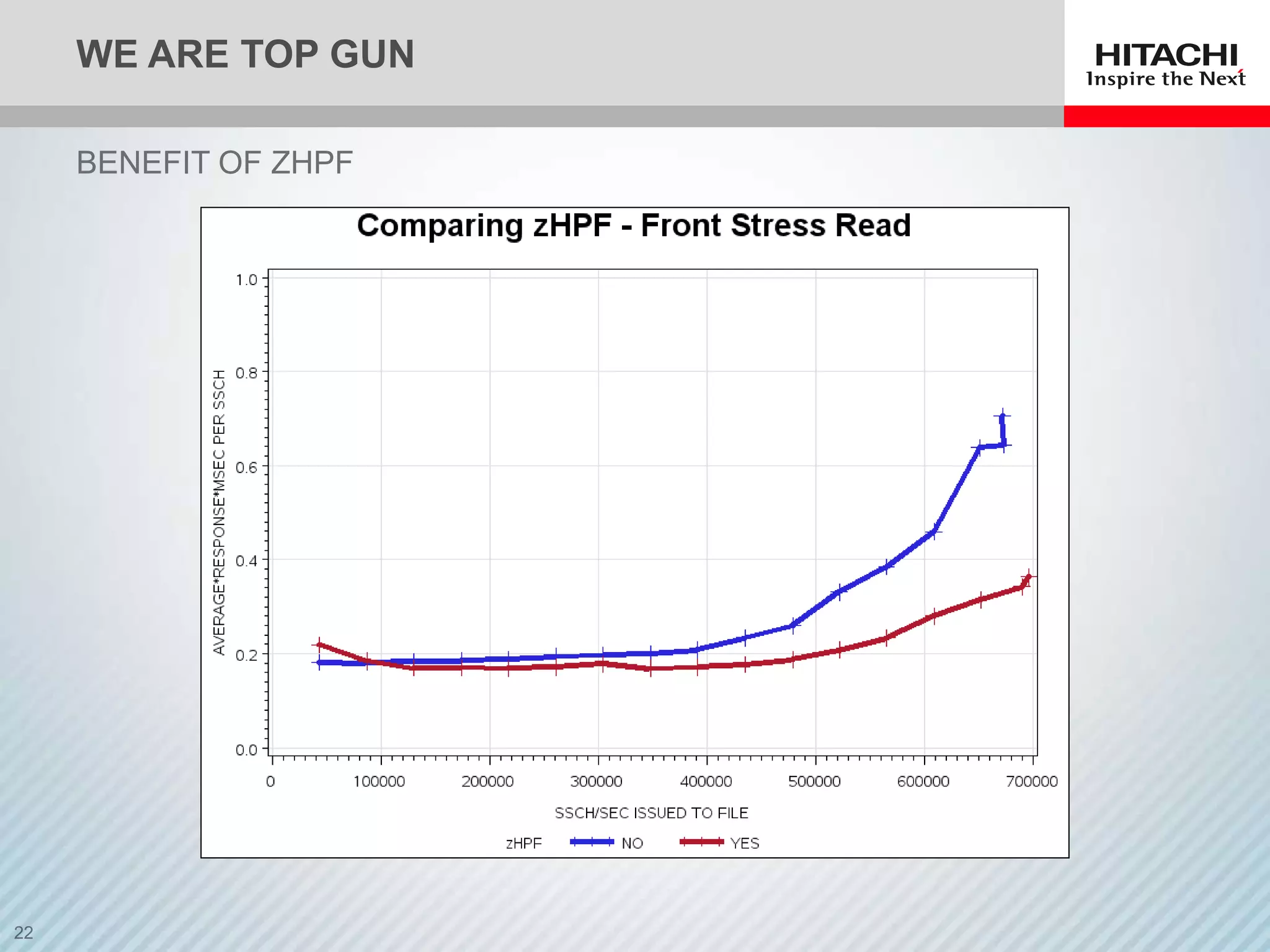

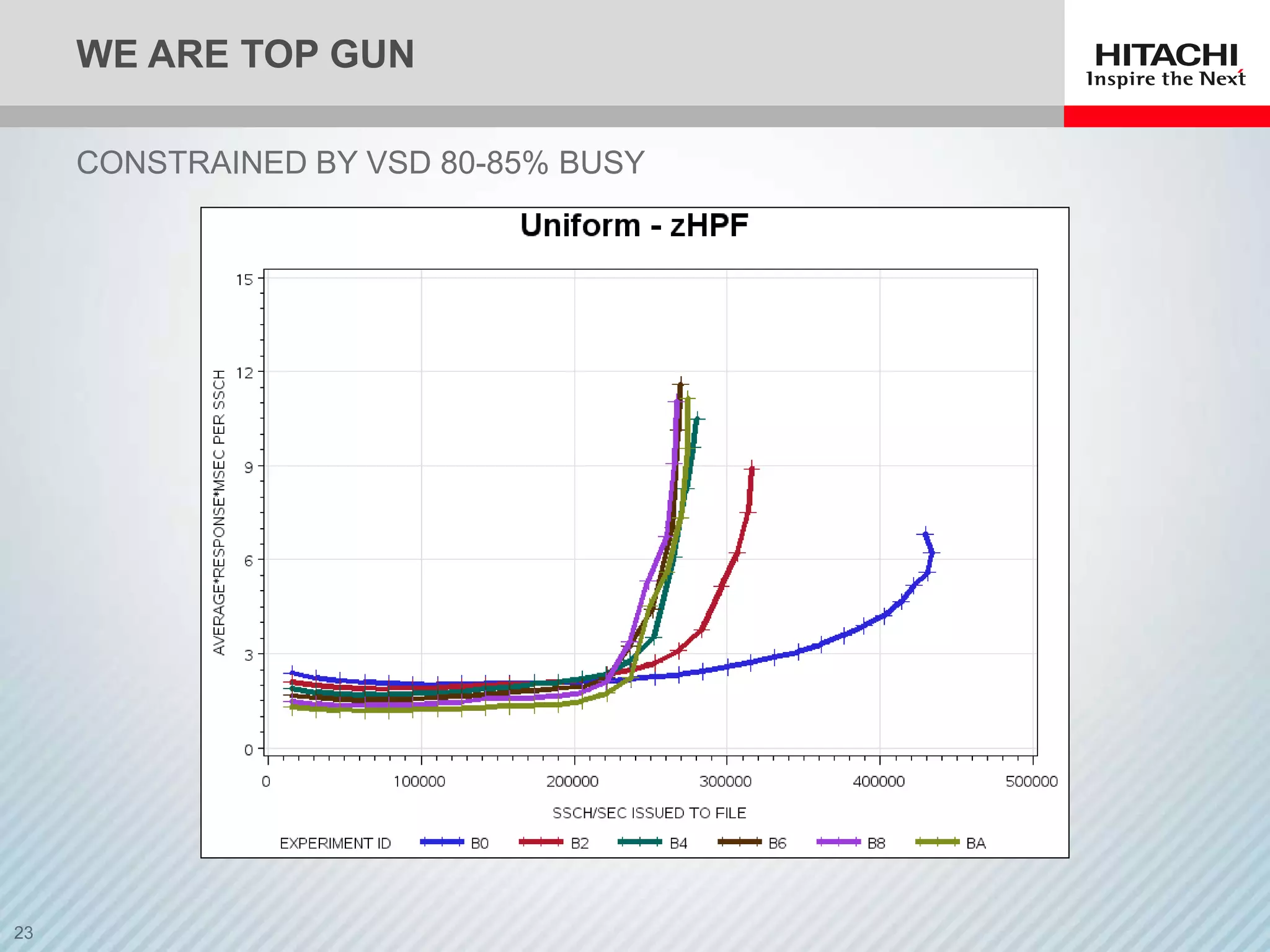

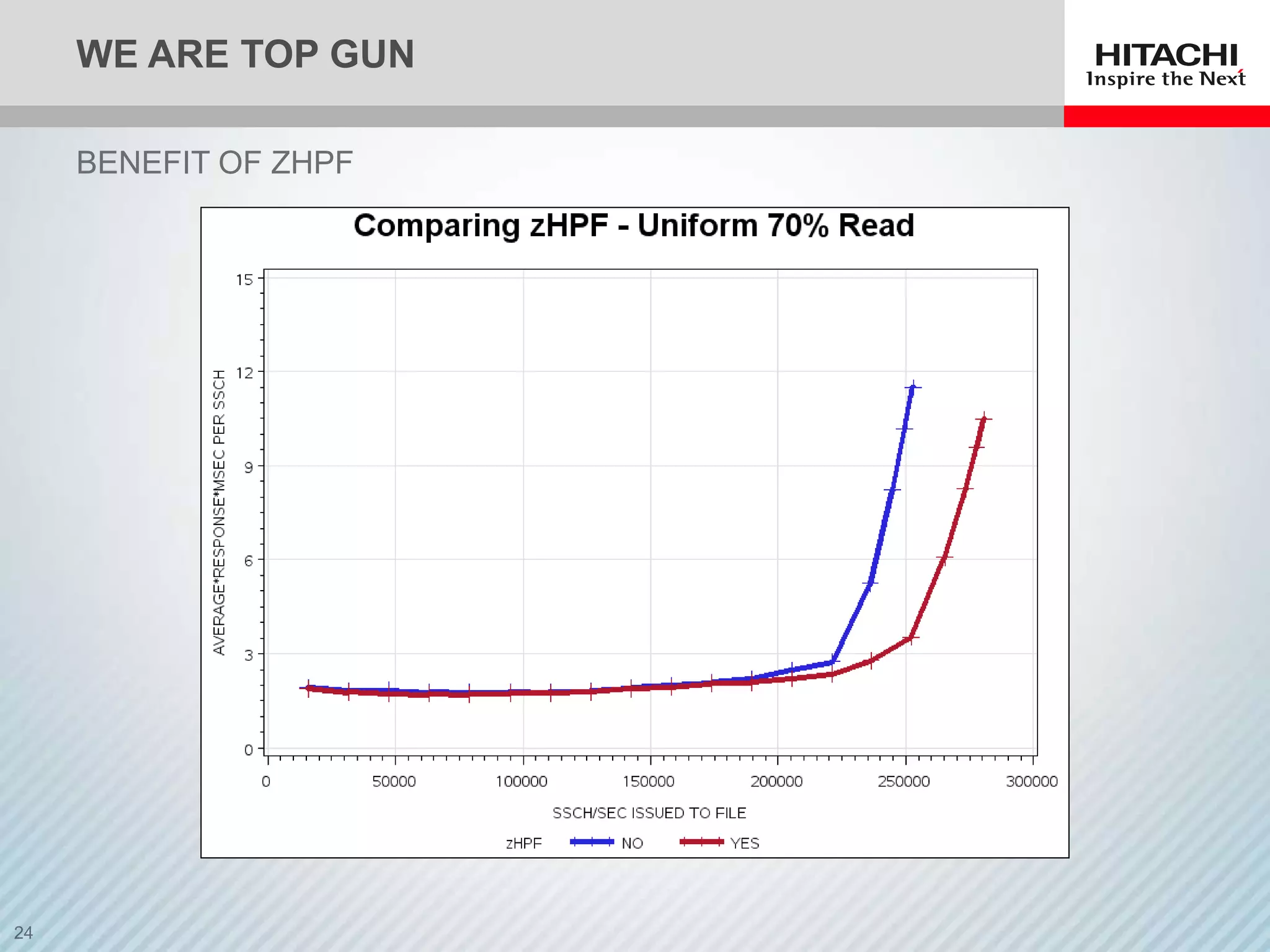

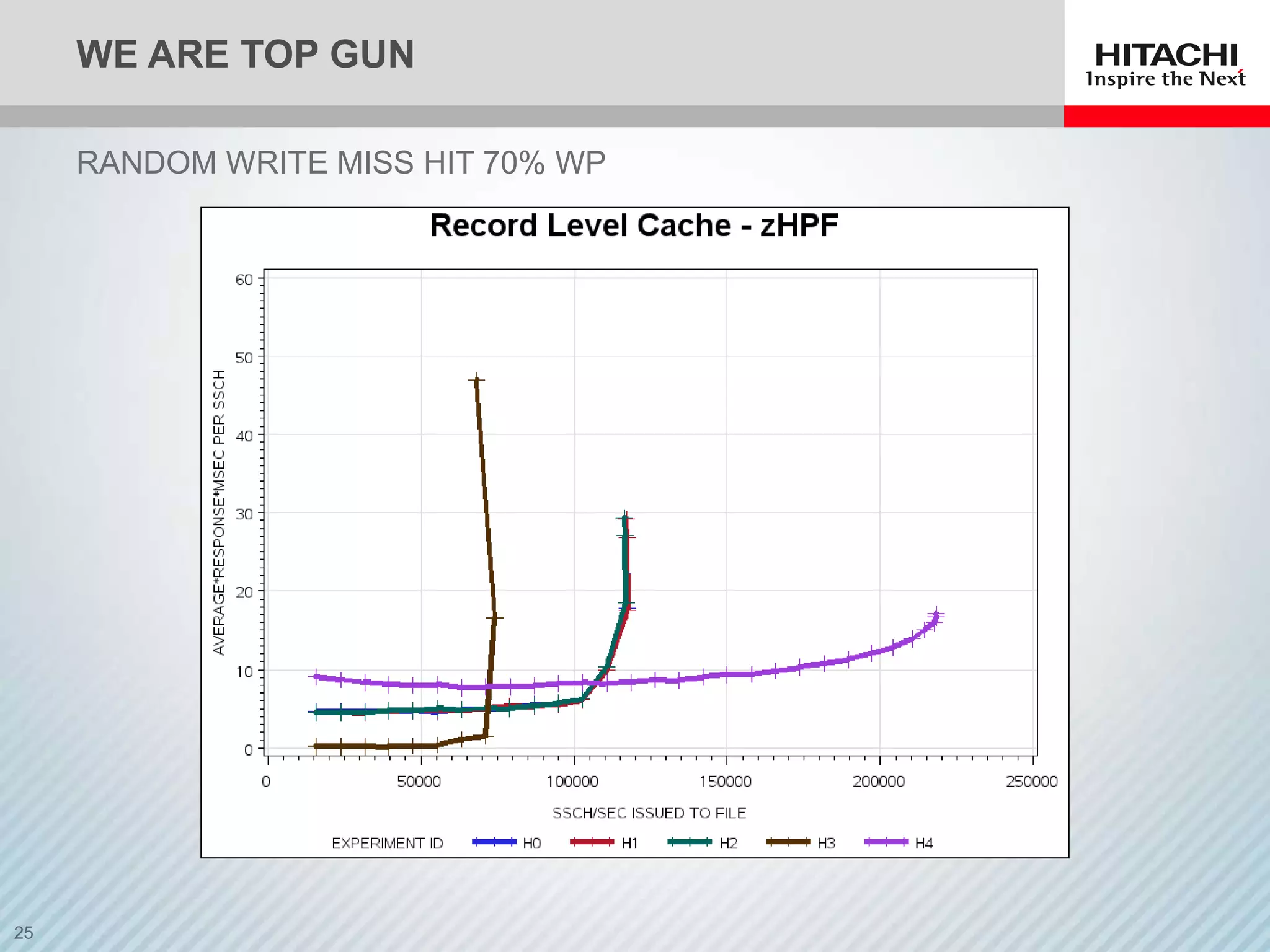

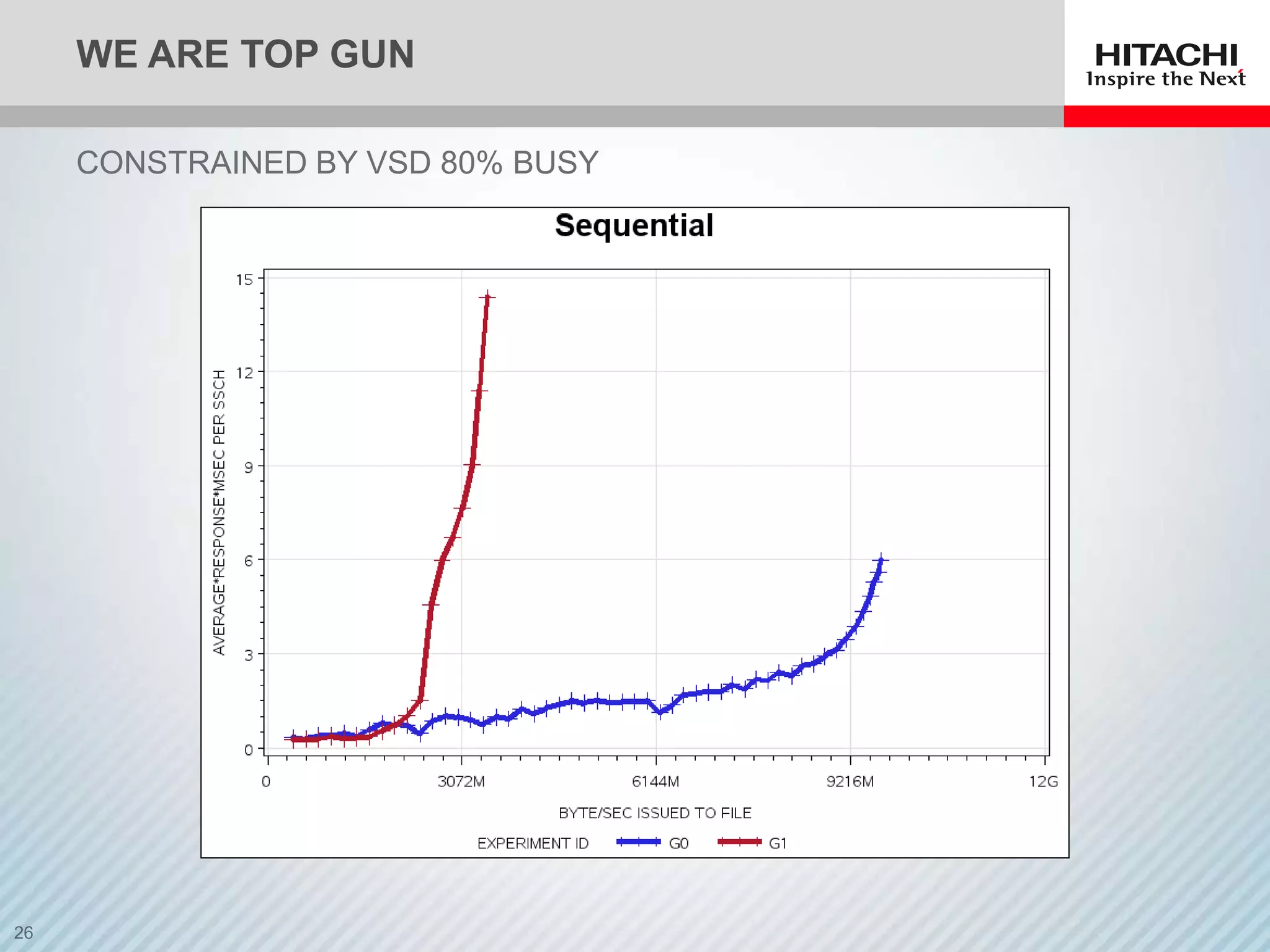

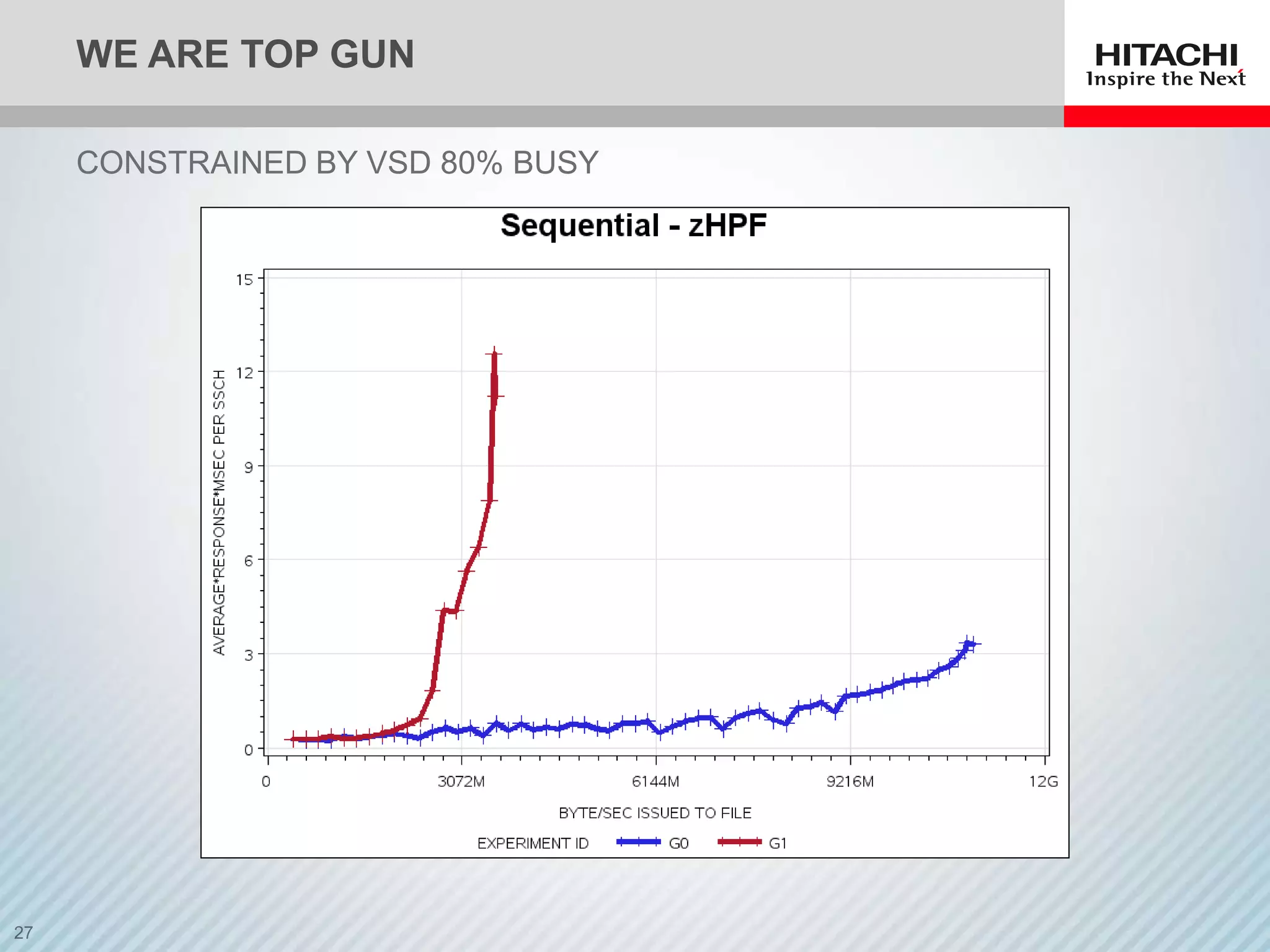

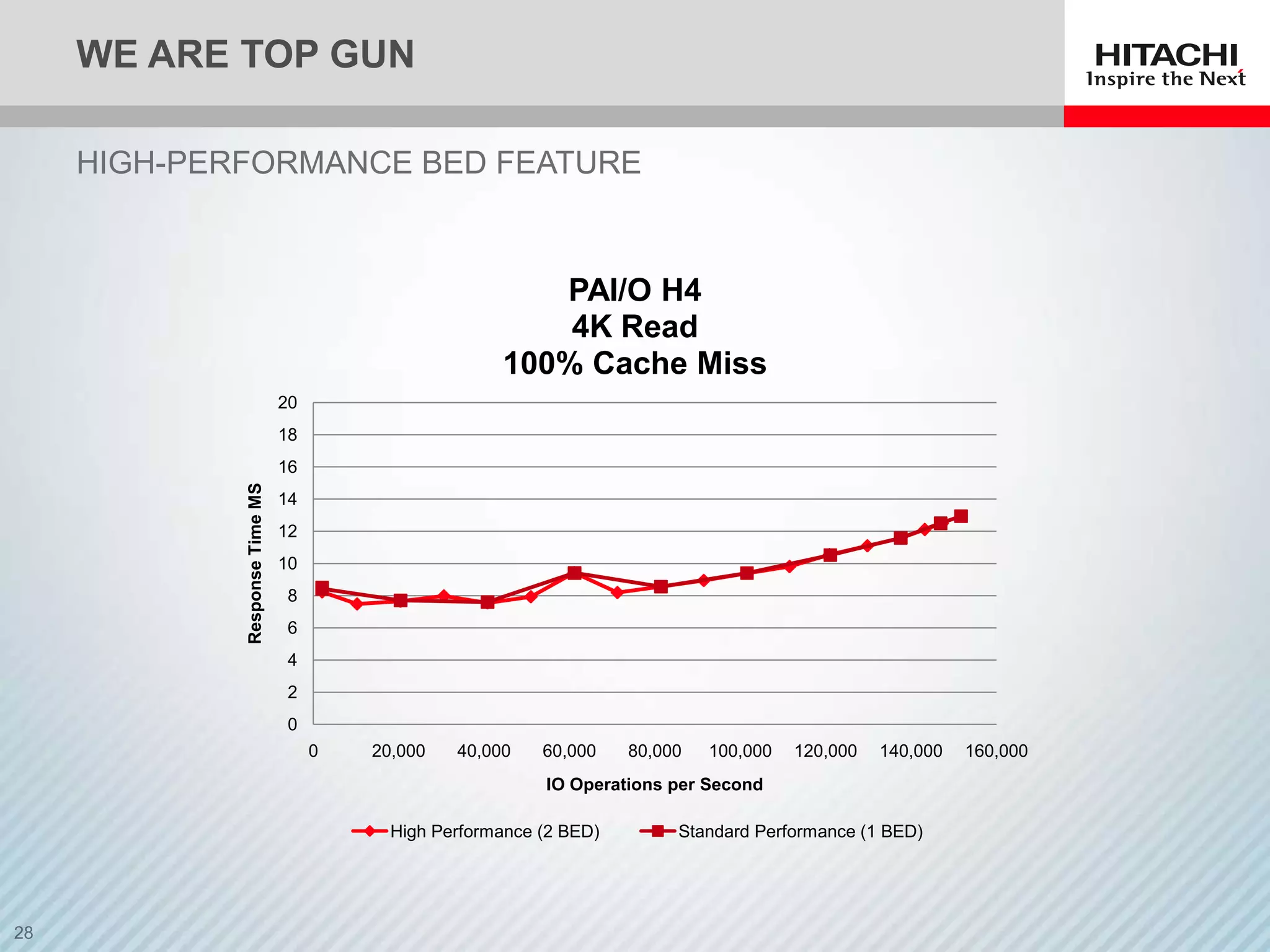

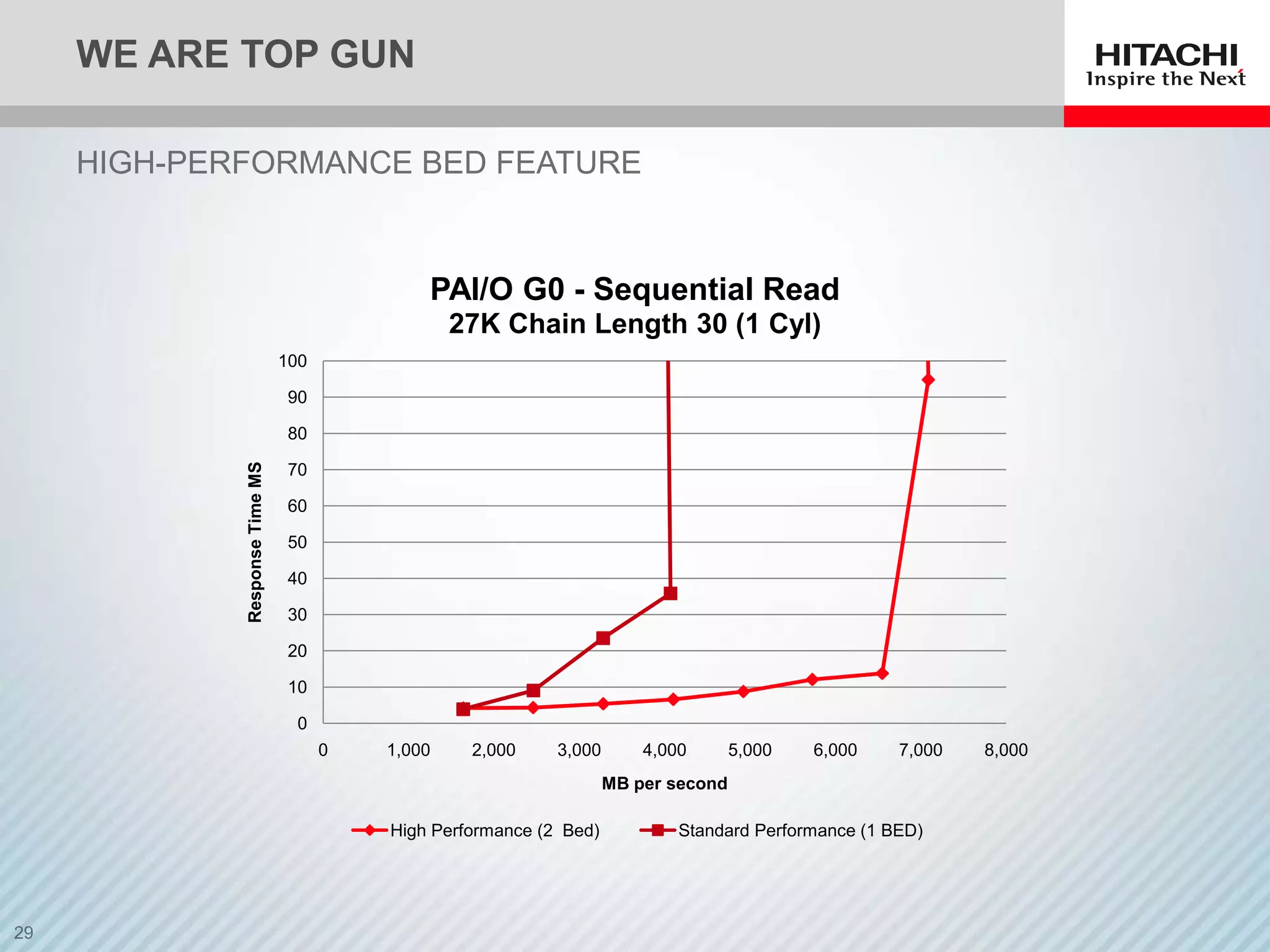

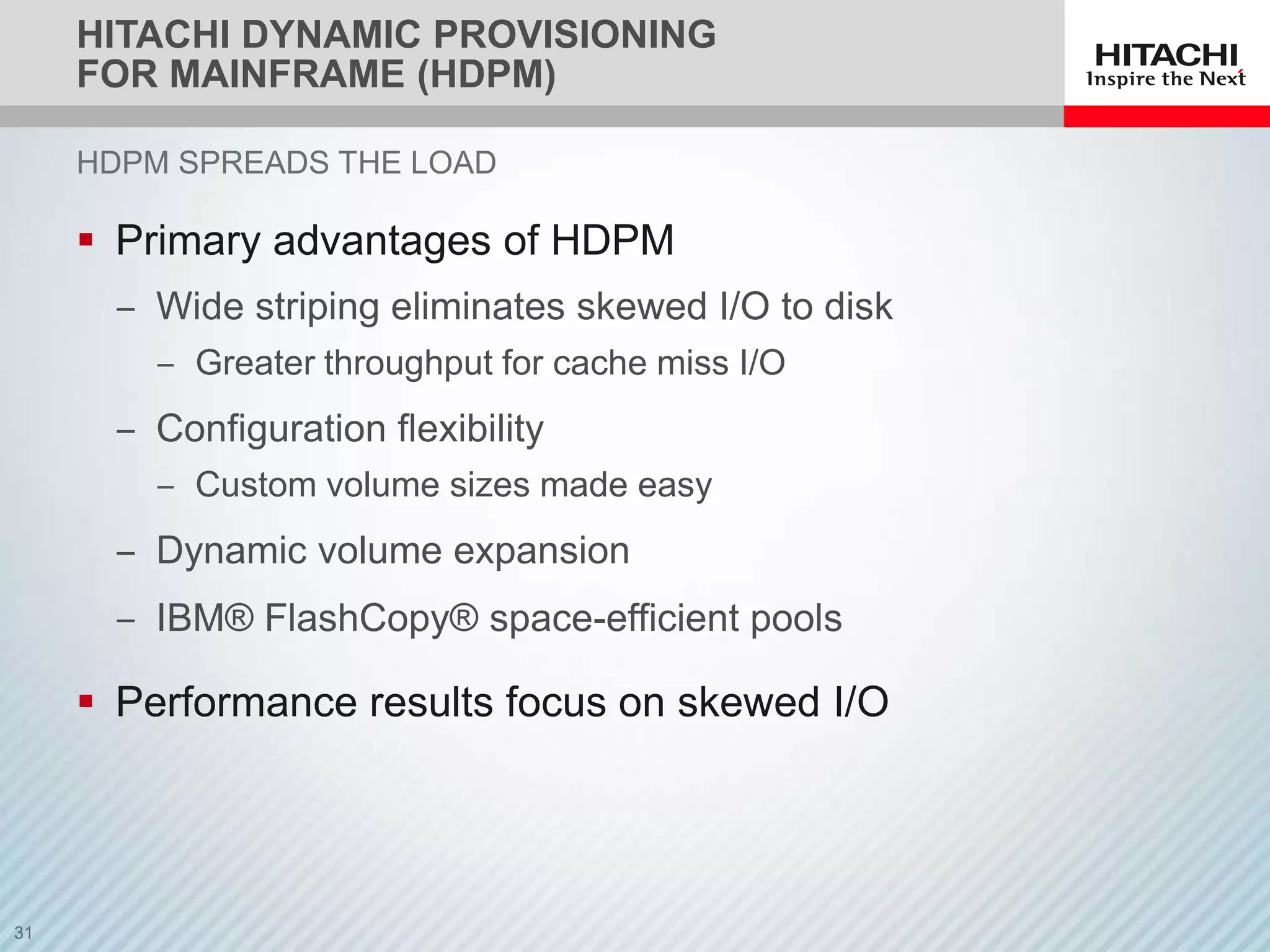

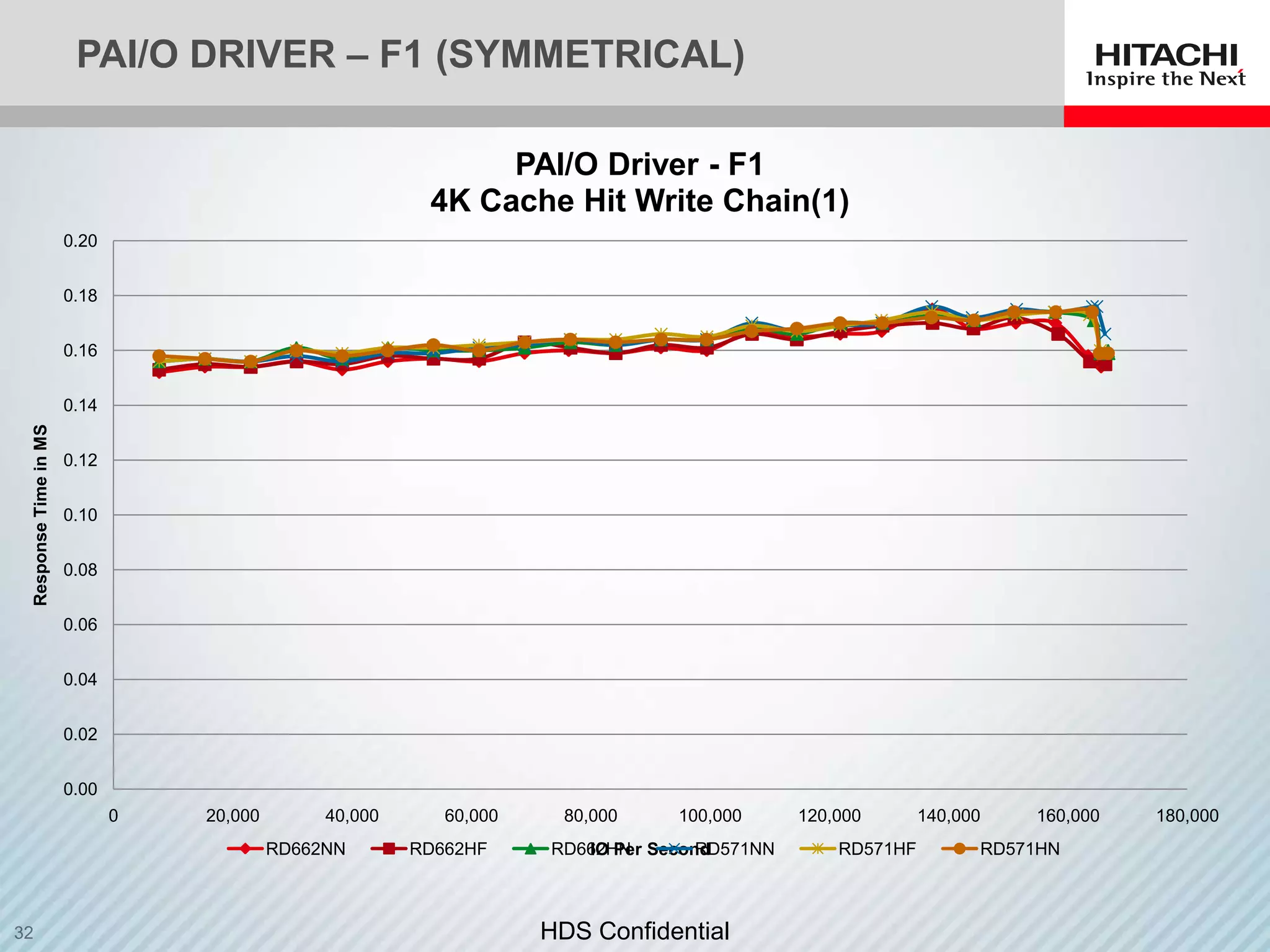

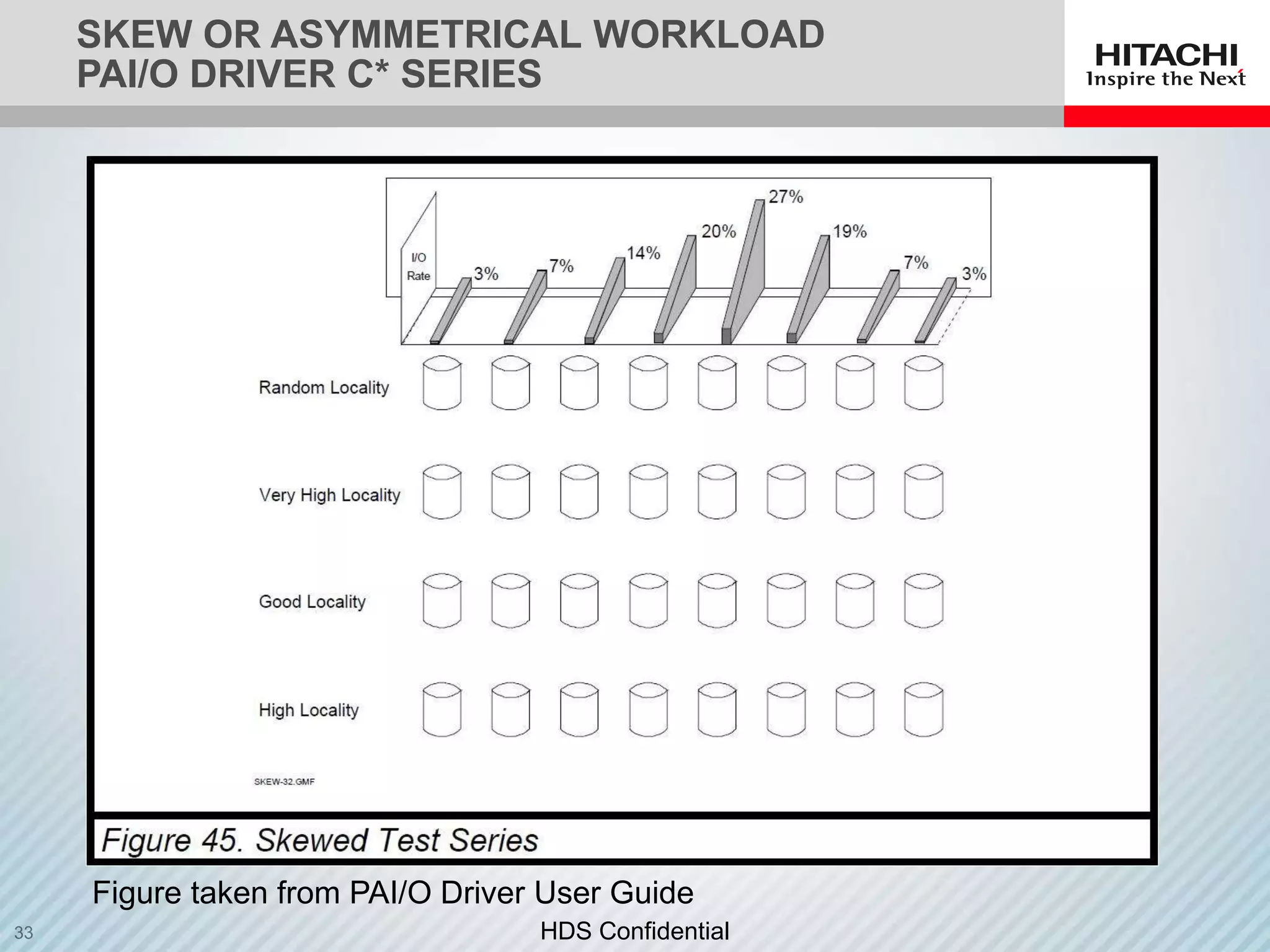

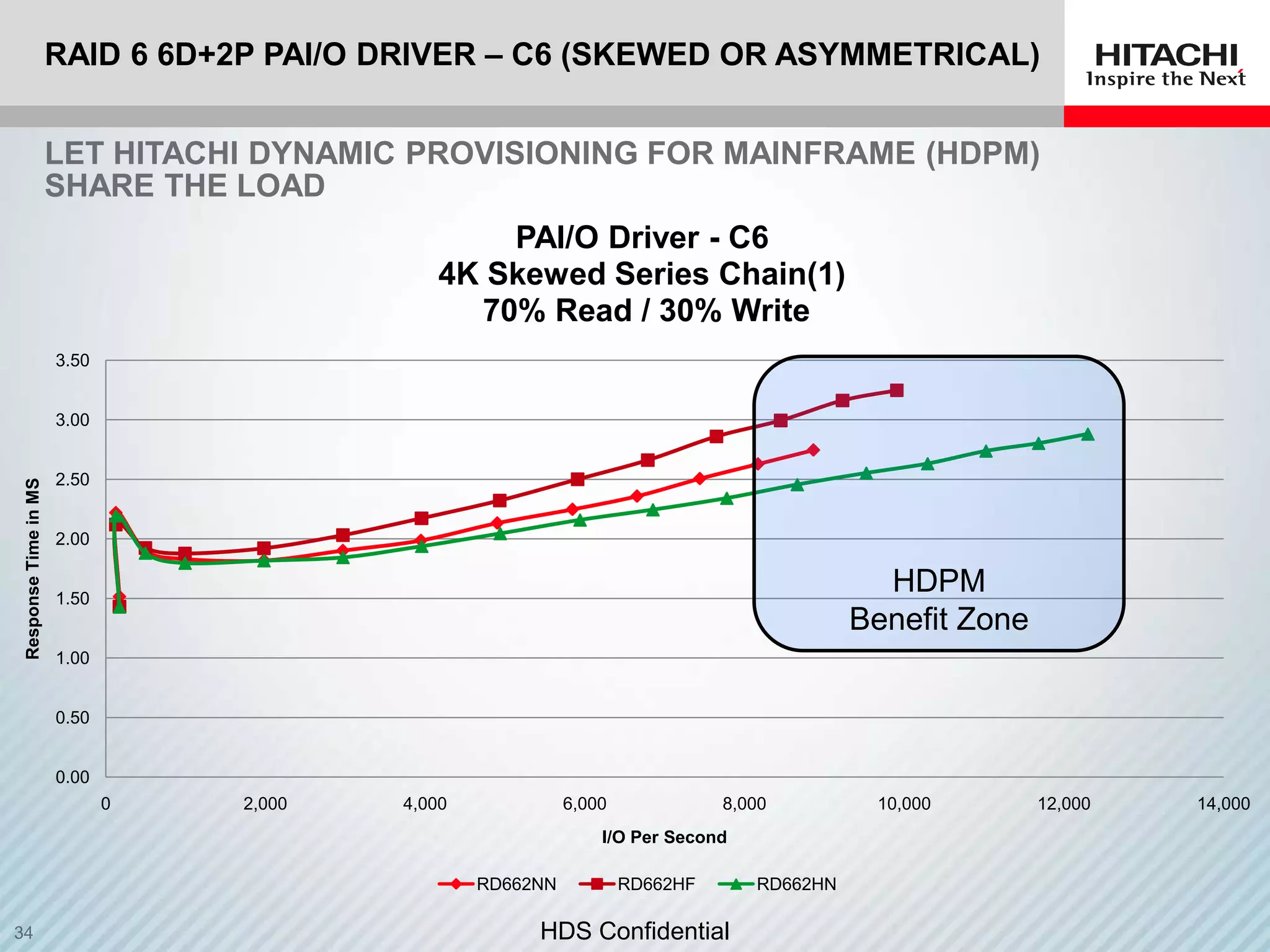

The document discusses the performance advantages of the Hitachi Virtual Storage Platform (VSP) in mainframe environments, covering its architecture and testing results. It outlines how features such as unified microprocessors and Hitachi Dynamic Provisioning improve performance and optimize I/O operations. It also lists upcoming WebTech sessions on topics such as IT agility and best practices for upgrading systems.