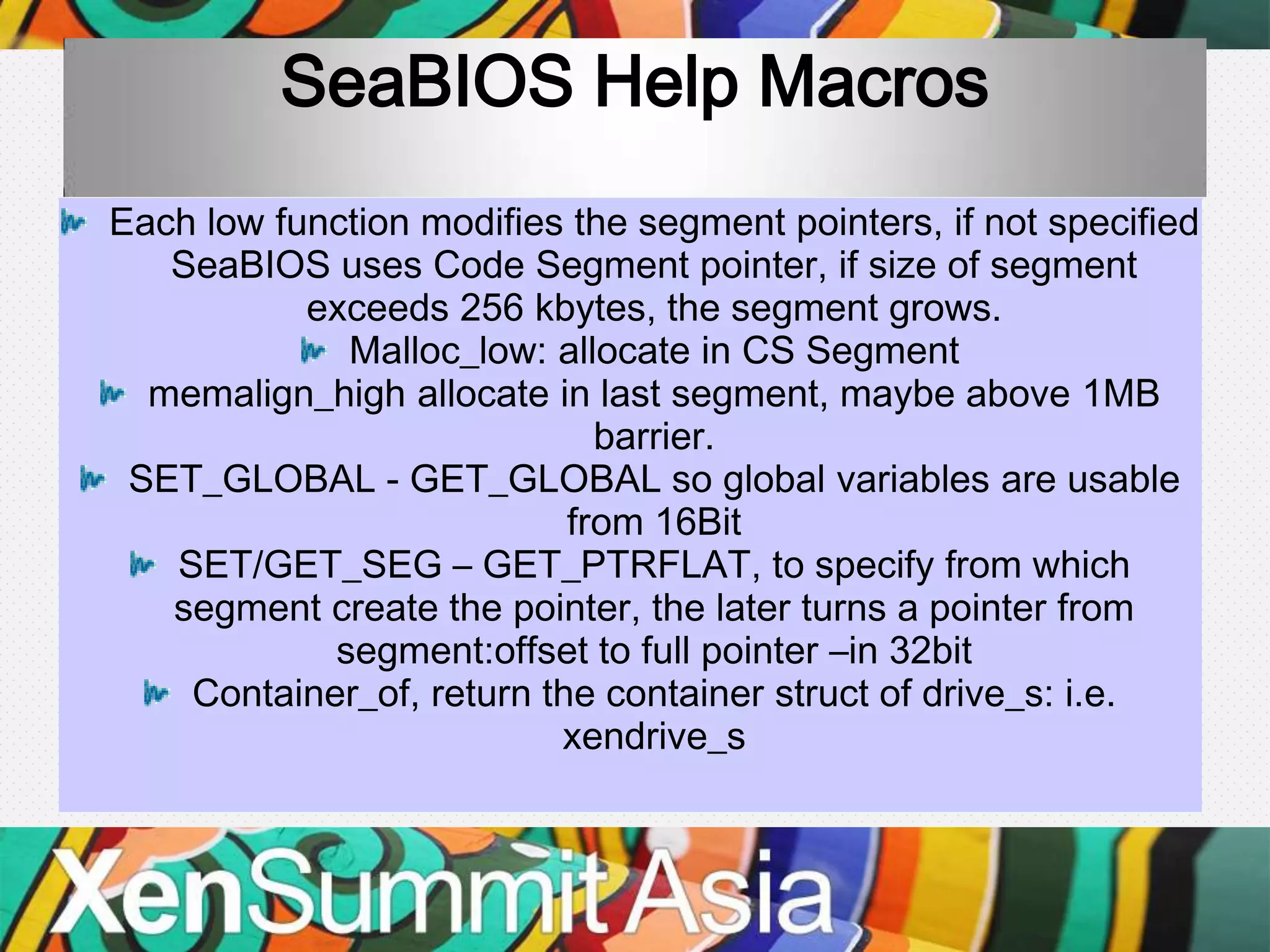

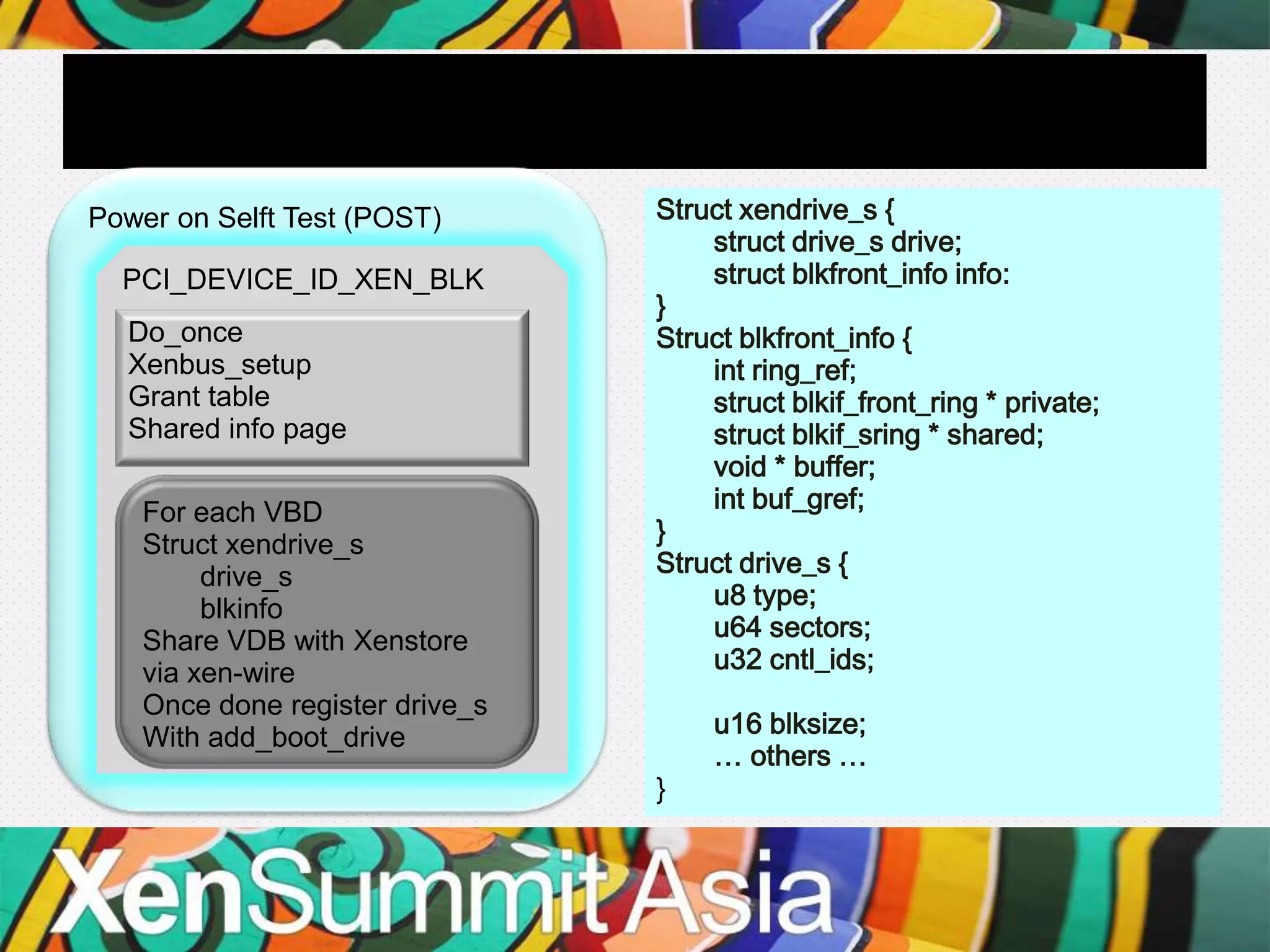

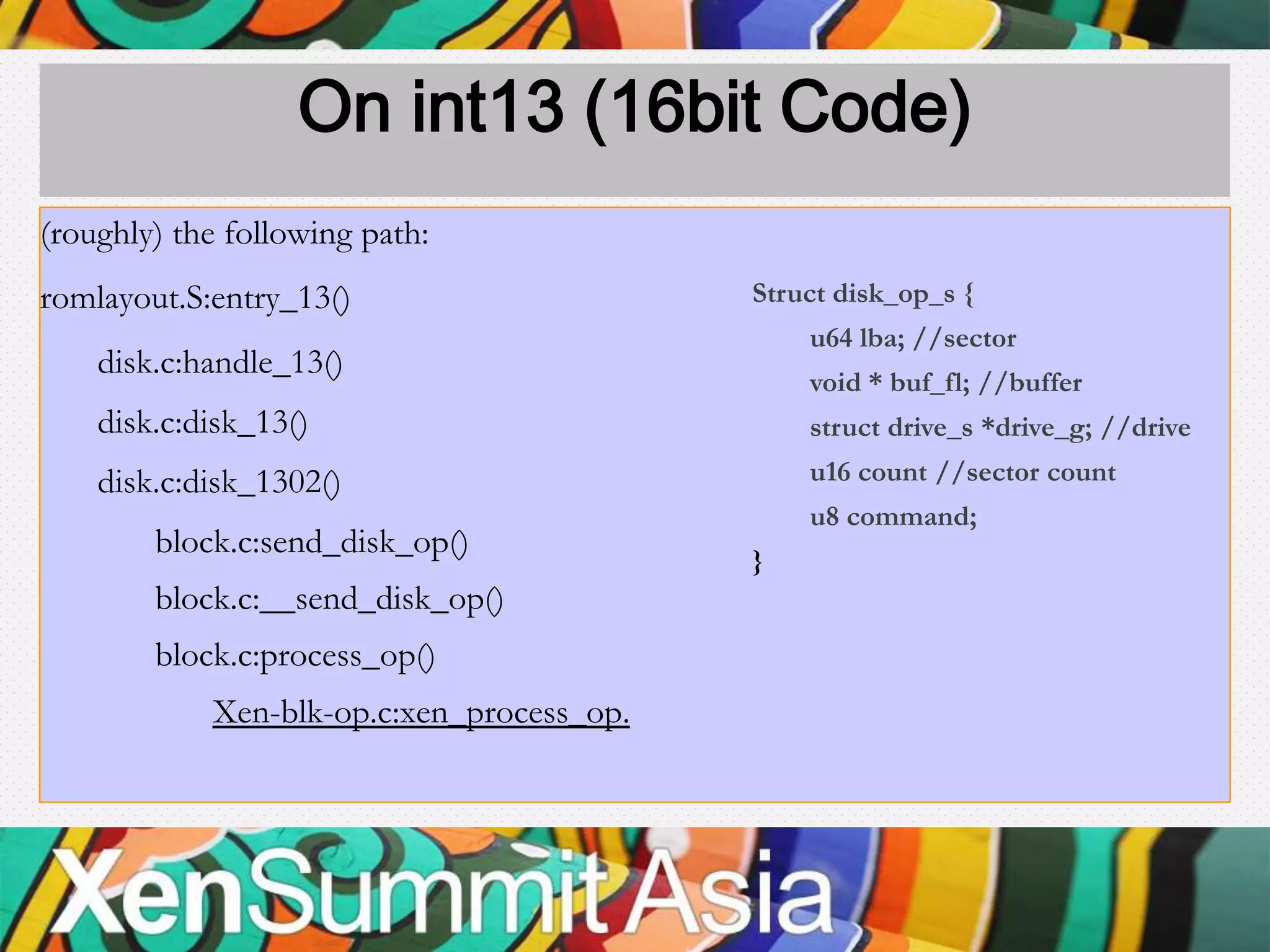

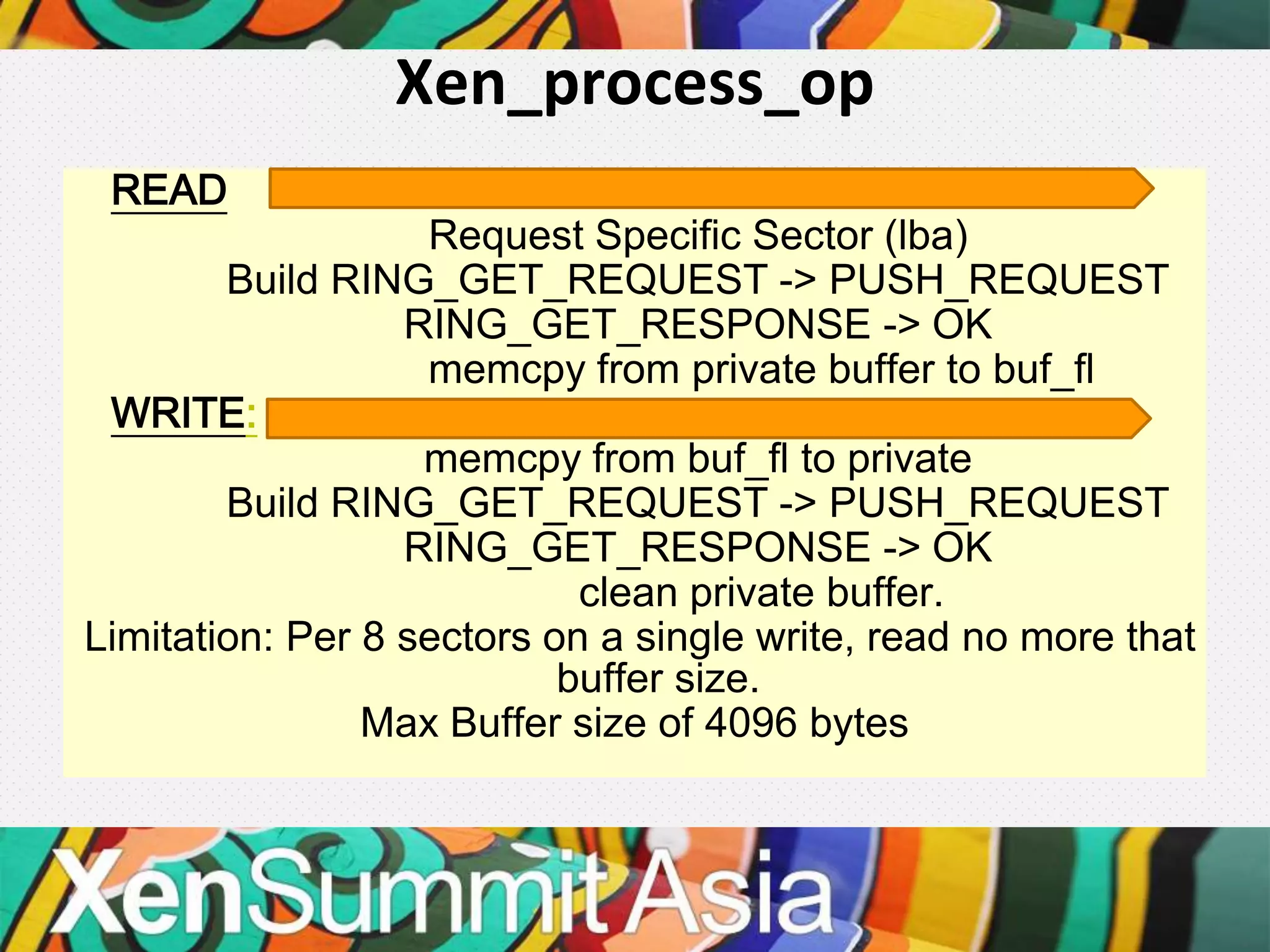

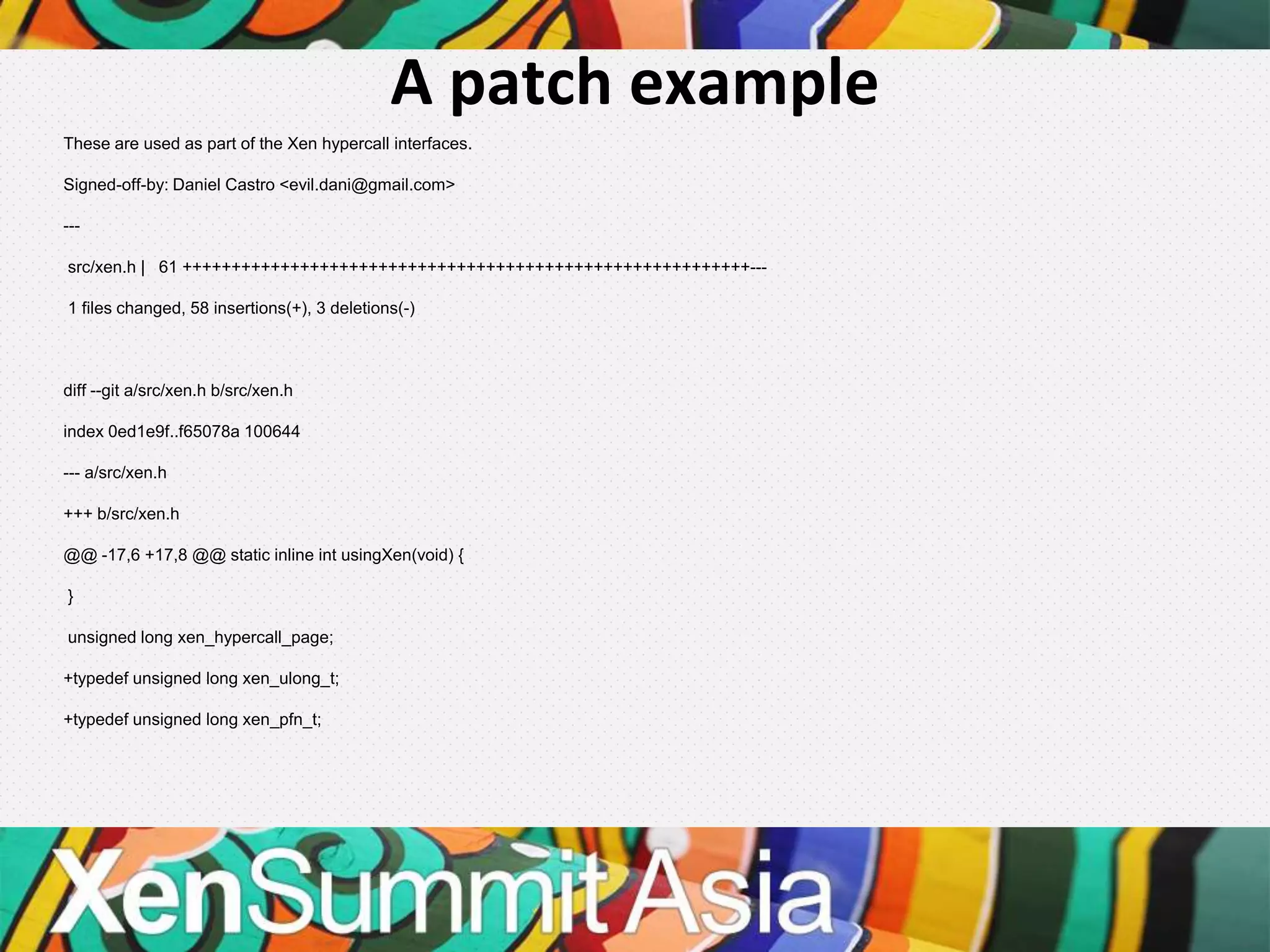

The document discusses the implementation of prototype paravirtualized (PV) drivers in SeaBIOS, an open-source legacy BIOS used with upstream QEMU for HVM guests. It outlines the SeaBIOS components involved, including the initialization code and the hypercall interfaces, and describes the workflow for block device operations. Future work includes avoiding the use of private buffers and possibly adding network PV drivers with PXE support.