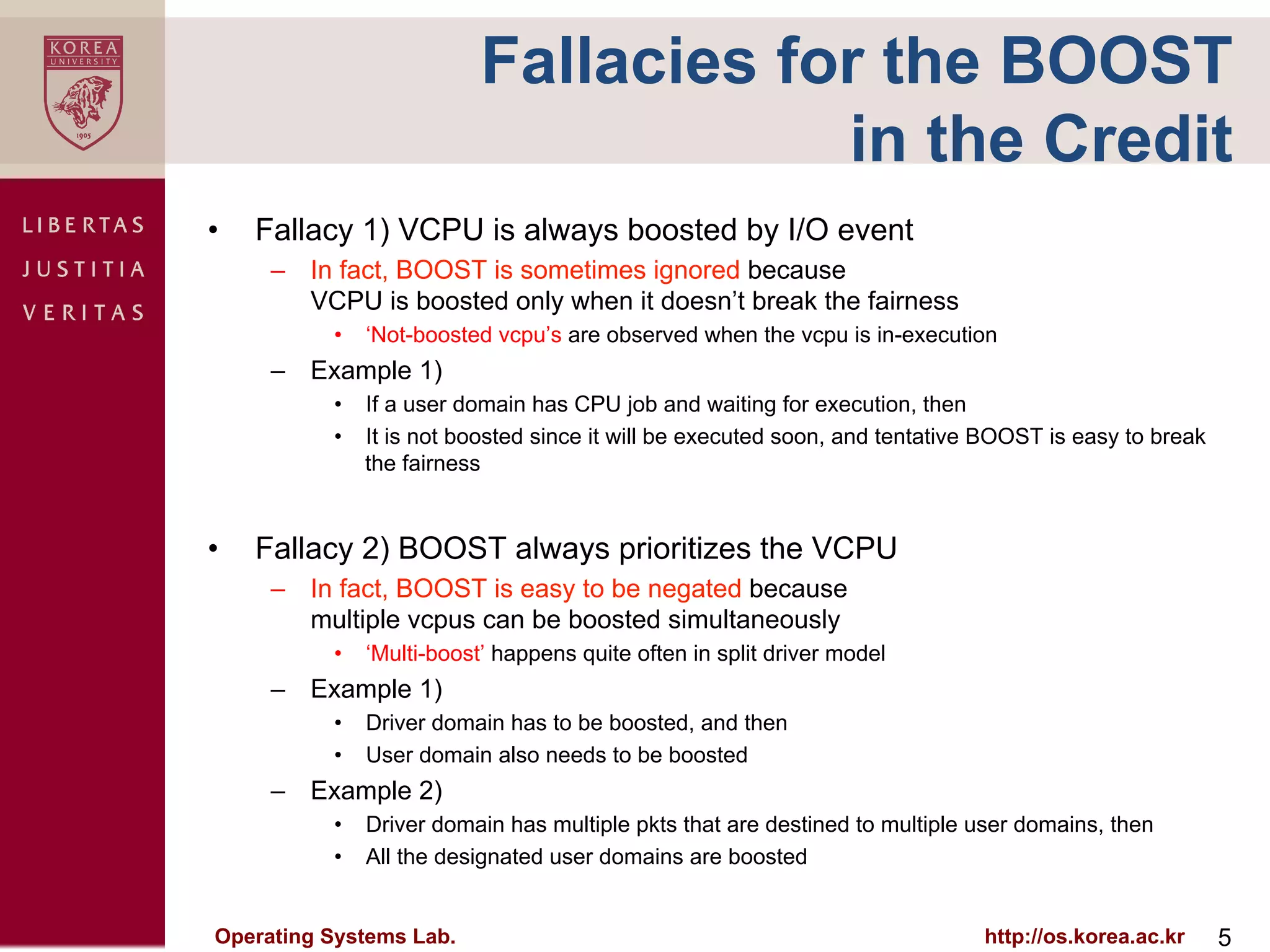

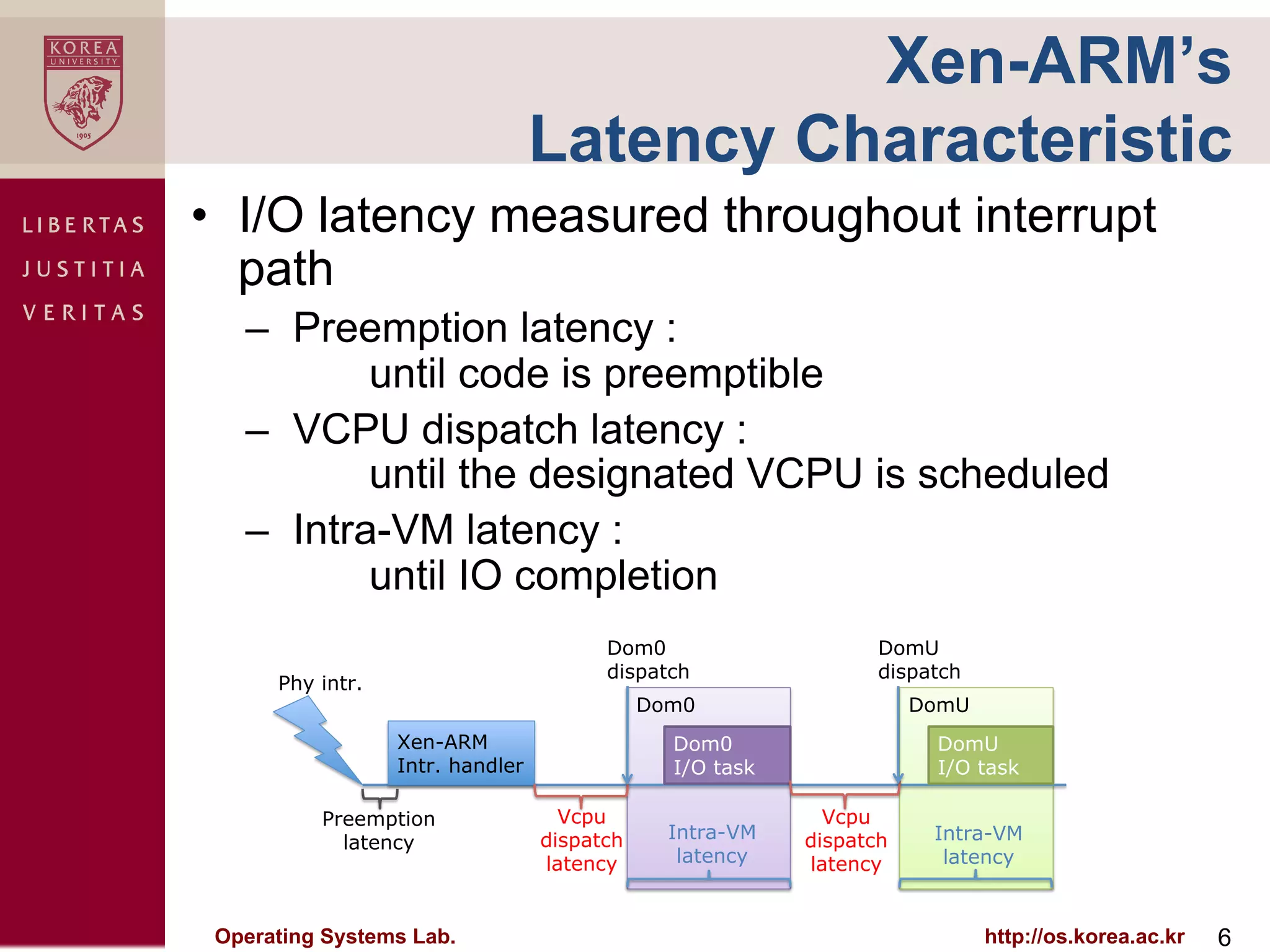

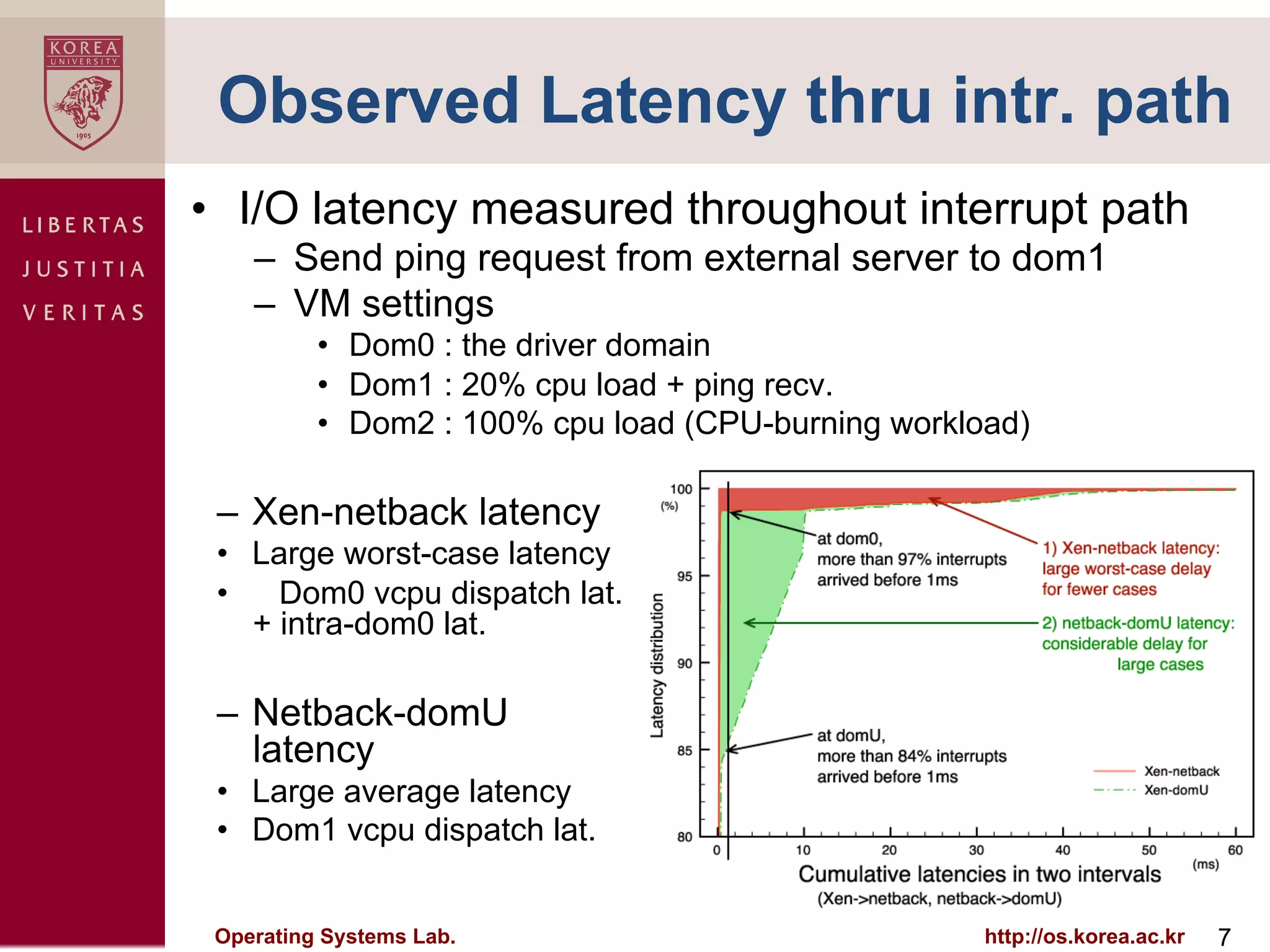

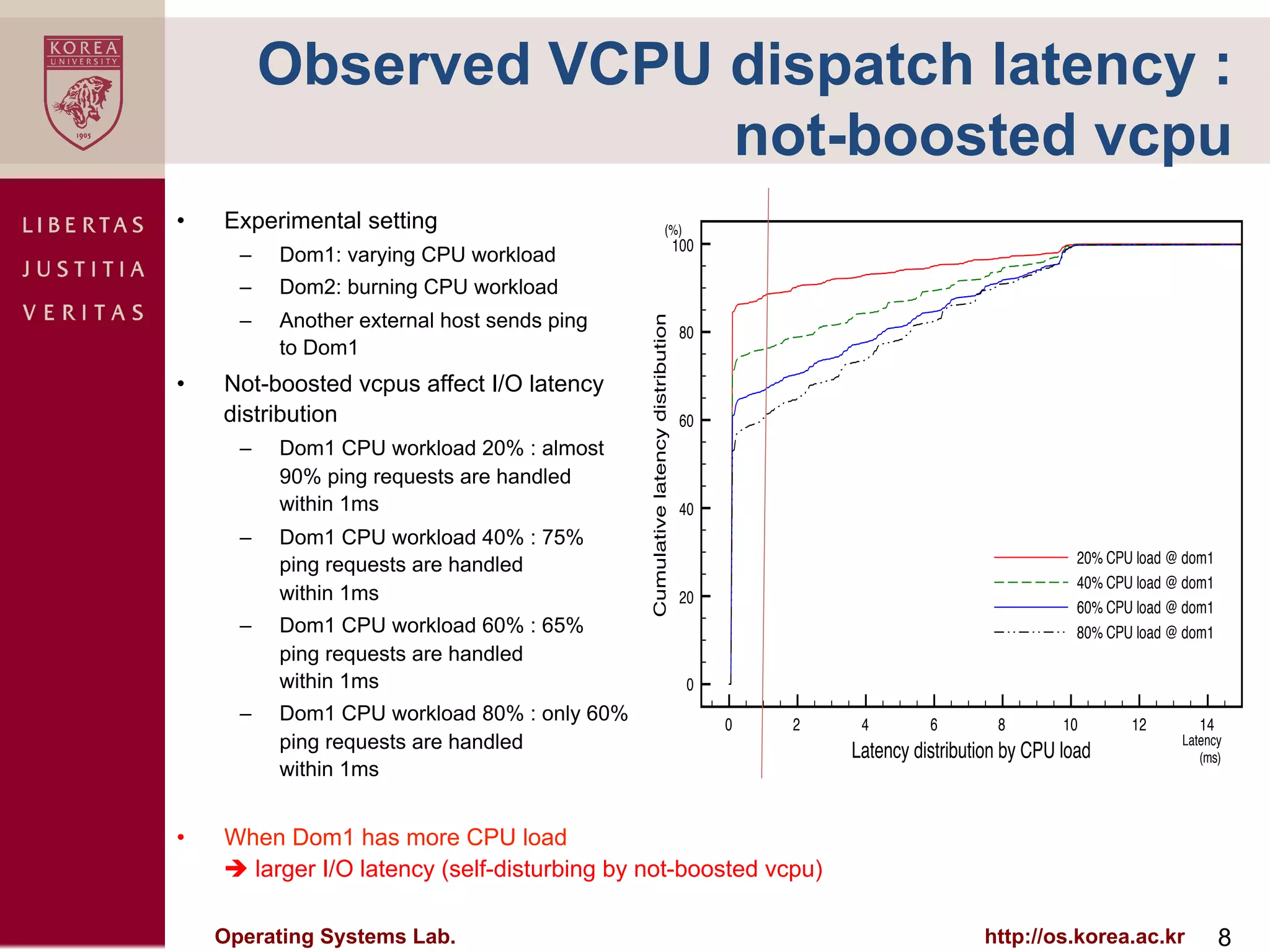

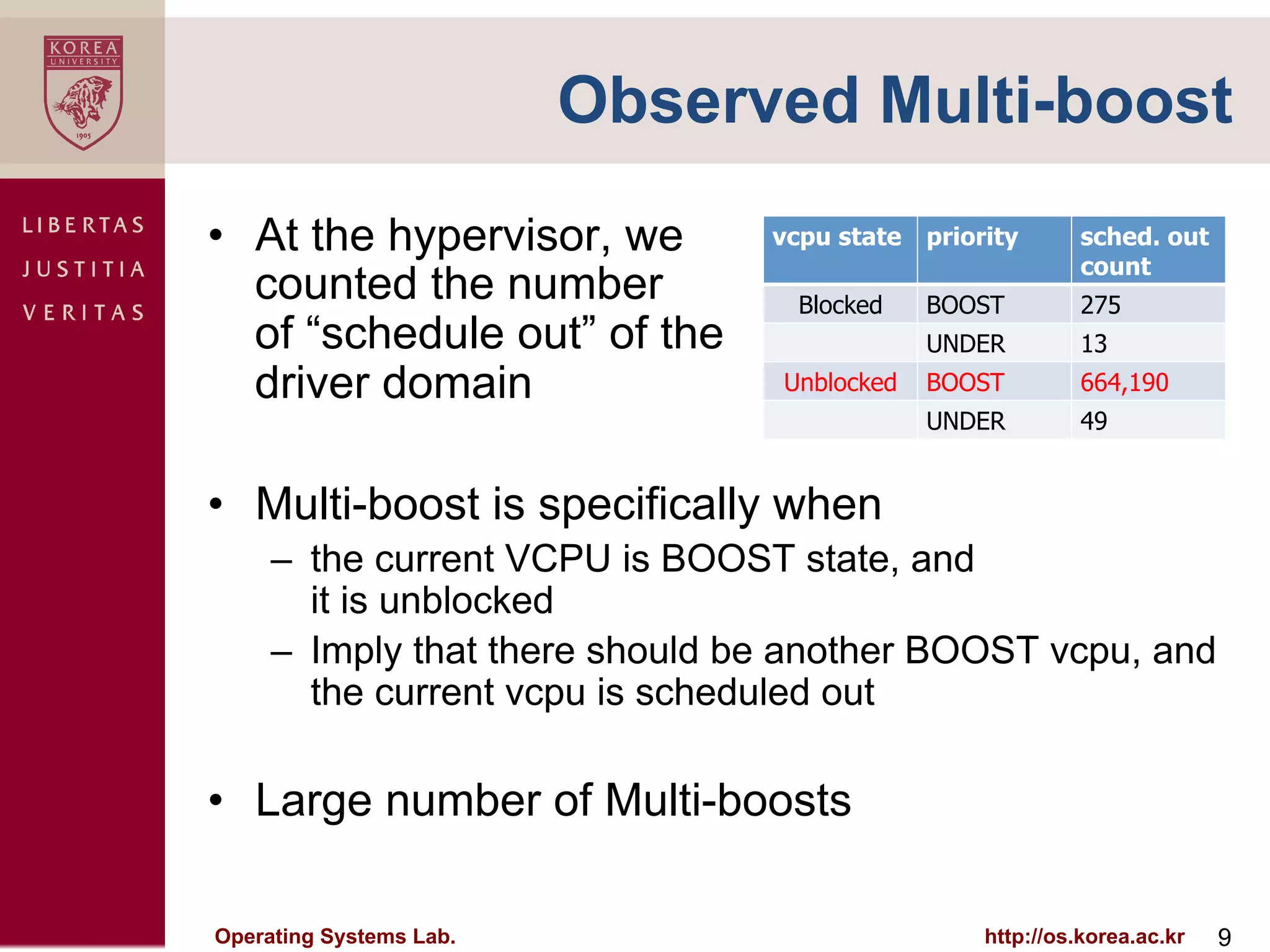

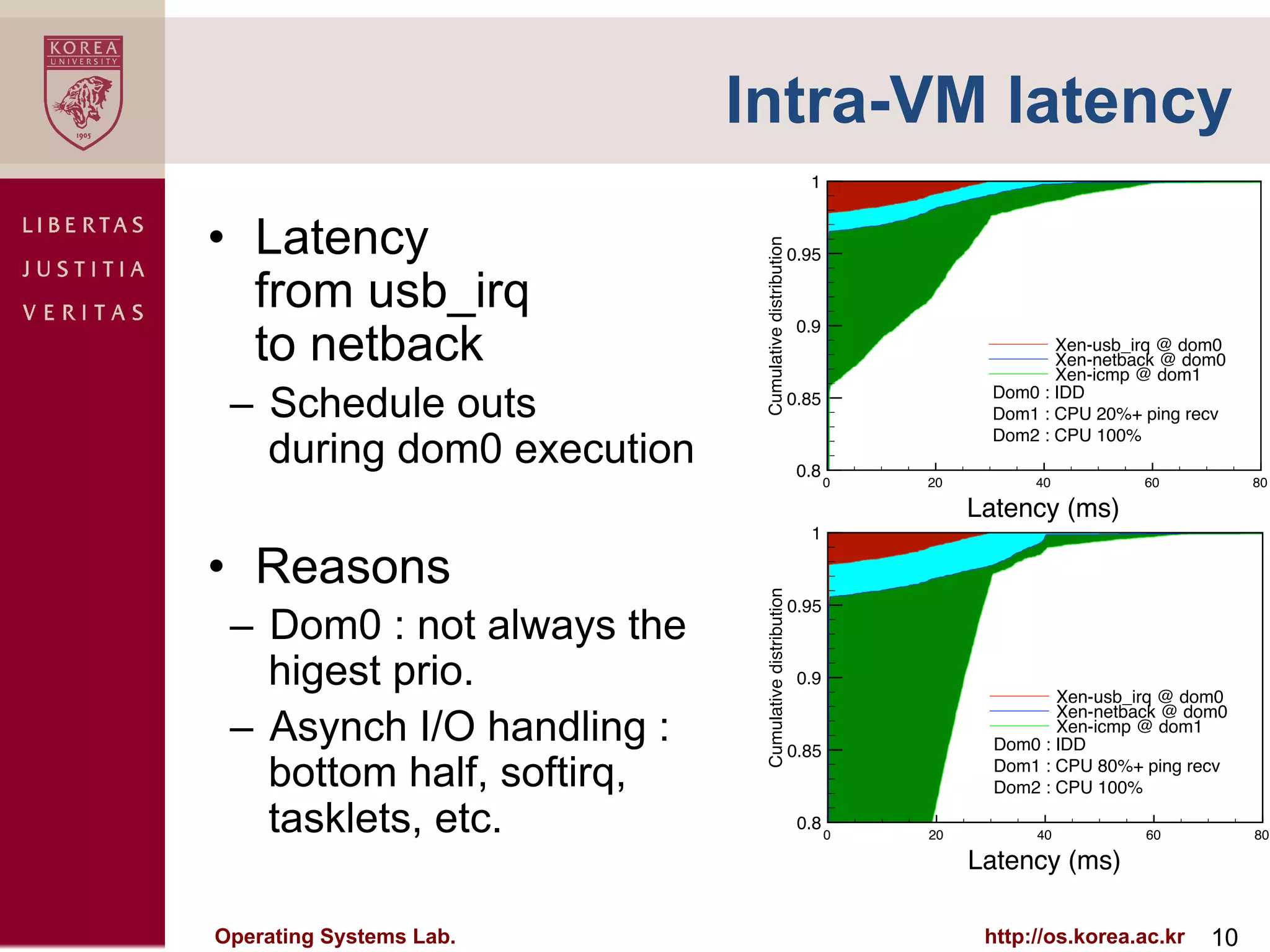

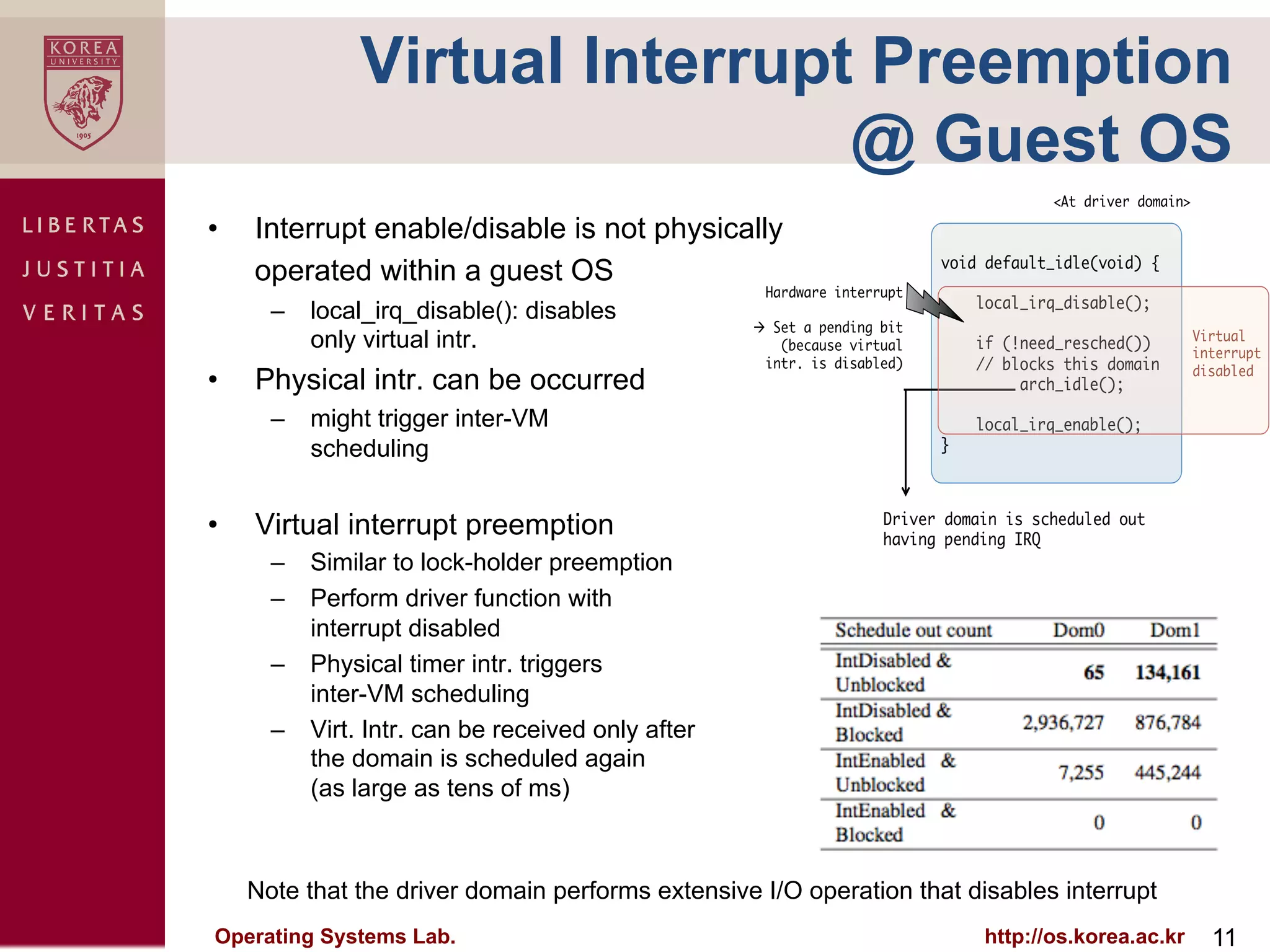

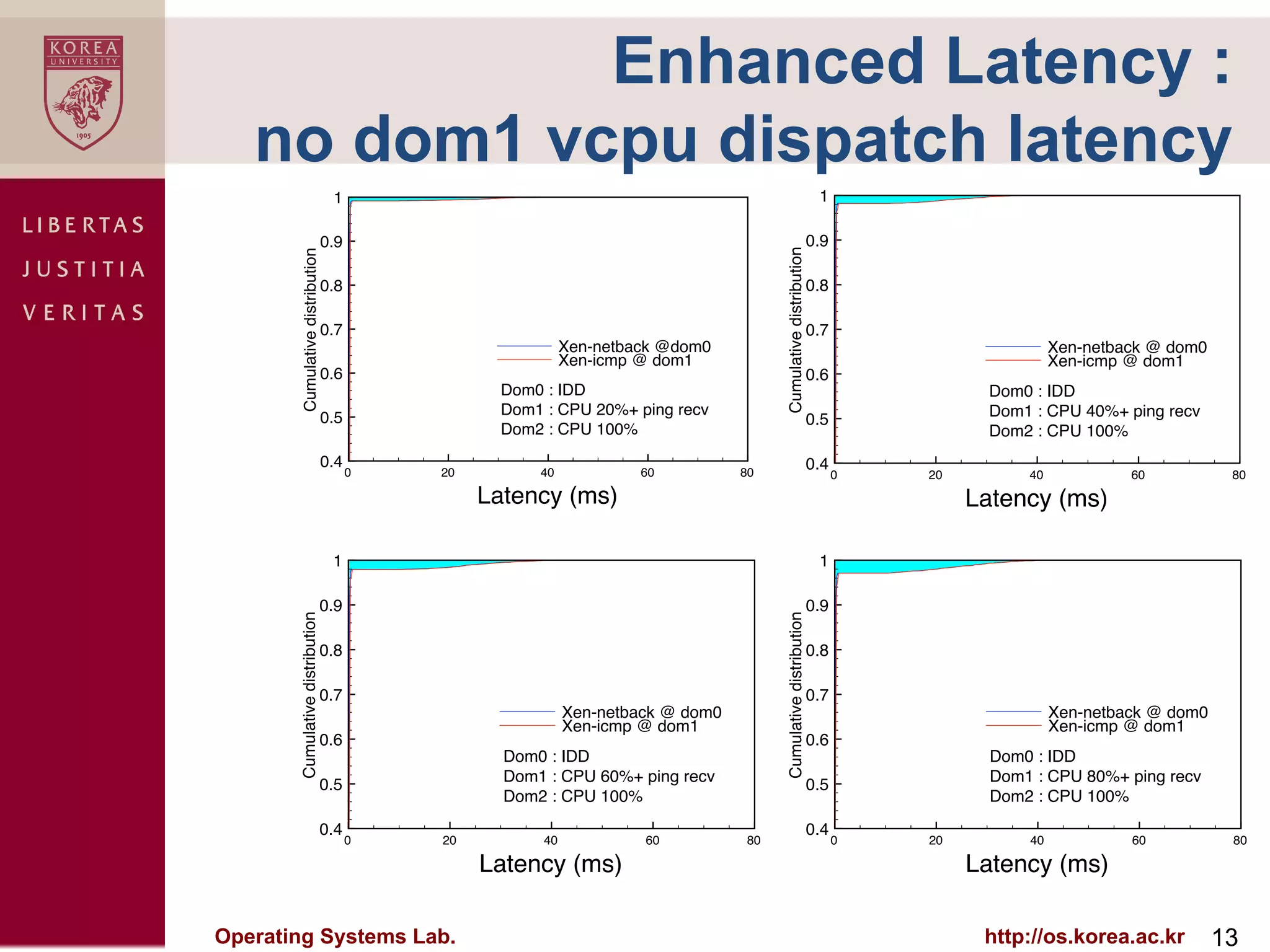

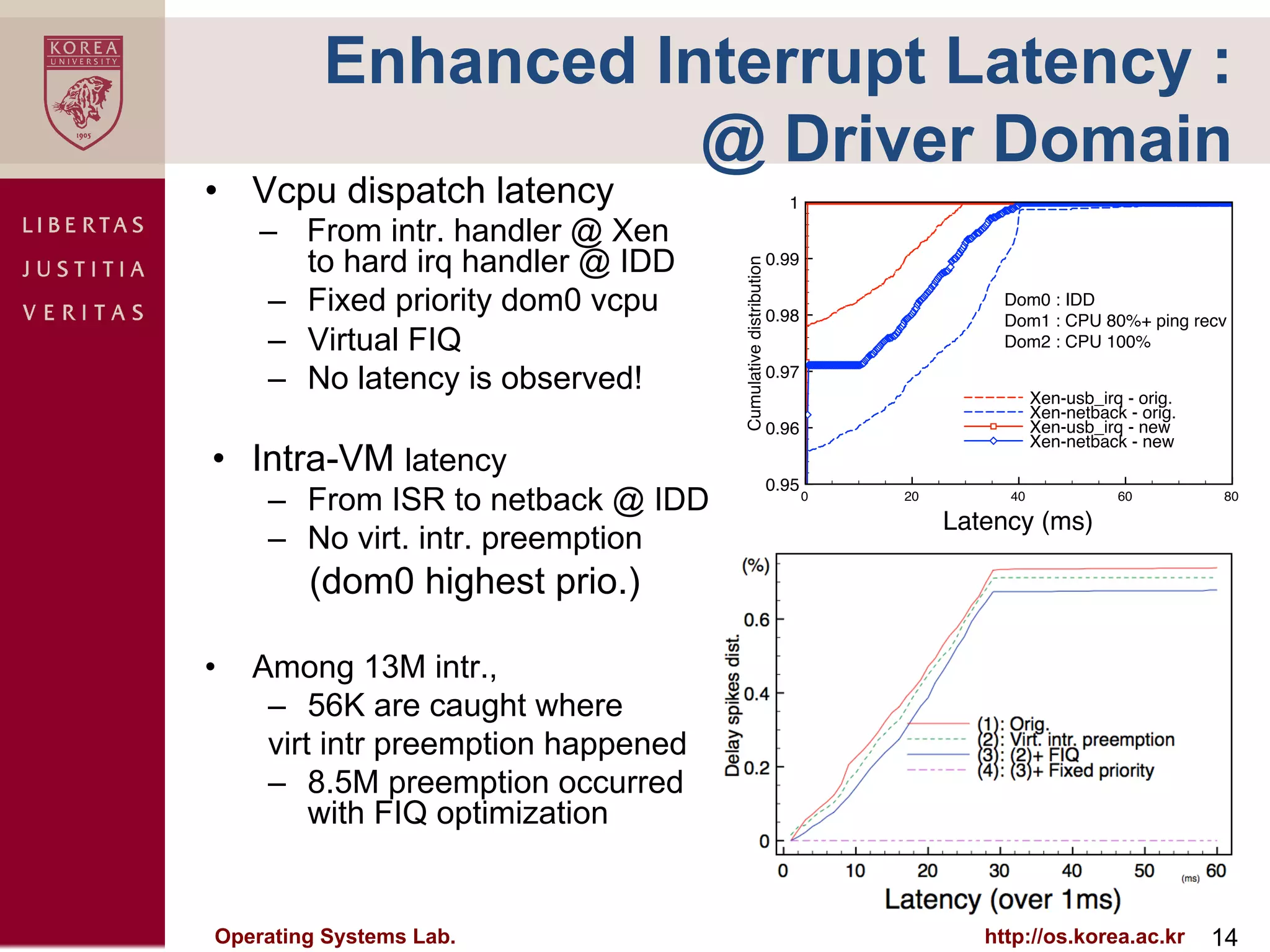

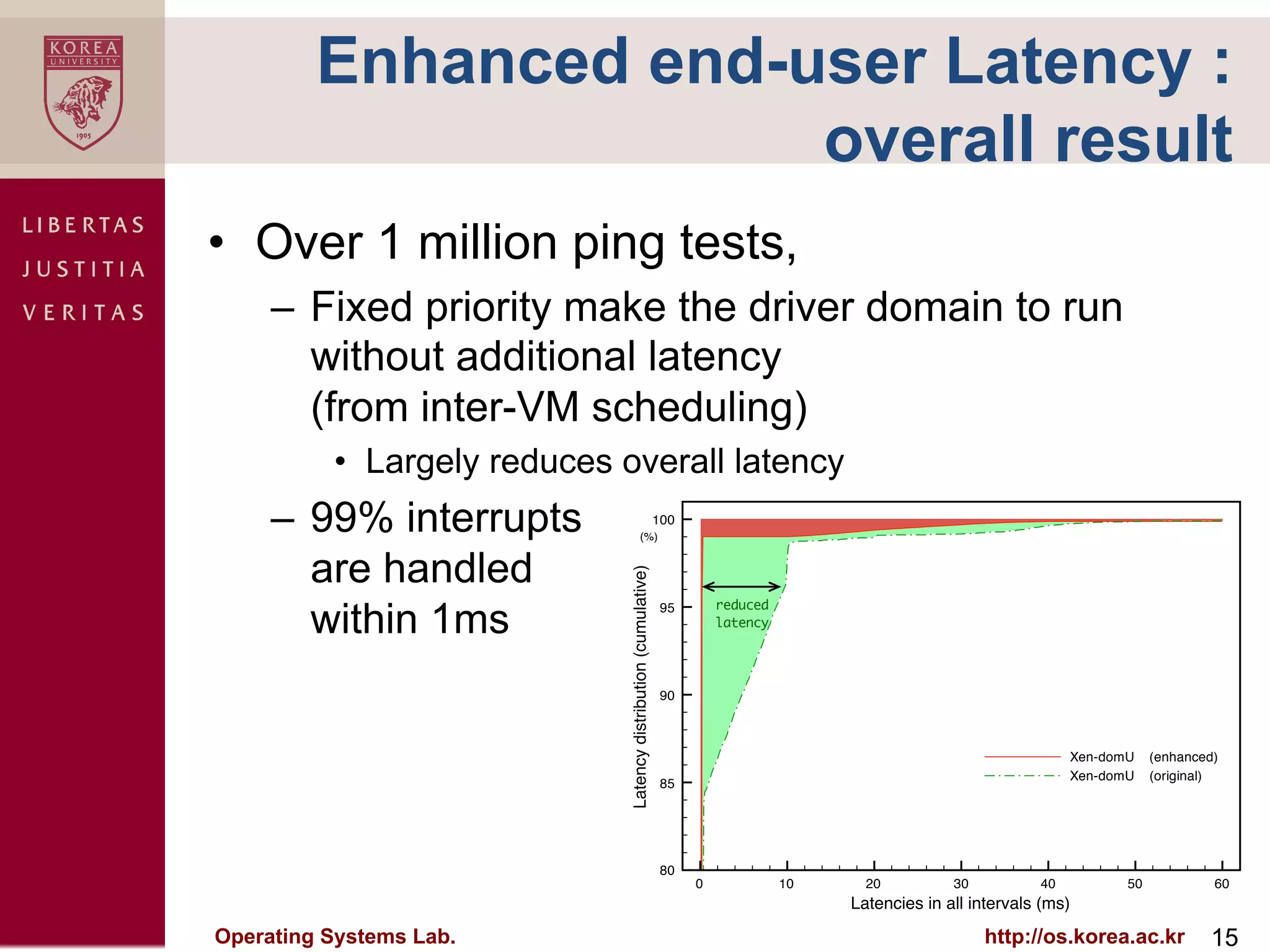

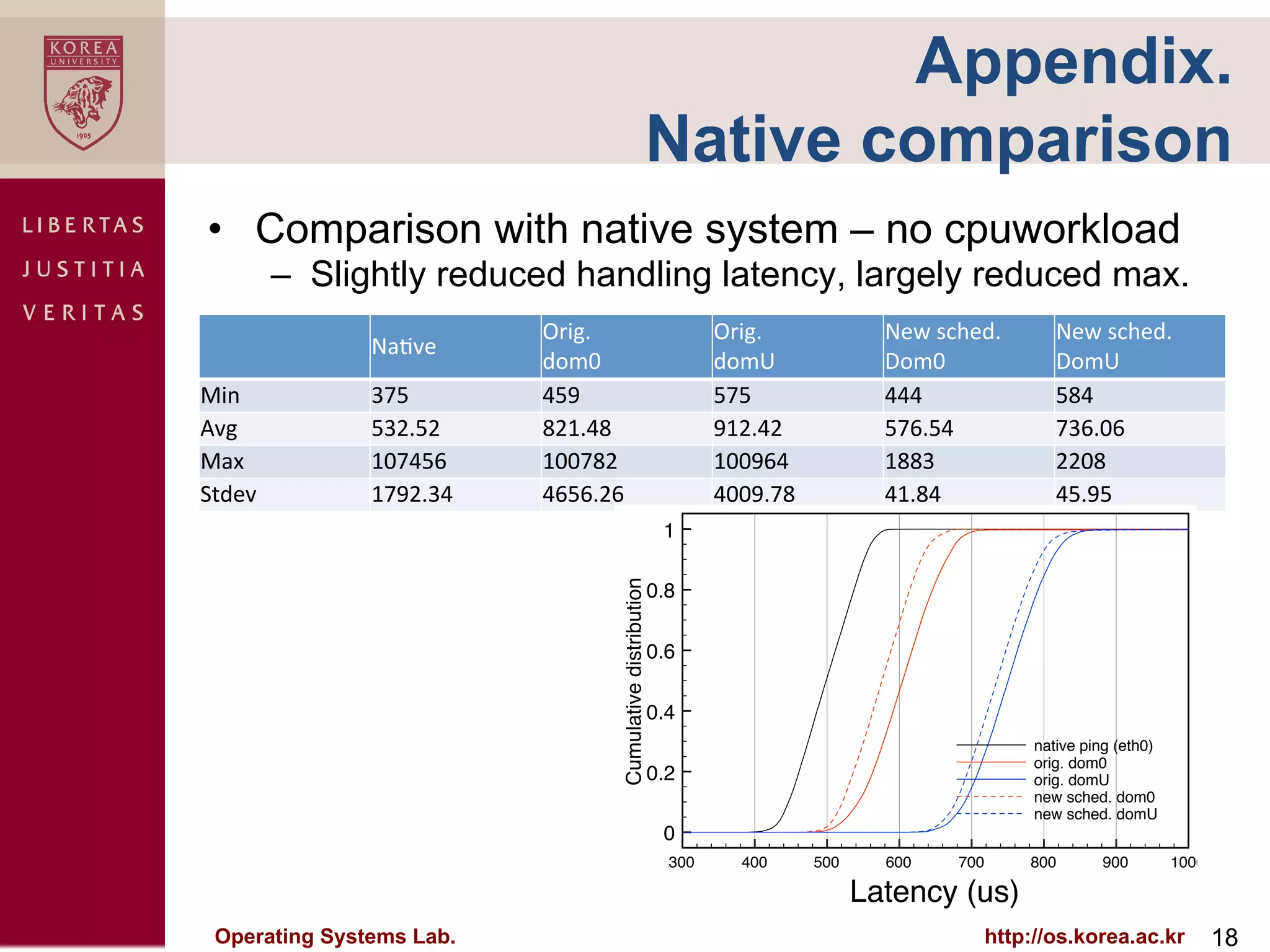

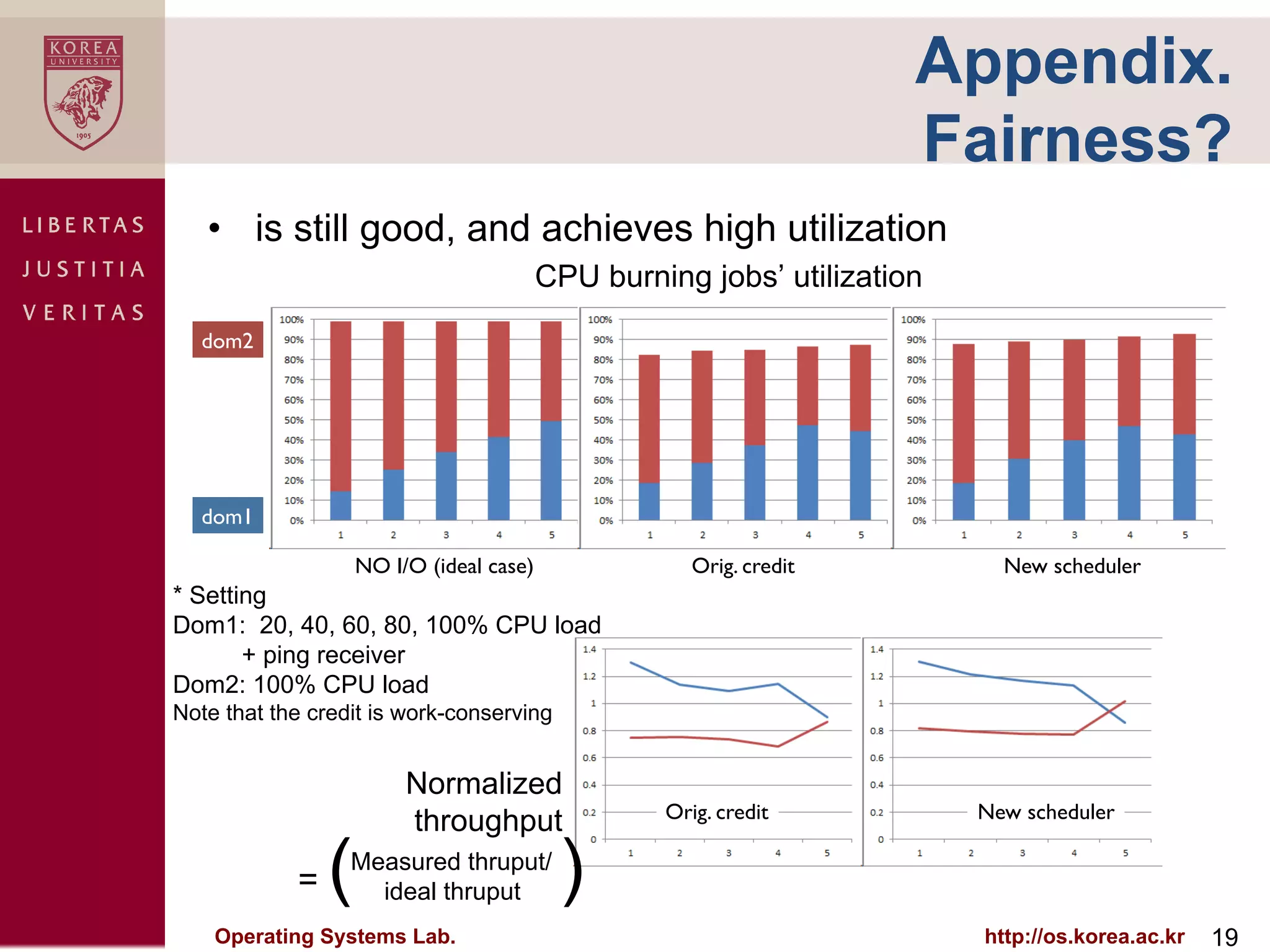

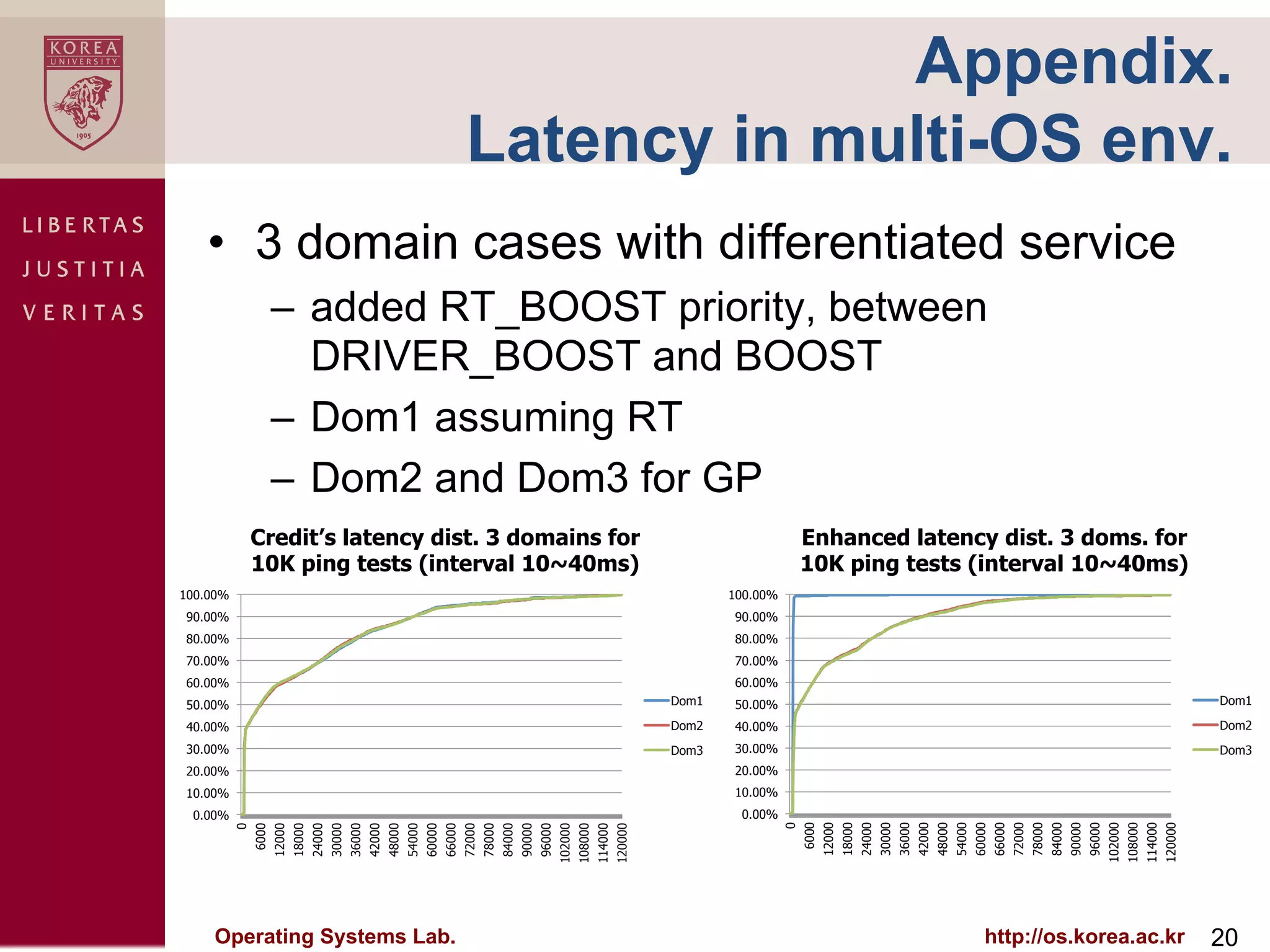

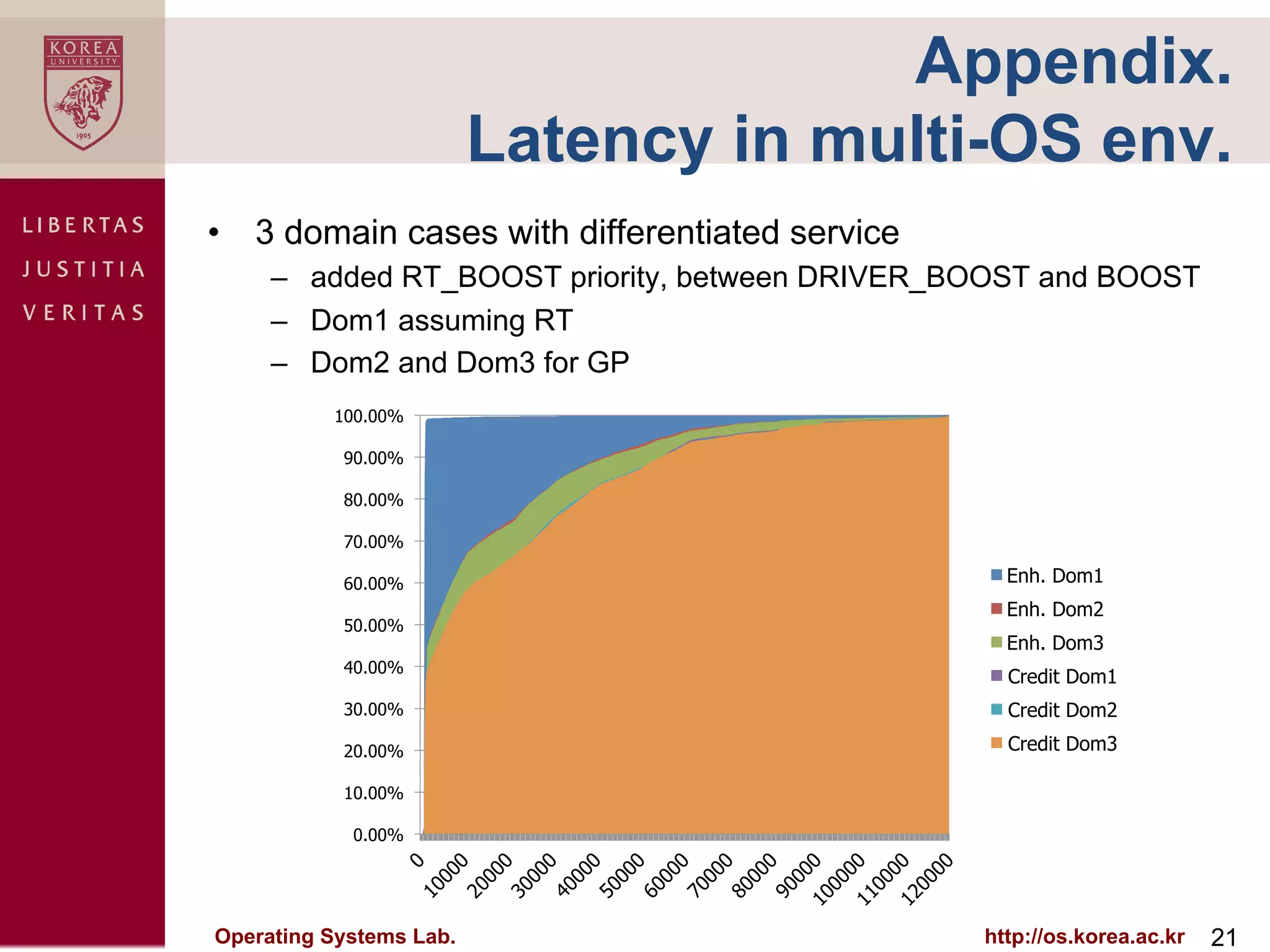

The document examines I/O latency in Xen-ARM virtualization. It finds that the credit scheduler does not adequately support time-sensitive applications, owing to Xen's hierarchical scheduling and its split driver model. Measurements show large worst-case latencies along the whole interrupt path, including preemption latency, scheduling latency, and intra-domain latency. Experiments further show that latency degrades when the virtual CPU receiving the I/O event is not boosted, and when several virtual CPUs are boosted at the same time (multi-boost). Minimizing I/O latency in Xen-ARM therefore requires new solutions for mobile and real-time environments.
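To make the "not boosted" and "multi-boost" cases concrete, the following is a minimal sketch of run-queue ordering, loosely modeled on Xen's credit scheduler. It assumes three priority levels (BOOST ahead of UNDER ahead of OVER), FIFO ordering within one priority, boosting only a woken VCPU that is still UNDER, and a hypothetical fixed 30 ms time slice consumed in full by every VCPU ahead of the I/O VCPU. `VCpu`, `wake`, and `dispatch_delay_ms` are illustrative names, not Xen APIs, and the model ignores preemption and credit accounting.

```python
from dataclasses import dataclass

# Priority levels loosely modeled on Xen's credit scheduler
# (lower value = dispatched first): BOOST > UNDER > OVER.
BOOST, UNDER, OVER = 0, 1, 2

# Assumption: fixed time slice that each VCPU ahead of the target uses in full.
TIME_SLICE_MS = 30


@dataclass
class VCpu:
    name: str
    pri: int
    arrival: int  # insertion order into the run queue (FIFO tie-break)


def wake(vcpu: VCpu) -> VCpu:
    """An I/O event wakes a VCPU; only a VCPU still UNDER is promoted to BOOST."""
    if vcpu.pri == UNDER:
        vcpu.pri = BOOST
    return vcpu


def dispatch_delay_ms(runqueue: list, target: str) -> int:
    """Worst-case wait before `target` runs: every VCPU sorted ahead of it
    (by priority, then arrival order) is assumed to run a full time slice."""
    order = sorted(runqueue, key=lambda v: (v.pri, v.arrival))
    ahead = [v.name for v in order].index(target)
    return ahead * TIME_SLICE_MS


# Two CPU-bound guests that still have credit (UNDER).
busy = [VCpu("dom1", UNDER, 0), VCpu("dom2", UNDER, 1)]

# Boosted: the I/O VCPU still has credit, so its wake-up promotes it to BOOST.
boosted = busy + [wake(VCpu("io", UNDER, 2))]
# Not boosted: the I/O VCPU has used up its credit (OVER), so it stays behind.
not_boosted = busy + [wake(VCpu("io", OVER, 2))]
# Multi-boost: other VCPUs were woken and boosted first, so they queue ahead.
multi = [wake(VCpu("dom1", UNDER, 0)), wake(VCpu("dom2", UNDER, 1)),
         wake(VCpu("io", UNDER, 2))]

for label, rq in [("boosted", boosted), ("not boosted", not_boosted),
                  ("multi-boost", multi)]:
    print(label, dispatch_delay_ms(rq, "io"))
# boosted 0
# not boosted 60
# multi-boost 60
```

Under these assumptions, a boost is the only thing that puts the I/O VCPU at the head of the queue. Once boosting is lost (OVER) or shared among several VCPUs (multi-boost), the wait grows with the number of competing VCPUs times the time slice. That growth is the pattern the experiments report.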