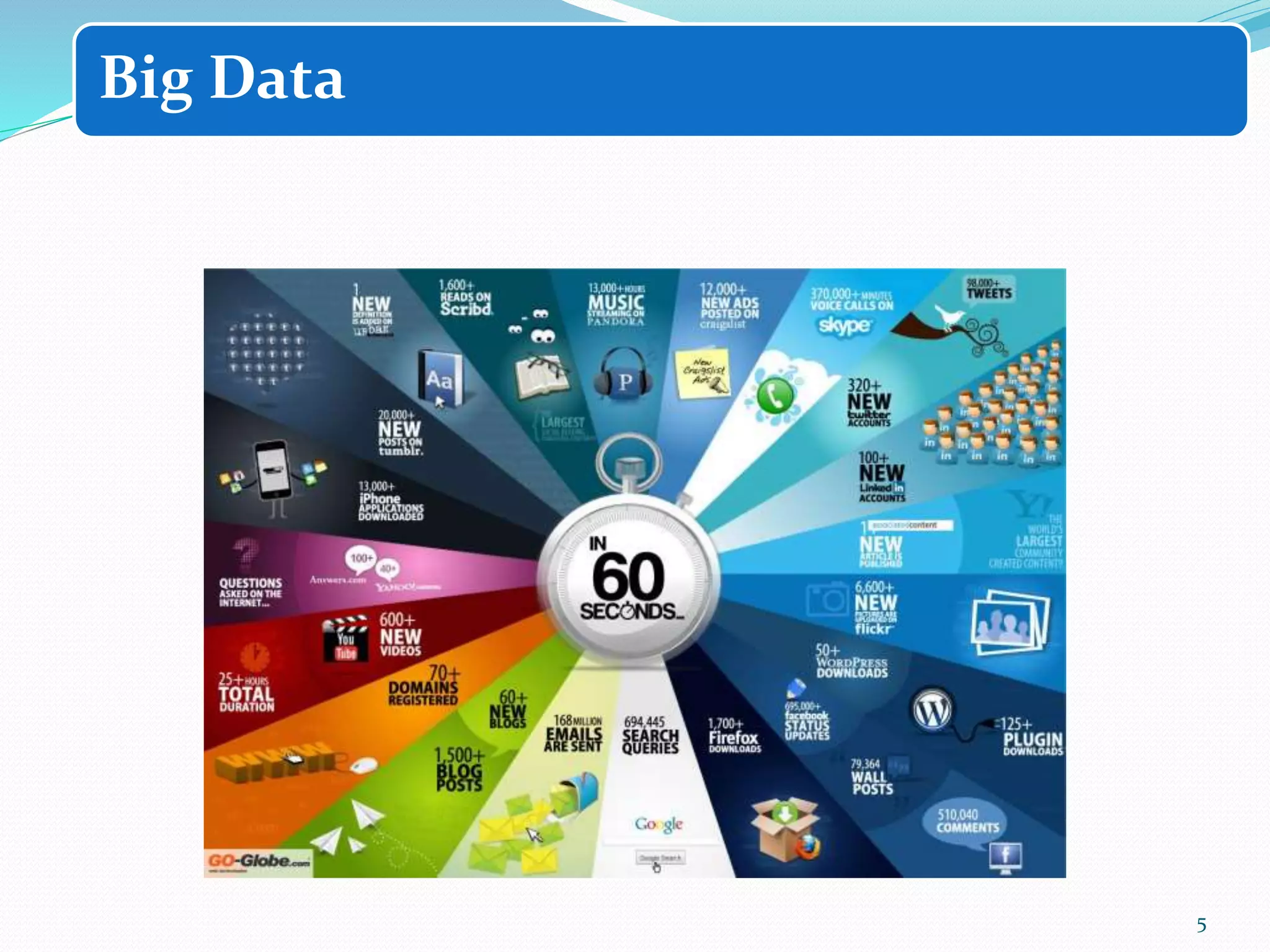

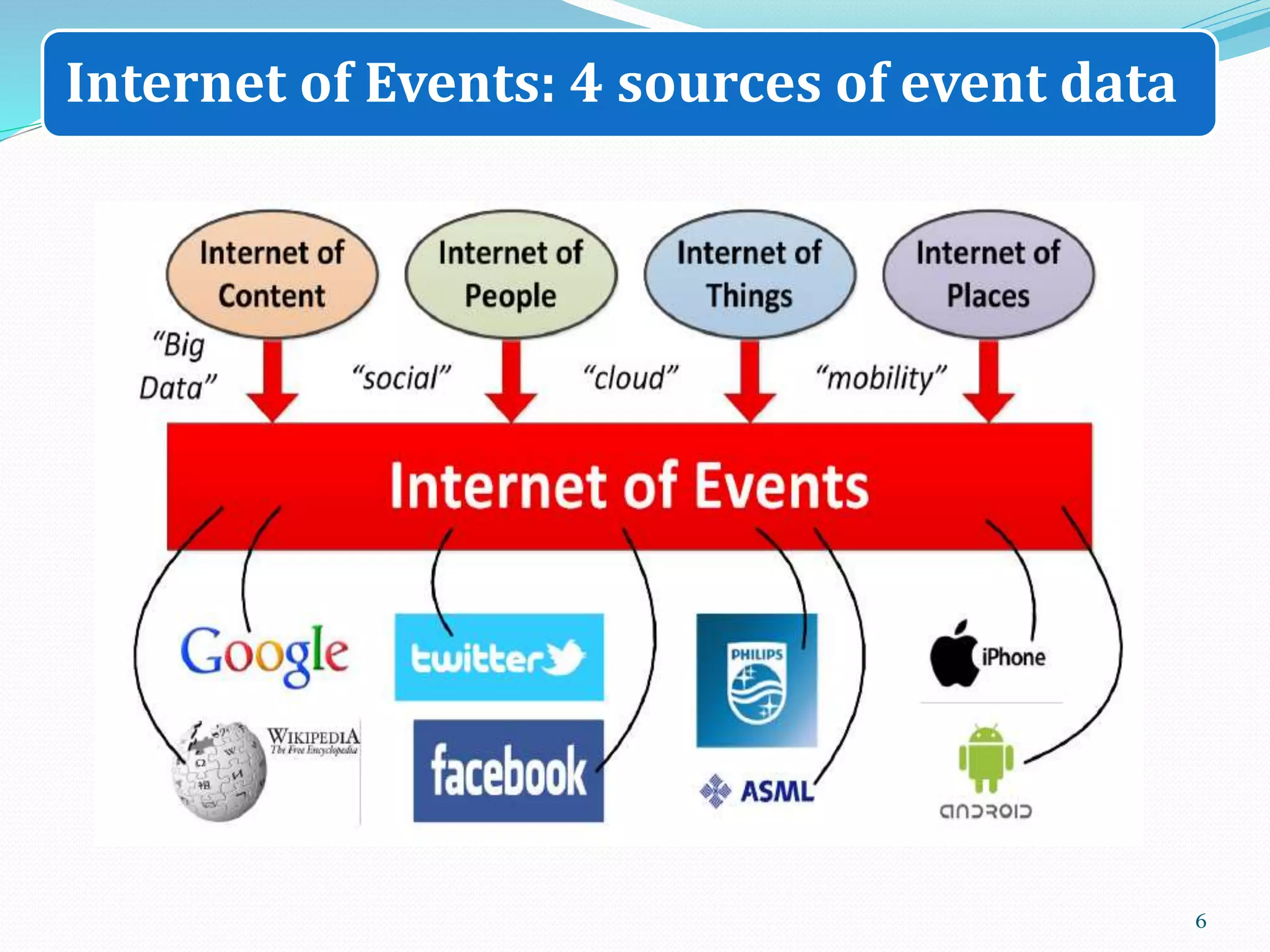

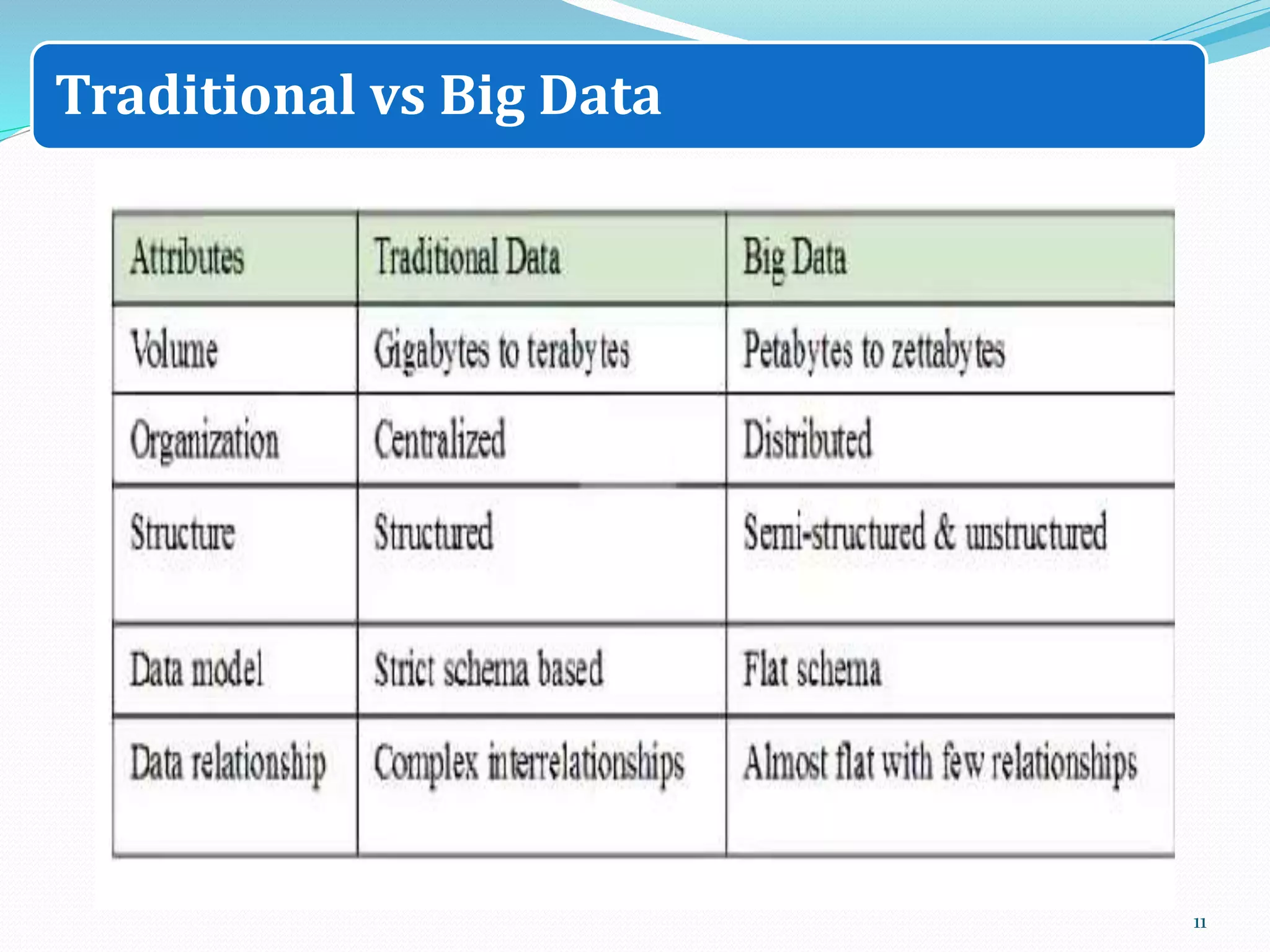

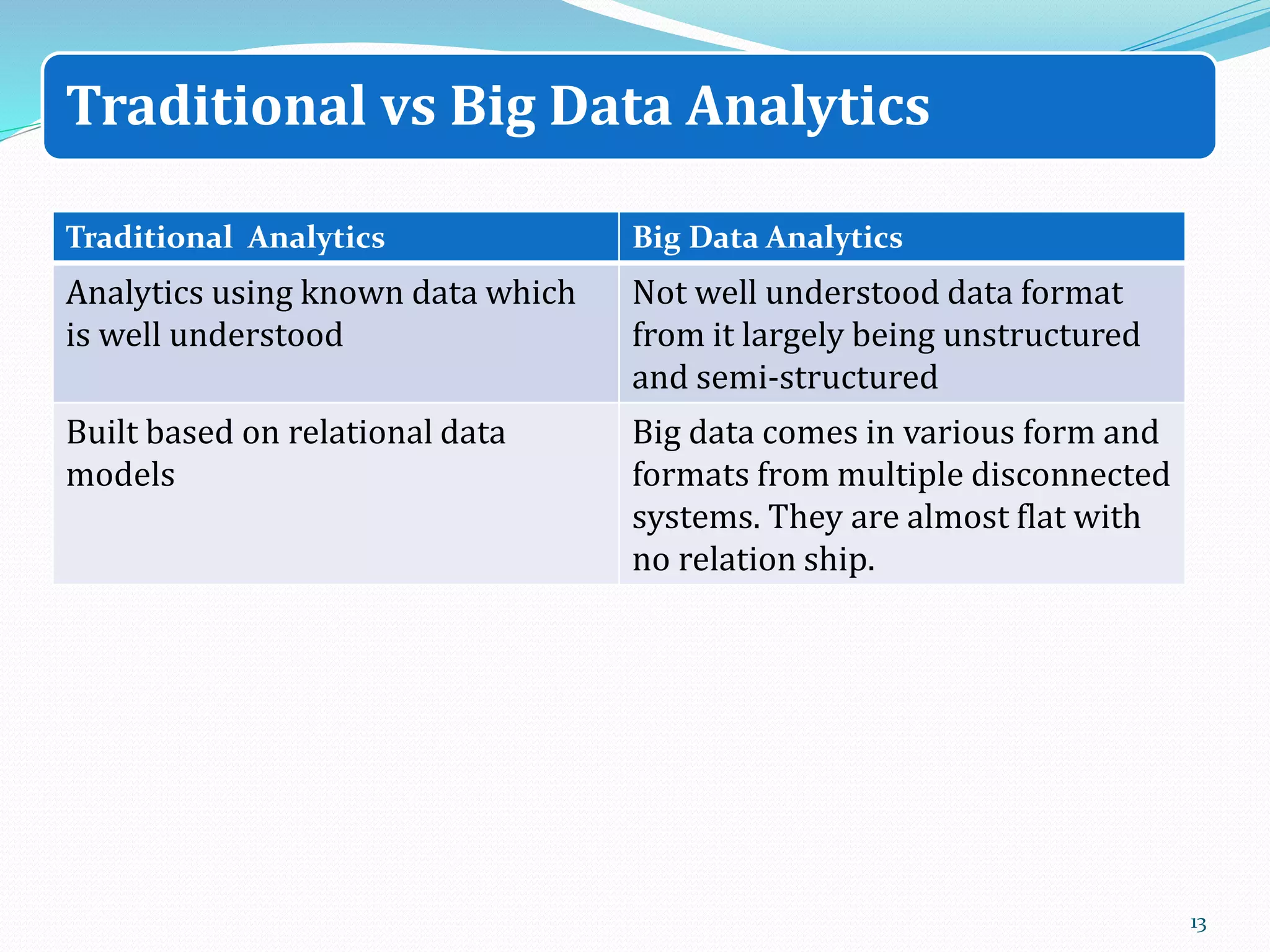

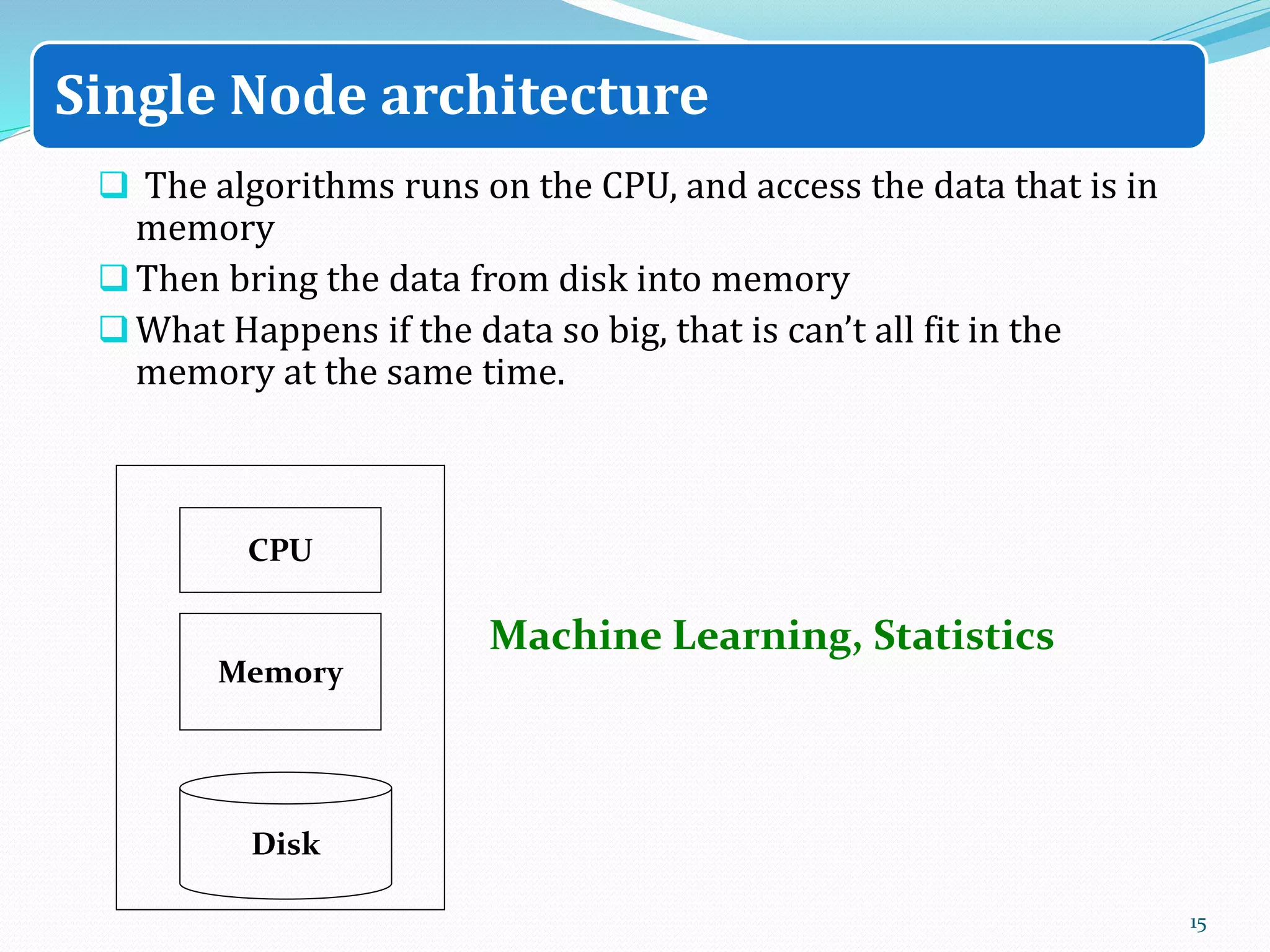

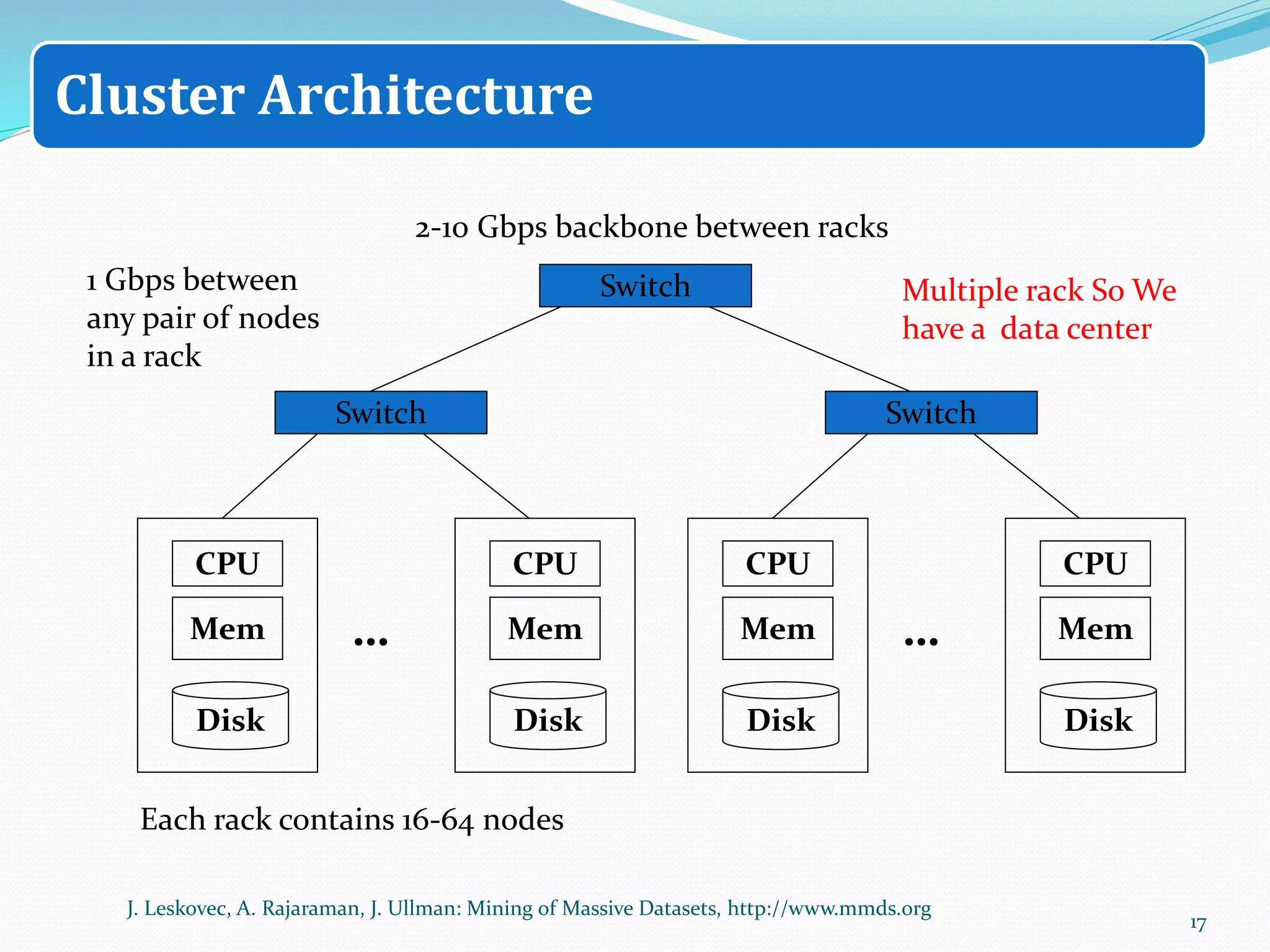

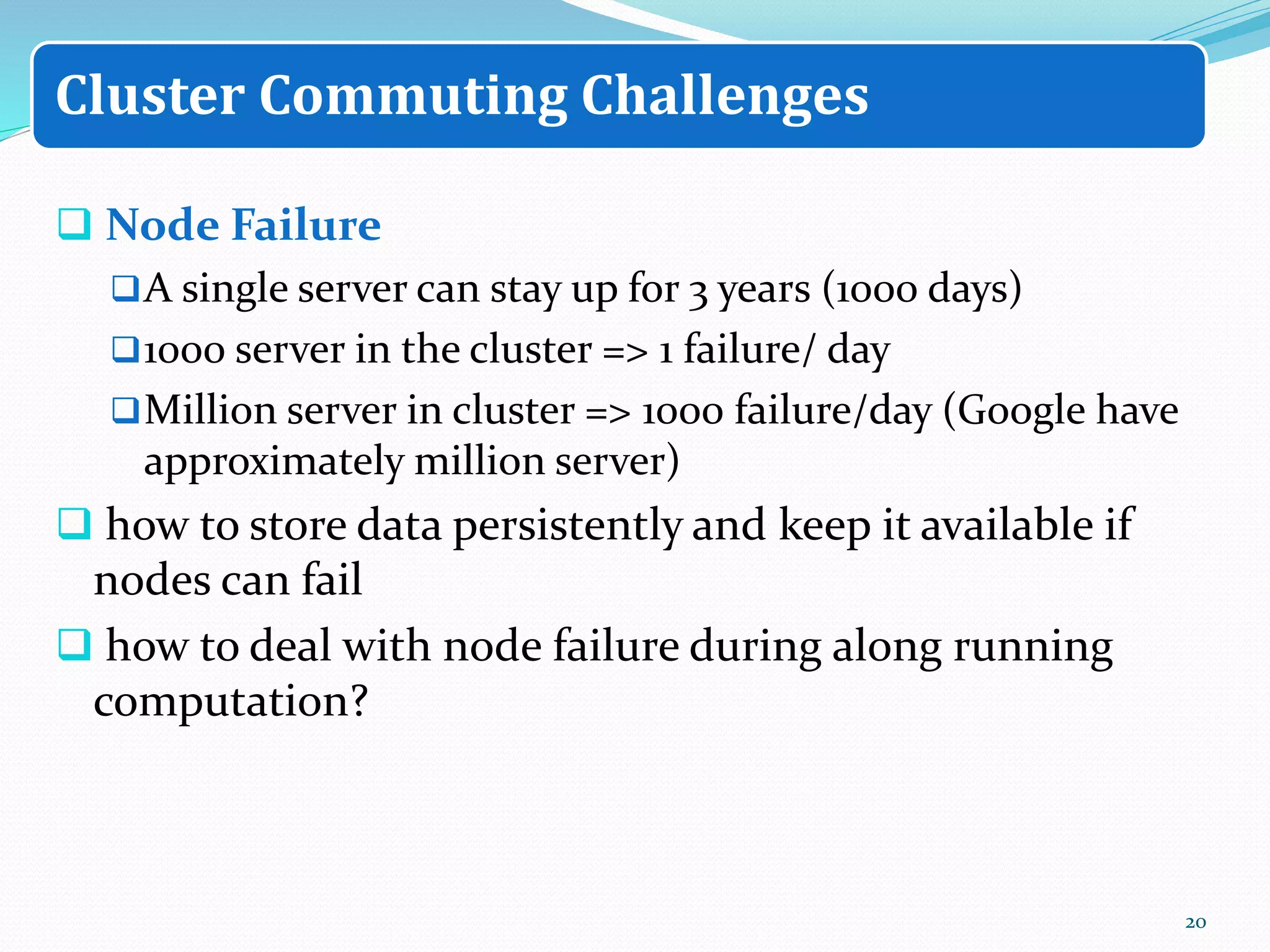

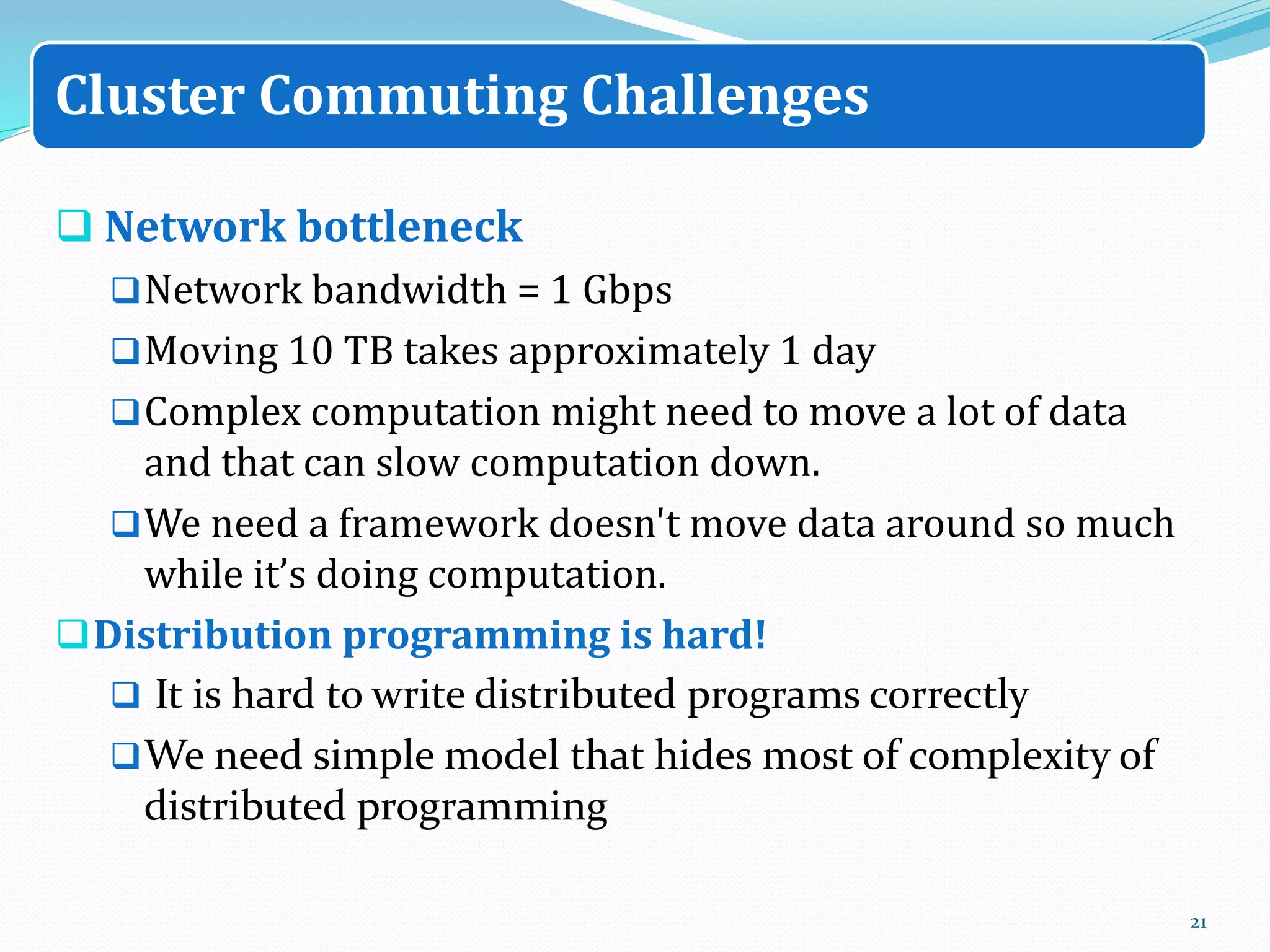

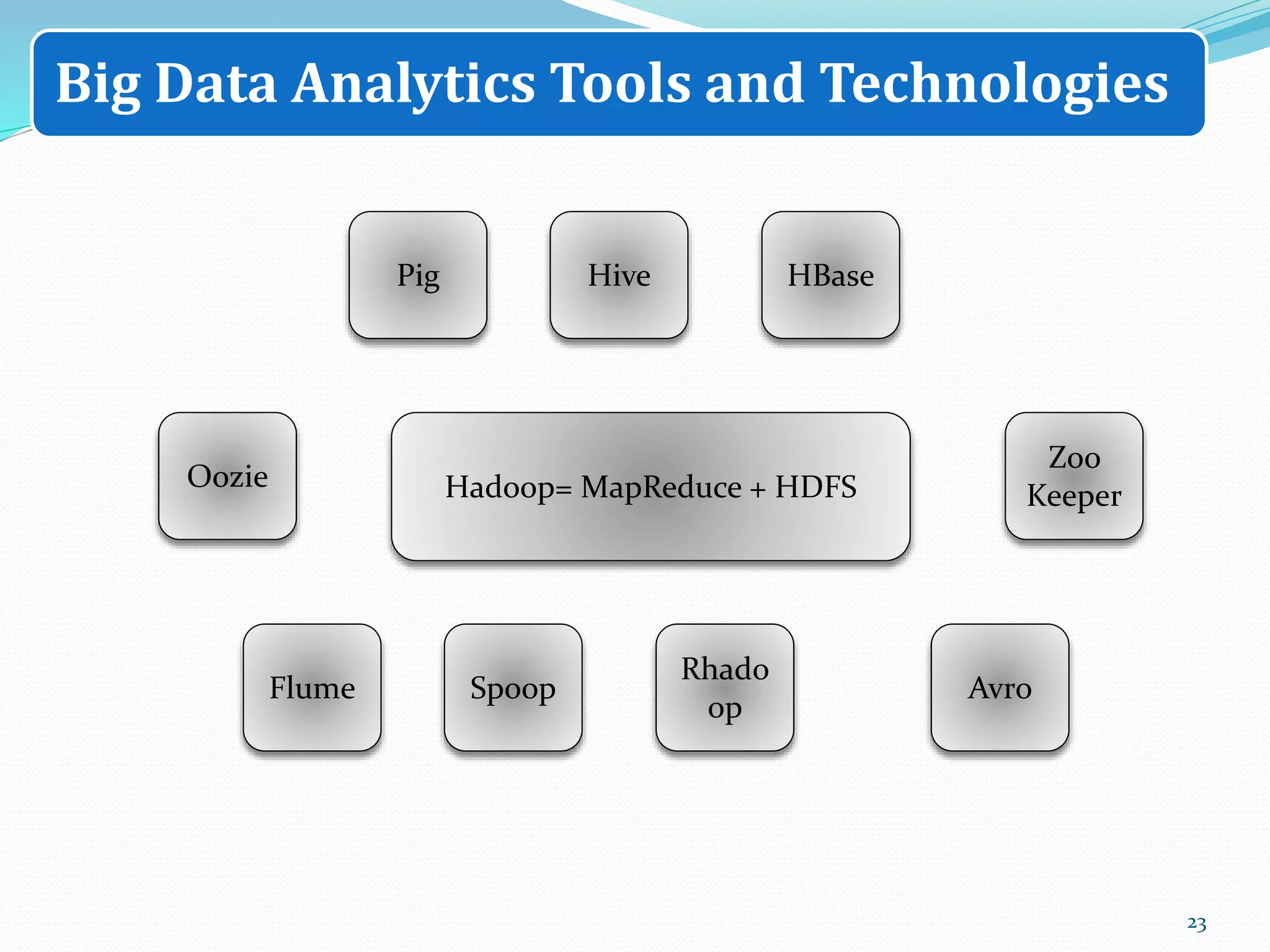

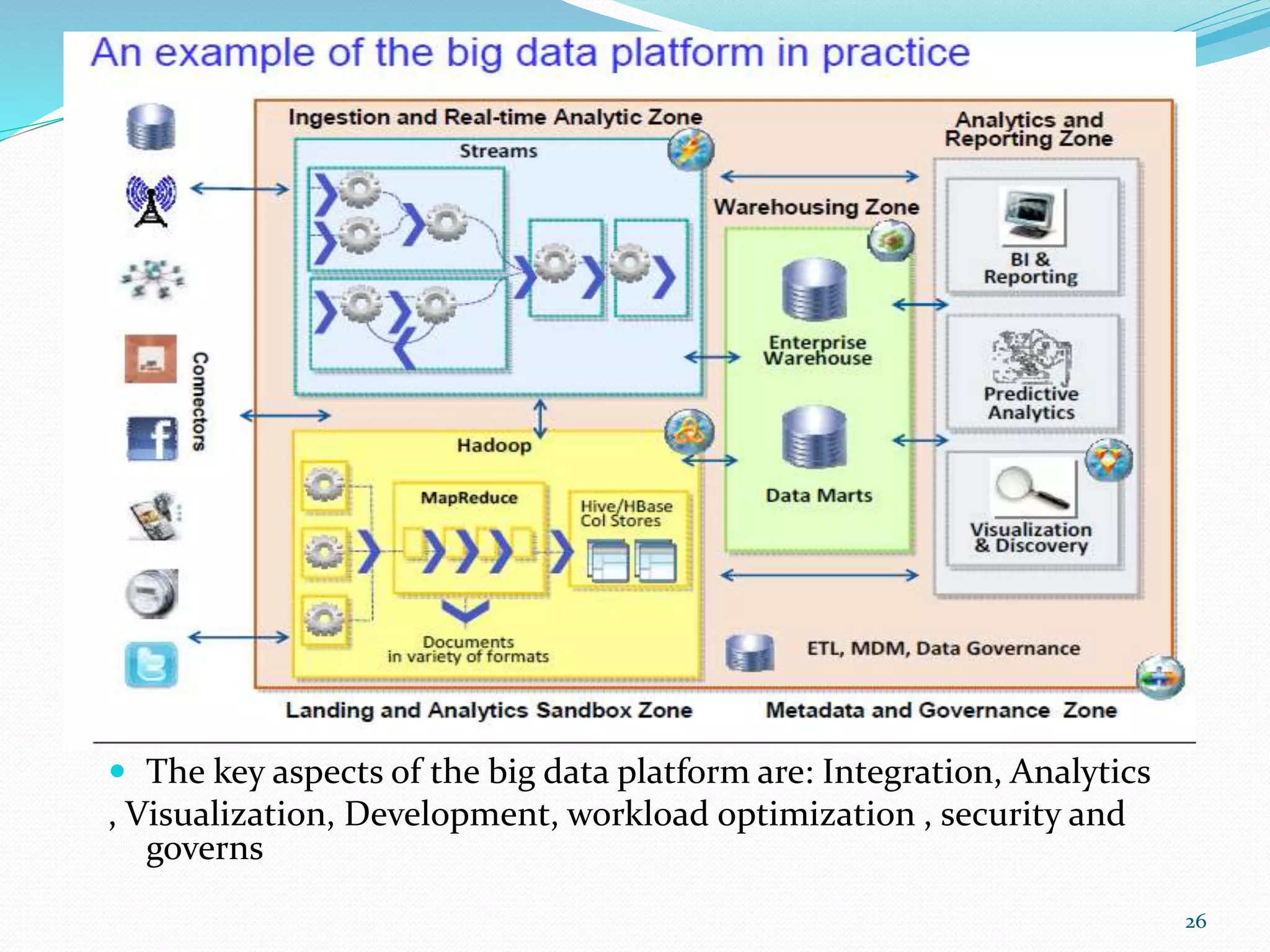

The document discusses big data sources, challenges, and analytics. It describes big data as data that traditional databases cannot manage because of its volume, velocity, variety, and veracity. Such data comes from sources like web pages, social media, sensors, and financial transactions. Analyzing it requires distributed computing: the data is stored across a cluster of servers and processed in parallel. The MapReduce programming model, and frameworks that implement it such as Hadoop, were developed to run big data analytics across clusters while addressing node failures, network bottlenecks, and the difficulty of distributed programming.
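To make the MapReduce idea concrete, here is a minimal single-process sketch of a word count in Python. It only illustrates the map, shuffle, and reduce stages of the programming model; it is not Hadoop's API, and it does none of the distribution, fault tolerance, or network handling that a real cluster framework provides. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(document: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one document."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key.

    On a real cluster this step moves data between nodes; here it is
    just an in-memory dictionary (an assumption made for illustration).
    """
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: combine all values for one key into a single count."""
    return key, sum(values)


def word_count(documents: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence over the input documents."""
    pairs = (pair for doc in documents for pair in map_phase(doc))
    groups = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in groups.items())


if __name__ == "__main__":
    print(word_count(["big data", "big clusters"]))
```

In a cluster setting, each call to `map_phase` could run on the node that already holds that piece of the data, and each key's `reduce_phase` could run on a different node; the framework would take care of scheduling and of rerunning tasks when a node fails.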