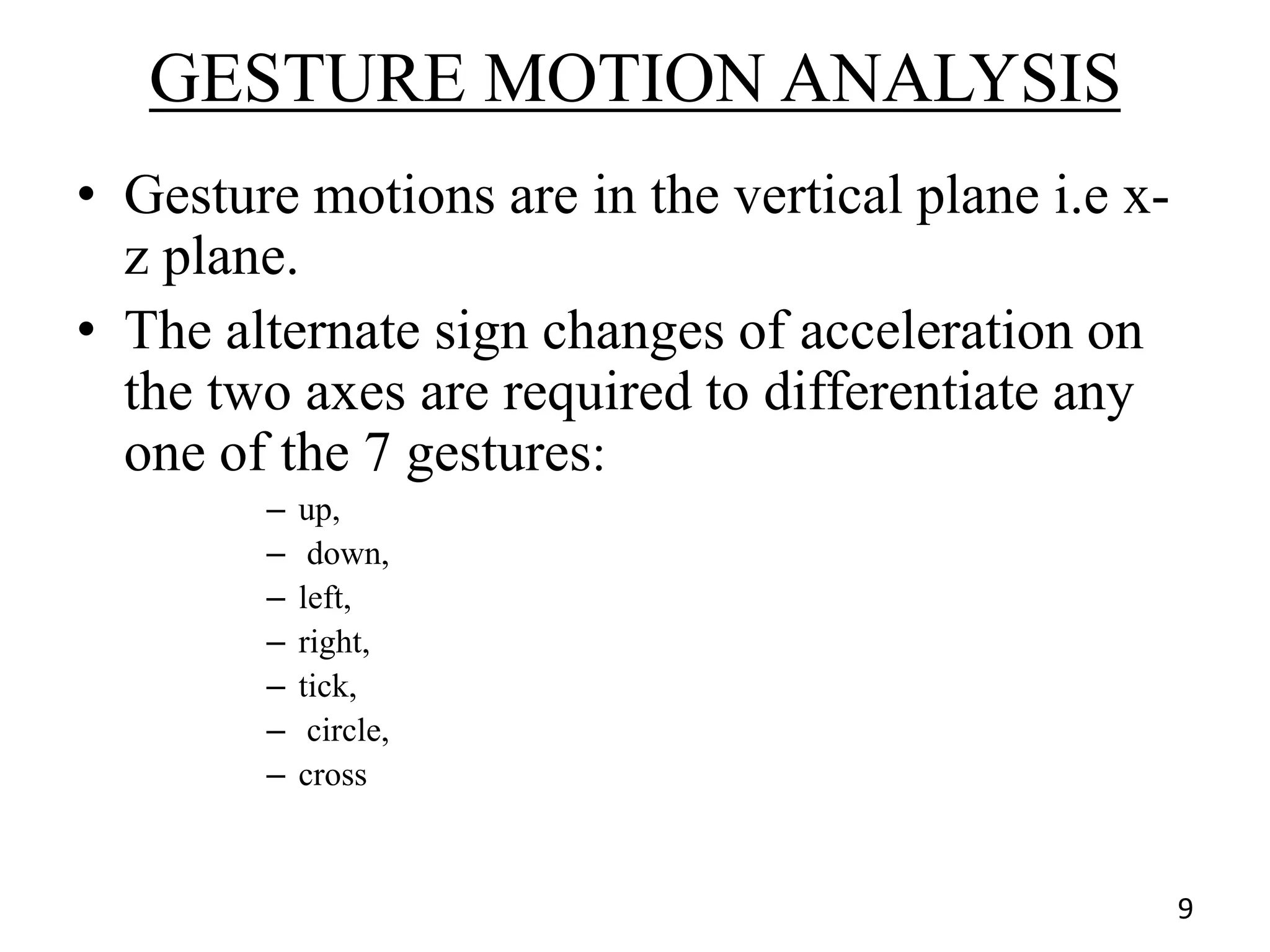

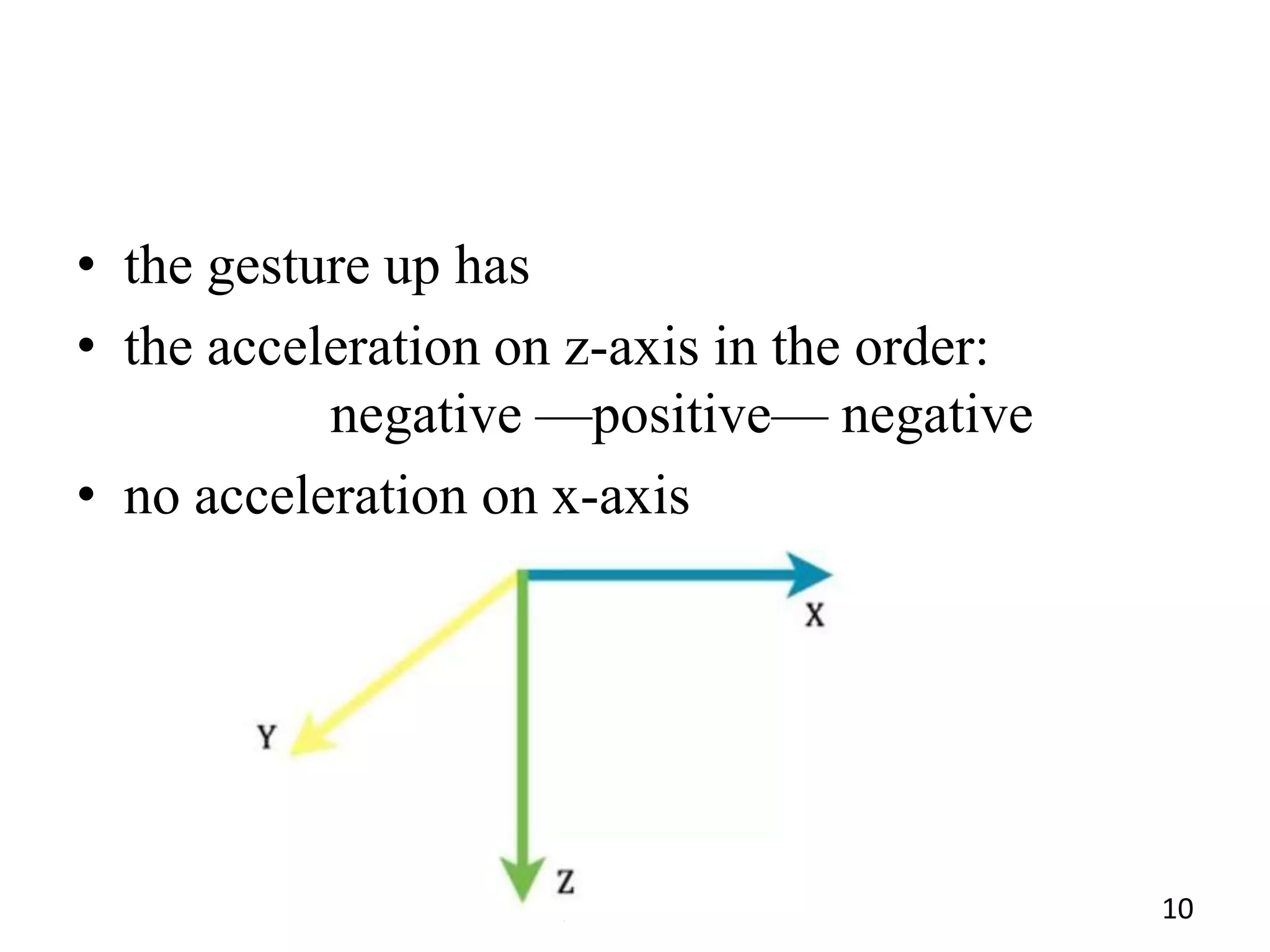

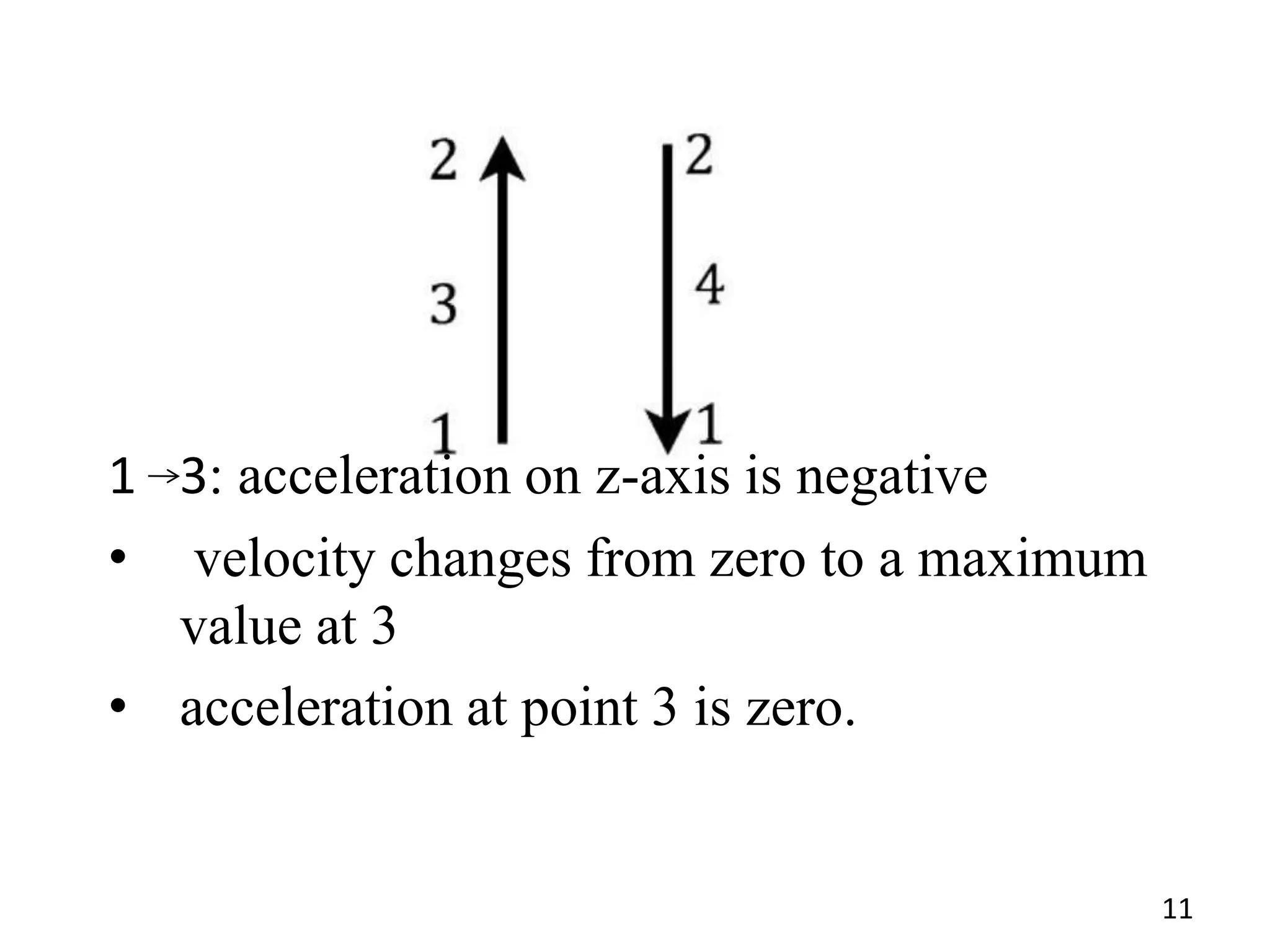

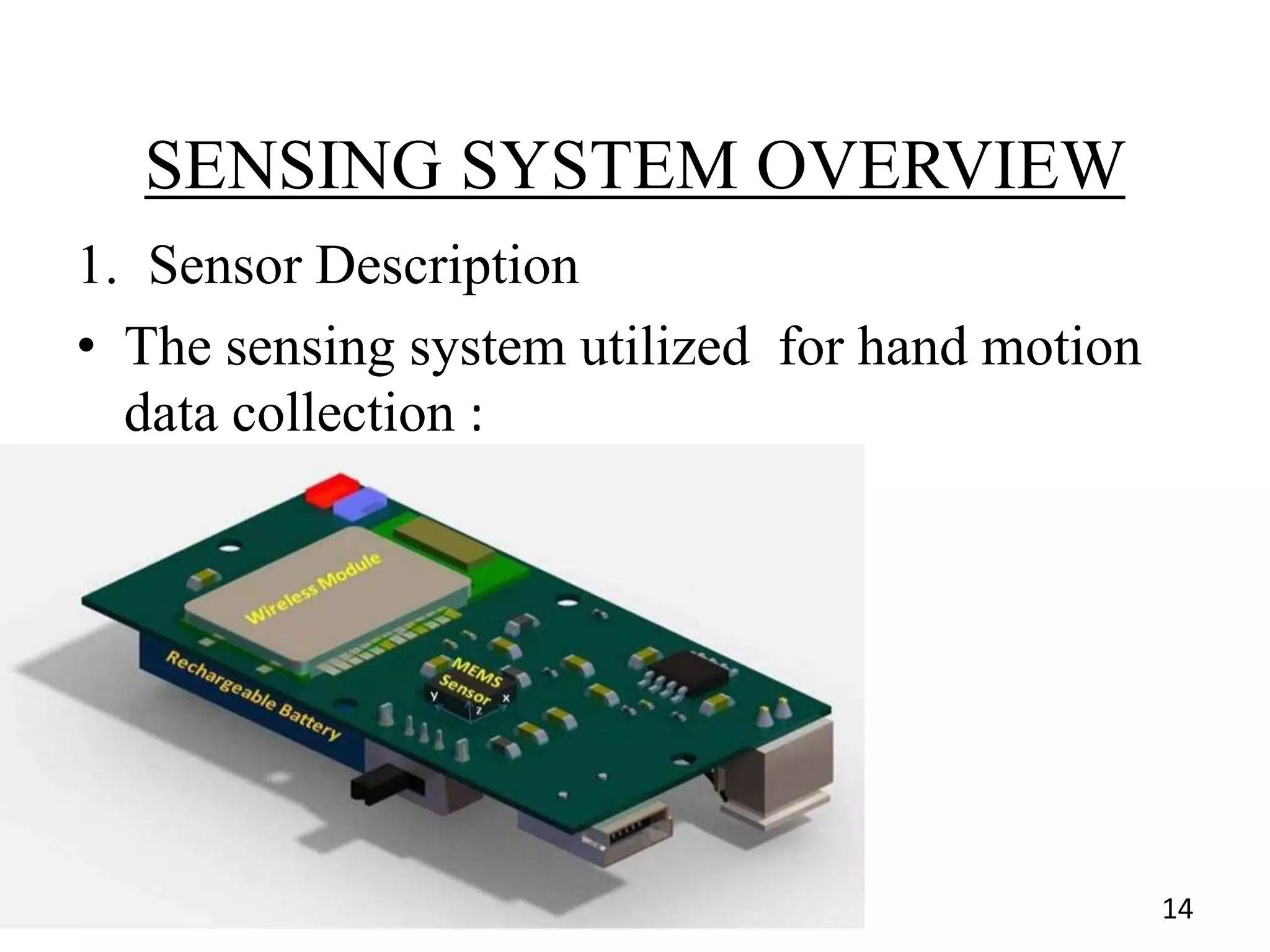

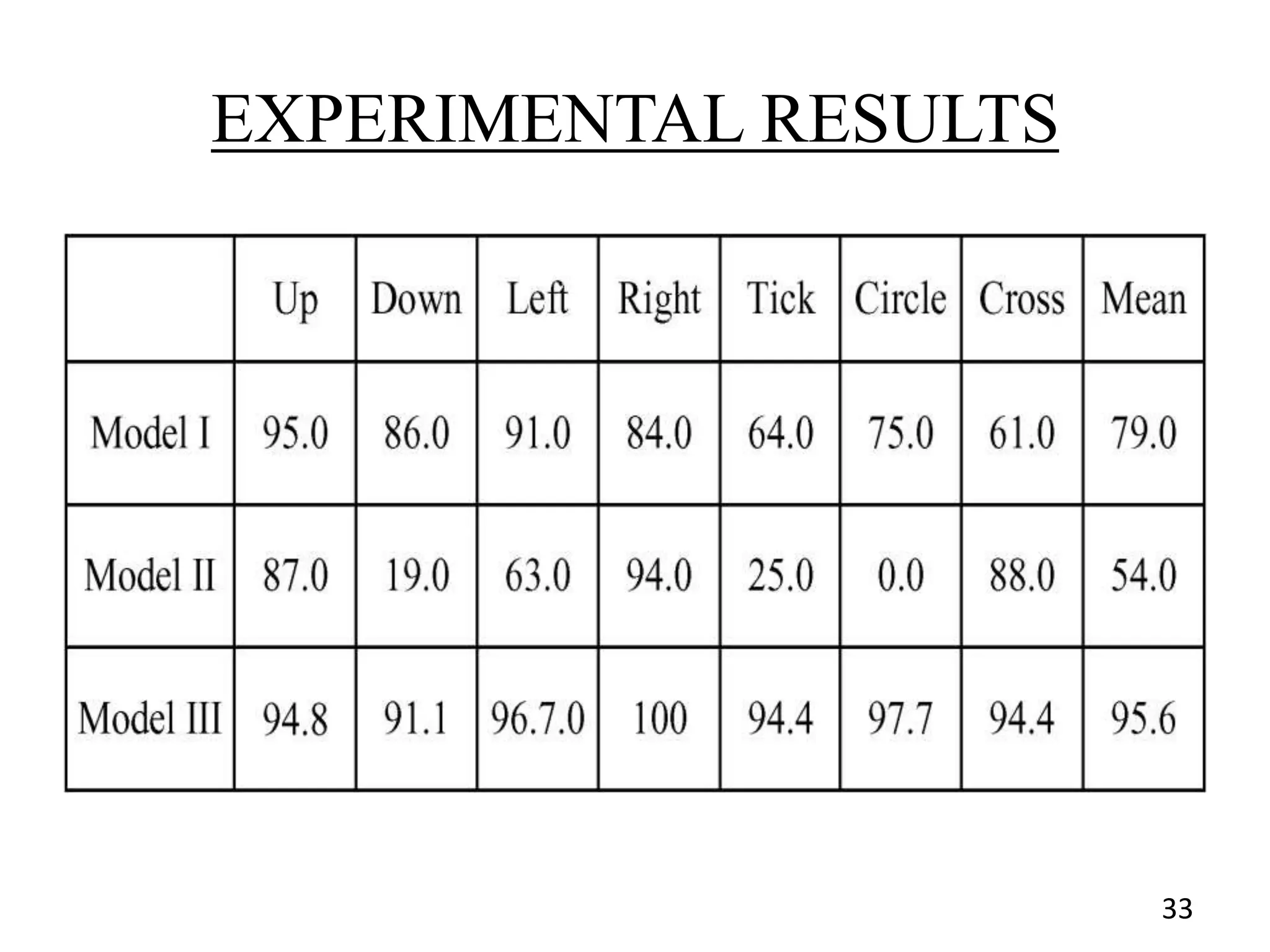

This document presents three methods for hand gesture recognition using MEMS accelerometer sensors:

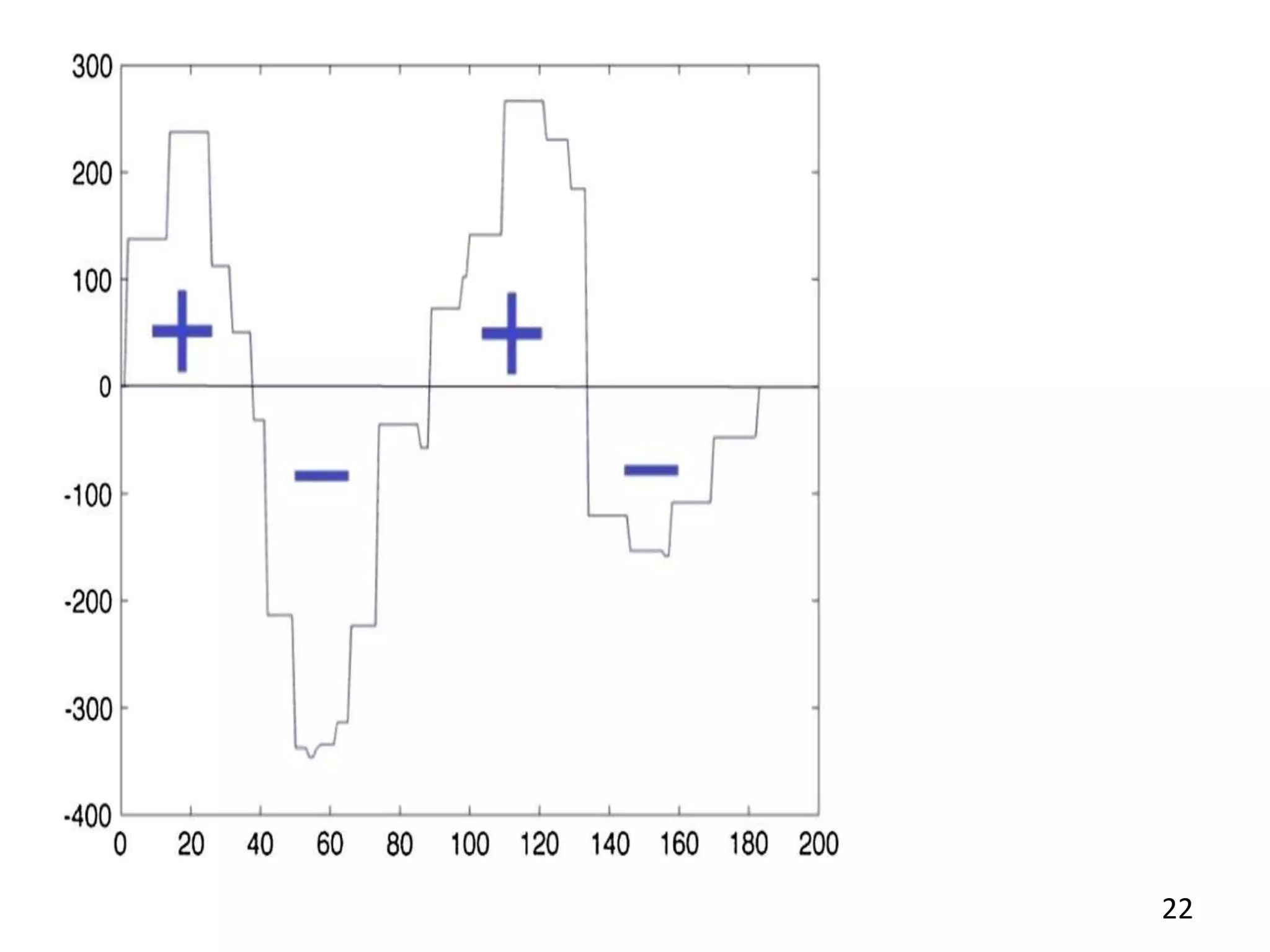

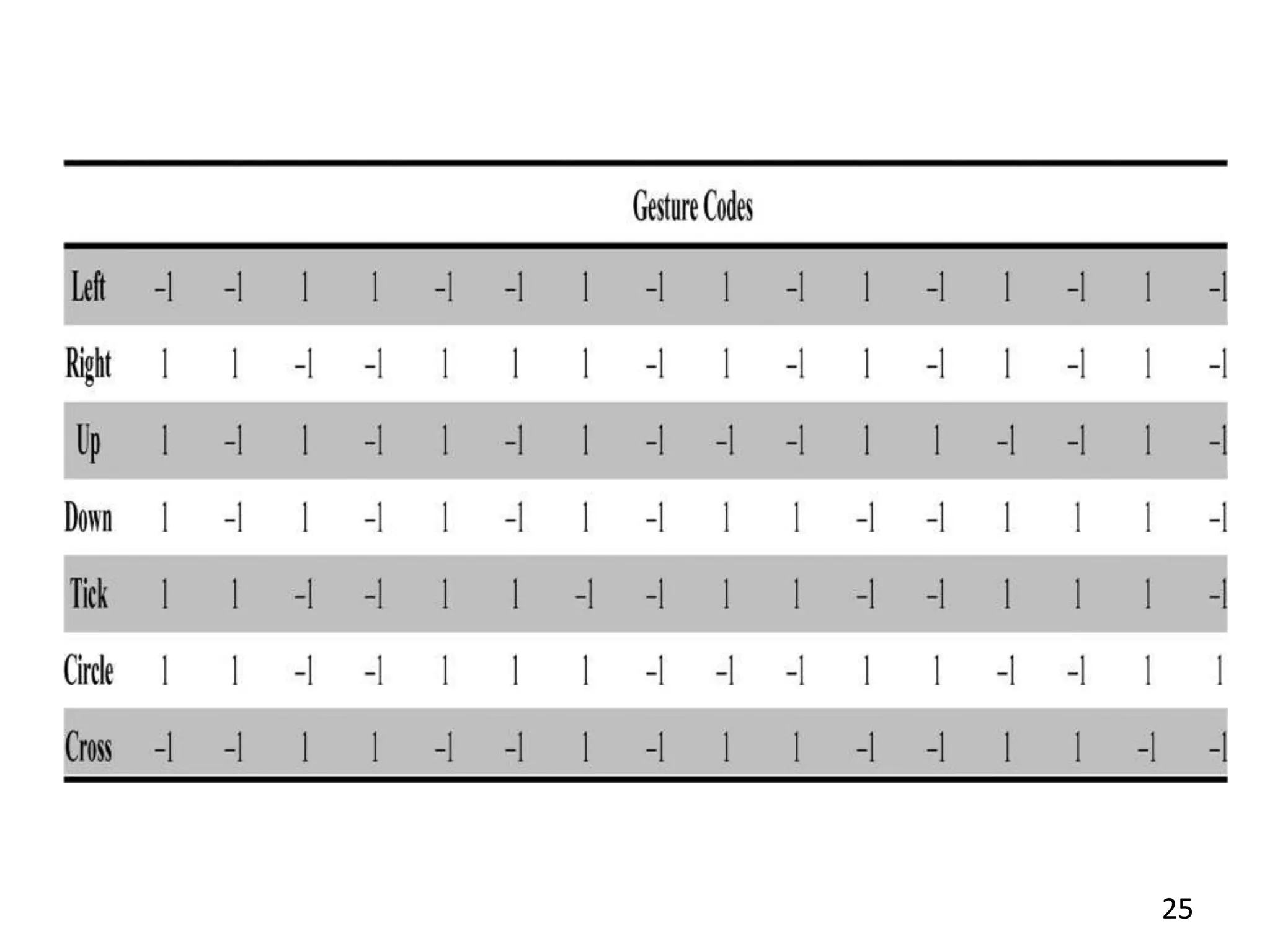

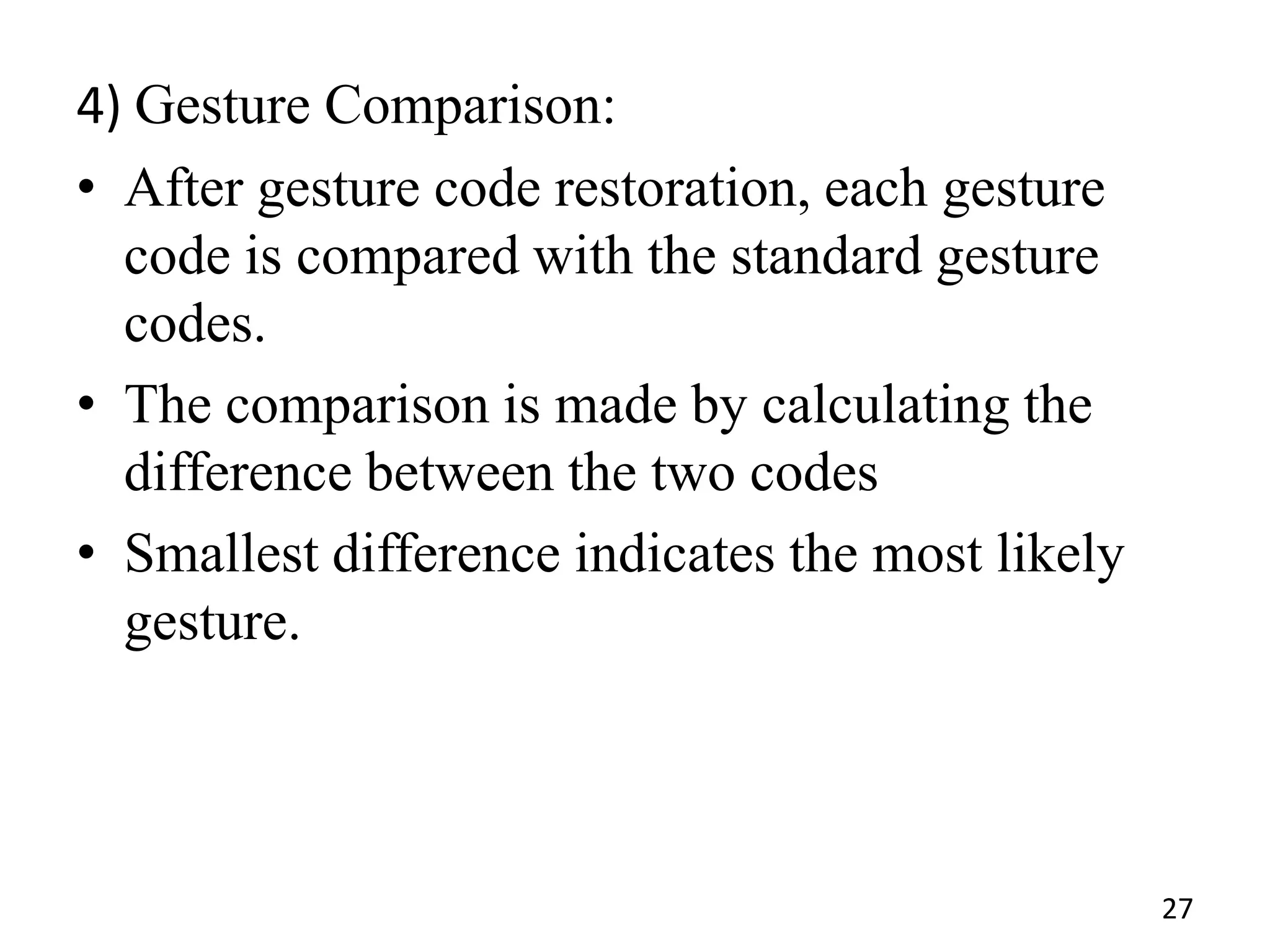

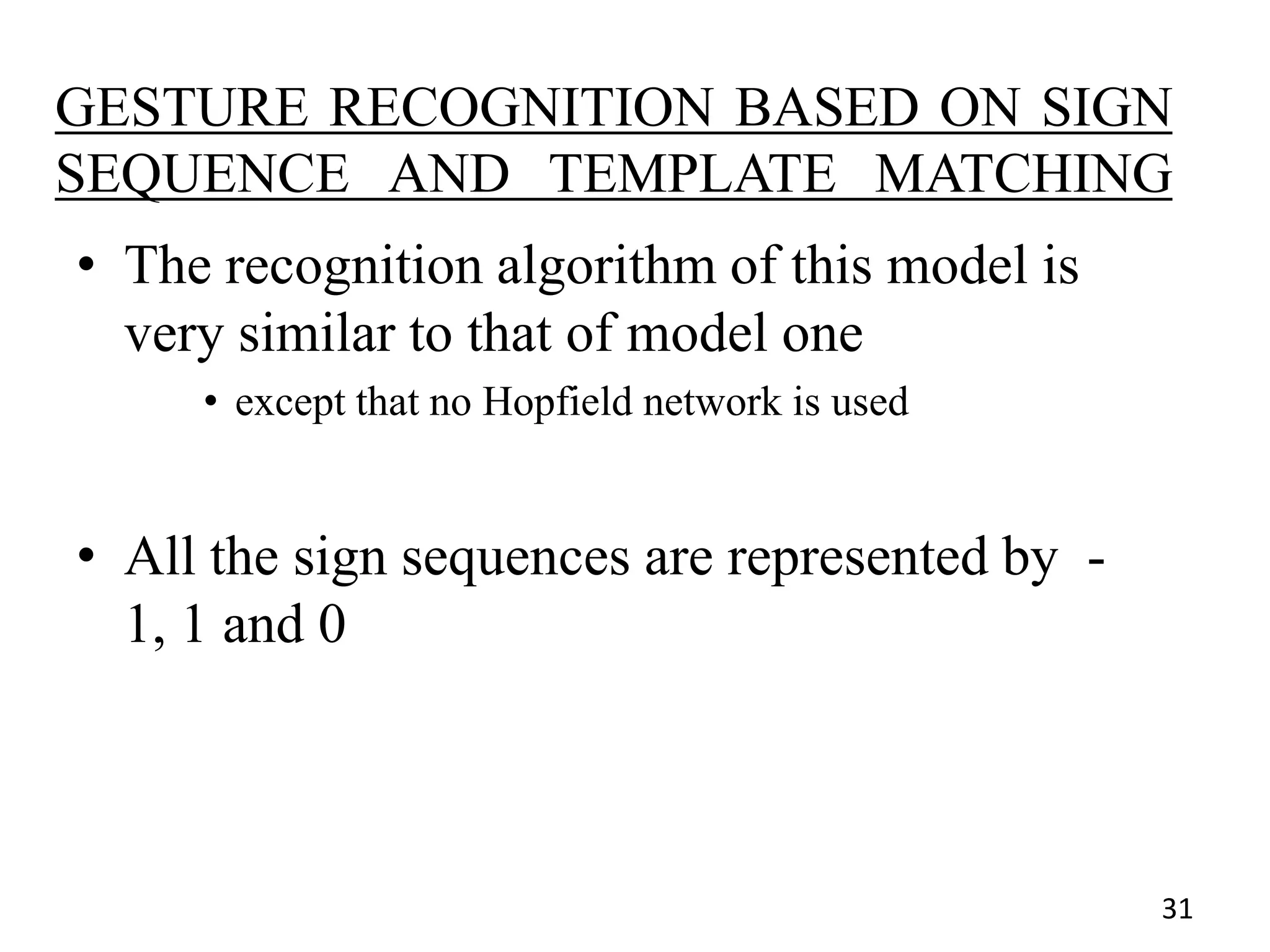

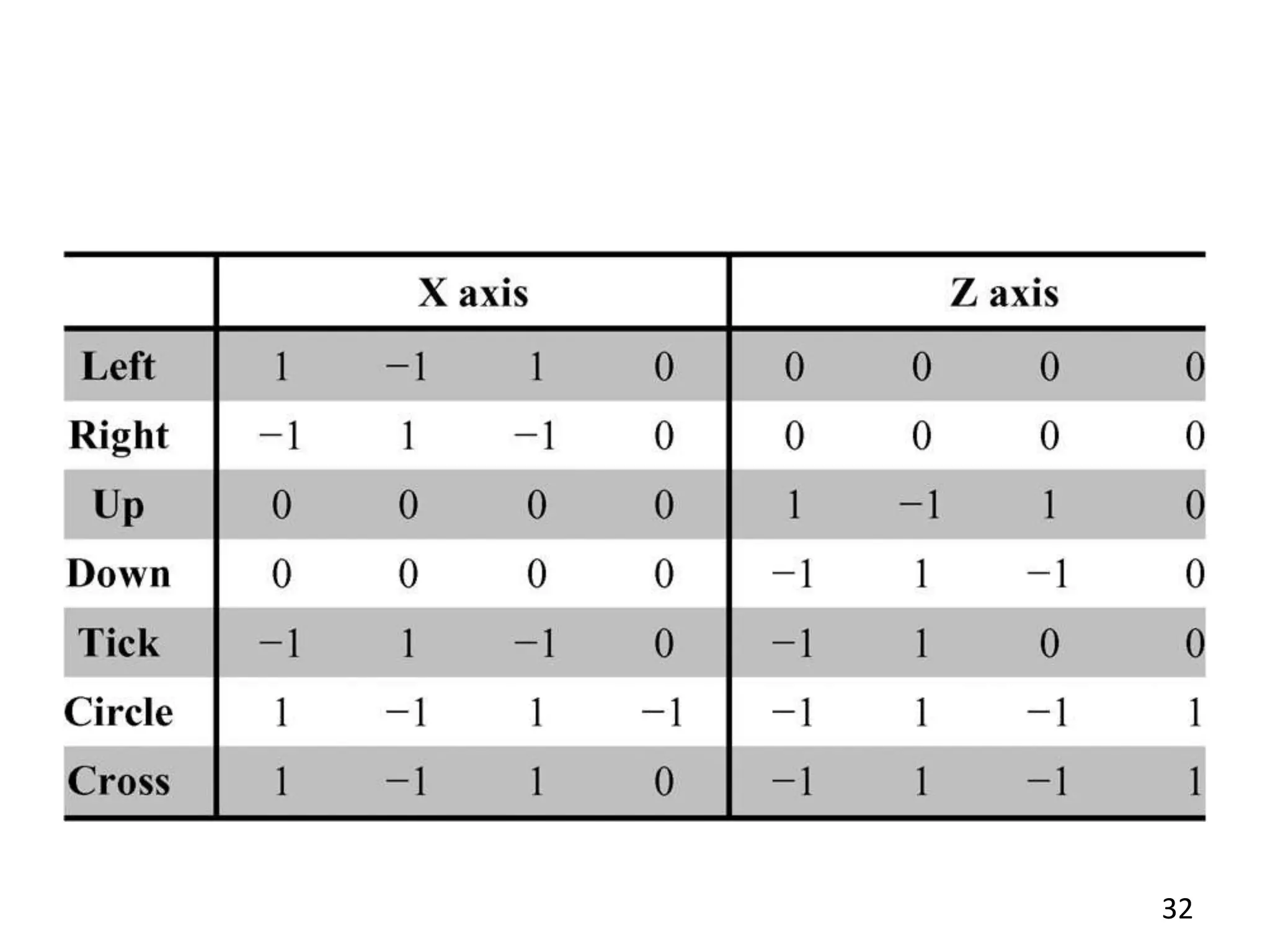

1) A sign-sequence and Hopfield network model, which extracts features from the acceleration data, encodes each gesture as a sign sequence, and recognizes it with a Hopfield network.

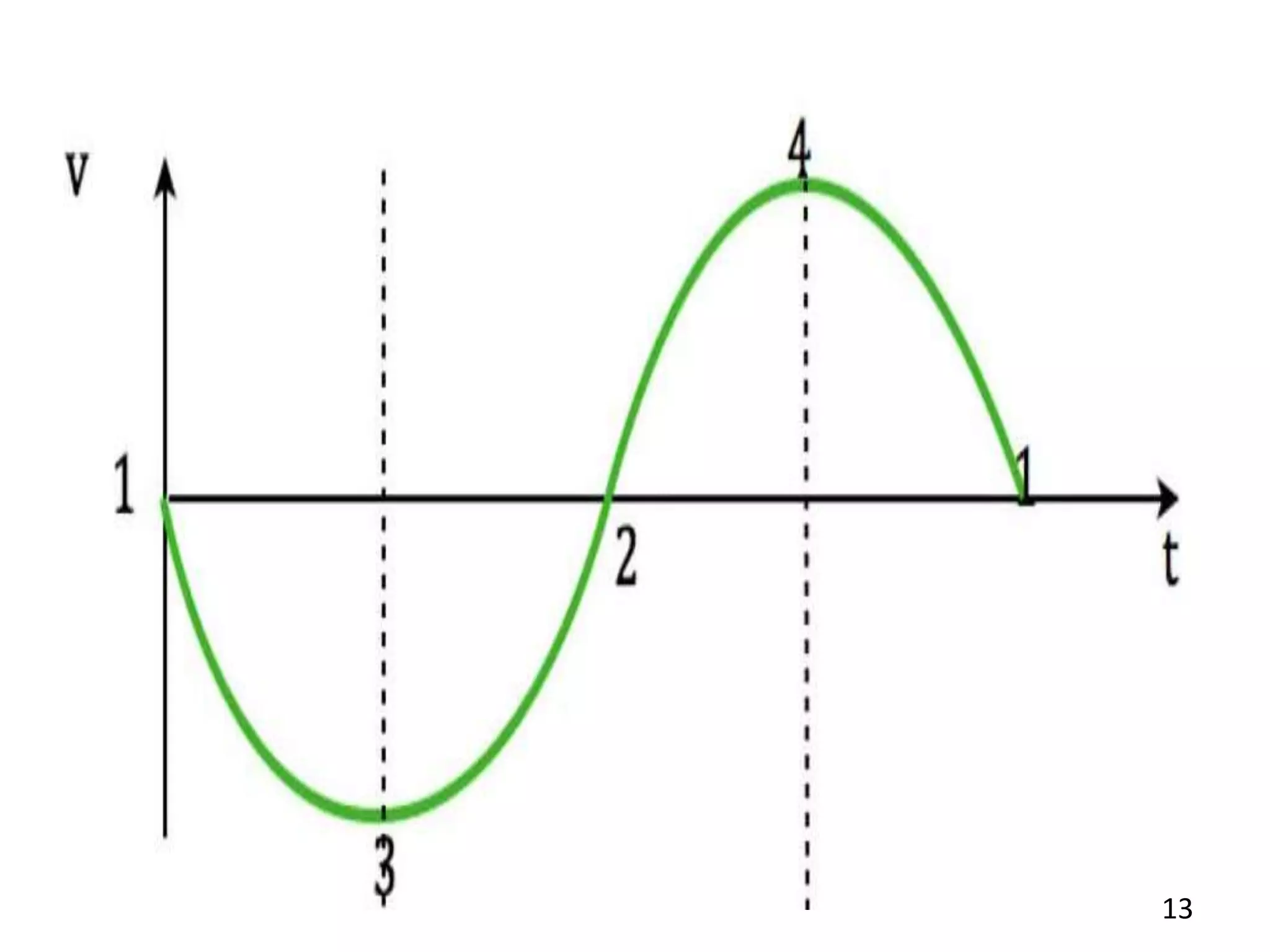

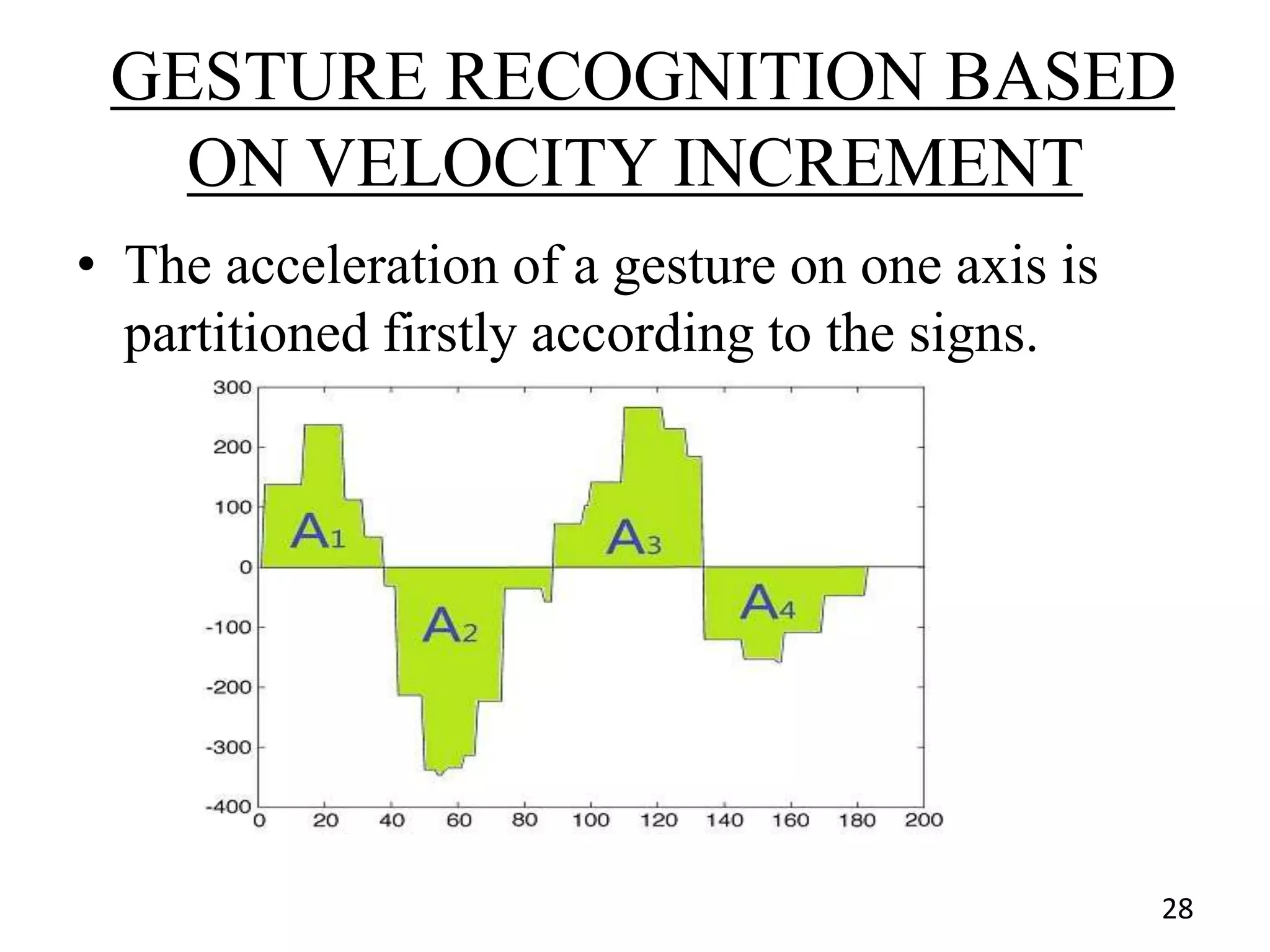

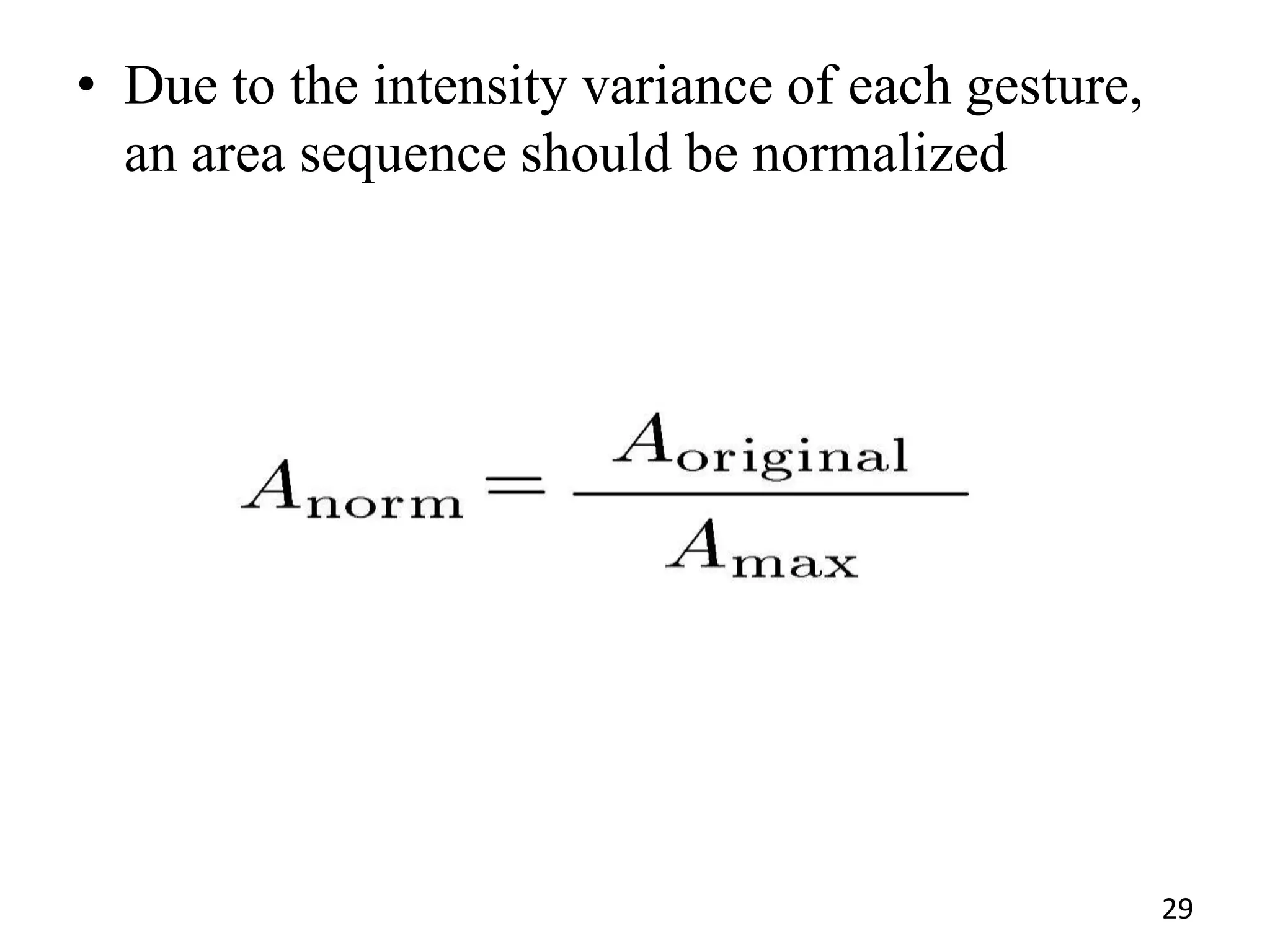

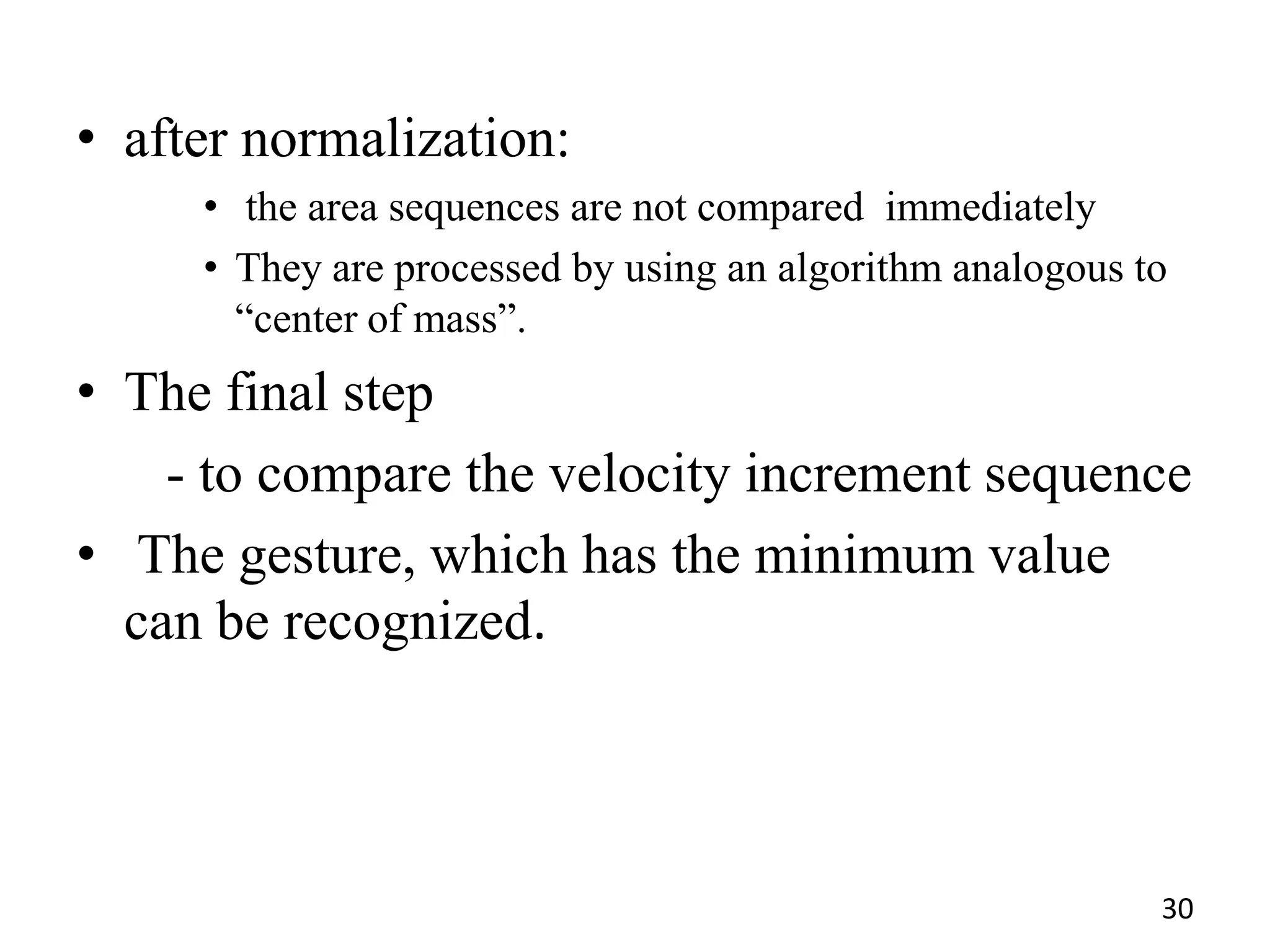

2) A velocity increment model, which normalizes the acceleration data according to its sign and compares gestures by their calculated velocity increments.

3) A sign-sequence and template matching model, similar to the first but using template matching instead of a Hopfield network.

In the experiments, the third model achieved the highest recognition accuracy. The research aims to enable natural human-computer interaction through gesture recognition with low-cost MEMS sensors.
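The sign-sequence front end shared by the first and third models, and the two ways of classifying its output, can be sketched as follows. This is a minimal illustration under assumptions the slides do not state: each axis is quantized to -1/0/+1 with a hypothetical dead-zone `threshold`, repeated signs are merged, each axis's sequence is padded or truncated to a hypothetical `length` of slots, and each slot is written as two bipolar bits (`SLOT_CODE`). The four gestures in `TEMPLATES` are invented for the example. Template matching picks the nearest template by Hamming distance. The Hopfield variant first runs asynchronous recall and then does the same lookup; note that it uses the projection (pseudo-inverse) learning rule rather than the plain Hebbian rule, because these templates share most of their bits and, with Hebbian weights, they are not stable states.

```python
from typing import Dict, List, Sequence

# Hypothetical bipolar code per slot: +1, -1, or 0 (empty/padding).
SLOT_CODE = {1: (1, -1), -1: (-1, 1), 0: (-1, -1)}


def sign_sequence(samples: Sequence[float], threshold: float = 0.2) -> List[int]:
    """Quantize one axis to -1/0/+1, ignore the dead zone, merge repeated signs."""
    seq: List[int] = []
    for a in samples:
        s = 1 if a > threshold else -1 if a < -threshold else 0
        if s != 0 and (not seq or seq[-1] != s):
            seq.append(s)
    return seq


def to_bipolar(seqs: Sequence[Sequence[int]], length: int = 2) -> List[int]:
    """Pad/truncate each axis's sign sequence to `length` slots, then concatenate."""
    vec: List[int] = []
    for seq in seqs:
        for s in (list(seq) + [0] * length)[:length]:
            vec.extend(SLOT_CODE[s])
    return vec


def encode(axes: Sequence[Sequence[float]]) -> List[int]:
    return to_bipolar([sign_sequence(samples) for samples in axes])


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    return sum(x != y for x, y in zip(a, b))


def match_template(probe: Sequence[int], templates: Dict[str, List[int]]) -> str:
    """Template matching: the nearest stored sign-sequence code wins."""
    return min(templates, key=lambda g: hamming(probe, templates[g]))


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gauss-Jordan elimination with partial pivoting (small dense systems only)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


class Hopfield:
    """Hopfield net with the projection rule W = X^T (X X^T)^-1 X (swapped in for Hebbian)."""

    def __init__(self, patterns: List[List[int]]):
        self.x = patterns
        self.gram = [[sum(pi * qi for pi, qi in zip(p, q)) for q in patterns] for p in patterns]

    def field(self, s: List[int]) -> List[float]:
        # W s without forming W: solve (X X^T) c = X s, then h = X^T c.
        c = solve(self.gram, [sum(pi * si for pi, si in zip(p, s)) for p in self.x])
        return [sum(ck * p[i] for ck, p in zip(c, self.x)) for i in range(len(s))]

    def recall(self, probe: Sequence[int], sweeps: int = 10) -> List[int]:
        """Asynchronous updates s_i <- sign(h_i) until no unit changes."""
        s = list(probe)
        for _ in range(sweeps):
            changed = False
            for i in range(len(s)):
                h = self.field(s)[i]
                new = 1 if h > 0 else -1 if h < 0 else s[i]
                if new != s[i]:
                    s[i], changed = new, True
            if not changed:
                break
        return s


TEMPLATES = {  # hypothetical gestures; axis order (x, y, z)
    "right": to_bipolar([[1, -1], [], []]),
    "left": to_bipolar([[-1, 1], [], []]),
    "up": to_bipolar([[], [], [1, -1]]),
    "down": to_bipolar([[], [], [-1, 1]]),
}
```

For instance, a rightward stroke such as `[[0.0, 0.6, 1.0, 0.4, -0.5, -1.0, -0.3, 0.0], [0.0] * 8, [0.0] * 8]` encodes to the "right" code, so template matching can use it directly, while the Hopfield variant only adds value when the probe is corrupted and recall can pull it back to a stored code.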

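The velocity increment model can be sketched in the same spirit, again under assumptions the slides leave open: "normalizes based on sign" is read here as scaling positive and negative samples on each axis separately by their largest magnitude; a velocity increment is the sum of one run of same-sign samples times a hypothetical sample period `dt`, with samples inside a hypothetical dead zone `threshold` skipped; and gestures are compared by Euclidean distance to hypothetical templates. The `RIGHT_X` trace and the gesture names are invented for illustration.

```python
import math
from typing import Dict, List, Sequence


def normalize_by_sign(samples: Sequence[float]) -> List[float]:
    """Assumed reading of 'normalize by sign': divide positive samples by the
    largest positive value and negative samples by the largest negative magnitude."""
    pos = max((a for a in samples if a > 0), default=1.0)
    neg = max((-a for a in samples if a < 0), default=1.0)
    return [a / pos if a > 0 else a / neg if a < 0 else 0.0 for a in samples]


def velocity_increments(samples: Sequence[float], dt: float = 0.01,
                        threshold: float = 0.2, slots: int = 2) -> List[float]:
    """Integrate each run of same-sign samples: dv = sum(a) * dt (hypothetical dt)."""
    incs: List[float] = []
    prev = 0
    for a in normalize_by_sign(samples):
        s = 1 if a > threshold else -1 if a < -threshold else 0
        if s == 0:
            continue  # dead-zone samples are left out of the integral
        if s != prev:
            incs.append(0.0)  # sign change: start a new velocity increment
            prev = s
        incs[-1] += a * dt
    return (incs + [0.0] * slots)[:slots]  # fixed-length feature per axis


def features(axes: Sequence[Sequence[float]]) -> List[float]:
    return [dv for samples in axes for dv in velocity_increments(samples)]


def classify(axes: Sequence[Sequence[float]], templates: Dict[str, List[float]]) -> str:
    """Nearest template by Euclidean distance between velocity-increment vectors."""
    f = features(axes)
    return min(templates, key=lambda g: math.dist(f, templates[g]))


RIGHT_X = [0.0, 0.6, 1.0, 0.4, -0.5, -1.0, -0.3, 0.0]  # hypothetical x-axis trace
ZERO = [0.0] * len(RIGHT_X)
TEMPLATES = {  # hypothetical gestures; axis order (x, y, z)
    "right": features([RIGHT_X, ZERO, ZERO]),
    "left": features([[-a for a in RIGHT_X], ZERO, ZERO]),
    "up": features([ZERO, ZERO, RIGHT_X]),
    "down": features([ZERO, ZERO, [-a for a in RIGHT_X]]),
}
```

Because the positive and negative parts of each axis are scaled separately, the same stroke performed with twice the amplitude yields the same feature vector. As sketched, the features still depend on gesture duration, since each increment grows with the number of samples in its run.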