This document covers the basics of building a web crawler, including:

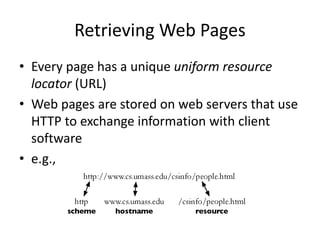

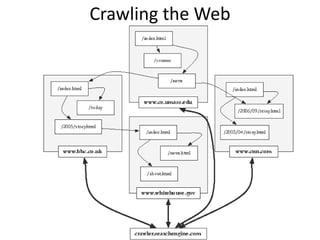

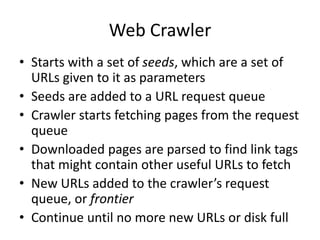

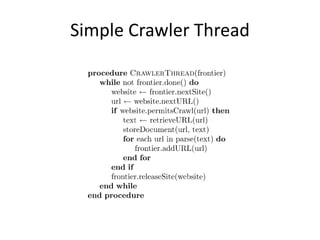

- Crawlers find and download web pages to build the collection that a search engine indexes. They start from a set of seed URLs and follow the links found on each downloaded page.
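
The seed-and-follow loop described above can be sketched roughly as follows. This is a minimal illustration, not the document's own implementation: the names `crawl`, `extract_links`, and `LinkExtractor` are hypothetical, and `fetch` is an assumed callable supplied by the caller that returns a page's HTML or `None` on failure, so the sketch runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href values of <a> tags (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(base_url, html):
    """Return absolute URLs for the links in html, with #fragments removed."""
    parser = LinkExtractor()
    parser.feed(html)
    return [urldefrag(urljoin(base_url, href)).url for href in parser.links]


def crawl(seeds, fetch, max_pages=100):
    """Breadth-first crawl starting from the seed URLs.

    fetch(url) is assumed to return the page HTML as a string, or None
    if the page could not be downloaded.
    """
    frontier = deque(seeds)   # URLs waiting to be downloaded
    seen = set(seeds)         # URLs already queued, to avoid re-adding them
    collection = {}           # url -> downloaded HTML
    while frontier and len(collection) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        collection[url] = html
        for link in extract_links(url, html):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return collection
```

For experimentation, `fetch` can be as simple as the `get` method of a dictionary that maps URLs to HTML strings. A real crawler would replace it with an HTTP client and add the politeness checks discussed in the next point.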

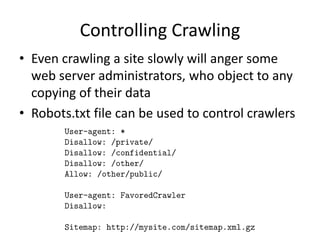

- Crawlers use multiple threads to crawl the web efficiently at scale, and follow politeness policies and robots.txt files so that they do not overload web servers or fetch pages that site owners have excluded.
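
As a rough illustration of the politeness and robots.txt checks mentioned above, the sketch below uses Python's standard `urllib.robotparser`. The class `PolitenessPolicy`, its method names, the `examplebot` user agent, and the one-second default delay are all assumptions made for the example. The robots.txt text is passed in as a string rather than downloaded, and hosts with no loaded robots.txt are treated as allowed, which a production crawler would likely not do. Threading is left out to keep the sketch short.

```python
import time
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


class PolitenessPolicy:
    """Tracks robots.txt rules and a per-host delay (hypothetical helper)."""

    def __init__(self, user_agent, default_delay=1.0, clock=time.monotonic):
        self.user_agent = user_agent
        self.default_delay = default_delay  # seconds between requests to a host
        self.clock = clock                  # injectable so the delay logic is testable
        self.robots = {}                    # host -> RobotFileParser
        self.next_allowed = {}              # host -> earliest time of next request

    def load_robots(self, host, robots_txt):
        """Parse the text of a host's robots.txt file."""
        parser = RobotFileParser()
        parser.parse(robots_txt.splitlines())
        self.robots[host] = parser

    def allowed(self, url):
        """True if robots.txt permits fetching url (assumed True if none loaded)."""
        parser = self.robots.get(urlsplit(url).netloc)
        return parser is None or parser.can_fetch(self.user_agent, url)

    def wait_time(self, url):
        """Seconds to wait before the url's host may be contacted again."""
        host = urlsplit(url).netloc
        return max(0.0, self.next_allowed.get(host, 0.0) - self.clock())

    def record_request(self, url):
        """Note that a request was just sent to the url's host."""
        host = urlsplit(url).netloc
        parser = self.robots.get(host)
        delay = parser.crawl_delay(self.user_agent) if parser else None
        if delay is None:
            delay = self.default_delay
        self.next_allowed[host] = self.clock() + delay
```

A crawl loop would call `allowed(url)` before fetching, sleep for `wait_time(url)` seconds, and call `record_request(url)` after each request. Keeping the delay per host rather than global lets several threads fetch from different hosts at once without any single server seeing rapid requests.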

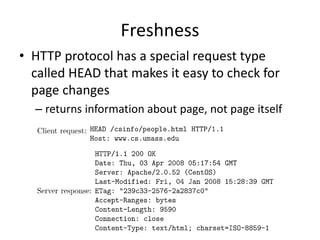

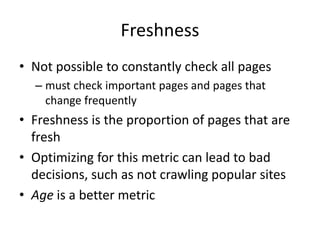

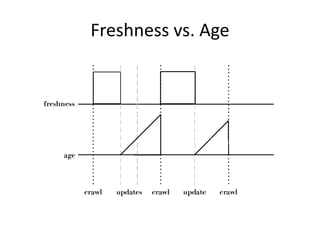

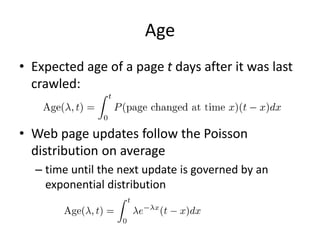

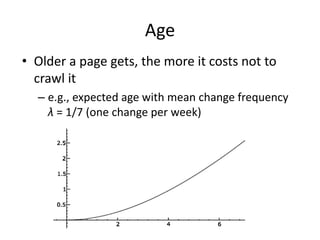

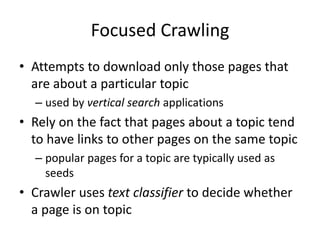

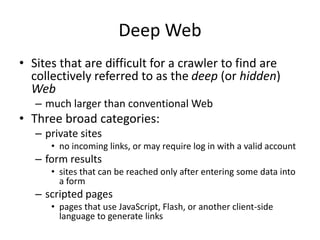

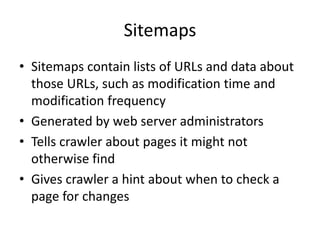

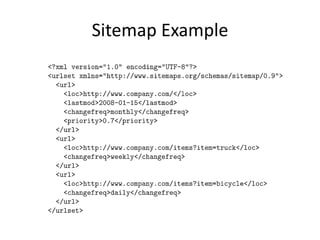

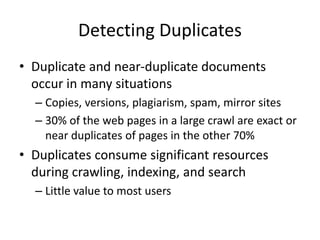

- Crawlers must also handle challenges such as detecting duplicate pages, keeping downloaded pages fresh, focused crawling of specific topics, and reaching deep web or private pages.
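
Of the challenges listed above, duplicate detection is the simplest to illustrate. The sketch below is one possible approach, assuming that two pages count as duplicates when their text is identical after lowercasing and collapsing whitespace. The names `content_fingerprint` and `DuplicateFilter` are hypothetical. Hashing of this kind only catches exact duplicates; near-duplicates, such as the same article with different advertisements, need a similarity-based fingerprint instead.

```python
import hashlib


def content_fingerprint(text):
    """Hash the page text after lowercasing and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


class DuplicateFilter:
    """Remembers the first URL seen for each distinct page content."""

    def __init__(self):
        self.first_url = {}  # fingerprint -> URL where the content was first seen

    def check(self, url, text):
        """Return the URL of an earlier page with the same content, or None if new."""
        fingerprint = content_fingerprint(text)
        if fingerprint in self.first_url:
            return self.first_url[fingerprint]
        self.first_url[fingerprint] = url
        return None
```

A crawler could call `check` on each downloaded page and skip indexing when it returns an earlier URL.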