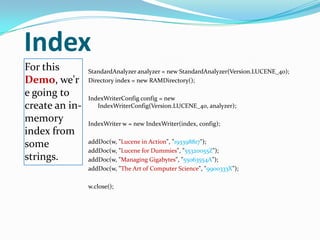

This document provides an overview of searching and Apache Lucene. It discusses what a search engine is and how it builds an index and answers queries. It then describes Apache Lucene as a high-performance Java-based search engine library. Key features of Lucene like its powerful query syntax, relevance ranking, and flexibility are outlined. Examples of indexing and searching code in Lucene are also provided. The document concludes with a discussion of Lucene's scalability and how it can handle increasing query rates, index sizes, and update rates.

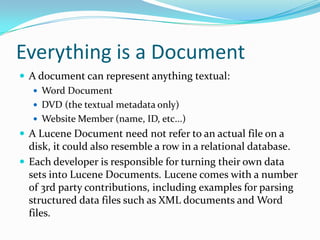

![Lucene Query Example

JUGNagpur

JUGNagpur AND Lucene +JUGNagpur +Lucene

JUGNagpur OR Lucene

JUGNagpur NOT PHP +JUGNagpur -PHP

“Java Conference”

Title: Lucene

J?GNagpur

JUG*

schmidt~ schmidt, schmit, schmitt

price: [100 TO 500]](https://image.slidesharecdn.com/apacheluceneautosaved-130528223110-phpapp02/85/Apache-lucene-11-320.jpg)

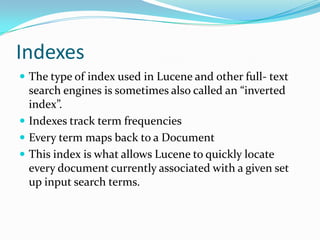

![Query

We read the

query from

stdin, parse

it and build

a lucene

Query out

of it.

String querystr = args.length > 0 ? args[0] : "lucene";

Query q = new

QueryParser(Version.LUCENE_40, "title", analyzer).parse(queryst

r);](https://image.slidesharecdn.com/apacheluceneautosaved-130528223110-phpapp02/85/Apache-lucene-14-320.jpg)

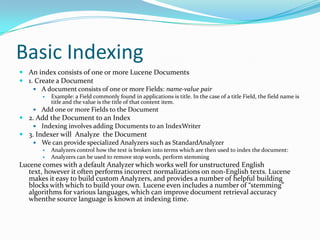

![Search

Using the

Query we

create a

Searcher to

search the

index.

Then a

TopScoreDocC

ollector is

instantiated to

collect the top

10 scoring hits.

int hitsPerPage = 10;

IndexReader reader = IndexReader.open(index);

IndexSearcher searcher = new IndexSearcher(reader);

TopScoreDocCollector collector = TopScoreDocCollector.create(hitsPerPage,

true);

searcher.search(q, collector);

ScoreDoc[] hits = collector.topDocs().scoreDocs;](https://image.slidesharecdn.com/apacheluceneautosaved-130528223110-phpapp02/85/Apache-lucene-15-320.jpg)

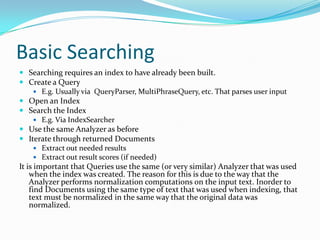

![Display

Now that we

have results

from our

search, we

display the

results to

the user.

System.out.println("Found " + hits.length + " hits.");

for(int i=0;i<hits.length;++i) {

int docId = hits[i].doc;

Document d = searcher.doc(docId);

System.out.println((i + 1) + ". " + d.get("isbn") + "t" +

d.get("title"));

}](https://image.slidesharecdn.com/apacheluceneautosaved-130528223110-phpapp02/85/Apache-lucene-16-320.jpg)