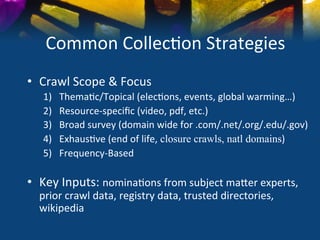

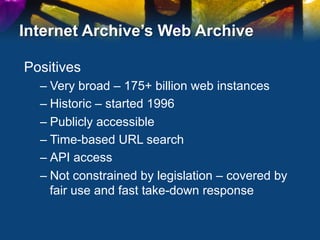

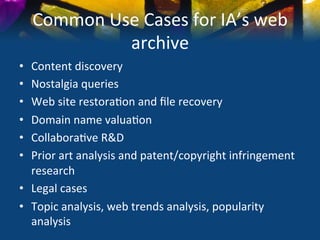

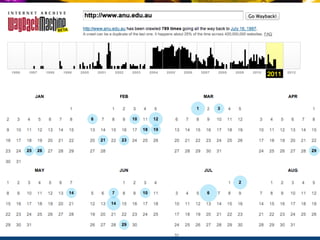

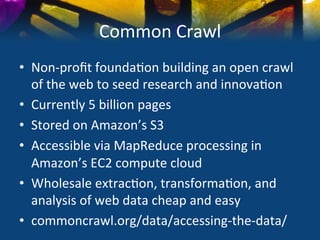

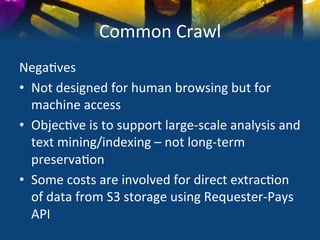

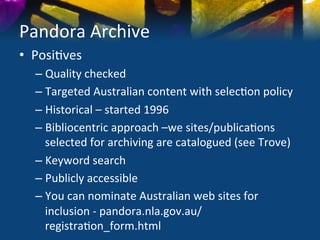

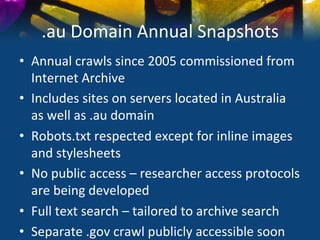

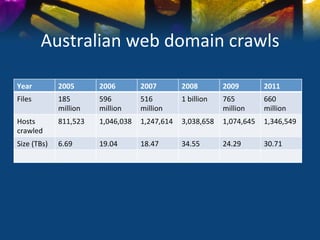

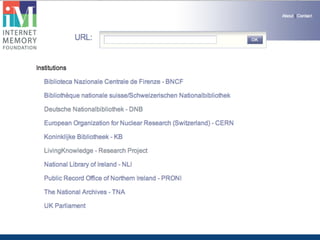

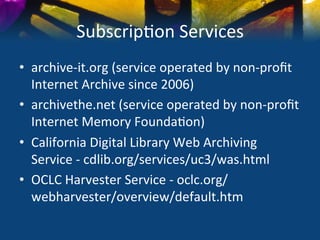

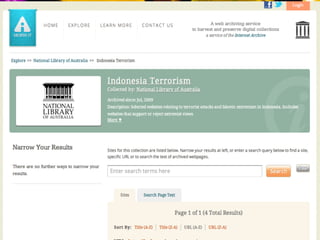

This document discusses strategies and resources for archiving internet content for research purposes. It describes existing large-scale web archives, such as the Internet Archive and Common Crawl, as well as national and institutional archives. It also outlines how researchers can build their own targeted web archives using open-source tools or subscription-based services.