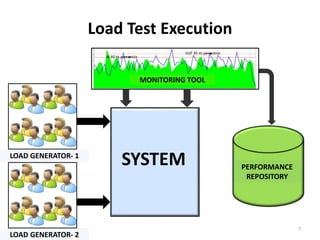

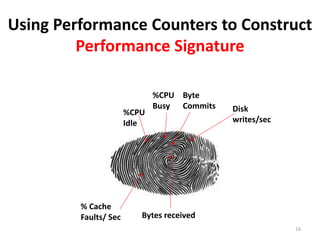

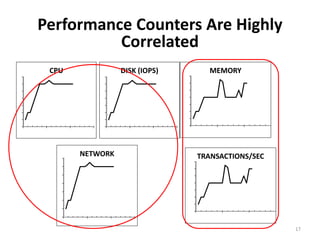

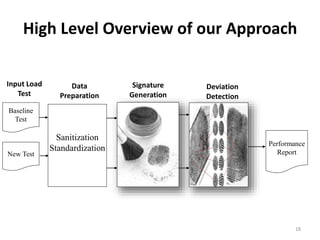

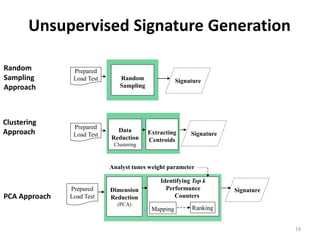

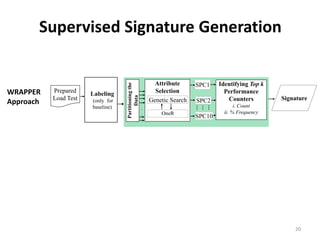

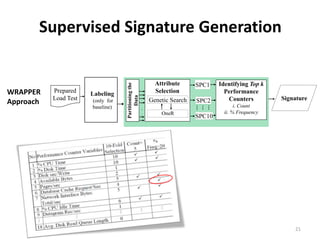

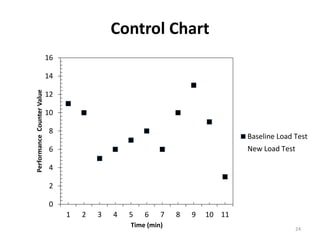

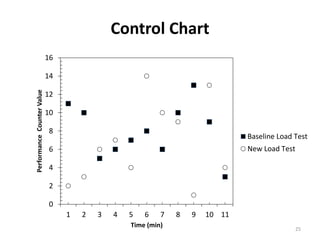

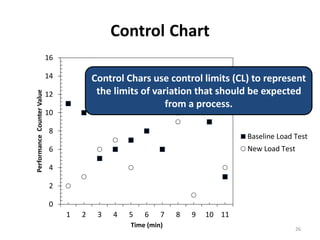

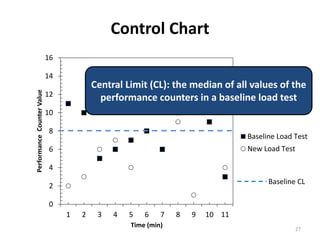

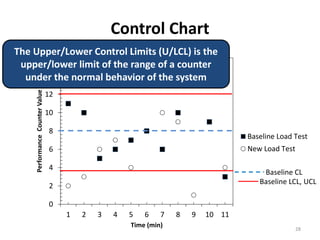

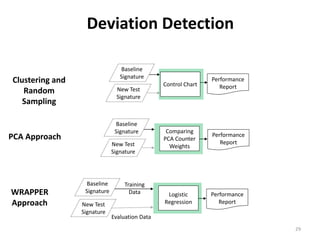

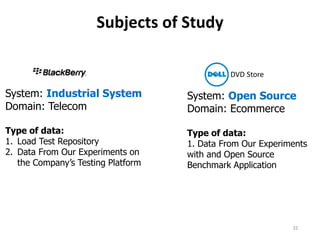

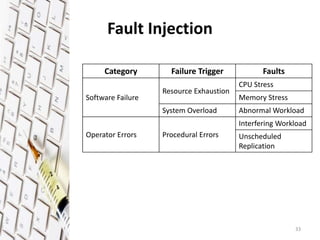

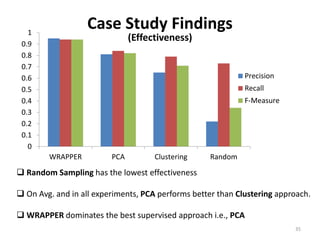

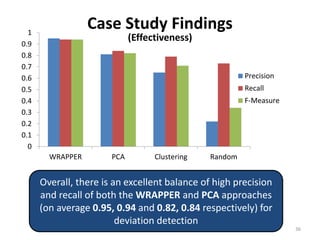

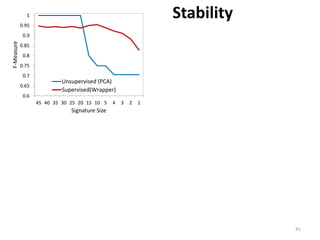

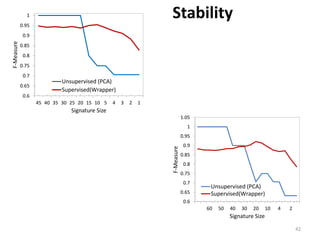

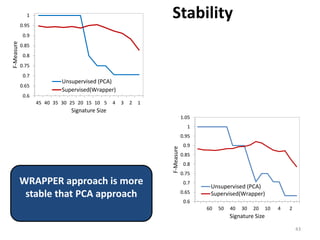

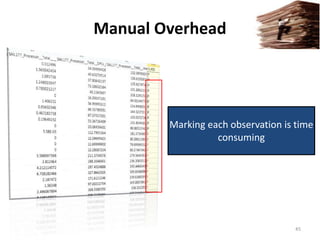

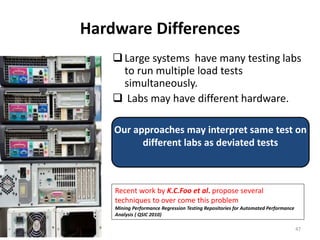

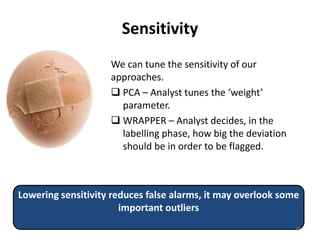

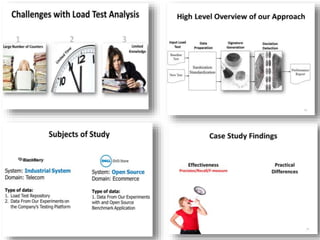

The document describes approaches for automatically detecting performance deviations in load tests of large-scale systems. It presents four approaches: three unsupervised (clustering, random sampling, and PCA) and one supervised (WRAPPER). A case study evaluates them on an open-source e-commerce system and an industrial telecom system and finds the supervised WRAPPER approach and the unsupervised PCA approach most effective, both with high precision and recall. The WRAPPER approach allows real-time analysis but requires more manual overhead during training.
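To make the idea of a PCA-based check more concrete, here is a minimal sketch of one plausible way to compare a new load test against a baseline. It is not the procedure from the slides: the function names (`fit_pca`, `residual_error`, `deviation_ratio`, `simulate`), the four synthetic counters, the single retained component, and the 99th-percentile threshold are all assumptions chosen for illustration. The sketch fits PCA on baseline performance counters and flags samples in a new test whose reconstruction error exceeds what the baseline itself shows.

```python
import numpy as np


def fit_pca(baseline, n_components):
    """Fit PCA on a baseline run (rows: time samples, columns: counters)."""
    mean = baseline.mean(axis=0)
    std = baseline.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant counters
    z = (baseline - mean) / std
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(z, full_matrices=False)
    return mean, std, vt[:n_components]


def residual_error(samples, mean, std, components):
    """Squared error of each sample after projecting onto the PCA subspace."""
    z = (samples - mean) / std
    reconstructed = z @ components.T @ components
    return ((z - reconstructed) ** 2).sum(axis=1)


def deviation_ratio(baseline, new_test, n_components=1, quantile=0.99):
    """Fraction of new-test samples whose error exceeds the baseline threshold."""
    mean, std, comps = fit_pca(baseline, n_components)
    baseline_err = residual_error(baseline, mean, std, comps)
    threshold = np.quantile(baseline_err, quantile)  # assumed cut-off
    new_err = residual_error(new_test, mean, std, comps)
    return float((new_err > threshold).mean())


# Synthetic data (an assumption for illustration): one load factor drives
# four hypothetical counters, e.g. CPU, memory, disk, and network.
rng = np.random.default_rng(0)


def simulate(n, leak=0.0):
    load = rng.uniform(0.0, 1.0, size=(n, 1))
    weights = np.array([[1.0, 0.8, 0.5, 0.3]])
    data = load @ weights + rng.normal(0.0, 0.02, size=(n, 4))
    # Optional drift in the second counter that is unrelated to load.
    data[:, 1] += leak * np.linspace(0.0, 1.0, n)
    return data


baseline = simulate(500)
healthy_ratio = deviation_ratio(baseline, simulate(500))
leaky_ratio = deviation_ratio(baseline, simulate(500, leak=1.0))
print(f"healthy run flagged: {healthy_ratio:.2%}")
print(f"leaky run flagged:   {leaky_ratio:.2%}")
```

In this toy setup, a run that behaves like the baseline should have only a small fraction of samples flagged, while a run with a counter drifting independently of load should have most of its samples flagged. A real load test would need far more counters and a threshold tuned to the system under test.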