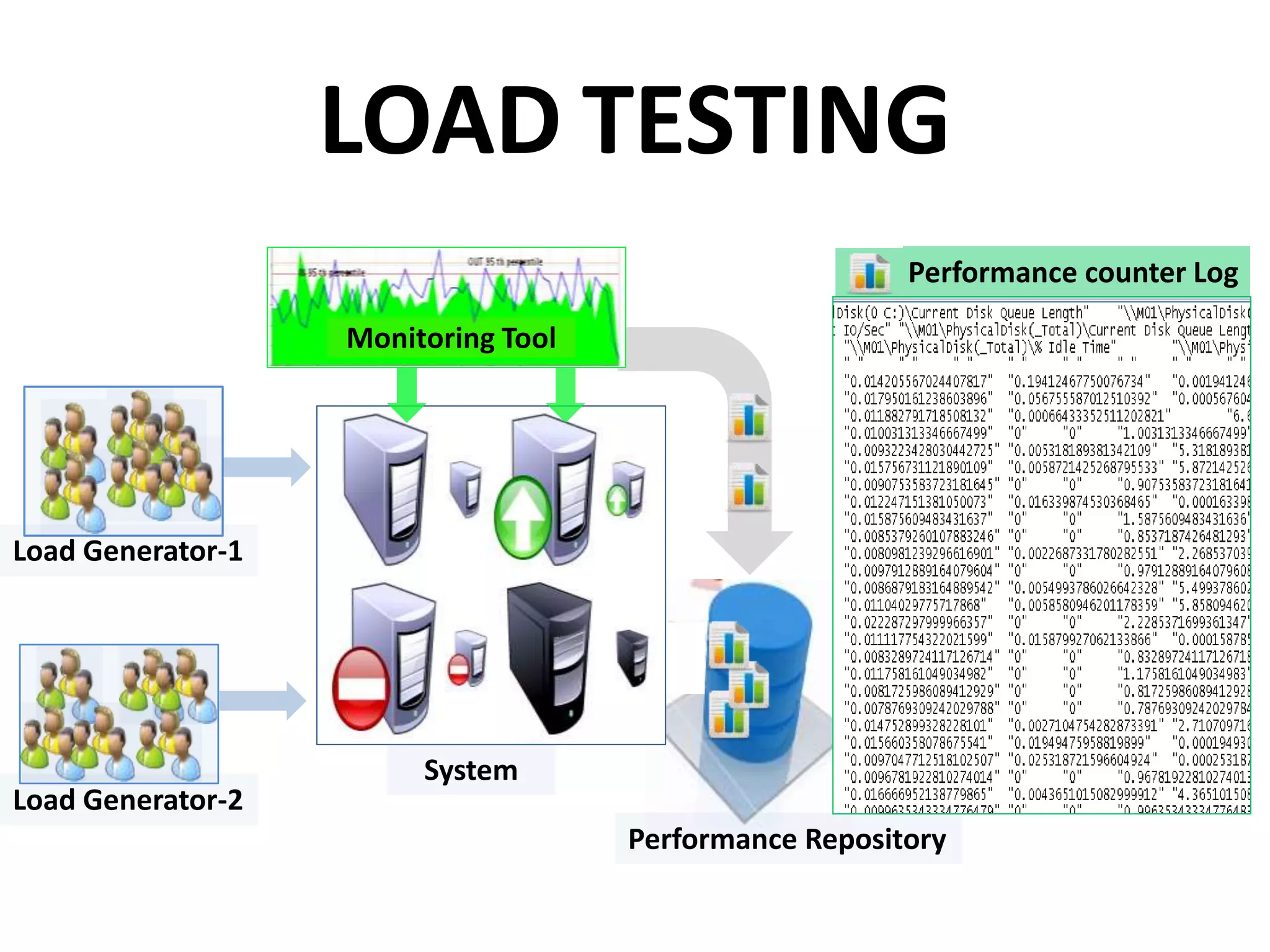

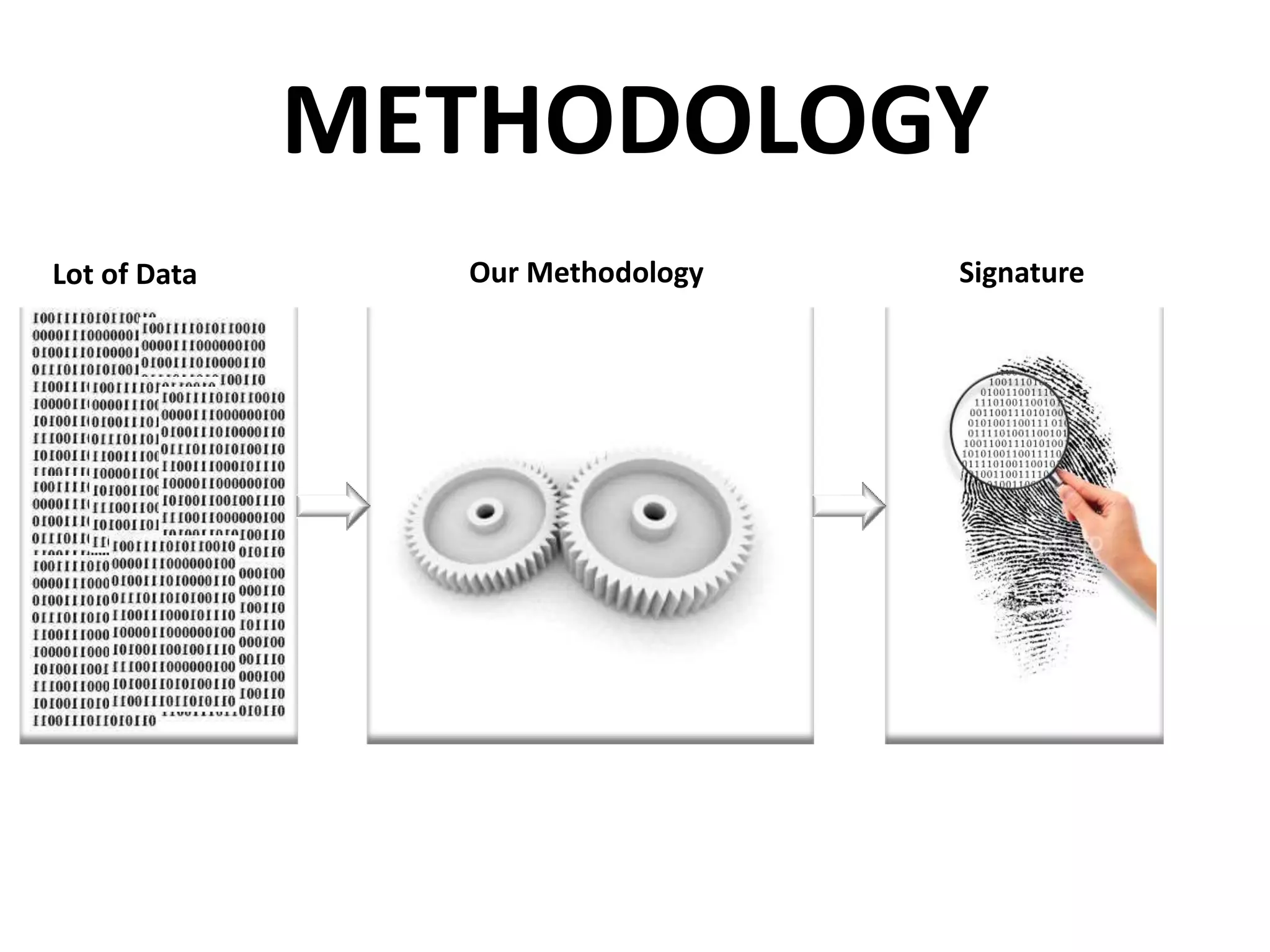

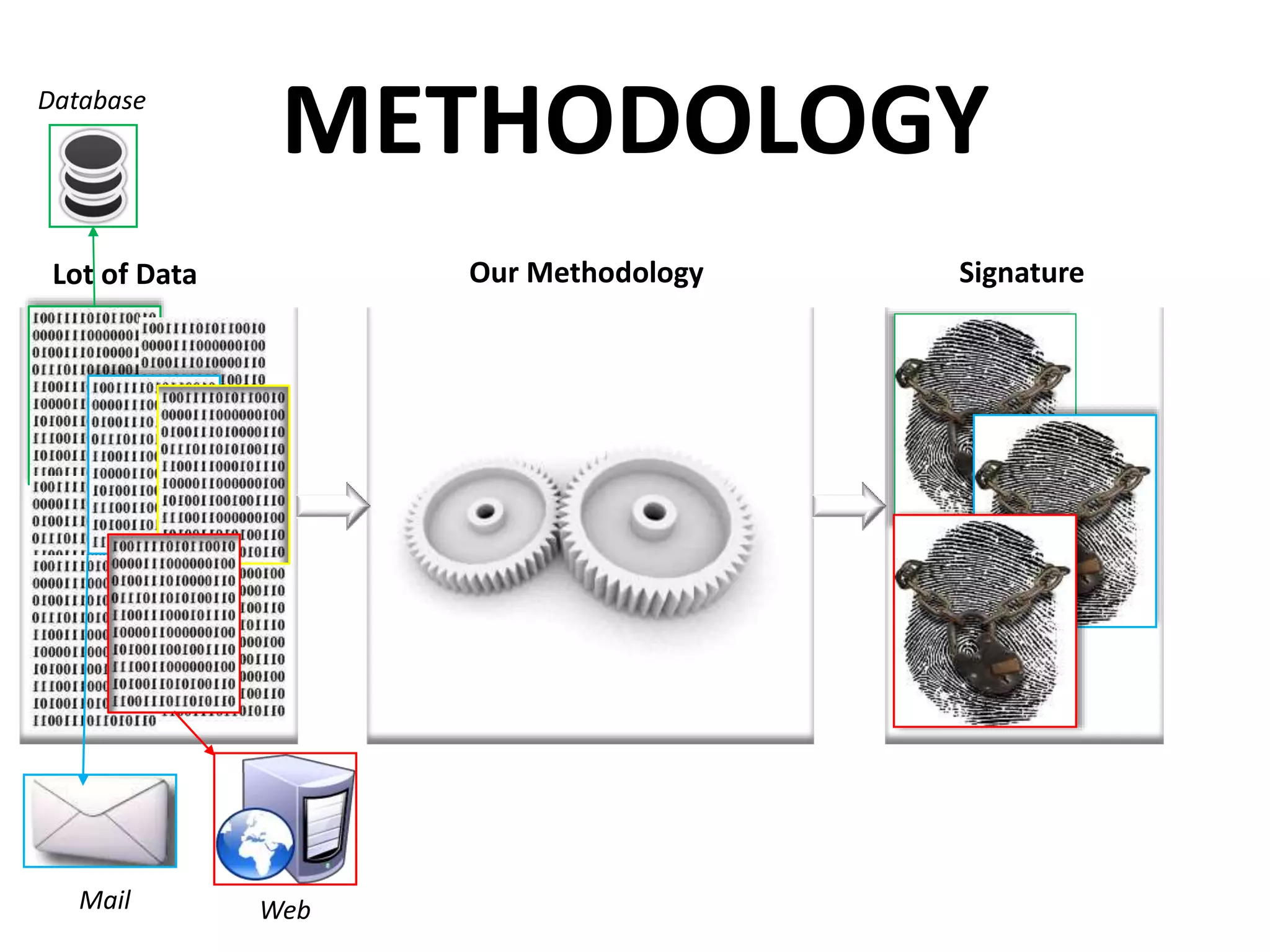

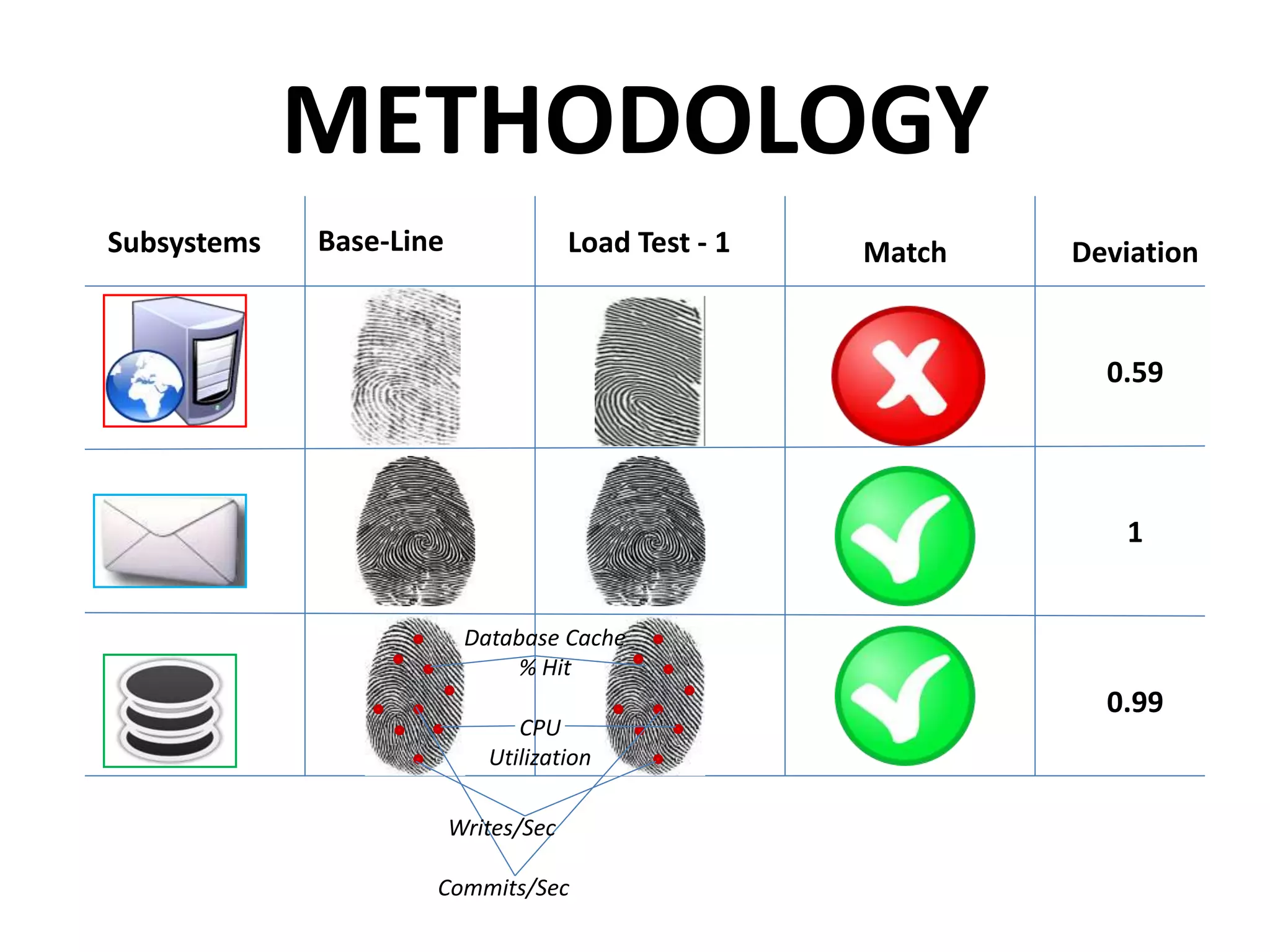

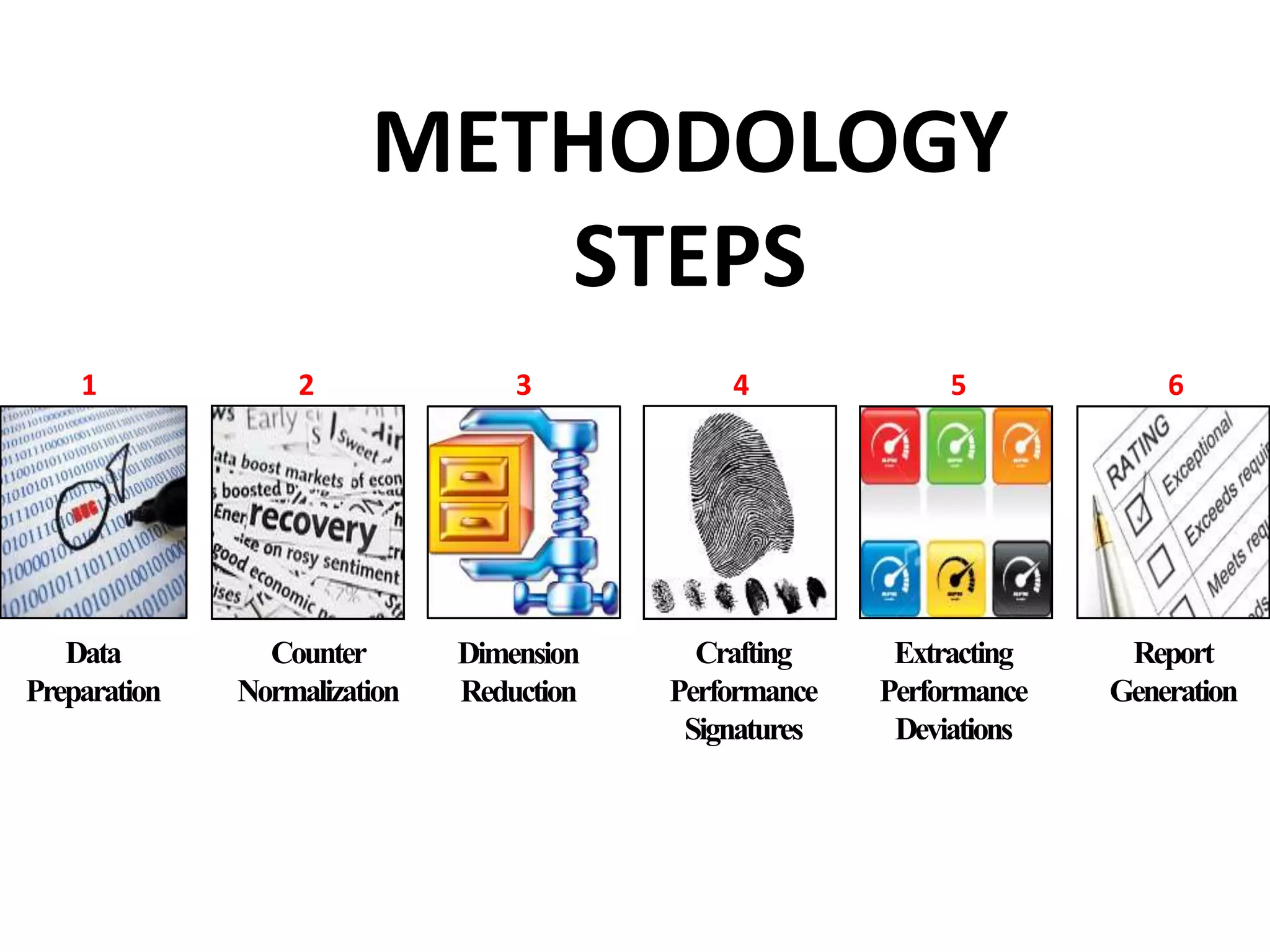

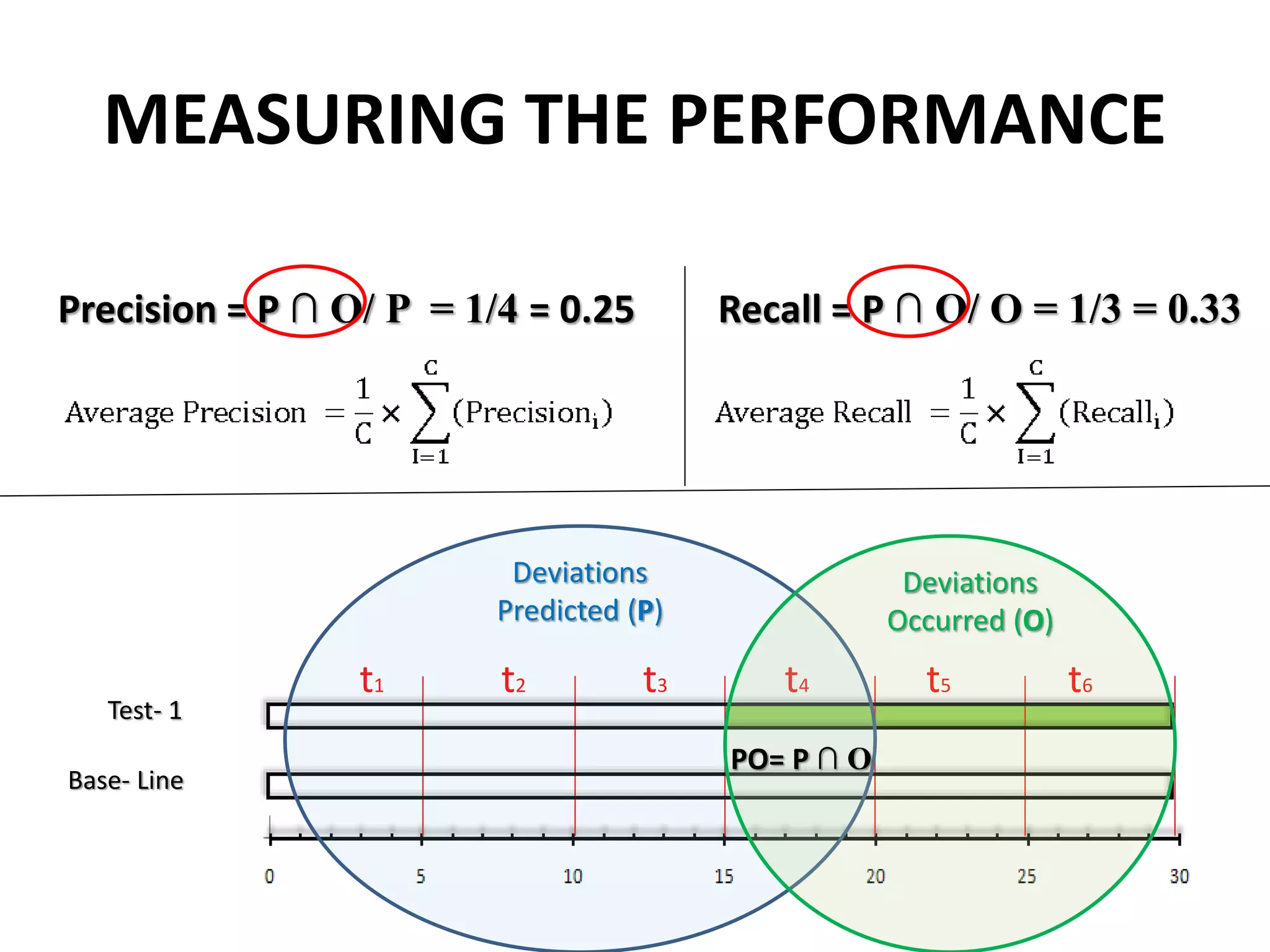

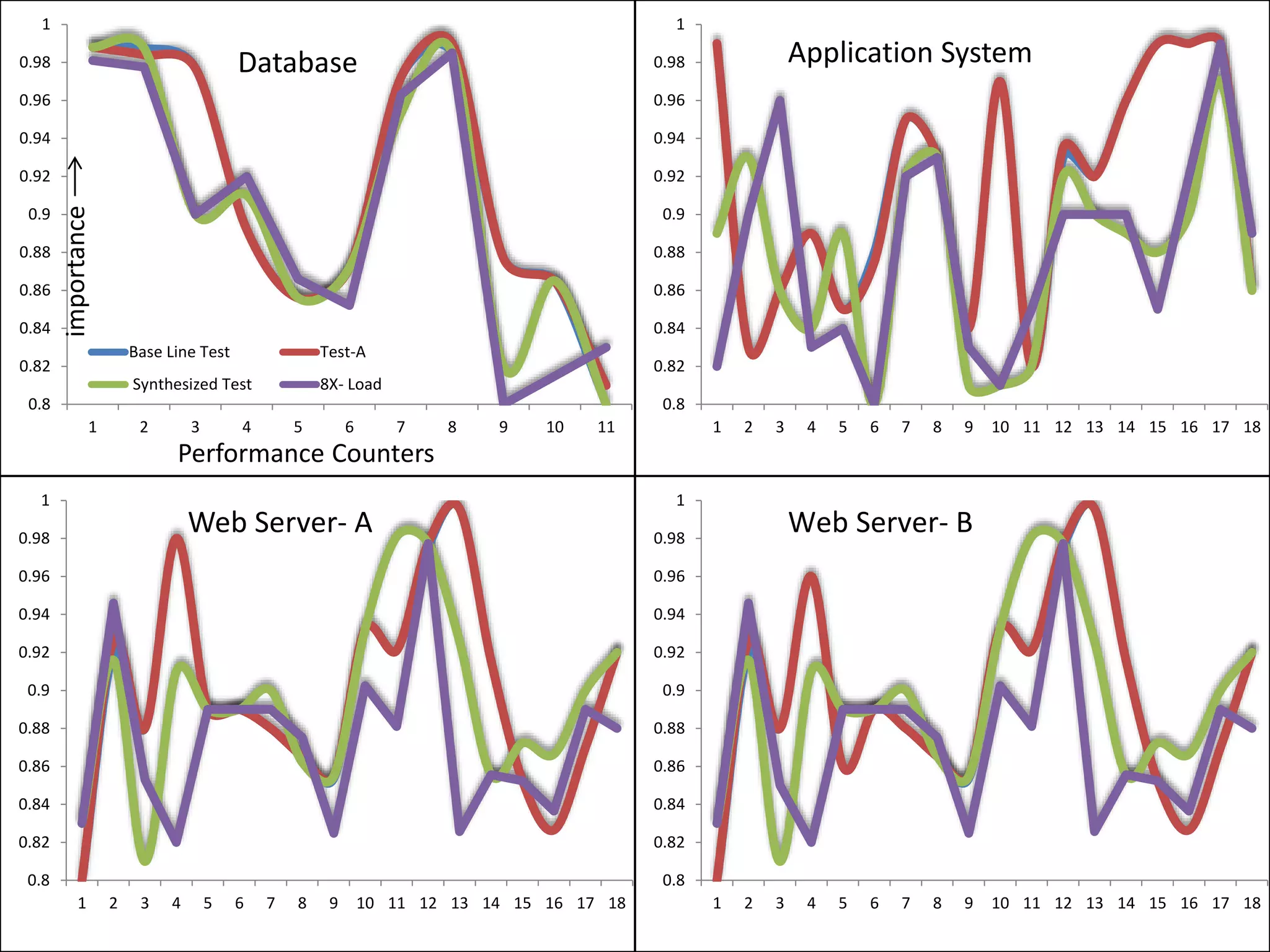

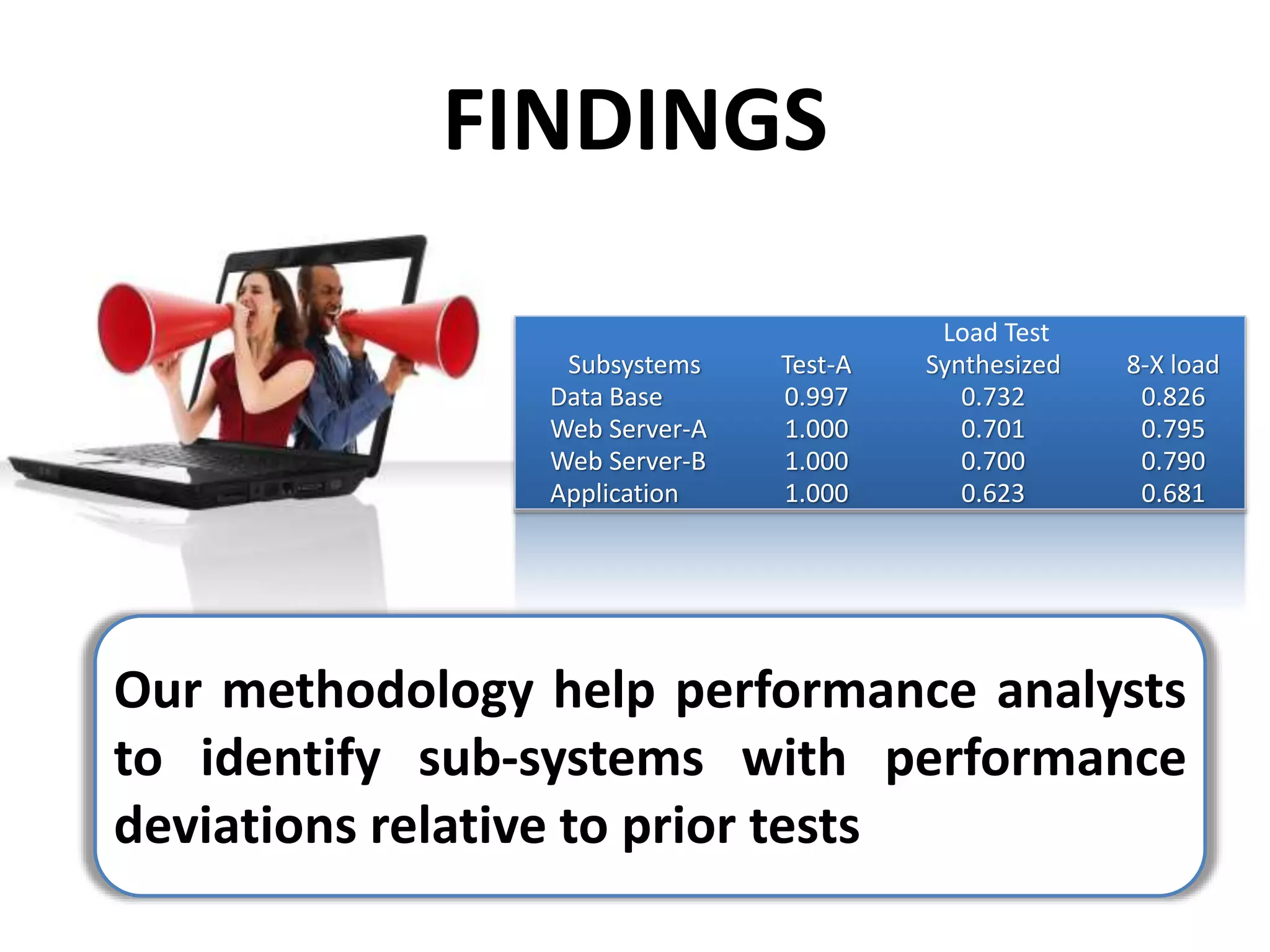

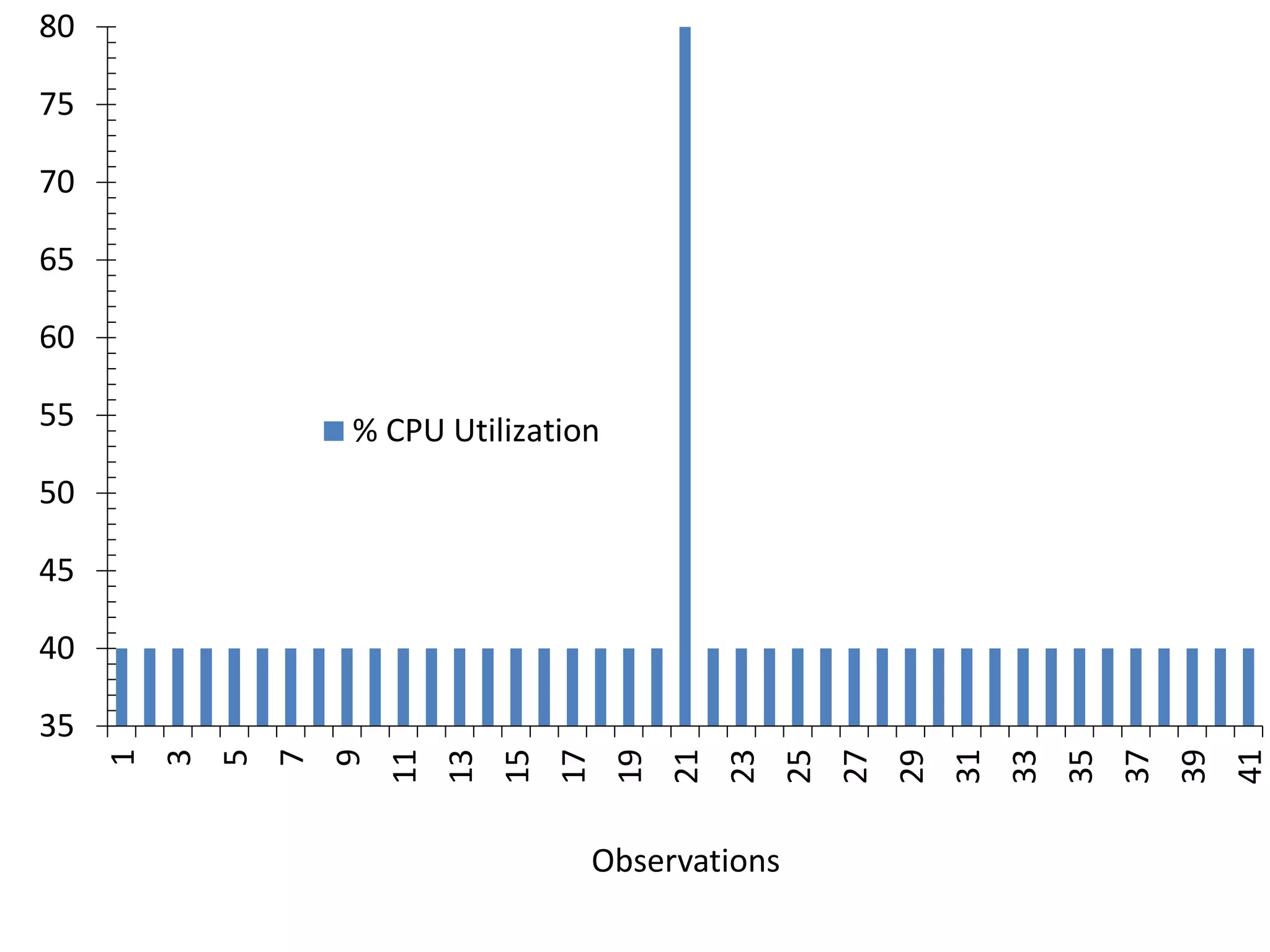

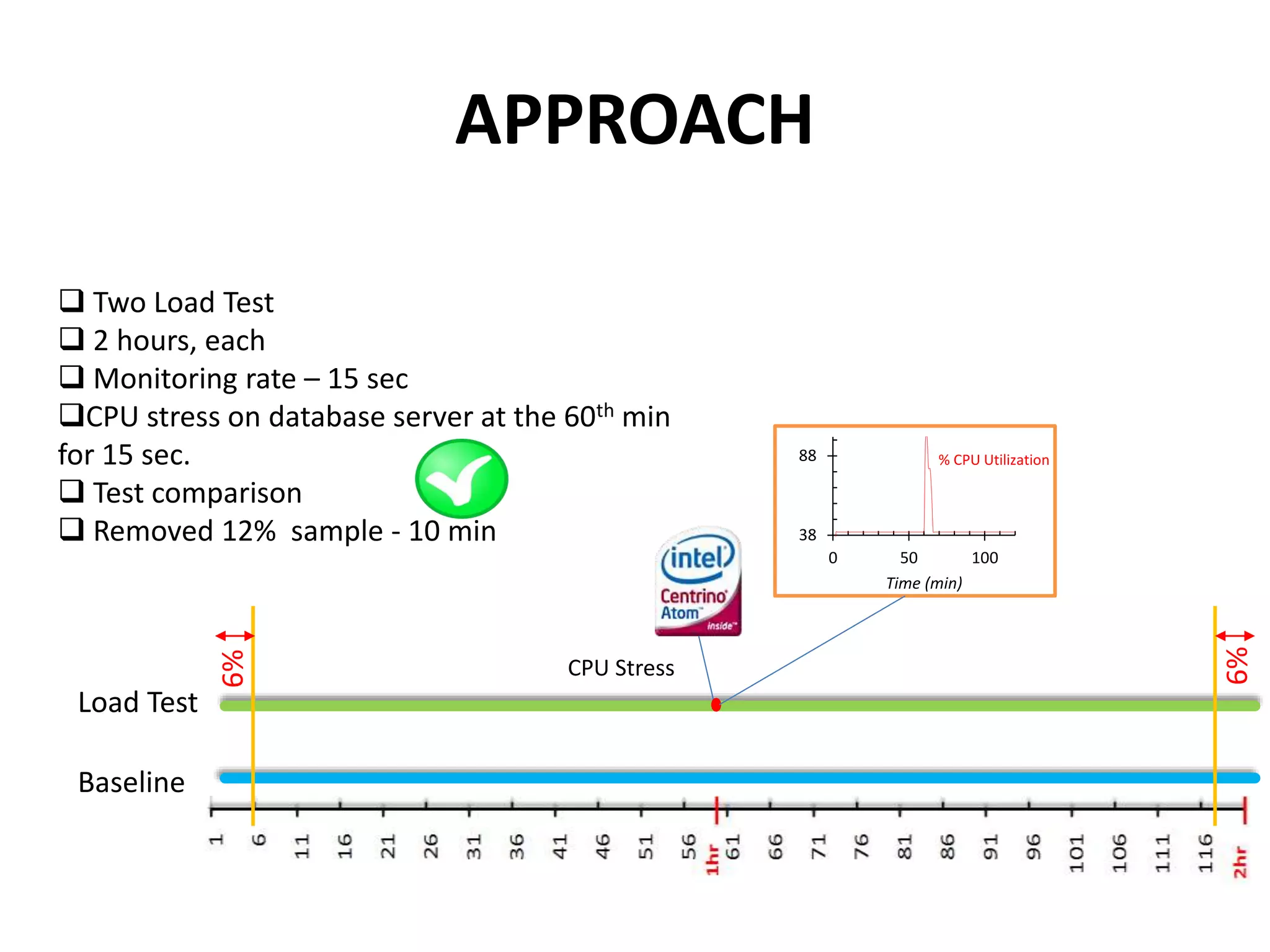

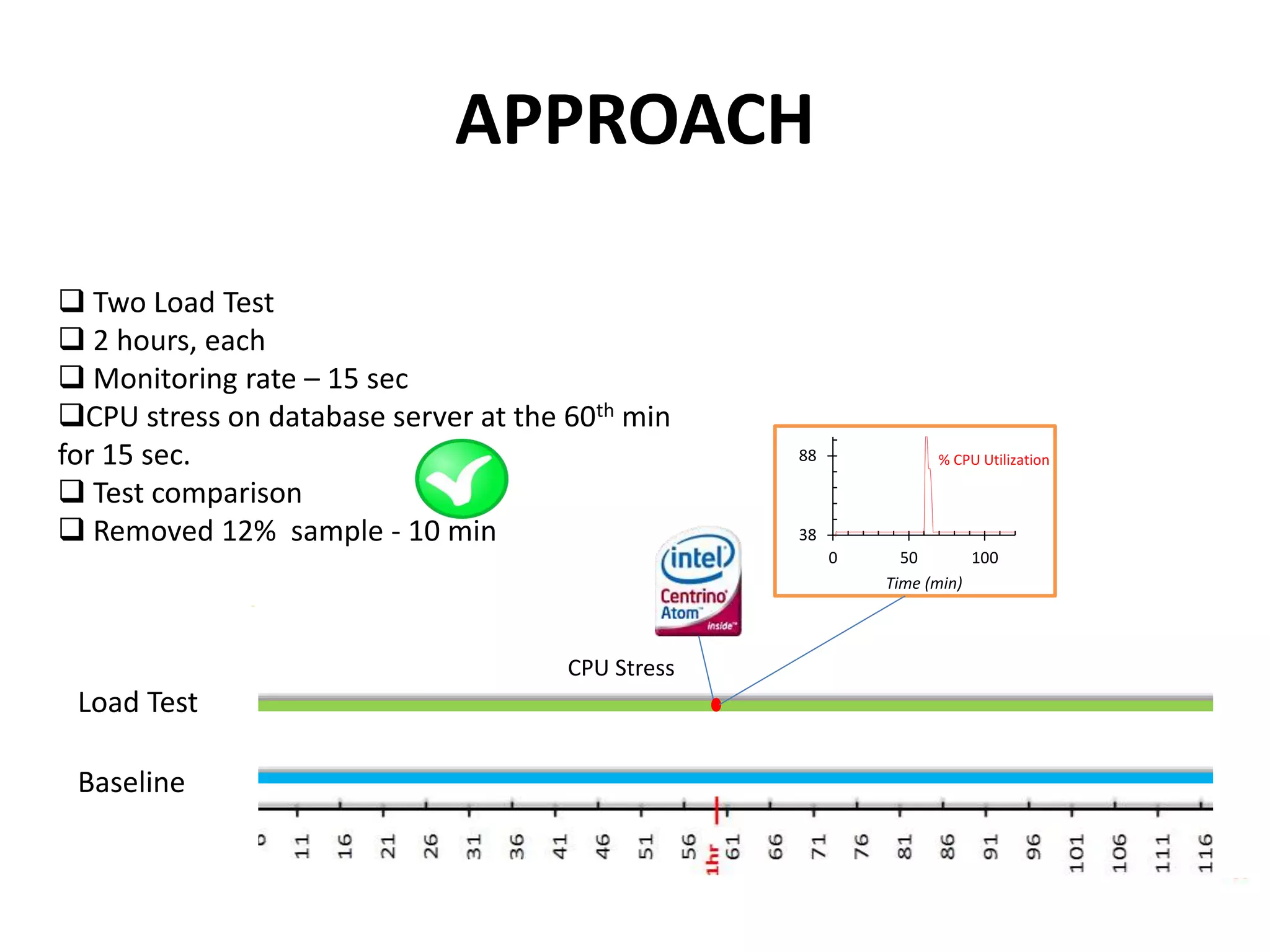

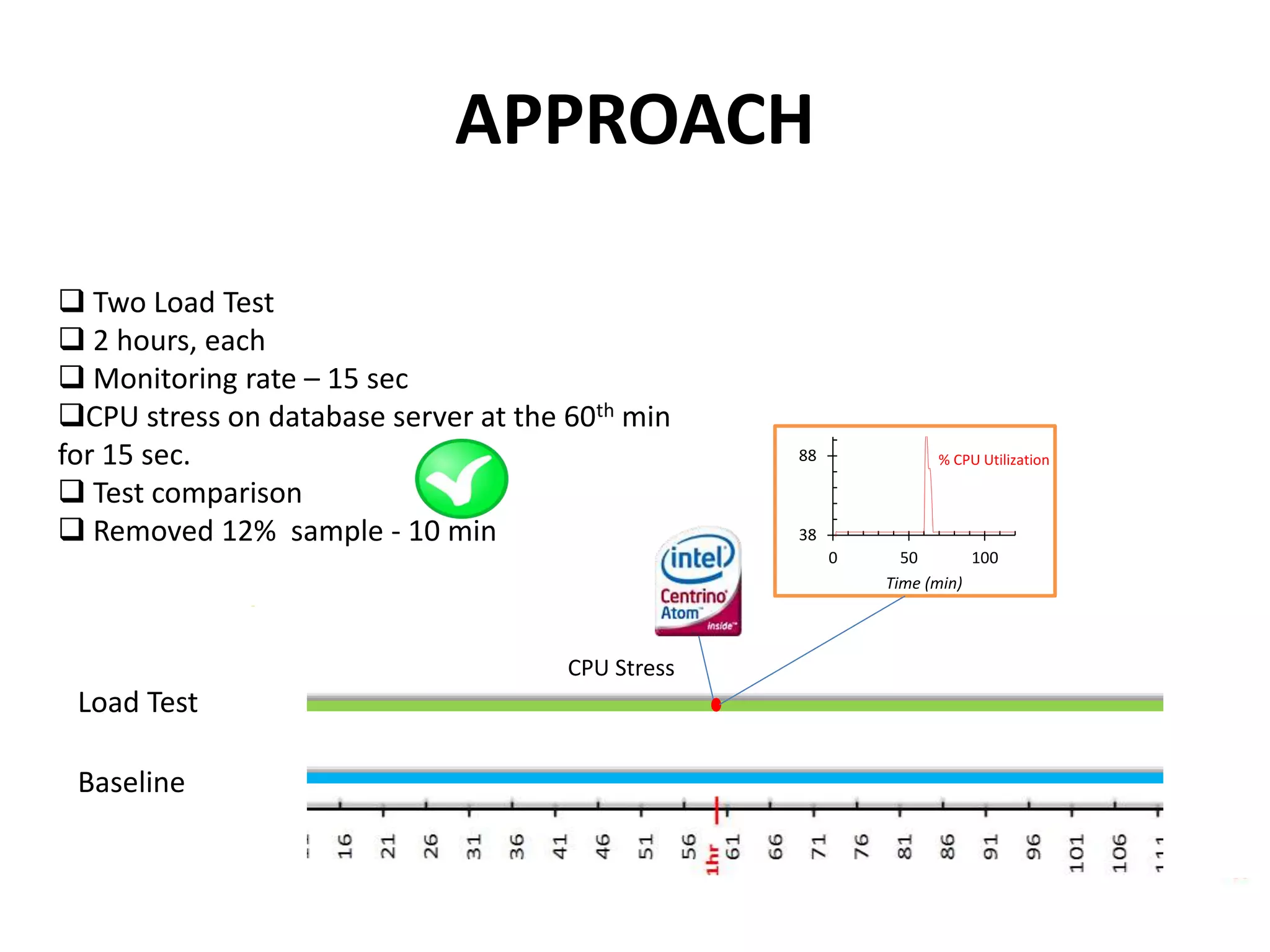

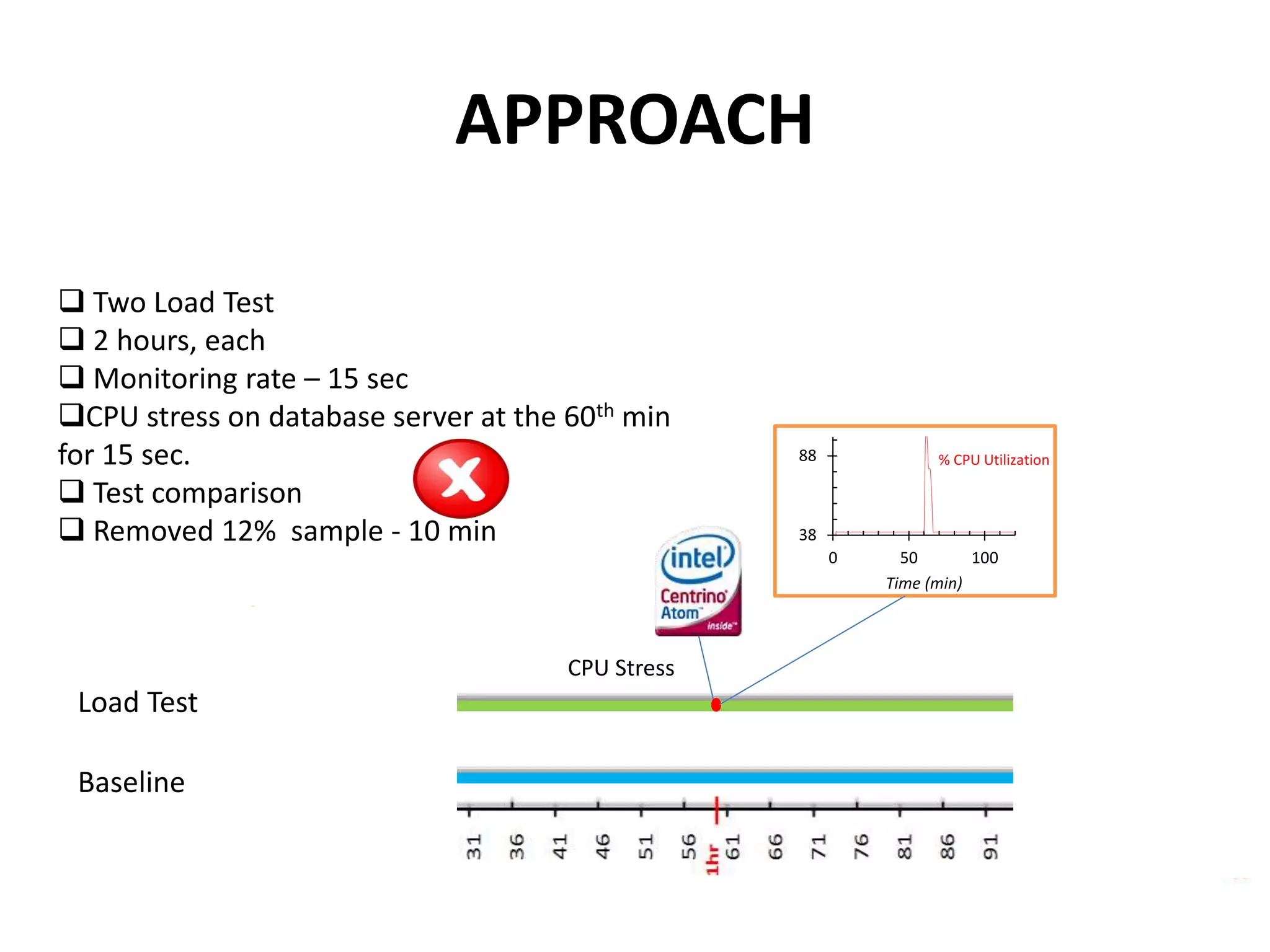

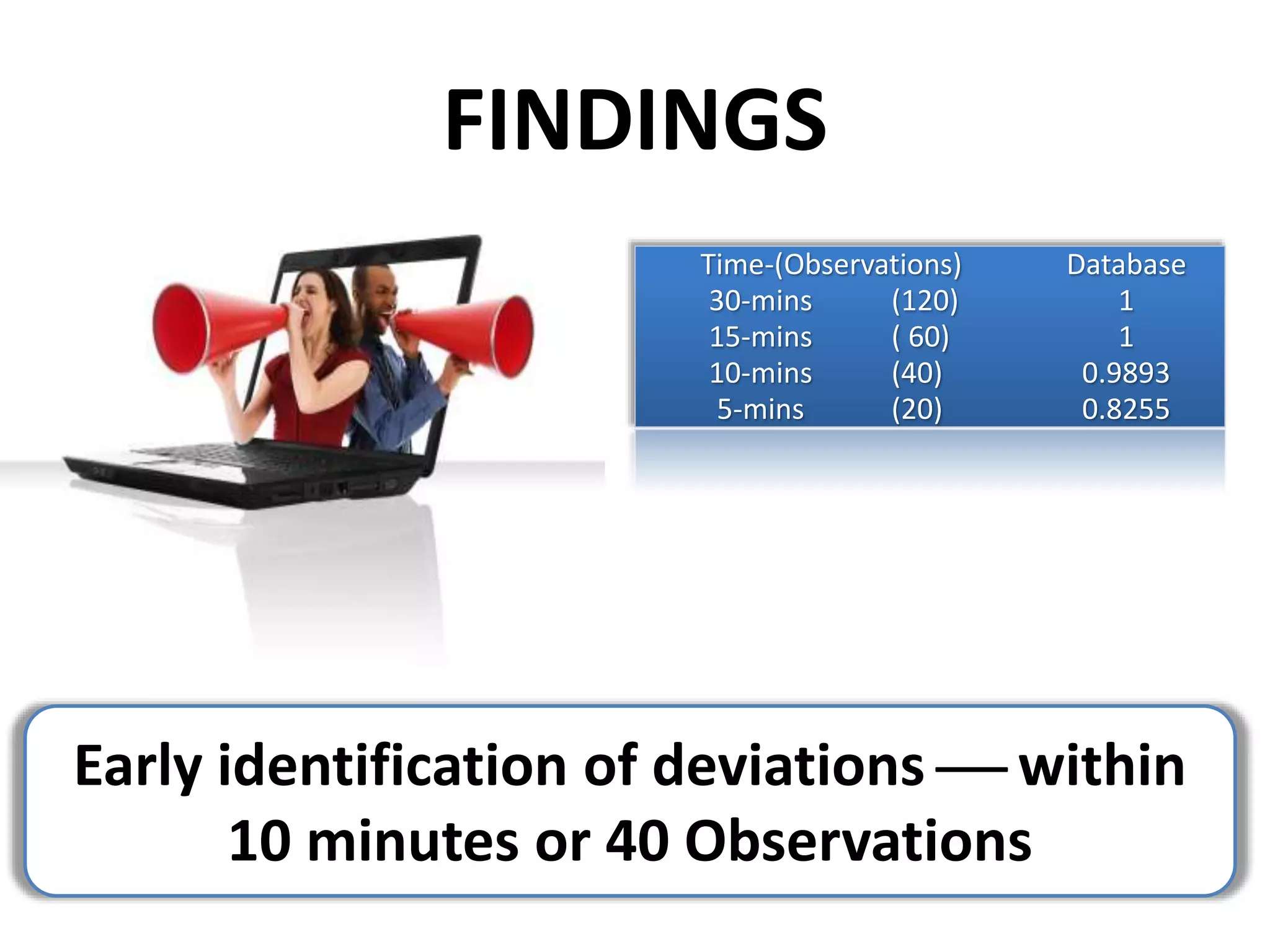

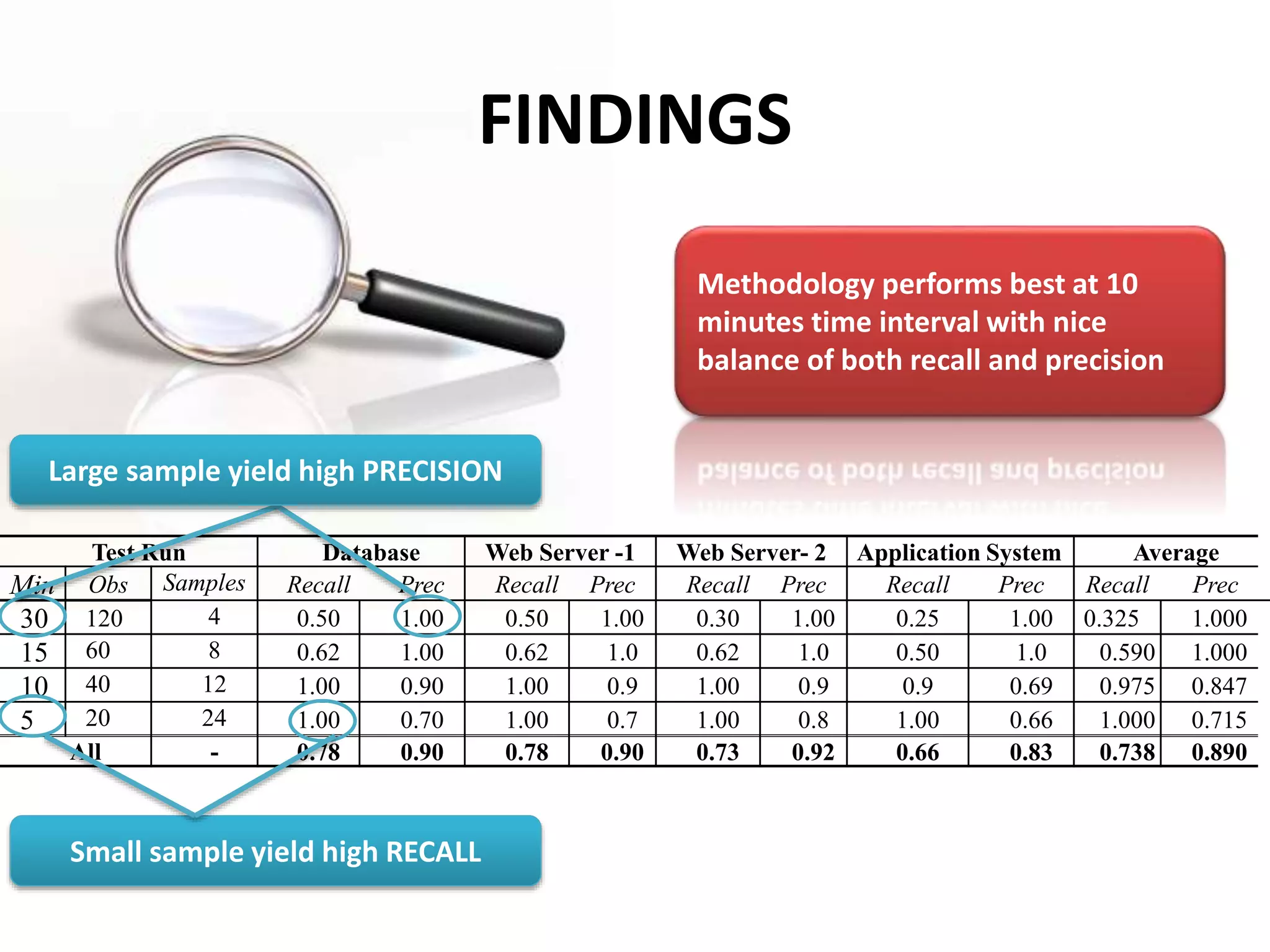

The document presents a methodology for automatically comparing the performance of subsystems in a large enterprise system under load testing. The methodology has four steps: collecting performance counter data during load tests, normalizing and reducing that data, crafting a performance signature for each subsystem, and measuring how far each signature deviates from a baseline. In the reported evaluation, it accurately identified the subsystems whose performance deviated from the baseline. Deviations could be detected within 10 minutes, which allows a failing test to be stopped early. The best balance of recall and precision was obtained at a 10-minute sampling interval.
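
The summary does not say how the normalization, reduction, signature, or deviation steps are computed, so the following is only a minimal sketch of the overall pipeline under stated assumptions. It assumes z-score normalization against the baseline run, reduction by dropping counters that never vary in the baseline, a signature made of per-counter mean z-scores, and a deviation score equal to the mean absolute signature value. All function names, the data layout, and the threshold of 2.0 are hypothetical and are not taken from the document.

```python
from statistics import mean, pstdev


def baseline_stats(baseline):
    """Return {counter: (mean, std)} for one subsystem's baseline run.

    Reduction step (an assumption): counters that are constant in the
    baseline carry no usable scale, so they are dropped here.
    """
    stats = {}
    for name, samples in baseline.items():
        sigma = pstdev(samples)
        if sigma > 0:
            stats[name] = (mean(samples), sigma)
    return stats


def signature(run, stats):
    """Signature (an assumption): mean z-score of each retained counter,
    normalized with the baseline's mean and standard deviation."""
    return {
        name: mean([(x - mu) / sigma for x in run[name]])
        for name, (mu, sigma) in stats.items()
    }


def deviation(run, stats):
    """Deviation score (an assumption): mean absolute signature value."""
    sig = signature(run, stats)
    return mean([abs(v) for v in sig.values()])


def flag_deviating(baseline_by_subsystem, target_by_subsystem, threshold=2.0):
    """List the subsystems whose target run deviates from the baseline.

    `threshold` is a hypothetical cut-off, not a value from the document.
    """
    flagged = []
    for subsystem, baseline in baseline_by_subsystem.items():
        stats = baseline_stats(baseline)
        if not stats:
            continue  # every counter was constant; nothing to compare
        if deviation(target_by_subsystem[subsystem], stats) > threshold:
            flagged.append(subsystem)
    return flagged
```

In this sketch each run is a dictionary mapping a subsystem name to its counters, and each counter to a list of samples taken at a fixed interval. Calling `flag_deviating` on progressively longer prefixes of the target samples would be one way to approximate the early-stopping behavior described above, although the document's actual procedure may differ.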