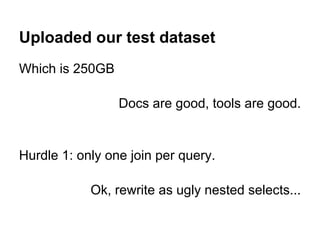

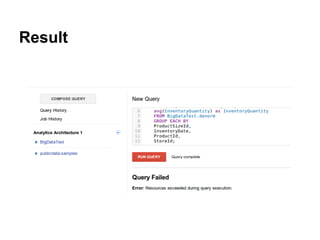

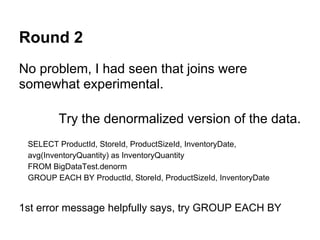

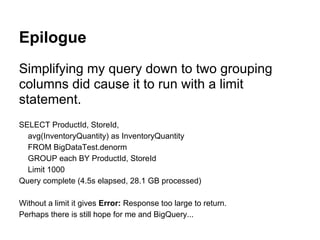

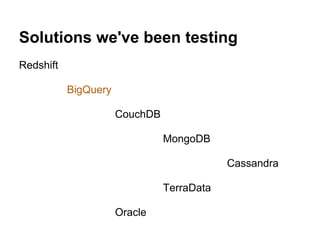

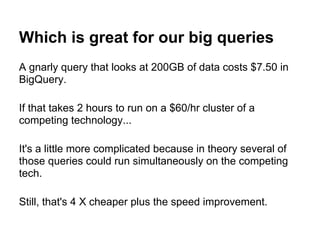

The presentation describes Gabe Hamilton's evaluation of BigQuery as a replacement for SQL Server for reporting and business intelligence, with a focus on its low cost and high speed. BigQuery first impressed him with cheap, fast queries. Hamilton then ran into limitations on joins and on grouping within queries, and these made it harder to use. The overall result was mixed: BigQuery showed real promise, but moving to it from SQL Server came with significant hurdles.

![Example: Github data from past year](https://image.slidesharecdn.com/howbigquerybrokemyheart-130522164709-phpapp02/85/How-BigQuery-broke-my-heart-9-320.jpg)

Example: Github data from past year (3.5 GB table)

`SELECT type, count(*) as num FROM [publicdata:samples.github_timeline] group by type order by num desc;`

Query complete (1.1s elapsed, 75.0 MB processed)

| Event Type | num |
|---|---:|
| PushEvent | 2,686,723 |
| CreateEvent | 964,830 |
| WatchEvent | 581,029 |
| IssueCommentEvent | 507,724 |
| GistEvent | 366,643 |
| IssuesEvent | 305,479 |
| ForkEvent | 180,712 |
| PullRequestEvent | 173,204 |
| FollowEvent | 156,427 |
| GollumEvent | 104,808 |

Cost $0.0026, or 5 for a penny
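The query above scanned only 75.0 MB of a 3.5 GB table. BigQuery stores data by column and bills on-demand queries by the bytes read from the columns a query references, so a query that touches only `type` is charged for that column alone. The Python sketch below makes the arithmetic concrete. It assumes a hypothetical rate of $0.035 per GB scanned (`PRICE_PER_GB`). That rate is not stated on the slide, although with it the 75.0 MB scan comes to the slide's $0.0026. The "whole table" figure is a hypothetical comparison for a query that reads every column.

```python
# Minimal sketch: estimate an on-demand query's cost from bytes processed.
# ASSUMPTION: PRICE_PER_GB is a hypothetical parameter, not taken from the
# slide; BigQuery pricing has changed over time.

PRICE_PER_GB = 0.035  # hypothetical rate, USD per GB scanned
BYTES_PER_MB = 1024 ** 2
BYTES_PER_GB = 1024 ** 3


def query_cost(bytes_processed: int, price_per_gb: float = PRICE_PER_GB) -> float:
    """Return the cost in USD of scanning `bytes_processed` bytes."""
    return bytes_processed / BYTES_PER_GB * price_per_gb


# The slide's query read 75.0 MB (just the `type` column) of a 3.5 GB table.
scanned = int(75.0 * BYTES_PER_MB)
# Hypothetical comparison: a query that reads every column of the table.
full_table = int(3.5 * BYTES_PER_GB)

print(f"type column only: ${query_cost(scanned):.4f}")
print(f"whole table:      ${query_cost(full_table):.4f}")
```

Under this assumed rate, the single-column query costs about $0.0026 and a full-table scan about $0.1225. This is why selecting only the columns you need matters for BigQuery cost.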