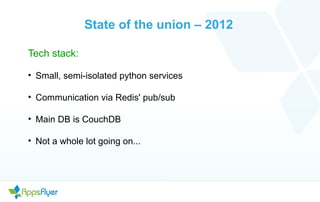

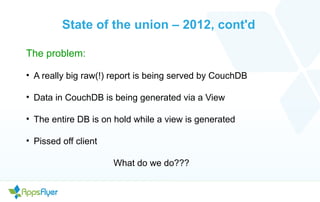

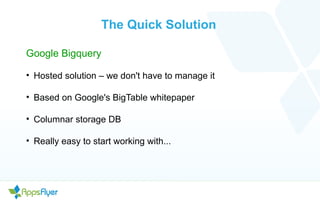

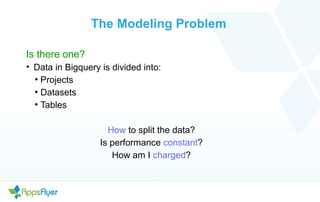

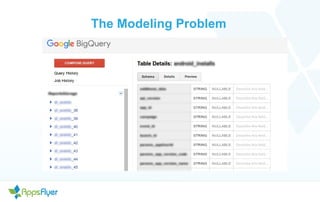

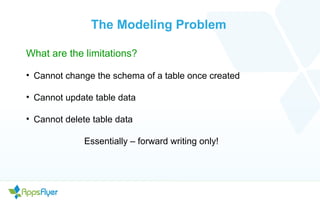

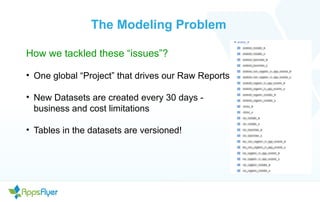

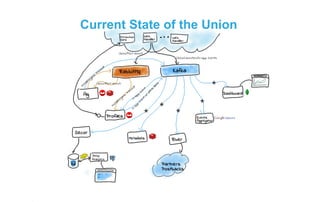

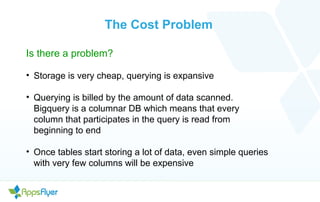

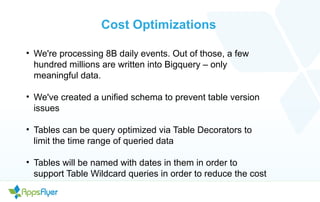

- The document discusses the past, present, and future of using BigQuery for mobile campaign analytics. In the past, the authors used small Python services and CouchDB, which had performance issues. Moving to BigQuery improved performance but introduced some limitations and cost challenges. Optimizations such as unified schemas and table decorators addressed these issues. Going forward, the authors are waiting for custom partitioning functions in BigQuery to further improve performance and reduce costs.