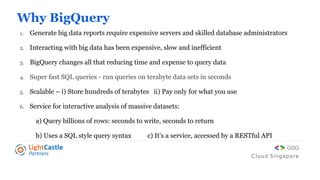

Google BigQuery offers a fast, scalable way to query massive datasets, at lower cost and with less specialized expertise than self-managed analytics infrastructure typically requires. Users can run standard SQL queries over terabytes of data in seconds, which can reportedly cut analysis time by up to 90%. The platform accepts a variety of data formats, and following its recommended best practices helps improve performance and reduce query costs.
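To make the point about query costs concrete, here is a minimal sketch of how an on-demand query charge scales with the amount of data scanned. It assumes billing is proportional to bytes processed; the function name `estimate_query_cost`, the rate of 5.0 per TiB, and the scan sizes are all hypothetical placeholders, not figures from BigQuery's price list.

```python
TIB = 2 ** 40  # number of bytes in one tebibyte


def estimate_query_cost(bytes_processed: int, price_per_tib: float) -> float:
    """Estimate the charge for a query that scans `bytes_processed` bytes.

    Assumes cost is linear in bytes scanned (a simplification).
    """
    if bytes_processed < 0 or price_per_tib < 0:
        raise ValueError("bytes_processed and price_per_tib must be non-negative")
    return bytes_processed / TIB * price_per_tib


# Hypothetical rate and scan sizes, for illustration only.
rate = 5.0                 # assumed price per TiB scanned
full_scan = 5 * TIB        # e.g. a query that reads every column of a table
narrow_scan = TIB // 2     # e.g. the same query limited to the columns it needs

print(estimate_query_cost(full_scan, rate))    # 25.0
print(estimate_query_cost(narrow_scan, rate))  # 2.5
```

Under these assumptions, reading a tenth of the data costs a tenth as much, which is why practices that limit the bytes a query scans tend to lower the bill.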