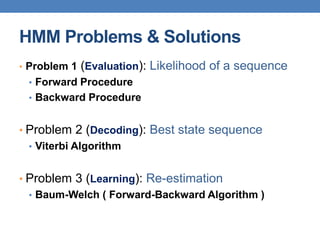

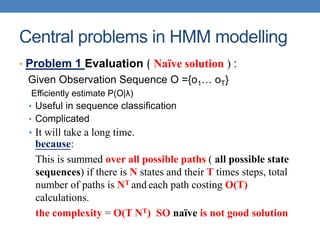

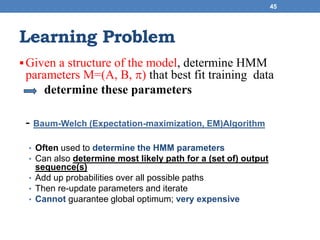

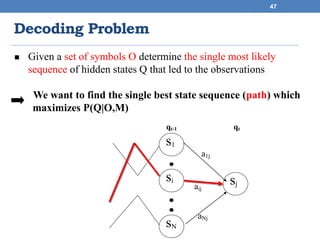

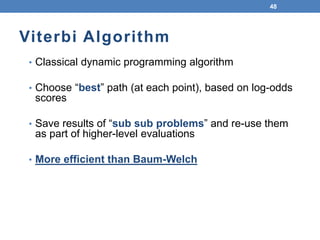

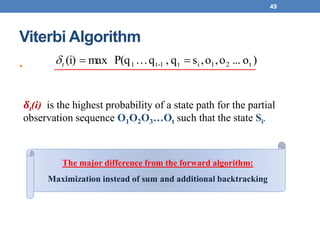

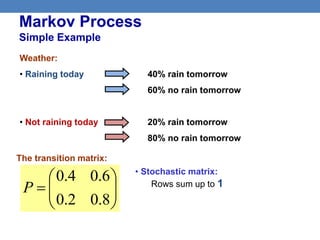

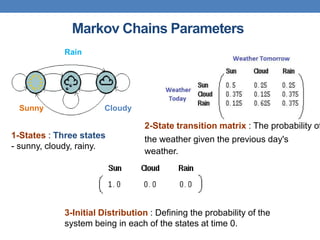

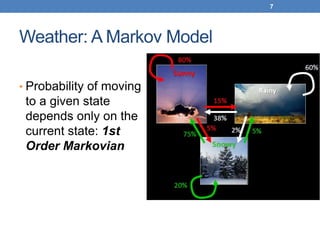

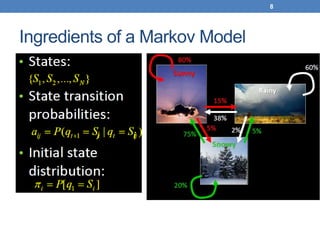

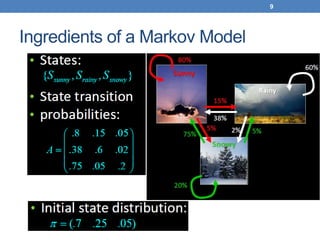

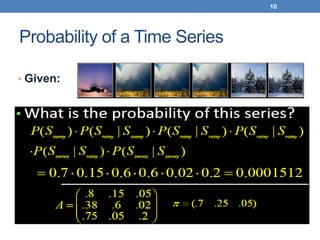

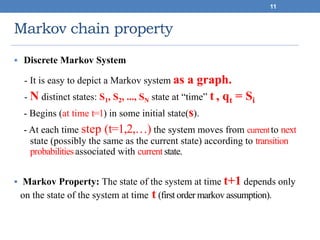

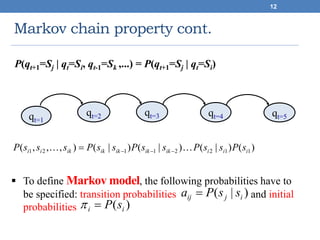

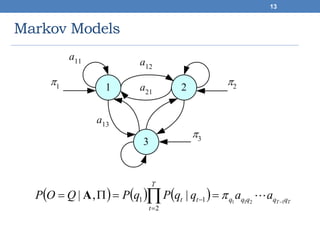

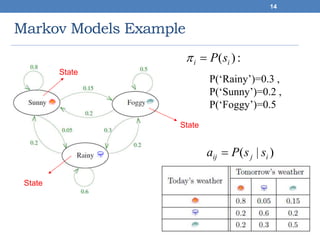

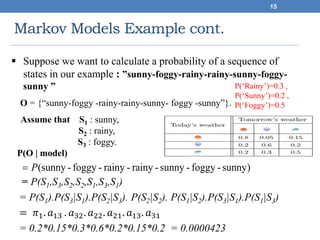

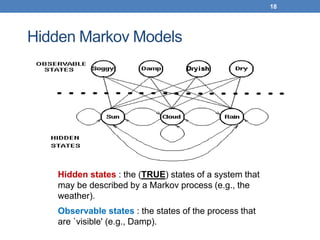

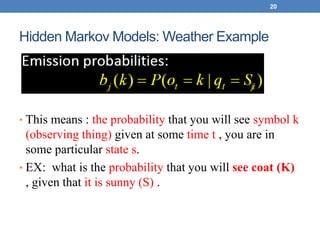

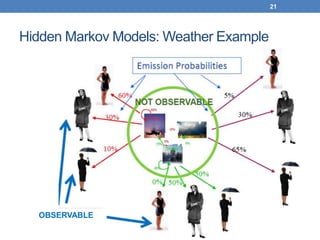

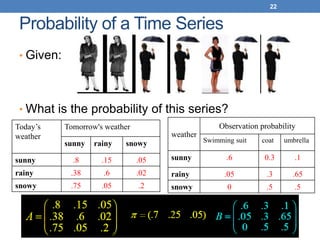

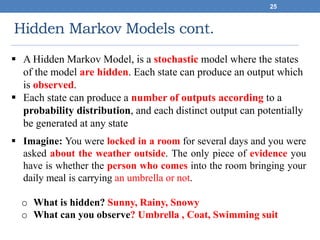

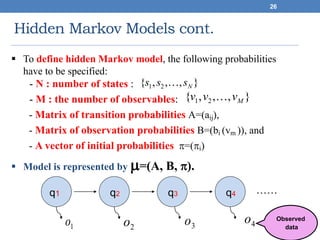

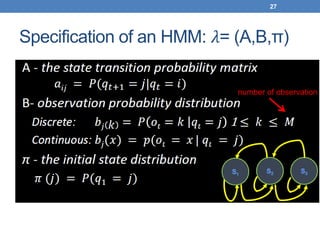

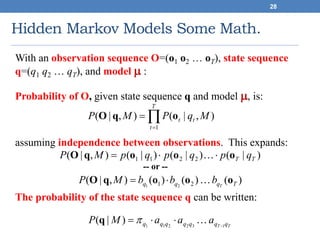

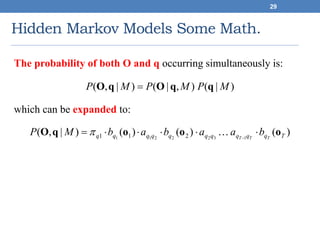

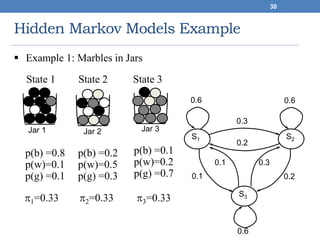

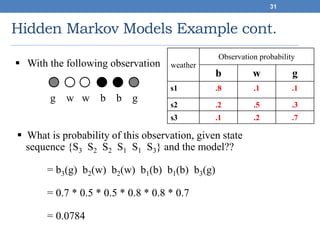

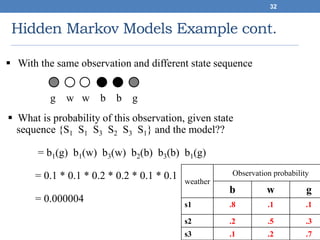

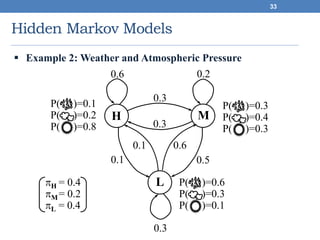

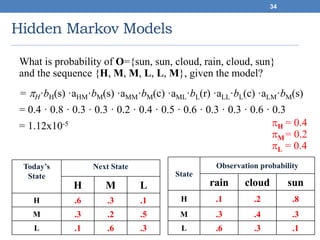

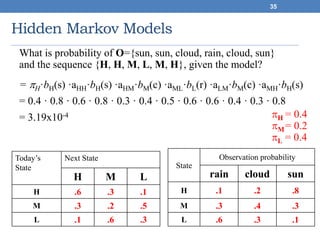

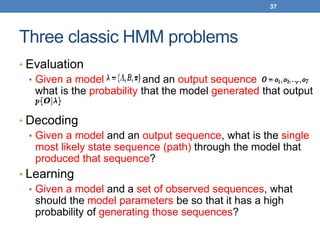

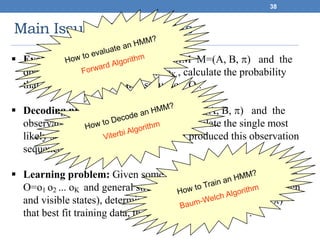

This document discusses hidden Markov models (HMMs). It begins with Markov chains and Markov models, then defines HMMs: models whose states are hidden and are seen only through the observable outputs they produce. It explains the three components of an HMM: the transition probabilities, the observation (emission) probabilities, and the initial state probabilities. Examples based on weather and on jars of marbles show how to calculate probabilities with these components. Finally, it identifies the three main problems in using HMMs: evaluation, decoding, and learning. Evaluation computes the probability of an observation sequence given a model. Decoding finds the most likely sequence of hidden states that produced an observation sequence. Learning estimates the model parameters that best fit the training data, that is, the parameters that maximize the probability of the observed sequences.
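The evaluation problem can be made concrete with a small sketch. The two-state weather model below (hidden states `Rainy`/`Sunny`, observations `walk`/`shop`/`clean`) and all of its numbers are hypothetical and were chosen only for illustration; they are not taken from the slides. `pi`, `A` and `B` hold the initial, transition and observation probabilities, so together they play the role of the model λ = (A, B, π). The forward algorithm computes P(O | λ) in O(N²T) time, and an exponential brute-force sum over every state sequence is included only as a cross-check.

```python
from itertools import product

# Hypothetical two-state weather HMM; the numbers are illustrative, not from the slides.
STATES = ["Rainy", "Sunny"]
SYMBOLS = ["walk", "shop", "clean"]

pi = [0.6, 0.4]          # initial probabilities: pi[i] = P(X_1 = i)
A = [[0.7, 0.3],         # transition probabilities: A[i][j] = P(X_{t+1} = j | X_t = i)
     [0.4, 0.6]]
B = [[0.1, 0.4, 0.5],    # observation probabilities: B[i][k] = P(O_t = k | X_t = i)
     [0.6, 0.3, 0.1]]


def forward(obs, pi, A, B):
    """Evaluation: return P(O | lambda) using the forward algorithm."""
    n = len(pi)
    # alpha[i] = P(O_1..O_t, X_t = i | lambda), starting at t = 1
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    for o in obs[1:]:
        # Sum over every predecessor state i, then emit the next symbol from state j
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][o] for j in range(n)]
    return sum(alpha)


def brute_force(obs, pi, A, B):
    """Sum P(O, X | lambda) over every state sequence X (exponential; for checking only)."""
    n = len(pi)
    total = 0.0
    for path in product(range(n), repeat=len(obs)):
        p = pi[path[0]] * B[path[0]][obs[0]]
        for t in range(1, len(obs)):
            p *= A[path[t - 1]][path[t]] * B[path[t]][obs[t]]
        total += p
    return total


if __name__ == "__main__":
    O = [SYMBOLS.index(s) for s in ["walk", "shop", "clean"]]
    print(forward(O, pi, A, B))
    print(brute_force(O, pi, A, B))
```

Both calls should print the same value, approximately 0.033612 for these illustrative numbers. The forward recursion reuses the partial sums `alpha` at each step instead of enumerating all N^T state sequences, which is why it scales to long observation sequences where the brute-force check does not.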

![The three main problems on HMMs](https://image.slidesharecdn.com/08machinelearninghmm1edited-190407053613/85/Hidden-Markov-Model-39-320.jpg)

The three main problems on HMMs are:

1. Evaluation: GIVEN an HMM $\lambda$ and an observation sequence $O$, FIND $P(O \mid \lambda)$.
2. Decoding: GIVEN an HMM $\lambda$ and an observation sequence $O$, FIND the state sequence $X$ that maximizes $P(X \mid O, \lambda)$.
3. Learning: GIVEN an observation sequence $O$, FIND a model $\lambda = (A, B, \pi)$, with parameters $\pi$, $A$ and $B$, that maximizes $P(O \mid \lambda)$.
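For the decoding problem, the Viterbi algorithm is the standard dynamic-programming method. Because $P(O \mid \lambda)$ does not depend on $X$, maximizing $P(X \mid O, \lambda)$ is equivalent to maximizing the joint probability $P(X, O \mid \lambda)$, which is what Viterbi does. The sketch below assumes the same kind of hypothetical two-state weather model (illustrative numbers, not from the slides), works in log space to avoid numerical underflow on long sequences, and checks the result against an exhaustive search.

```python
import math
from itertools import product

# Hypothetical two-state weather HMM; the numbers are illustrative, not from the slides.
STATES = ["Rainy", "Sunny"]
SYMBOLS = ["walk", "shop", "clean"]
pi = [0.6, 0.4]                          # initial probabilities
A = [[0.7, 0.3], [0.4, 0.6]]             # A[i][j] = P(X_{t+1} = j | X_t = i)
B = [[0.1, 0.4, 0.5], [0.6, 0.3, 0.1]]   # B[i][k] = P(O_t = k | X_t = i)


def _log(p):
    """Log that maps probability 0 to -inf instead of raising."""
    return math.log(p) if p > 0 else -math.inf


def viterbi(obs, pi, A, B):
    """Decoding: return (best state path, log P(path, O | lambda))."""
    n = len(pi)
    # delta[j] = best log-probability of any state path ending in state j at time t
    delta = [_log(pi[j]) + _log(B[j][obs[0]]) for j in range(n)]
    backptrs = []
    for o in obs[1:]:
        # For each state j, remember the best predecessor state
        prev = [max(range(n), key=lambda i: delta[i] + _log(A[i][j])) for j in range(n)]
        delta = [delta[prev[j]] + _log(A[prev[j]][j]) + _log(B[j][o]) for j in range(n)]
        backptrs.append(prev)
    # Trace the back-pointers from the best final state
    last = max(range(n), key=lambda j: delta[j])
    path = [last]
    for prev in reversed(backptrs):
        path.append(prev[path[-1]])
    path.reverse()
    return path, delta[last]


def brute_force_decode(obs, pi, A, B):
    """Exhaustively find the most probable state path (exponential; for checking only)."""
    def joint(path):
        p = pi[path[0]] * B[path[0]][obs[0]]
        for t in range(1, len(obs)):
            p *= A[path[t - 1]][path[t]] * B[path[t]][obs[t]]
        return p
    best = max(product(range(len(pi)), repeat=len(obs)), key=joint)
    return list(best), joint(best)


if __name__ == "__main__":
    O = [SYMBOLS.index(s) for s in ["walk", "shop", "clean"]]
    path, logp = viterbi(O, pi, A, B)
    print([STATES[i] for i in path], math.exp(logp))
```

For O = (walk, shop, clean) and these numbers, the decoded path is Sunny, Rainy, Rainy with joint probability 0.01344. Note that this is the single best path; it is generally smaller than the evaluation result P(O | λ), which sums over all paths.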