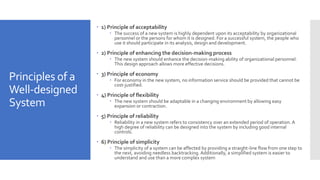

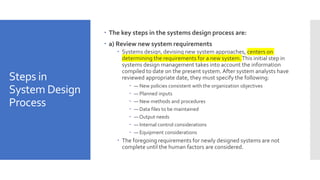

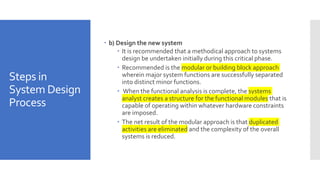

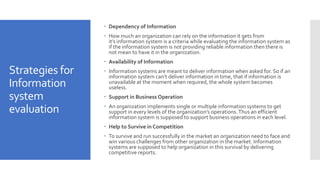

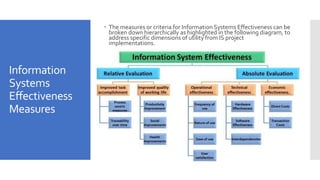

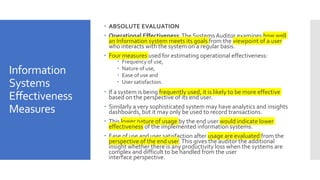

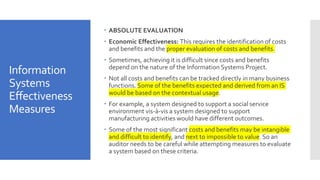

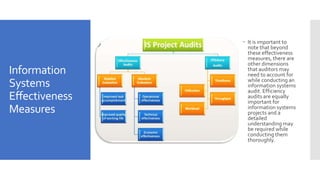

The document discusses the design and evaluation of information systems and services in health informatics, emphasizing principles, strategies for quality improvement, and evidence-based healthcare protocols. It outlines core system design principles, the key steps in the design process, and criteria for evaluating the effectiveness of information systems. It also highlights the importance of user acceptance, improved decision-making, and cost-effectiveness in the successful implementation of such systems.