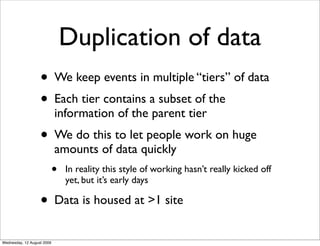

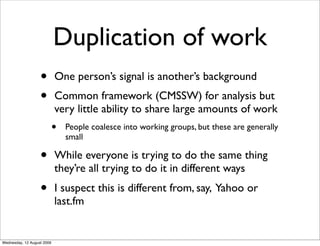

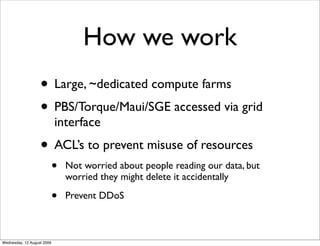

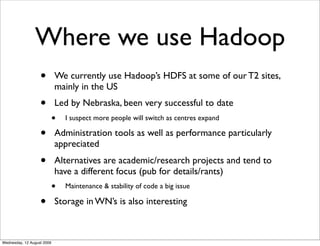

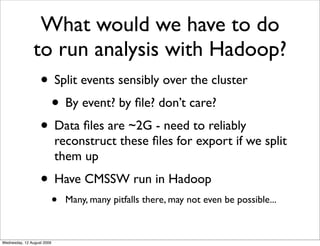

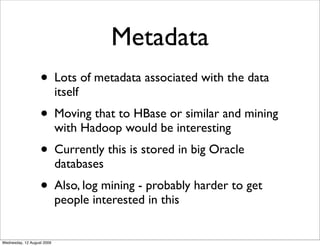

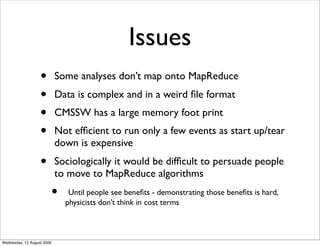

The document discusses the significant data handling requirements of the CMS experiment, which produces and processes vast amounts of data from proton collisions for research purposes. It outlines the challenges of duplicating data and work, along with the current use of Hadoop's HDFS for data management at various computing centers. Issues with running analyses on complex data using MapReduce algorithms are highlighted, emphasizing the need for more effective collaboration and methodologies within the physics community.