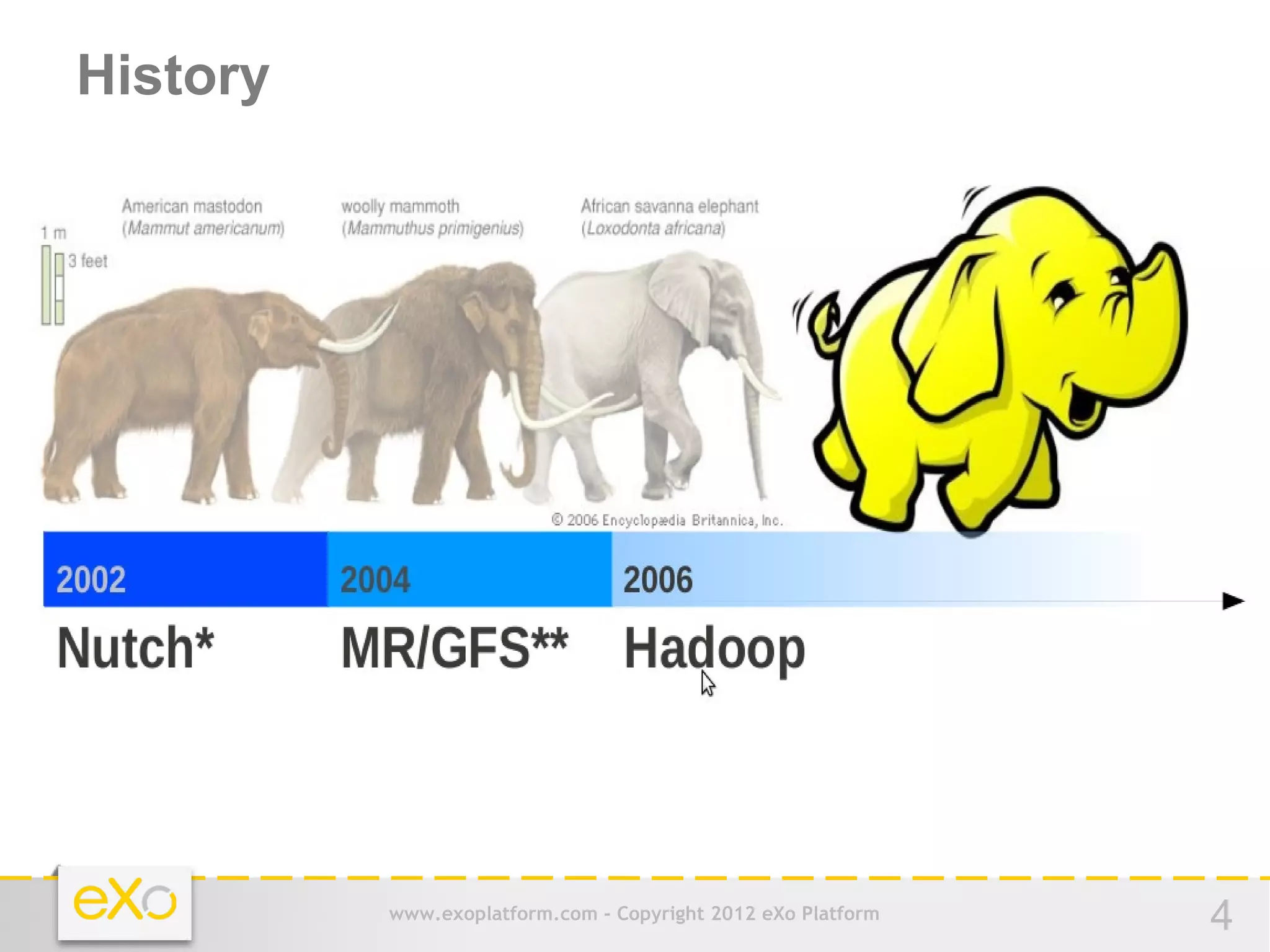

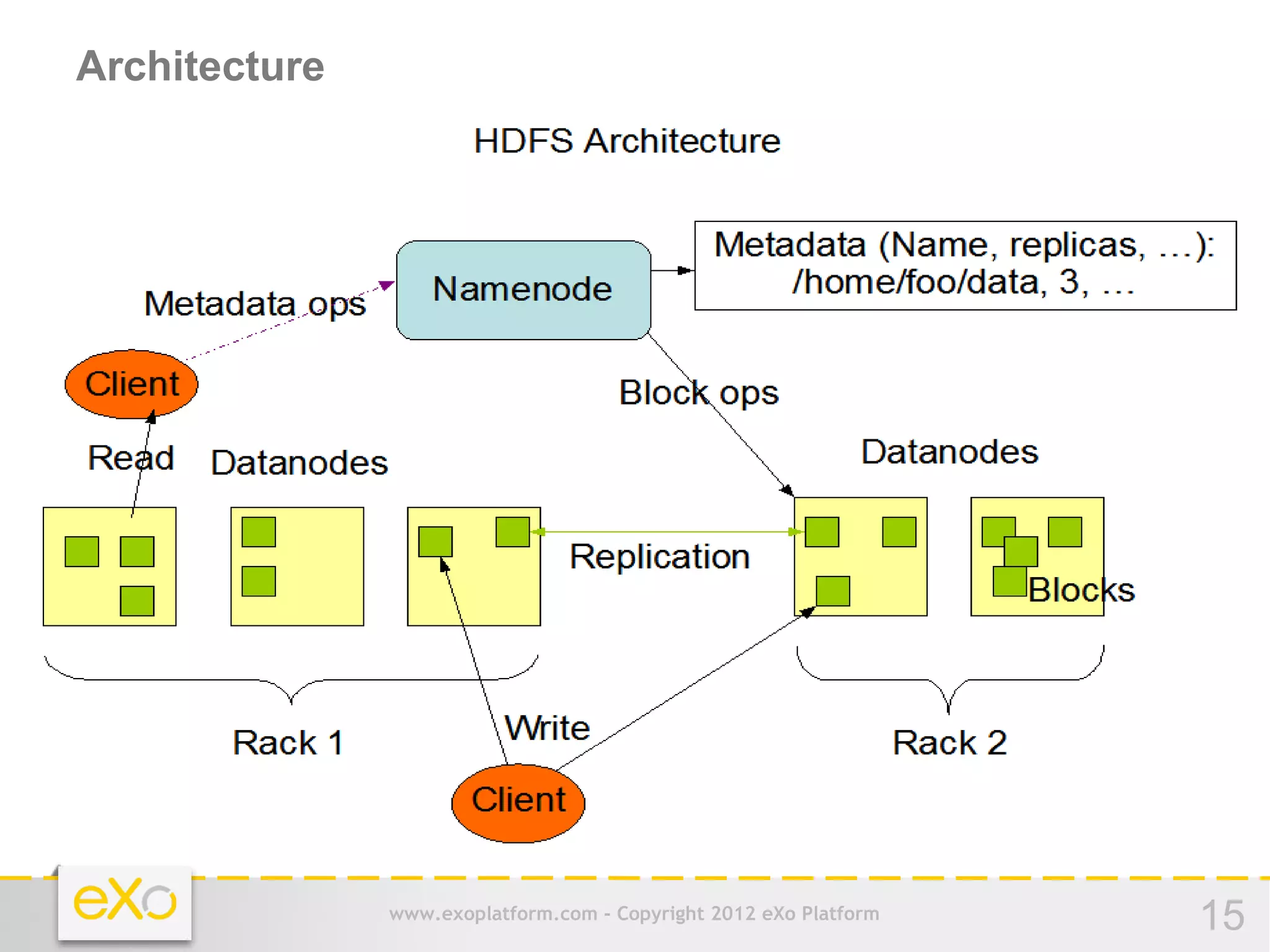

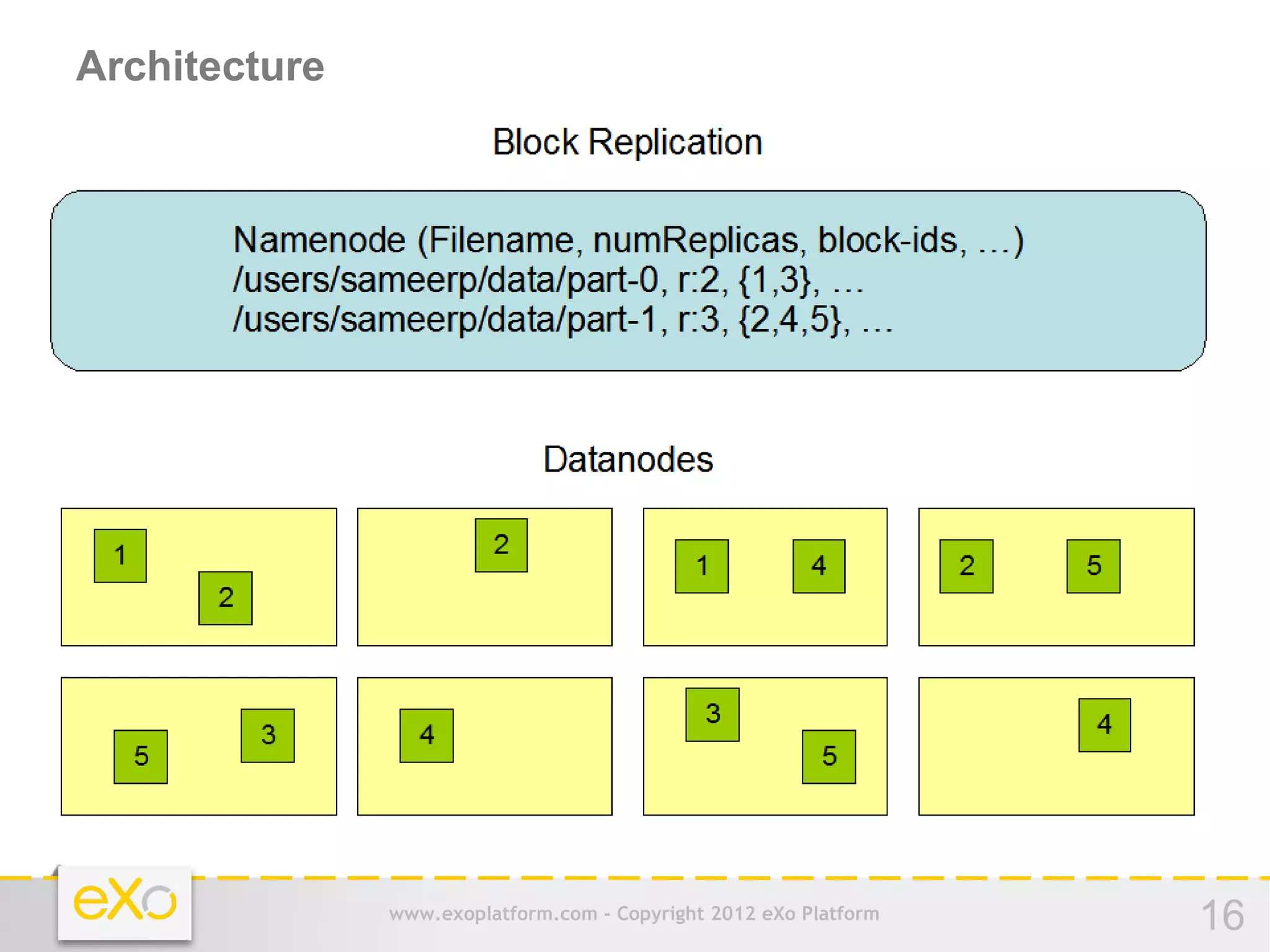

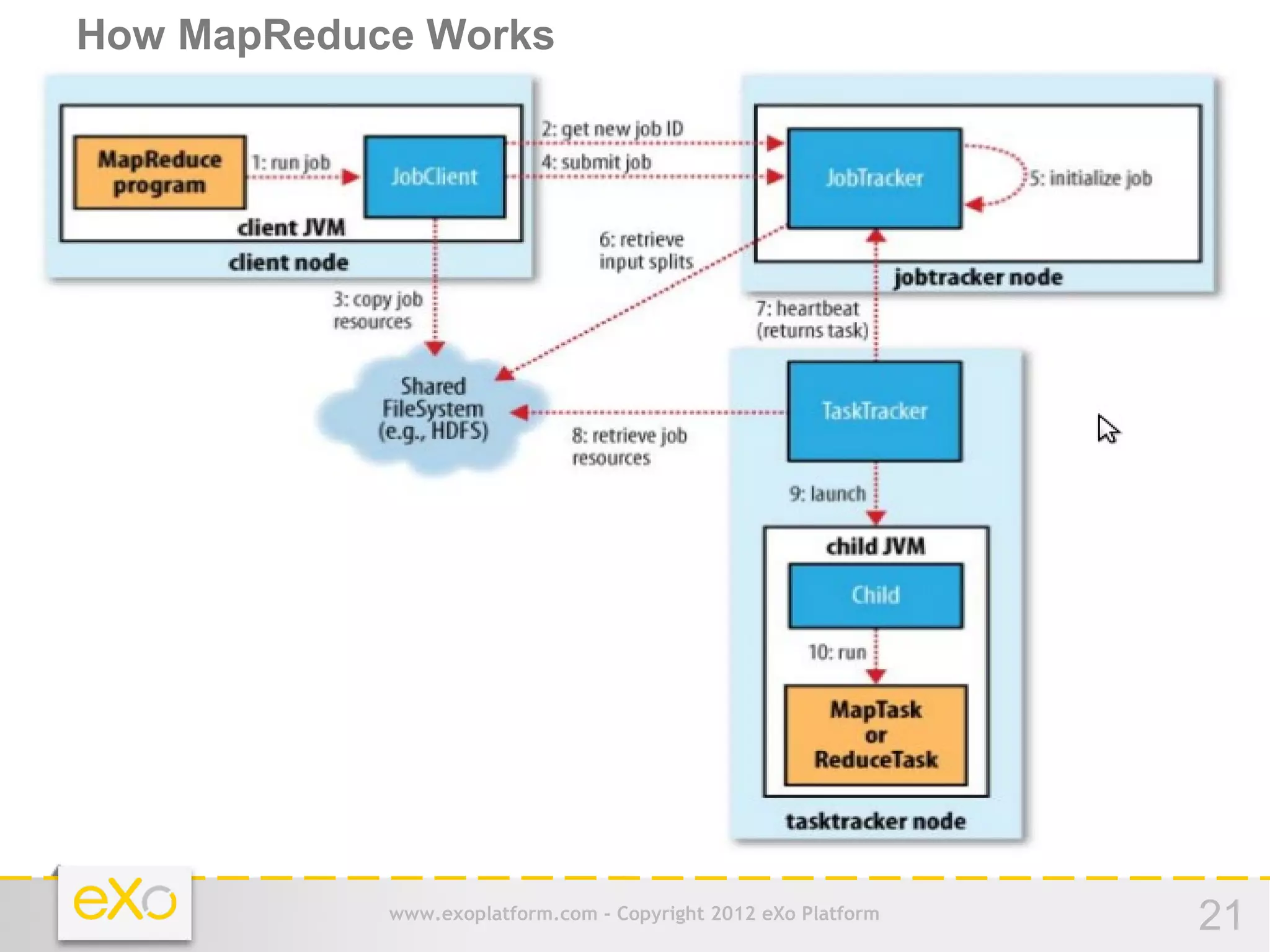

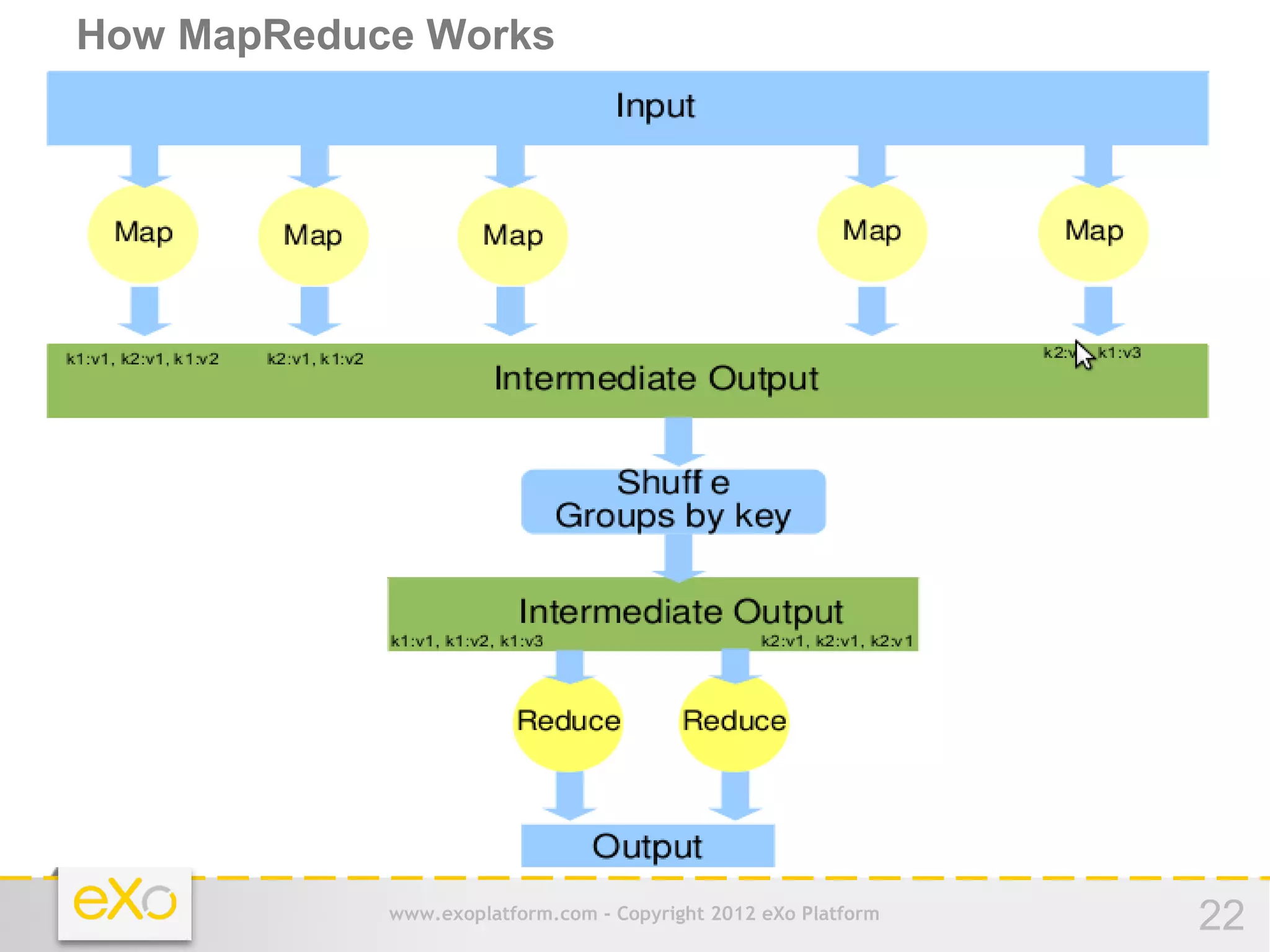

This document provides an agenda for a presentation on Hadoop. It begins with an introduction to Hadoop and its history. It then discusses data storage and analysis using Hadoop and what Hadoop is not suitable for. The remainder of the document outlines the Hadoop Distributed File System (HDFS), MapReduce framework, and concludes with a practice section involving a demo and discussion.