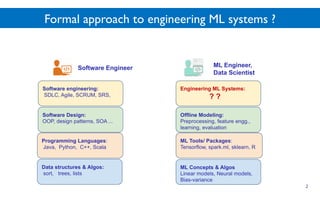

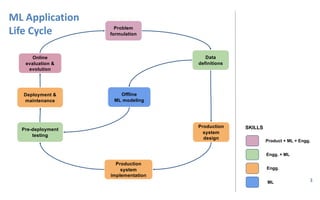

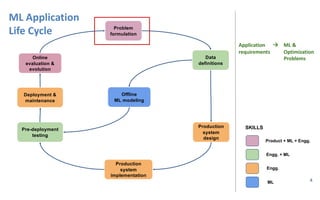

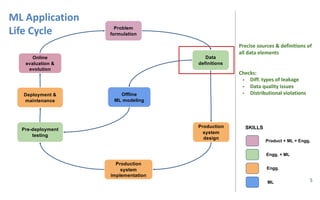

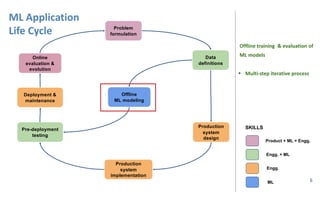

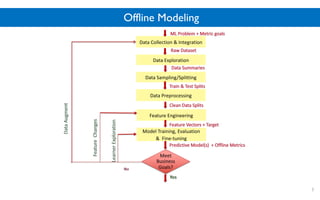

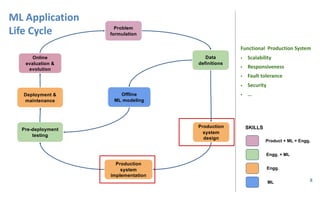

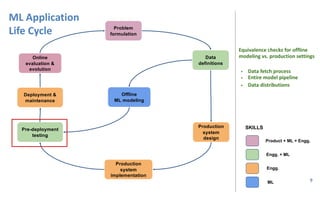

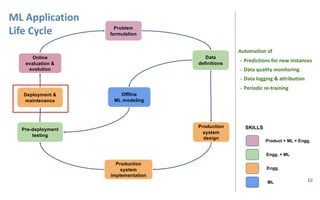

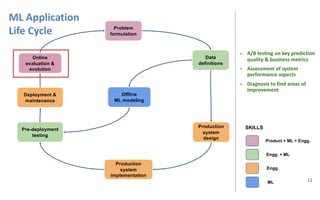

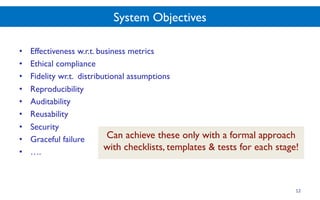

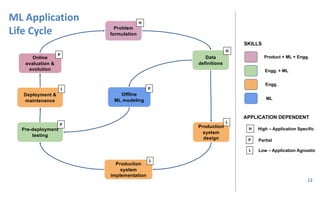

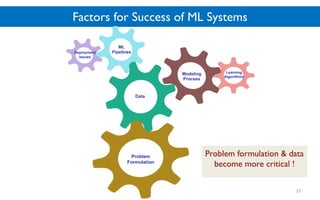

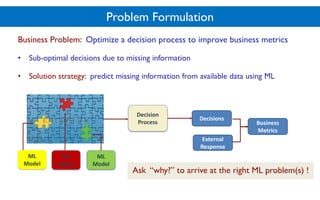

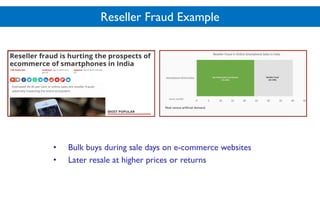

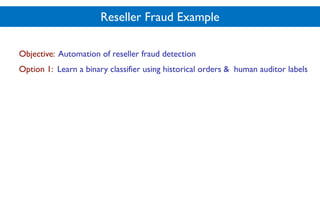

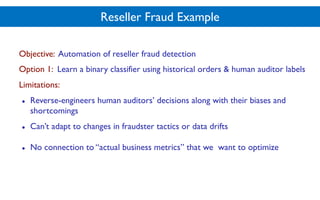

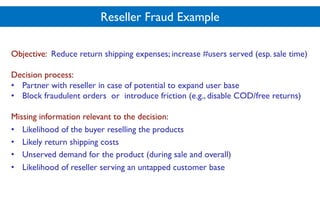

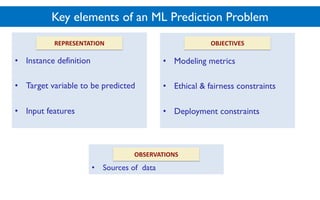

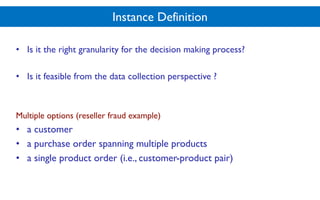

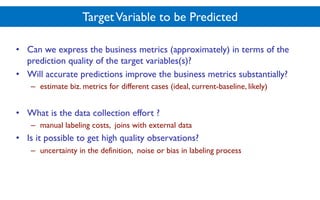

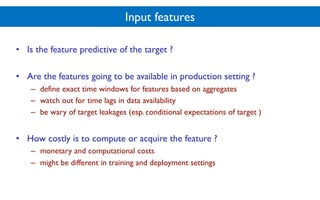

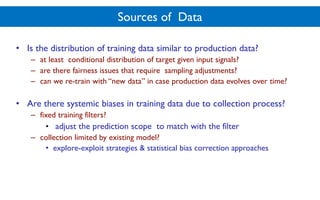

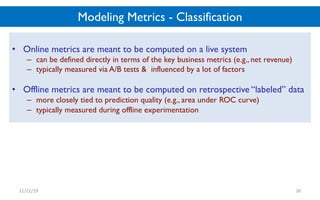

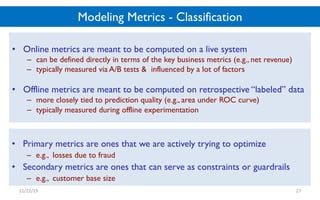

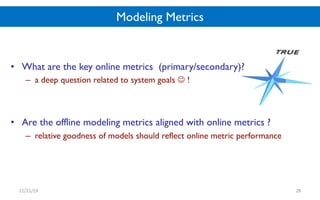

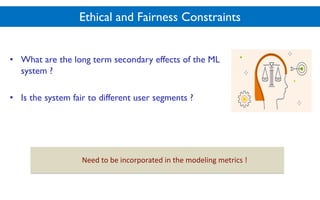

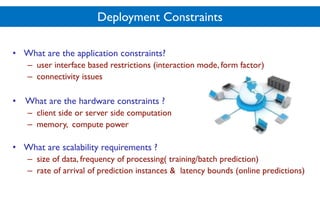

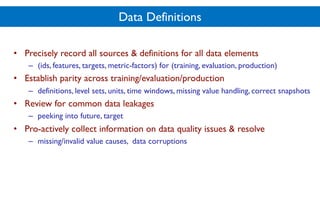

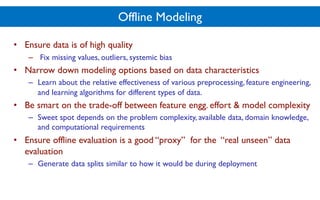

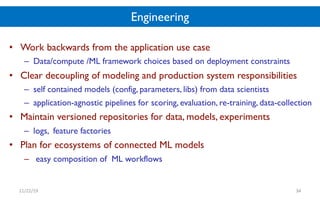

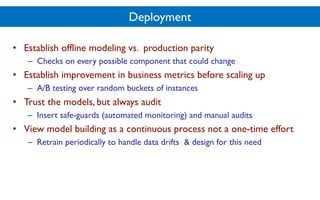

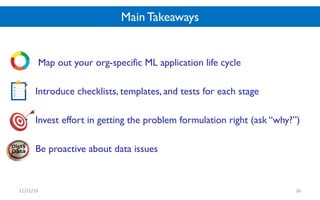

The document outlines the machine learning (ML) application life cycle, emphasizing stages such as problem formulation, data definitions, production system design, testing, and deployment. It highlights the importance of effective data management, system performance monitoring, ethical considerations, and continuous improvement through retraining. It advocates a structured approach, with checklists and templates, to support successful ML system development and optimization.