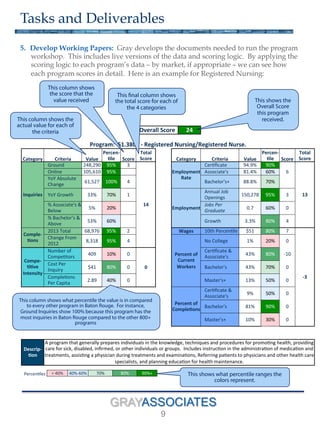

The document outlines a structured approach to program selection for educational institutions, built on clear criteria, data analysis, and collaborative decision-making. It details a methodology for evaluating programs on student demand, job opportunities, and competition, and culminates in a decision-making workshop that refines the scoring systems and identifies high-potential programs. Deliverables include comprehensive datasets and profiles of the selected programs to support strategic planning.