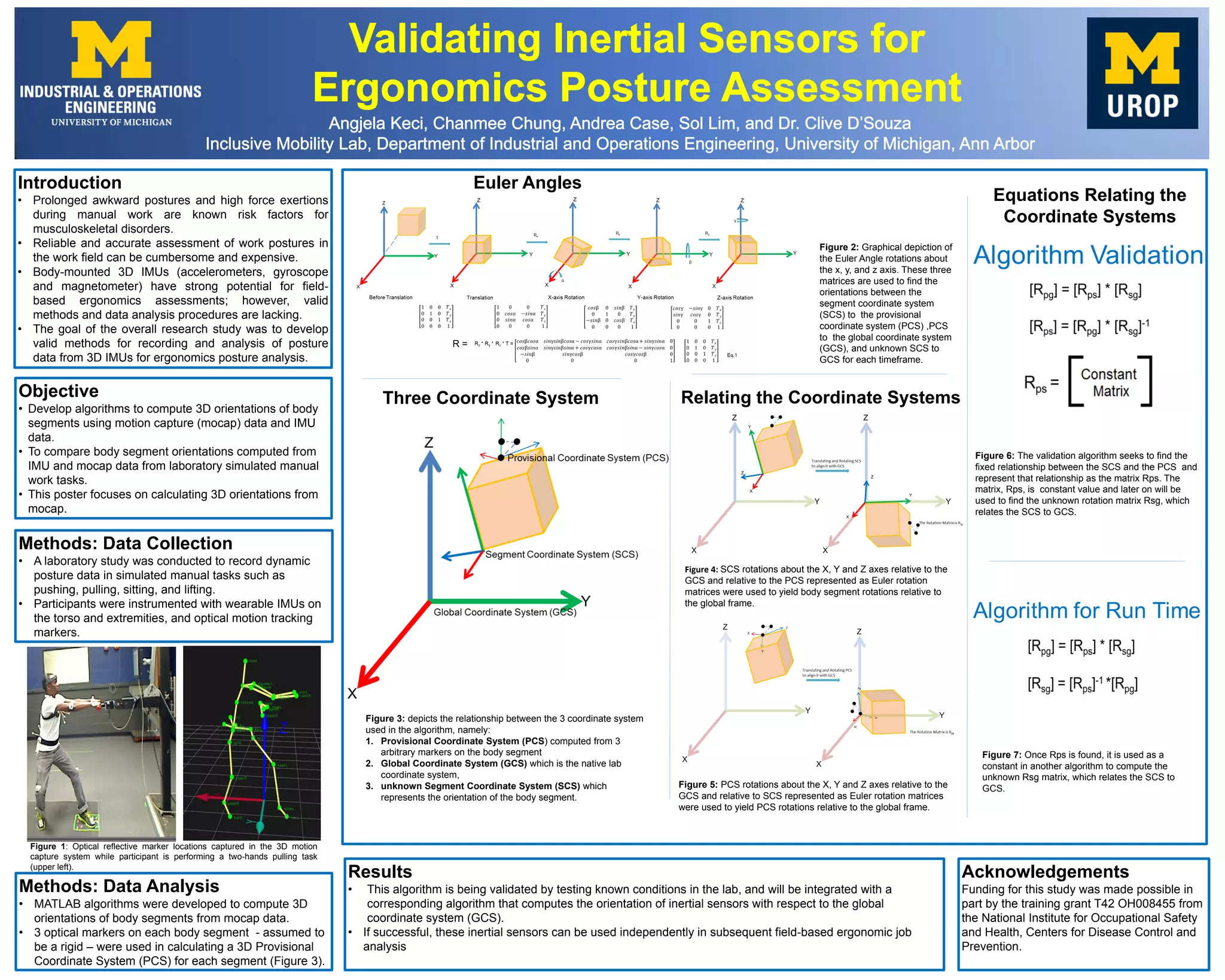

This document describes a study that aimed to develop algorithms to calculate three-dimensional (3D) body segment orientations from motion capture (mocap) and inertial measurement unit (IMU) data recorded during simulated manual work tasks. Participants performed the tasks while wearing IMUs together with optical markers tracked by the mocap system. The mocap algorithms were developed in MATLAB and compute body segment orientations by defining three coordinate systems (provisional, global, and segment) and relating them through Euler rotation matrices. The goal was to validate the mocap algorithm and integrate it with an algorithm that calculates IMU orientations, enabling field-based ergonomic assessments with body-worn IMUs.
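The study's MATLAB code is not reproduced here. As an illustration only, the Python sketch below shows one common way to build a segment coordinate system from three markers, express one segment relative to another, and read off Euler angles. Everything specific in it is an assumption rather than the study's documented method: the hypothetical marker roles (`origin`, `axial`, `lateral`), the use of the vector to the third marker as a non-orthogonal provisional axis that is turned into orthogonal axes with cross products, the convention that the matrix columns are the segment axes in global (lab) coordinates, and the Z-Y-X Euler sequence.

```python
import math

# Minimal vector and matrix helpers (pure Python, 3-element lists).
def sub(a, b):
    return [a[i] - b[i] for i in range(3)]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def unit(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]

def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def segment_frame(origin, axial, lateral):
    """Rotation matrix whose columns are the segment's x, y, z axes
    expressed in global (lab) coordinates.

    Hypothetical marker roles: `axial` lies on the segment's long axis and
    `lateral` is any marker off that axis. The vector to `lateral` is a
    provisional axis: it need not be perpendicular to the long axis and
    only fixes the segment plane through cross products."""
    z = unit(sub(axial, origin))          # long axis of the segment
    provisional = sub(lateral, origin)    # provisional axis (not orthogonal)
    y = unit(cross(z, provisional))       # normal to the marker plane
    x = cross(y, z)                       # unit length; completes right-handed frame
    return [[x[i], y[i], z[i]] for i in range(3)]

def relative_rotation(r_proximal, r_distal):
    """Orientation of the distal segment expressed in the proximal frame."""
    return matmul(transpose(r_proximal), r_distal)

def euler_zyx(r):
    """(yaw, pitch, roll) in degrees, assuming R = Rz(yaw) Ry(pitch) Rx(roll)."""
    pitch = math.asin(max(-1.0, min(1.0, -r[2][0])))  # clamp rounding error
    yaw = math.atan2(r[1][0], r[0][0])
    roll = math.atan2(r[2][1], r[2][2])
    return tuple(math.degrees(a) for a in (yaw, pitch, roll))

# Usage with made-up marker positions (metres, global frame):
pelvis = segment_frame([0, 0, 0], [0, 0, 1], [1, 0, 0])  # aligned with global
trunk = segment_frame([0, 0, 0], [0, 0, 1], [0, 1, 0])   # turned about vertical
print(euler_zyx(relative_rotation(pelvis, trunk)))  # yaw of about 90 degrees, no pitch or roll
```

Keeping orientations as rotation matrices until the last step avoids accumulating Euler-angle artifacts; the decomposition is only for reporting, and it becomes ill-defined (gimbal lock) when pitch approaches ±90°, that is, when `r[2][0]` approaches ±1. A different Euler sequence would change `euler_zyx` but not the frame construction.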