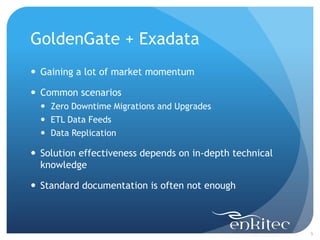

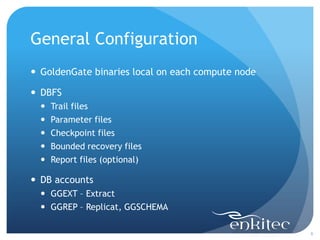

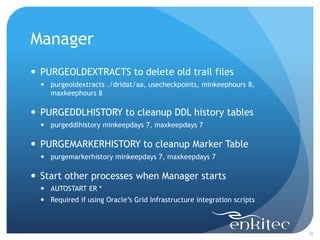

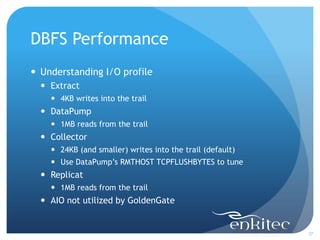

This document summarizes Alex Fatkulin's experience running GoldenGate on Exadata. It discusses general configuration considerations like using DBFS for trail files and parameter files. It provides tips for optimizing the Manager, Extract, DataPump, and Replicat components, including redo access options, bounded recovery, compressed tables, and transient primary key updates. It also covers DBFS performance considerations related to GoldenGate's I/O profile.

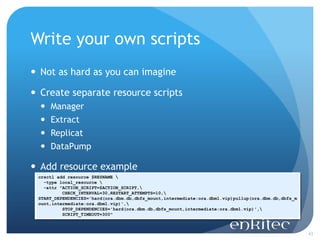

![DataPump – Trail not Available

Process will get stuck on positioning if trail [sequence]

is not available

GGSCI (exa1.test.com) 4> add extract exa_dp, exttrailsource ./dirdat/aa

EXTRACT added.

GGSCI (exa1.test.com) 2> info EXA_DP

EXTRACT EXA_DP Last Started 2013-01-26 19:51 Status RUNNING

Checkpoint Lag 00:00:00 (updated 00:00:03 ago)

Log Read Checkpoint File ./dirdat/aa000000

First Record RBA 0

...

open("./dirdat/aa000000", O_RDONLY) = -1 ENOENT (No such file or directory)

nanosleep({1, 0}, NULL) = 0

open("./dirdat/aa000000", O_RDONLY) = -1 ENOENT (No such file or directory)

nanosleep({1, 0}, NULL) = 0

...

GGSCI (exa1.test.com) 7> alter EXA_DP, extseqno 2

EXTRACT altered.

28](https://image.slidesharecdn.com/fatkulinpresentation-130220153431-phpapp02/85/Fatkulin-presentation-27-320.jpg)

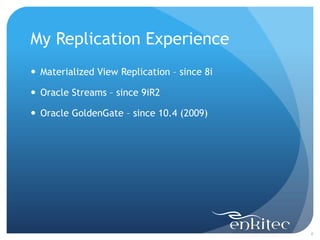

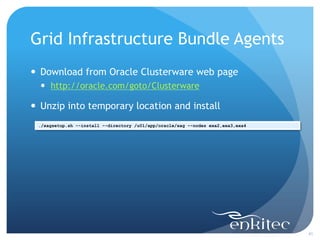

![Grid Infrastructure Bundle Agents

Make sure CRS_HOME environment variable is set

Script relies on CRS_HOME to find crsctl executable

./agctl.pl add goldengate ogg1

--gg_home /u01/app/oracle/ggs

--instance_type both

--oracle_home /u01/app/oracle/product/11.2.0/db_1

--db_services dbm.ogg_rep1

--databases dbm

--monitor_extracts exa_ext

--monitor_replicats exa_rep

--vip_name ora.dbm1.vip

[oracle@exa1 ~]$ crsctl status res xag.ogg1.goldengate

NAME=xag.ogg1.goldengate

TYPE=xag.goldengate.type

TARGET=OFFLINE

STATE=OFFLINE

[oracle@exa1 ~]$ crsctl start res xag.ogg1.goldengate

CRS-2672: Attempting to start 'xag.ogg1.goldengate' on ‘exa1'

CRS-2676: Start of 'xag.ogg1.goldengate' on ‘exa1' succeeded

42](https://image.slidesharecdn.com/fatkulinpresentation-130220153431-phpapp02/85/Fatkulin-presentation-41-320.jpg)