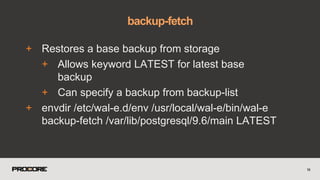

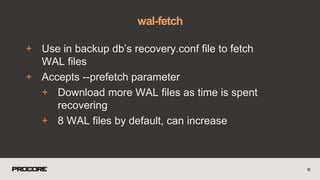

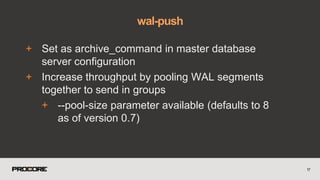

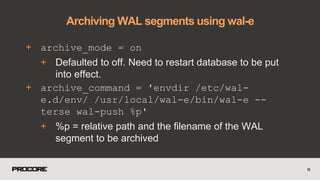

This deck outlines how to automate database recovery for PostgreSQL, covering the essential configuration, logging, and archiving of WAL segments. It describes using the WAL-E tool for backup and restore, including the commands for handling WAL files and setting up the recovery configuration. It also stresses correct permissions and environment variables, and testing the recovery procedure regularly, so that restores are reliable when a database fails.

![Slide 7](https://image.slidesharecdn.com/databaserecoveryautomation-170308230050/85/Automating-Disaster-Recovery-PostgreSQL-7-320.jpg)

Archiving WAL segments

+ https://github.com/wal-e/wal-e
+ Continuous WAL archiving Python tool
+ `sudo python3 -m pip install wal-e[aws,azure,google,swift]`
+ Works on most operating systems
+ Can push to S3, Azure Blob Store, Google Storage, Swift
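To make continuous archiving concrete, the sketch below renders the `postgresql.conf` lines that hand each finished WAL segment to WAL-E. The slides do not show this configuration: the `envdir /etc/wal-e.d/env` wrapper and the `archive_timeout` value follow the convention in the WAL-E README and are assumptions here, as are the helper names. `wal_level = replica` assumes PostgreSQL 9.6 or later; older releases use `archive` or `hot_standby`.

```python
def archiving_settings(env_dir="/etc/wal-e.d/env", archive_timeout=60):
    """Return postgresql.conf settings that push WAL segments with WAL-E.

    env_dir is a hypothetical envdir directory holding the storage
    credentials; point it at wherever yours live.
    """
    return {
        "wal_level": "replica",  # assumes PostgreSQL 9.6+
        "archive_mode": "on",
        # PostgreSQL replaces %p with the path of the segment to archive.
        "archive_command": f"envdir {env_dir} wal-e wal-push %p",
        "archive_timeout": str(archive_timeout),  # seconds
    }


def render_conf(settings):
    """Format the settings as quoted key = 'value' lines."""
    return "\n".join(f"{key} = '{value}'" for key, value in settings.items())


if __name__ == "__main__":
    print(render_conf(archiving_settings()))
```

Generating the lines from a function rather than editing the file by hand keeps the archiving setup reproducible across the primary and any test-restore hosts.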

![Slide 15](https://image.slidesharecdn.com/databaserecoveryautomation-170308230050/85/Automating-Disaster-Recovery-PostgreSQL-15-320.jpg)

delete

+ Delete data from storage
+ Needs `--confirm` flag
+ Also accepts `--dry-run`
+ Accepts `before`, `retain`, `everything`
+ `wal-e delete [--confirm] retain 5`
+ Delete all backups and segment files older than the 5 most recent
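Because `delete` is destructive, an automation script might default to a dry run and require an explicit opt-in before passing `--confirm`. The sketch below only builds the argument list and does not execute anything. The helper name is hypothetical, and placing `--dry-run` in the same position the slide shows for `--confirm` is an assumption.

```python
import shlex


def build_delete_command(keep, confirm=False):
    """Build a `wal-e delete ... retain N` argument list.

    Defaults to --dry-run so nothing is removed unless confirm=True.
    """
    if keep < 1:
        raise ValueError("keep must be at least 1 backup")
    flag = "--confirm" if confirm else "--dry-run"
    return ["wal-e", "delete", flag, "retain", str(keep)]


if __name__ == "__main__":
    # Preview first, then the destructive form.
    print(shlex.join(build_delete_command(5)))
    print(shlex.join(build_delete_command(5, confirm=True)))
```

The list form can be handed to `subprocess.run` directly, which avoids shell quoting problems; the credentials WAL-E needs would still have to be supplied through the environment.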

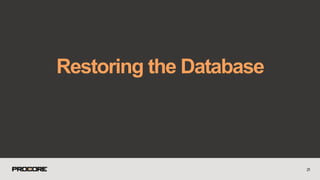

![Slide 26](https://image.slidesharecdn.com/databaserecoveryautomation-170308230050/85/Automating-Disaster-Recovery-PostgreSQL-26-320.jpg)

Automated Restoration Script

+ Configure PostgreSQL settings
+ Create a recovery.conf file
+ Start backup fetch
+ Start Postgres
+ Perform sample queries
+ Notify on success

`I, [2016-08-17T20:54:16.516658 #9196] INFO -- : Setting up configuration files`

`I, [2016-08-17T20:55:30.782533 #9300] INFO -- : Setup complete. Beginning backup fetch.`

`I, [2016-08-18T21:12:05.646145 #29825] INFO -- : Backup fetch complete.`

`I, [2016-08-18T22:20:06.445003 #29825] INFO -- : Starting postgres.`

`I, [2016-08-18T22:12:07.082780 #29825] INFO -- : Postgres started. Restore under way`

`I, [2016-08-18T24:12:07.082855 #29825] INFO -- : Restore complete. Reporting to Datadog`
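The restoration steps above can be sketched as an ordered list of actions that are logged one by one and halted at the first failure. This is not the author's script: the function names are hypothetical, the actions are placeholders, and the `restore_command` line follows the WAL-E README with an assumed `envdir` path. Note that `recovery.conf` applies to PostgreSQL 11 and earlier; from version 12 the same setting lives in `postgresql.conf` alongside a `recovery.signal` file.

```python
import logging

logger = logging.getLogger("restore")


def recovery_conf(env_dir="/etc/wal-e.d/env"):
    """Return recovery.conf contents that fetch WAL segments with WAL-E.

    env_dir is an assumed envdir directory with the storage credentials.
    """
    return f"restore_command = 'envdir {env_dir} wal-e wal-fetch \"%f\" \"%p\"'\n"


def run_restore(steps):
    """Run (description, callable) pairs in order; stop at the first failure.

    Returns the descriptions of the steps that completed.
    """
    completed = []
    for description, action in steps:
        logger.info("Starting: %s", description)
        try:
            action()
        except Exception:
            logger.exception("Failed: %s", description)
            break
        logger.info("Finished: %s", description)
        completed.append(description)
    return completed


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)

    def placeholder():
        """Stand-in for real work such as running `wal-e backup-fetch`."""

    run_restore([
        ("Configure PostgreSQL settings", placeholder),
        ("Create recovery.conf", lambda: print(recovery_conf(), end="")),
        ("Start backup fetch", placeholder),
        ("Start Postgres", placeholder),
        ("Perform sample queries", placeholder),
        ("Notify on success", placeholder),
    ])
```

Stopping at the first failed step means the success notification is only sent when every earlier step finished, which is what makes the notification meaningful as a recovery test.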

![Slide 34](https://image.slidesharecdn.com/databaserecoveryautomation-170308230050/85/Automating-Disaster-Recovery-PostgreSQL-34-320.jpg)

Checking for Completion

`def latest_session_page_timestamp`

`PG.connect(dbname: 'procore', user: 'postgres').e… DESC LIMIT 1;")[0]["created_at"]`

`end`
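One way to turn the latest-row timestamp into a completion check is to compare it with a reference time and accept the restore once the gap is small enough. The sketch below assumes that approach; the function name, the choice of reference time, and the one-hour default are illustrative and do not come from the slides.

```python
from datetime import datetime, timedelta


def restore_looks_complete(latest_row_at, reference_time,
                           max_lag=timedelta(hours=1)):
    """Return True when the newest restored row is within max_lag
    of reference_time.

    latest_row_at is the timestamp read from the restored database,
    or None if the query returned no rows.
    """
    if latest_row_at is None:
        return False
    return reference_time - latest_row_at <= max_lag


if __name__ == "__main__":
    reference = datetime(2016, 8, 18, 22, 0)
    print(restore_looks_complete(datetime(2016, 8, 18, 21, 30), reference))
    print(restore_looks_complete(datetime(2016, 8, 17, 21, 30), reference))
```

Checking a table that receives frequent writes gives a quick signal that WAL replay reached recent data, though it does not prove that every table was restored.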