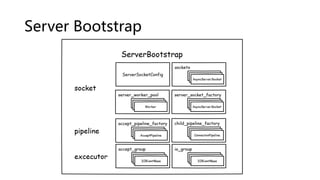

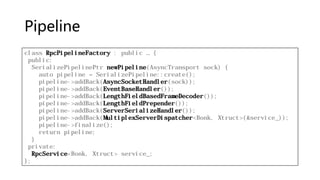

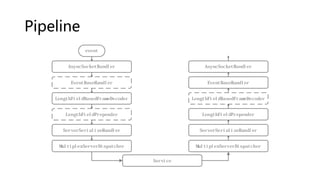

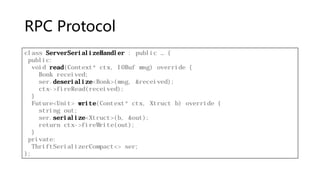

This document summarizes Wangle, Facebook's C++ networking framework built on folly. It shows how to build RPC servers and clients from asynchronous pipelines. Topics include asynchronous I/O driven by event loops, thread pools such as IOThreadPoolExecutor, pipelines composed of handlers, RPC protocols built on serialization, routing new connections to worker threads based on their first bytes, and composing concurrent work with futures (collectAll, reduce).
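The sketch below makes "pipelines composed of handlers" concrete in plain C++17, with no Wangle dependency. `ToyPipeline` and its handlers are hypothetical. Only the method name `addBack` is borrowed from Wangle's `Pipeline`. The sketch models only the inbound direction: each handler may transform a message and then forward it to the next handler, which is the role `ctx->fireRead()` plays in Wangle.

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical toy pipeline (inbound direction only). Each handler receives a
// message plus a `next` callback; calling `next` forwards a message to the
// following handler, much as a Wangle handler forwards with ctx->fireRead().
class ToyPipeline {
 public:
  using Next = std::function<void(std::string)>;
  using Handler = std::function<void(std::string, const Next&)>;

  // Appends a handler at the end of the chain.
  ToyPipeline& addBack(Handler h) {
    handlers_.push_back(std::move(h));
    return *this;
  }

  // Feeds a message into the first handler.
  void read(std::string msg) { dispatch(0, std::move(msg)); }

 private:
  void dispatch(std::size_t i, std::string msg) {
    if (i == handlers_.size()) {
      return;  // ran past the last handler; drop the message
    }
    handlers_[i](std::move(msg), [this, i](std::string m) {
      dispatch(i + 1, std::move(m));
    });
  }

  std::vector<Handler> handlers_;
};
```

For example, a framing handler placed in front of a collecting handler would turn `"hello\r\n"` into `"hello"` before the collector sees it. Real Wangle pipelines also carry an outbound `write` direction, which this sketch omits.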

![Slide 10](https://image.slidesharecdn.com/facebookcwangle-160909125141/85/Facebook-C-Wangle-10-320.jpg)

```cpp
// …
  {
    cout << response.string_thing << endl;
  });
}
```

![RPC Handler](https://image.slidesharecdn.com/facebookcwangle-160909125141/85/Facebook-C-Wangle-14-320.jpg)

```cpp
class BonkMultiplexClientDispatcher : public … {
 public:
  // A response arrived: fulfil the promise registered under its id.
  void read(Context* ctx, Xtruct in) override {
    auto search = requests_.find(in.i32_thing);
    if (search == requests_.end()) {
      return;  // no outstanding request with this id
    }
    auto p = move(search->second);
    requests_.erase(search);
    p.setValue(in);
  }

  // Send a request; its response is matched back by arg.type.
  Future<Xtruct> operator()(Bonk arg) override {
    auto& p = requests_[arg.type];
    auto f = p.getFuture();
    this->pipeline_->write(arg);
    return f;
  }

 private:
  unordered_map<int32_t, Promise<Xtruct>> requests_;
};
```
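The dispatcher's core trick is to key each outstanding promise by an id that travels in the request (`arg.type`) and comes back in the response (`in.i32_thing`). Responses can then arrive in any order. The sketch below reproduces that idea with `std::promise`/`std::future` instead of folly. The struct fields and the `write` hook are hypothetical stand-ins for the Thrift types and for `pipeline_->write()`.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <future>
#include <stdexcept>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical stand-ins for the slide's Thrift types (only the fields used).
struct Bonk {
  std::string message;
  int32_t type;  // doubles as the request id, as on the slide
};
struct Xtruct {
  std::string string_thing;
  int32_t i32_thing;  // echoes the request id
};

class ToyMultiplexDispatcher {
 public:
  // Hypothetical transport hook standing in for pipeline_->write(arg).
  std::function<void(const Bonk&)> write;

  // Registers a promise under the request id, sends the request and returns
  // a future that read() fulfils later.
  std::future<Xtruct> operator()(const Bonk& arg) {
    auto [it, inserted] = requests_.try_emplace(arg.type);
    if (!inserted) {
      throw std::invalid_argument("request id already in flight");
    }
    std::future<Xtruct> f = it->second.get_future();
    if (write) {
      write(arg);
    }
    return f;
  }

  // Called for each decoded response; unknown ids are ignored.
  void read(Xtruct in) {
    auto search = requests_.find(in.i32_thing);
    if (search == requests_.end()) {
      return;
    }
    std::promise<Xtruct> p = std::move(search->second);
    requests_.erase(search);
    p.set_value(std::move(in));
  }

  std::size_t pending() const { return requests_.size(); }

 private:
  std::unordered_map<int32_t, std::promise<Xtruct>> requests_;
};
```

One design choice here is to reject a duplicate in-flight id. With the slide's `requests_[arg.type]`, a second request that reuses an in-flight id would land on the same promise. Whether rejection is the right policy depends on how ids are assigned.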

![Connection Routing](https://image.slidesharecdn.com/facebookcwangle-160909125141/85/Facebook-C-Wangle-21-320.jpg)

```cpp
class NaiveRoutingDataHandler : public … {
 public:
  bool parseRoutingData(IOBufQueue& bufQueue, RoutingData& routingData)
      override {
    if (bufQueue.empty()) {
      return false;  // no bytes yet, nothing to route on
    }
    auto buf = bufQueue.move();
    buf->coalesce();
    // Route on the first byte of the connection.
    routingData.routingData = buf->data()[0];
    // Keep the bytes so the chosen worker's pipeline still receives them.
    routingData.bufQueue.append(move(buf));
    return true;
  }
};

ServerBootstrap<DefaultPipeline> server;
server.pipeline(AcceptRoutingPipelineFactory(…));
```
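`NaiveRoutingDataHandler` routes each new connection on its first byte. Below is a self-contained sketch of that decision. `Routed`, `routeConnection` and the modulo policy are hypothetical choices made for illustration, not Wangle's internal worker-selection code. The sketch keeps two points from the slide: routing waits until at least one byte is available, and the bytes already read are kept so the selected worker still sees them.

```cpp
#include <cstddef>
#include <optional>
#include <string>

// Hypothetical result of a routing decision.
struct Routed {
  std::size_t worker;    // index of the worker thread that gets the connection
  std::string buffered;  // bytes already read; replayed into that worker
};

// Picks a worker from the first byte of the connection. Returns nullopt when
// no bytes have arrived yet, mirroring parseRoutingData() returning false.
std::optional<Routed> routeConnection(const std::string& firstBytes,
                                      std::size_t numWorkers) {
  if (firstBytes.empty() || numWorkers == 0) {
    return std::nullopt;
  }
  // Assumed policy: first byte modulo worker count, so connections that
  // start with the same byte always land on the same worker.
  auto key = static_cast<unsigned char>(firstBytes[0]);
  return Routed{key % numWorkers, firstBytes};
}
```

With four workers, a connection that starts with `'A'` (byte value 65) goes to worker 1, because 65 % 4 == 1.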

![Parallel Computing](https://image.slidesharecdn.com/facebookcwangle-160909125141/85/Facebook-C-Wangle-22-320.jpg)

```cpp
vector<Future<int>> futures;
for (int channel = 0; channel < 10; ++channel) {
  // Each future sums one chunk of 100 integers. makeFuture() is already
  // fulfilled, so without an executor (via()) this callback runs inline.
  futures.emplace_back(makeFuture().then([channel] {
    int sum = 0;
    for (int i = 100 * channel; i < 100 * channel + 100; ++i) {
      sum += i;
    }
    return sum;
  }));
}

// Runs once every future has completed, with results in input order.
collectAll(futures.begin(), futures.end())
    .then([](vector<Try<int>>&& parts) {
      for (auto& part : parts) {
        cout << part.value() << endl;
      }
    });
```
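For comparison, here is the same fan-out and gather written only with the standard library. This sketch assumes that `std::async` with `std::launch::async` is an acceptable stand-in for an executor. One difference matters: `collectAll(...).then(...)` attaches a callback, whereas this version blocks the calling thread on each `get()`. `chunkSums` is a hypothetical helper.

```cpp
#include <future>
#include <vector>

// Sums 100 consecutive integers per channel on separate tasks, then gathers
// the results in channel order. Blocks until every task has finished.
std::vector<int> chunkSums(int channels) {
  std::vector<std::future<int>> futures;
  for (int channel = 0; channel < channels; ++channel) {
    futures.push_back(std::async(std::launch::async, [channel] {
      int sum = 0;
      for (int i = 100 * channel; i < 100 * channel + 100; ++i) {
        sum += i;
      }
      return sum;
    }));
  }
  std::vector<int> parts;
  parts.reserve(futures.size());
  for (auto& f : futures) {
    parts.push_back(f.get());
  }
  return parts;
}
```

Channel `c` covers `100c` through `100c + 99`, so its sum is `10000c + 4950`. That gives 4950 for channel 0 up to 94950 for channel 9, and the ten parts together add up to 499500.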

![Parallel Computing](https://image.slidesharecdn.com/facebookcwangle-160909125141/85/Facebook-C-Wangle-23-320.jpg)

```cpp
vector<Future<map<int, Entity>>> segments;
for (int segment = 0; segment < 10; ++segment) {
  segments.emplace_back(makeFuture().then([] {
    map<int, Entity> result;
    // fill segment result
    return result;
  }));
}

// The initial value must be spelled out: a bare {} cannot deduce its type.
Future<map<int, Entity>> entities = reduce(segments, map<int, Entity>(),
    [](map<int, Entity> last, map<int, Entity> current) {
      // merge current into last
      return last;  // the accumulator feeds the next step
    });
```
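`reduce` folds the segment results into one value in order, starting from an initial accumulator. The following standard-library sketch has the same shape. Three things in it are assumptions made for illustration: `Entity`, the fill rule, and the use of `std::map::merge` (C++17) as the merge step.

```cpp
#include <future>
#include <map>
#include <string>
#include <vector>

// Hypothetical entity type.
struct Entity {
  std::string name;
};

// Builds each segment's map on its own task, then folds the maps into one,
// in segment order, starting from an empty accumulator (like reduce).
std::map<int, Entity> buildEntities(int segments) {
  std::vector<std::future<std::map<int, Entity>>> futures;
  for (int segment = 0; segment < segments; ++segment) {
    futures.push_back(std::async(std::launch::async, [segment] {
      std::map<int, Entity> result;
      // Hypothetical fill: segment s owns ids 10*s .. 10*s + 9.
      for (int id = 10 * segment; id < 10 * segment + 10; ++id) {
        result.emplace(id, Entity{"entity-" + std::to_string(id)});
      }
      return result;
    }));
  }
  std::map<int, Entity> last;  // the accumulator
  for (auto& f : futures) {
    std::map<int, Entity> current = f.get();
    // Merge current into last; on duplicate keys the existing entry wins.
    last.merge(current);
  }
  return last;
}
```

The sketch uses `merge` because it moves nodes between maps instead of copying entries. A different conflict rule, such as letting later segments override earlier ones, would need an explicit loop.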

![Slide 40](https://image.slidesharecdn.com/facebookcwangle-160909125141/85/Facebook-C-Wangle-40-320.jpg)

```cpp
// …
  {
    if (t.hasException()) {
      p.setException(t.exception());
    } else {
      p.setWith([&]() { return func(t.get()); });
    }
  });
  return f;
}
```
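This slide shows the core of `then`. It waits for the upstream `Try` and forwards an exception untouched. Otherwise it runs `func` and captures either the result or whatever `func` throws. The toy below builds just enough machinery to run that logic. Its `Try`, `State`, `Promise` and `Future` are simplified, single-threaded stand-ins, not folly's classes, and `func` must return a non-void value.

```cpp
#include <exception>
#include <functional>
#include <memory>
#include <optional>
#include <stdexcept>
#include <type_traits>
#include <utility>

// Toy Try: a value or an exception (assumed shape, not folly::Try).
template <class T>
struct Try {
  std::optional<T> value;
  std::exception_ptr error;
  bool hasException() const { return error != nullptr; }
};

// State shared by one Promise and one Future; not thread-safe.
template <class T>
struct State {
  std::optional<Try<T>> result;
  std::function<void(Try<T>)> callback;
};

template <class T>
class Future;

template <class T>
class Promise {
 public:
  Promise() : state_(std::make_shared<State<T>>()) {}
  Future<T> getFuture() { return Future<T>(state_); }

  // Run the attached callback now, or store the result for later.
  void setTry(Try<T> t) {
    if (state_->callback) {
      state_->callback(std::move(t));
    } else {
      state_->result = std::move(t);
    }
  }
  void setValue(T v) { setTry(Try<T>{std::move(v), nullptr}); }
  void setException(std::exception_ptr e) { setTry(Try<T>{std::nullopt, e}); }

  // Store func()'s return value, or whatever it throws.
  template <class F>
  void setWith(F func) {
    Try<T> t;
    try {
      t.value.emplace(func());
    } catch (...) {
      t.error = std::current_exception();
    }
    setTry(std::move(t));
  }

 private:
  std::shared_ptr<State<T>> state_;
};

template <class T>
class Future {
 public:
  explicit Future(std::shared_ptr<State<T>> state) : state_(std::move(state)) {}

  // Same branching as the slide: forward an exception, else run func.
  template <class F>
  Future<std::invoke_result_t<F, T>> then(F func) {
    using R = std::invoke_result_t<F, T>;
    Promise<R> p;
    Future<R> f = p.getFuture();
    setCallback([p, func](Try<T> t) mutable {
      if (t.hasException()) {
        p.setException(t.error);
      } else {
        p.setWith([&] { return func(std::move(*t.value)); });
      }
    });
    return f;
  }

  // Returns the value, rethrows a stored exception, or throws if not ready.
  T get() {
    if (!state_->result) throw std::logic_error("future not ready");
    if (state_->result->hasException()) {
      std::rethrow_exception(state_->result->error);
    }
    return std::move(*state_->result->value);
  }

 private:
  void setCallback(std::function<void(Try<T>)> cb) {
    if (state_->result) {
      cb(std::move(*state_->result));
      state_->result.reset();
    } else {
      state_->callback = std::move(cb);
    }
  }

  std::shared_ptr<State<T>> state_;
};
```

Chaining works because each `then` creates a fresh promise and returns that promise's future. Once the root promise is fulfilled, values flow down the chain, and an exception skips every later `func`.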

![Slide 41](https://image.slidesharecdn.com/facebookcwangle-160909125141/85/Facebook-C-Wangle-41-320.jpg)

```cpp
// …
    {
      return to_string(i);
    })
    .then([](string s) {
      return vector<string>(10, s);  // 10 copies of s
    });
```
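The chain turns a `Future<int>` into a `Future<string>` and then into a `Future<vector<string>>`, with each `then` mapping the value type. `std::future` has no `then`. A rough standard-library analogue is to let each stage own the previous future inside a deferred task. `makeChain` is a hypothetical helper, and unlike folly's callbacks, nothing in it runs until `get()` is called.

```cpp
#include <future>
#include <string>
#include <utility>
#include <vector>

// Builds int -> std::string -> std::vector<std::string> as three deferred
// stages. Each stage owns the previous stage's future and runs lazily when
// its own result is requested.
std::future<std::vector<std::string>> makeChain(int i) {
  std::future<int> fi = std::async(std::launch::deferred, [i] { return i; });
  std::future<std::string> fs = std::async(
      std::launch::deferred,
      [f = std::move(fi)]() mutable { return std::to_string(f.get()); });
  return std::async(std::launch::deferred, [f = std::move(fs)]() mutable {
    return std::vector<std::string>(10, f.get());  // 10 copies of the string
  });
}
```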

![Slide 42](https://image.slidesharecdn.com/facebookcwangle-160909125141/85/Facebook-C-Wangle-42-320.jpg)

```cpp
// …
  {
    // Handle only an Exp; any other outcome is forwarded unchanged.
    if (!t.withException([&](Exp e) {
          p.setWith([&] { return func(e); });
        })) {
      p.setTry(move(t));
    }
  }
  return f;
}
```
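`onError` mirrors `then` for the failure path. If the upstream `Try` holds an exception of type `Exp`, `func` computes a replacement value. Any other outcome, whether a value or a different exception, passes through via `setTry`. The synchronous sketch below isolates that decision. Its `Try` and the free function `onError` are hypothetical simplifications, not folly's API.

```cpp
#include <exception>
#include <optional>
#include <stdexcept>
#include <utility>

// Hypothetical minimal Try: a value or an exception_ptr.
template <class T>
struct Try {
  std::optional<T> value;
  std::exception_ptr error;

  // If the stored exception is an Exp, calls func with it and returns true.
  template <class Exp, class F>
  bool withException(F func) const {
    if (!error) {
      return false;
    }
    try {
      std::rethrow_exception(error);
    } catch (const Exp& e) {
      func(e);
      return true;
    } catch (...) {
      return false;
    }
  }
};

// Synchronous analogue of onError<Exp>(func).
template <class Exp, class T, class F>
Try<T> onError(Try<T> t, F func) {
  Try<T> recovered;
  bool handled = t.template withException<Exp>([&](const Exp& e) {
    try {
      recovered.value.emplace(func(e));
    } catch (...) {
      recovered.error = std::current_exception();  // func itself failed
    }
  });
  return handled ? recovered : t;  // unhandled: pass t through unchanged
}
```

On the slide, the same decision happens inside the callback. There, `p.setWith` plays the role of the inner `try`, and `p.setTry` forwards the unhandled case.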