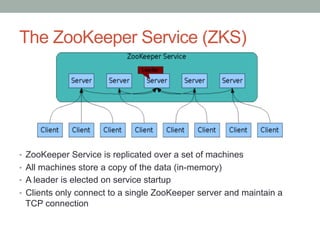

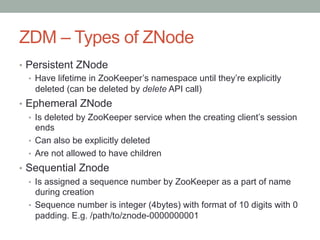

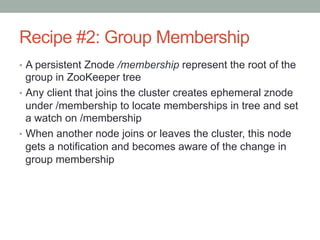

ZooKeeper is an open-source coordination service for distributed applications. It provides common services such as naming, configuration management, synchronization, and group services. It uses a hierarchical data model in which data is stored in znodes, which support watches, versioning, and access control. Common uses include distributed queues, leader election, and group membership. Recipes show how to implement queues and group membership on top of ZooKeeper.
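As a rough illustration of the leader election use mentioned above, the following sketch models only the selection step, assuming each candidate has created a sequential znode whose name ends in a zero-padded 10-digit counter. It runs entirely in memory and does not talk to a ZooKeeper server or use any client library; the `candidate-` prefix and the function names are hypothetical.

```python
import re
from typing import List, Optional

# Sequential znode names end in a zero-padded 10-digit counter.
# The "candidate-" names used with these helpers are hypothetical.
_SEQ_SUFFIX = re.compile(r"(\d{10})$")


def sequence_number(name: str) -> int:
    """Return the numeric sequence suffix of a sequential znode name."""
    match = _SEQ_SUFFIX.search(name)
    if match is None:
        raise ValueError(f"not a sequential znode name: {name!r}")
    return int(match.group(1))


def elect_leader(children: List[str]) -> str:
    """Pick the candidate with the lowest sequence number as leader."""
    if not children:
        raise ValueError("no candidates")
    return min(children, key=sequence_number)


def predecessor(children: List[str], me: str) -> Optional[str]:
    """Return the candidate just ahead of `me`, or None if `me` leads.

    In the usual recipe a non-leader watches this znode rather than the
    leader itself, so one departure wakes only one waiting client.
    """
    ordered = sorted(children, key=sequence_number)
    index = ordered.index(me)
    return ordered[index - 1] if index > 0 else None
```

In a real deployment the list of children would come from the ZooKeeper client, and the candidate znodes would typically be ephemeral so that a crashed client drops out of the election automatically.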

![The ZooKeeper Data Model (ZDM)](https://image.slidesharecdn.com/apachezookeeperseminartrinhvietdung032016-170424102005/85/Apache-Zookeeper-9-320.jpg)

The ZooKeeper Data Model (ZDM)

• Hierarchical namespace

• Each node is called a ZNode

• Every ZNode has data (given as byte[]) and can optionally have children

• ZNode paths:

  • Canonical, absolute, slash-separated

  • No relative references

  • Names can have Unicode characters

• Each ZNode maintains a stat structure
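The data model above can be sketched as a small in-memory tree. This is a toy model for illustration only, not the ZooKeeper client API: the `Tree` and `ZNode` classes, their method names, and the single `version` field (a simplified stand-in for the stat structure) are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


def validate_path(path: str) -> None:
    """Reject paths that are not canonical, absolute, and slash-separated."""
    if not path.startswith("/"):
        raise ValueError("path must be absolute")
    if path == "/":
        return
    if path.endswith("/"):
        raise ValueError("path must not end with '/'")
    for part in path[1:].split("/"):
        if part == "":
            raise ValueError("empty path component")
        if part in (".", ".."):
            raise ValueError("relative references are not allowed")


@dataclass
class ZNode:
    data: bytes = b""  # every node carries a byte array
    version: int = 0   # simplified stat: counts data changes only
    children: Dict[str, "ZNode"] = field(default_factory=dict)


class Tree:
    """Hypothetical in-memory namespace rooted at '/'."""

    def __init__(self) -> None:
        self.root = ZNode()

    def get(self, path: str) -> ZNode:
        validate_path(path)
        node = self.root
        if path != "/":
            for part in path[1:].split("/"):
                node = node.children[part]  # KeyError if the node is missing
        return node

    def create(self, path: str, data: bytes = b"") -> None:
        validate_path(path)
        if path == "/":
            raise ValueError("root already exists")
        parent_path, _, name = path.rpartition("/")
        parent = self.get(parent_path or "/")
        if name in parent.children:
            raise ValueError("node already exists")
        parent.children[name] = ZNode(data=data)

    def set_data(self, path: str, data: bytes) -> None:
        node = self.get(path)
        node.data = data
        node.version += 1

    def get_children(self, path: str) -> List[str]:
        return sorted(self.get(path).children)
```

A node here can hold data and children at the same time, which is the main way the model differs from a conventional file system, where a directory holds no data of its own.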
