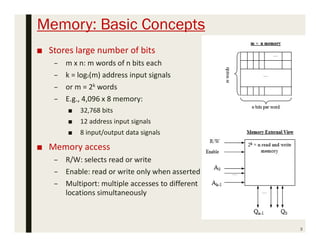

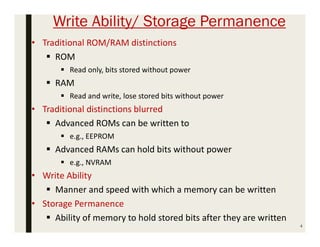

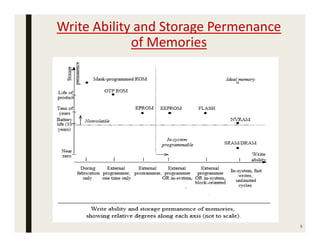

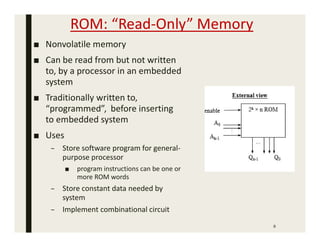

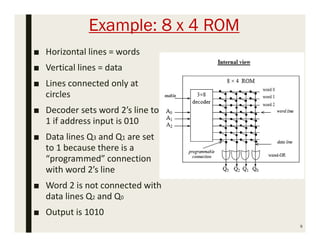

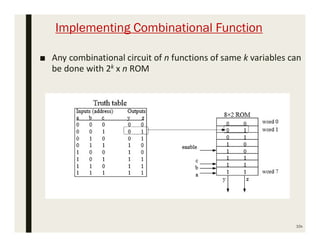

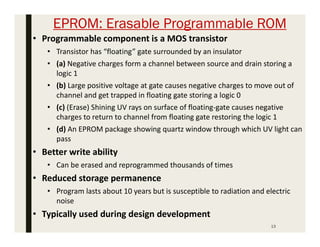

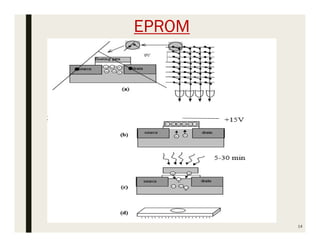

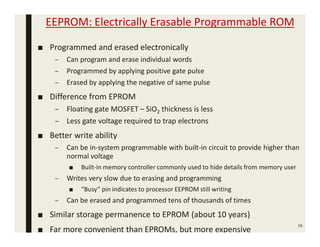

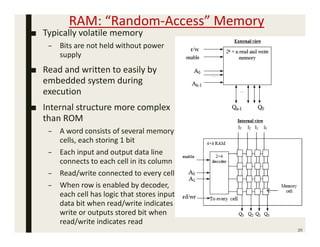

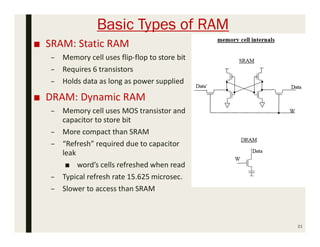

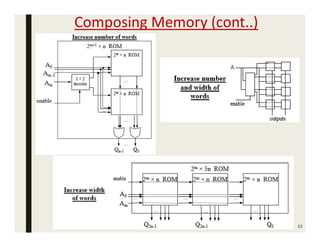

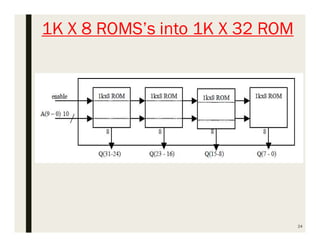

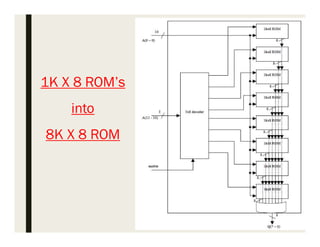

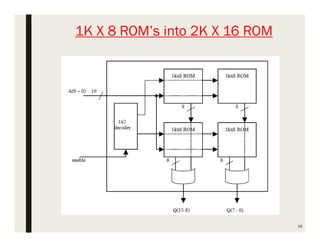

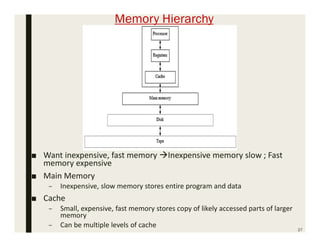

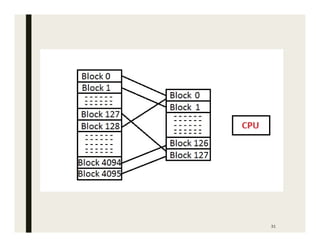

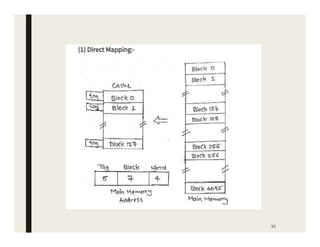

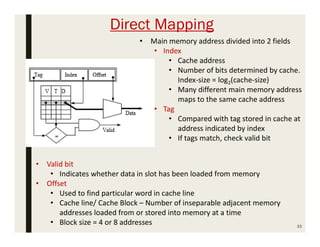

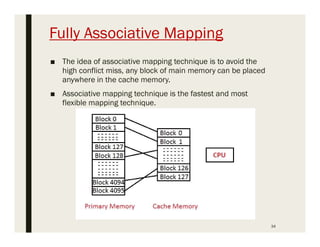

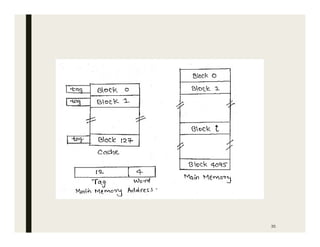

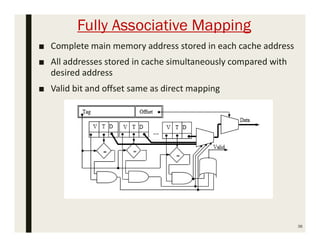

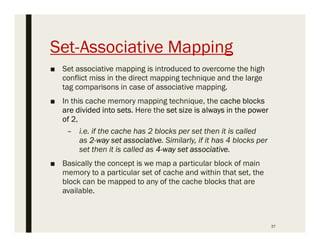

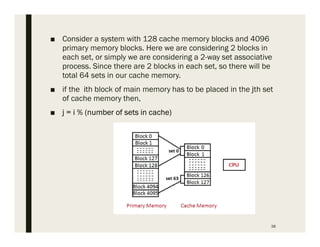

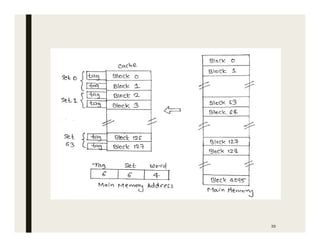

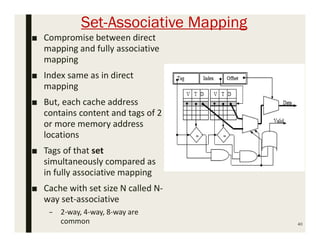

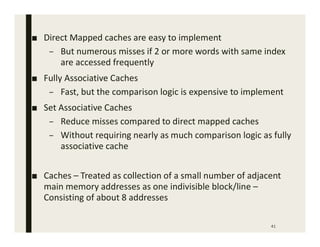

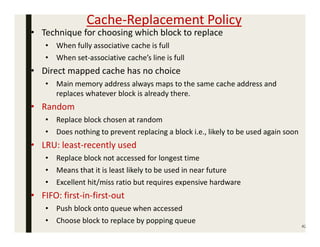

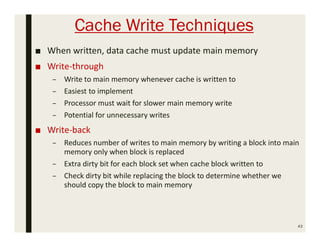

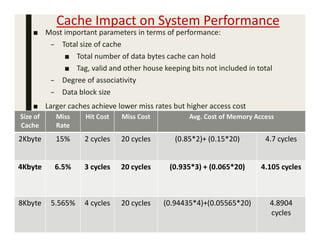

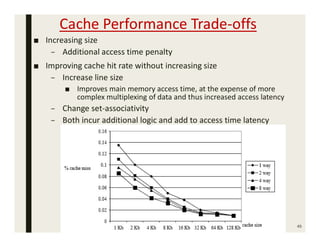

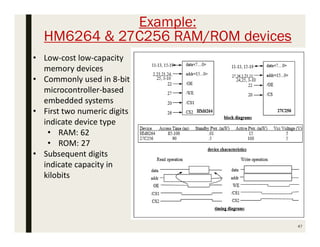

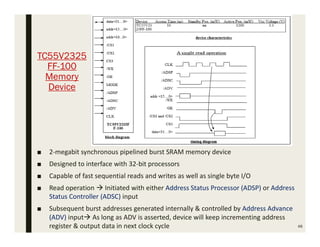

This document gives an overview of memory design for microcontroller-based embedded systems. It covers the main memory types (ROM, RAM, EEPROM, flash, and cache) and key concepts such as write ability, storage permanence, composing a larger memory from smaller memories, and the cache mapping techniques: direct mapping, fully associative mapping, and set-associative mapping. Examples and diagrams illustrate these concepts.
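The three cache mapping techniques named above differ mainly in how a memory address is split into a tag, a set index, and a byte offset. The following is a minimal sketch of that split, not taken from the slides. The names `CacheGeometry` and `split_address` and the example sizes (16 lines, 4-byte blocks) are assumptions chosen for illustration. Setting `ways` to 1 models direct mapping, setting it to the total number of lines models fully associative mapping, and anything in between models set-associative mapping.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class CacheGeometry:
    """Hypothetical cache description; all sizes are assumed to be powers of two."""
    num_lines: int   # total number of cache lines
    block_size: int  # bytes per cache line
    ways: int        # lines per set: 1 = direct-mapped, num_lines = fully associative

    @property
    def num_sets(self) -> int:
        return self.num_lines // self.ways


def split_address(addr: int, geo: CacheGeometry) -> Tuple[int, int, int]:
    """Split a byte address into (tag, set_index, offset)."""
    offset = addr % geo.block_size          # byte position inside the block
    block_number = addr // geo.block_size   # which memory block the address is in
    set_index = block_number % geo.num_sets # which set the block must go into
    tag = block_number // geo.num_sets      # remaining bits, stored to identify the block
    return tag, set_index, offset


direct = CacheGeometry(num_lines=16, block_size=4, ways=1)
two_way = CacheGeometry(num_lines=16, block_size=4, ways=2)
fully = CacheGeometry(num_lines=16, block_size=4, ways=16)

for name, geo in [("direct", direct), ("2-way", two_way), ("fully assoc.", fully)]:
    print(name, split_address(0x1234, geo))
```

With a fully associative geometry there is only one set, so the index is always 0 and the whole block number becomes the tag. With direct mapping the index alone selects a single line, which makes the lookup simpler but lets two blocks with the same index evict each other.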