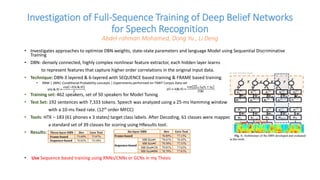

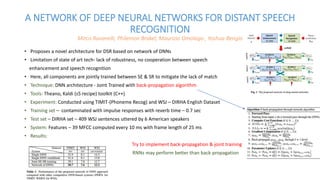

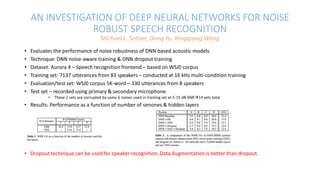

This document surveys investigations into deep learning for robust speaker recognition and speech emotion recognition, using deep belief networks (DBNs) and deep neural networks (DNNs). It covers the optimization of DBN weights and the joint training of speech enhancement and recognition components to improve robustness to noise. The results highlight sequence-based training and dropout training as effective ways to improve performance, and the document closes with references to related research papers.