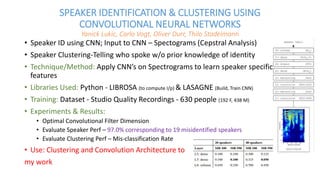

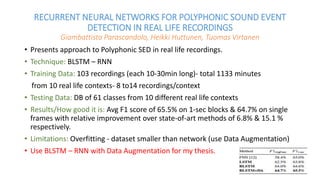

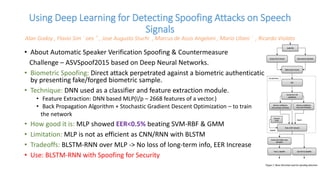

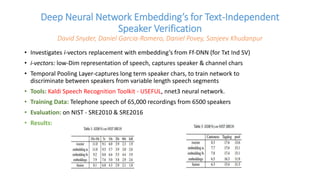

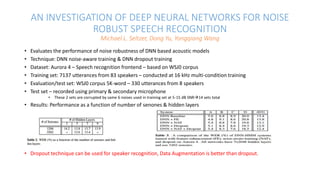

The document discusses advances in deep learning for speaker recognition and related applications, including speaker verification, emotion recognition, and countermeasures against spoofing attacks. Methods based on convolutional neural networks, recurrent neural networks, and deep neural networks are evaluated, and the document reports their performance metrics along with the limitations of the experimental settings used. The findings from these studies point to potential future applications of robust speaker identification and verification systems in the security and IoT domains.