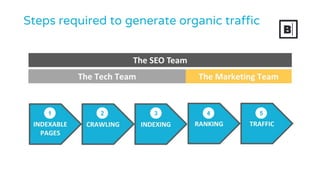

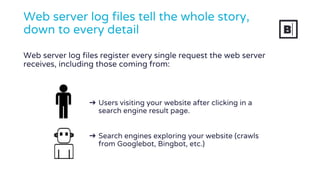

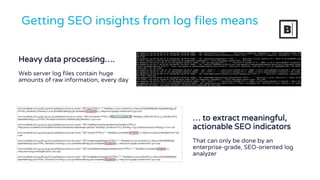

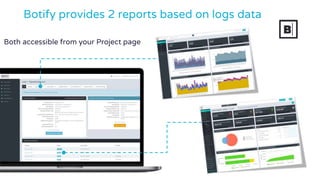

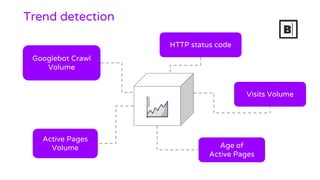

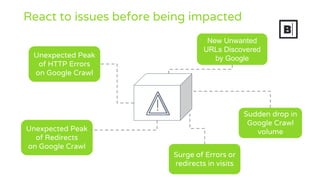

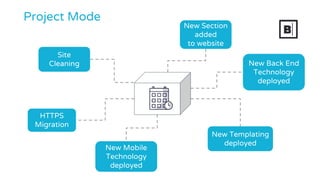

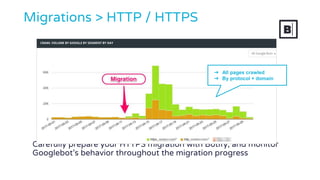

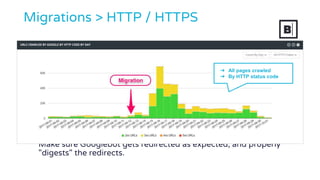

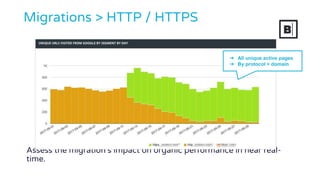

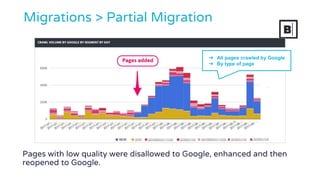

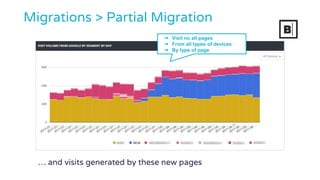

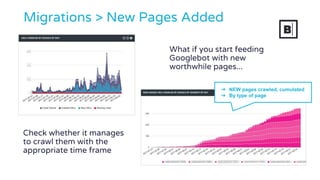

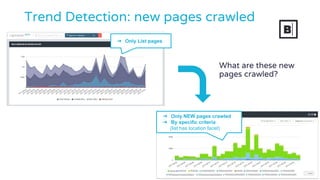

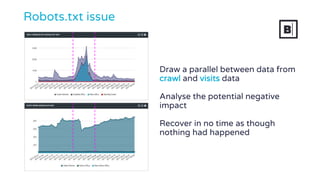

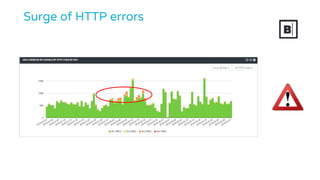

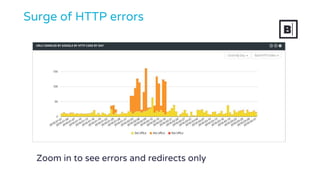

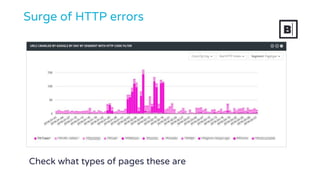

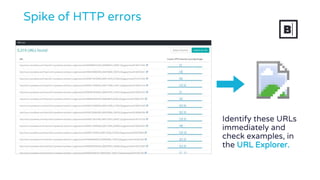

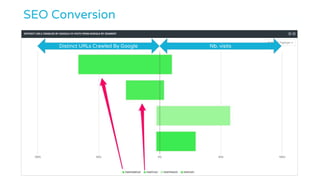

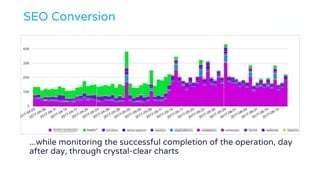

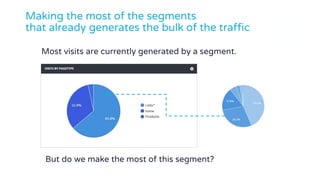

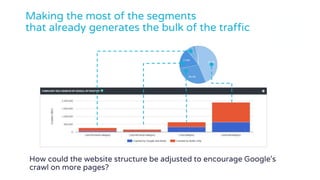

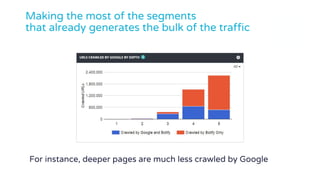

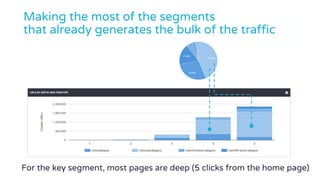

The webinar discusses why web server log file analysis matters for understanding Google’s crawling behavior and optimizing organic traffic. It covers use cases, real-life examples of migration processes, and how to identify issues and growth opportunities using tools like Botify. Attendees learn to use data from server logs to improve their SEO strategies and respond effectively to changes.
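As a rough illustration of the kind of log analysis described above, the sketch below counts requests whose user agent claims to be Googlebot, grouped by URL path and by HTTP status code. It is not taken from the webinar and does not use Botify. It assumes the server writes the common "combined" access log format, and the function name `googlebot_hits` and the sample log lines are invented for the example. The user-agent string can be spoofed, so a real analysis would also verify the crawler, for instance with a reverse DNS lookup.

```python
import re
from collections import Counter

# Assumed layout (combined log format):
# host ident user [time] "method path protocol" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per path and per status for self-declared Googlebot traffic."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        # Skip lines that do not parse or that come from other clients.
        if match is None or "Googlebot" not in match.group("agent"):
            continue
        paths[match.group("path")] += 1
        statuses[int(match.group("status"))] += 1
    return paths, statuses


# Invented sample lines, for illustration only.
SAMPLE = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /products/ HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2023:13:56:01 +0000] "GET /old-page HTTP/1.1" 404 312 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:13:56:09 +0000] "GET /products/ HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

if __name__ == "__main__":
    paths, statuses = googlebot_hits(SAMPLE)
    print("Crawled paths:", dict(paths))
    print("Status codes:", dict(statuses))
```

Grouping by status code is one simple way to surface issues such as crawled URLs that return 404, for example after a migration; grouping by path shows where crawl activity is concentrated.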