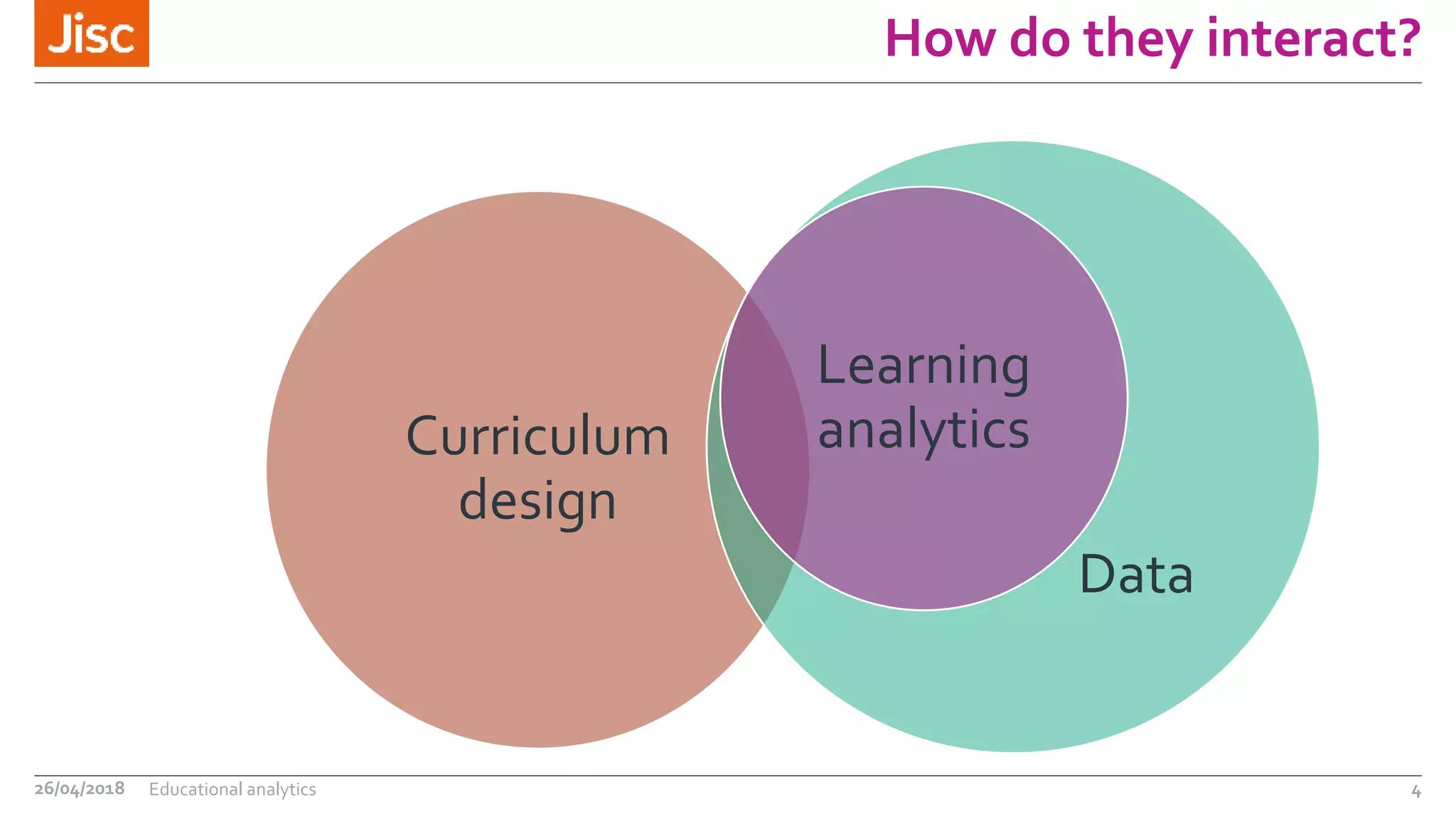

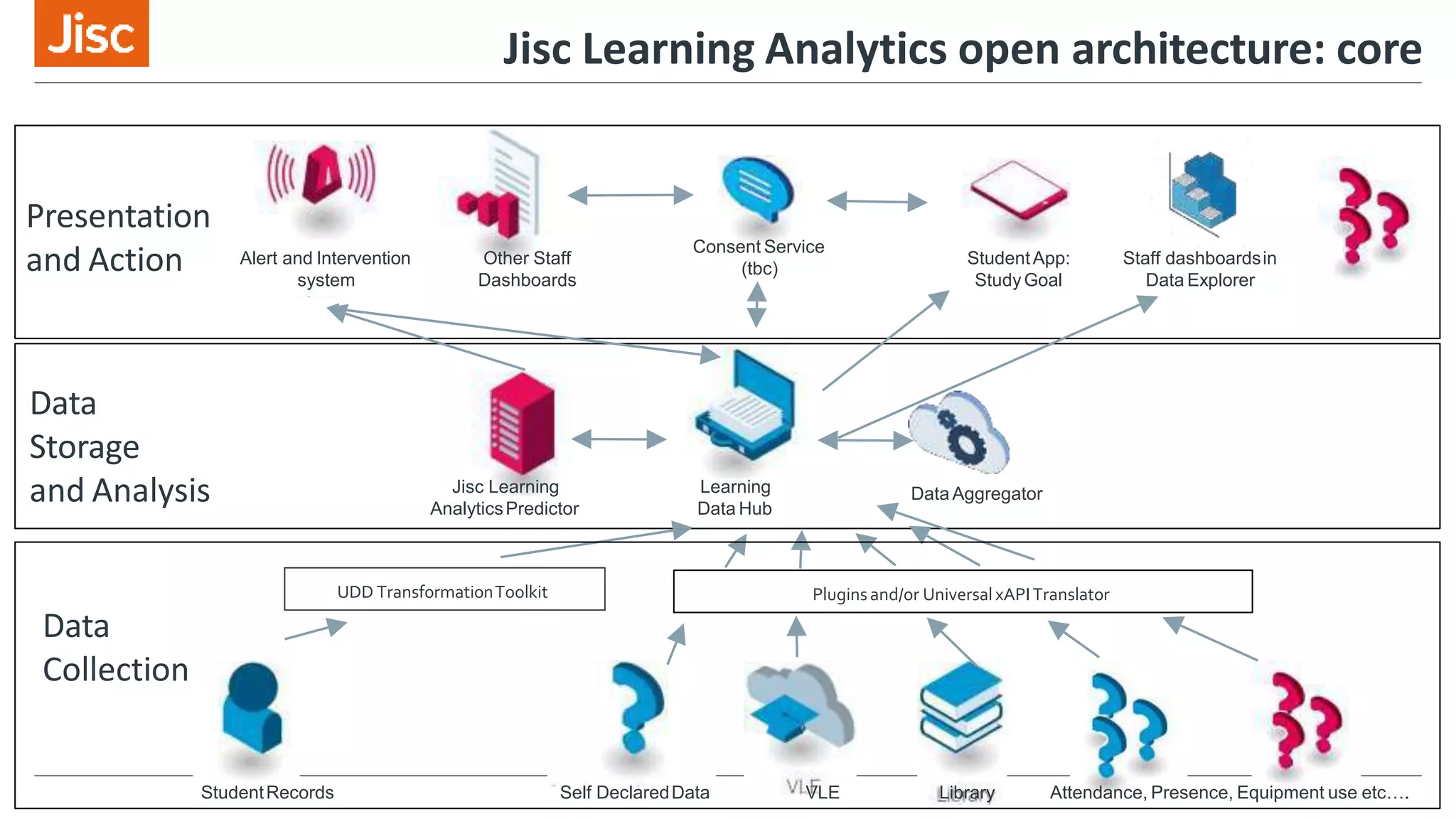

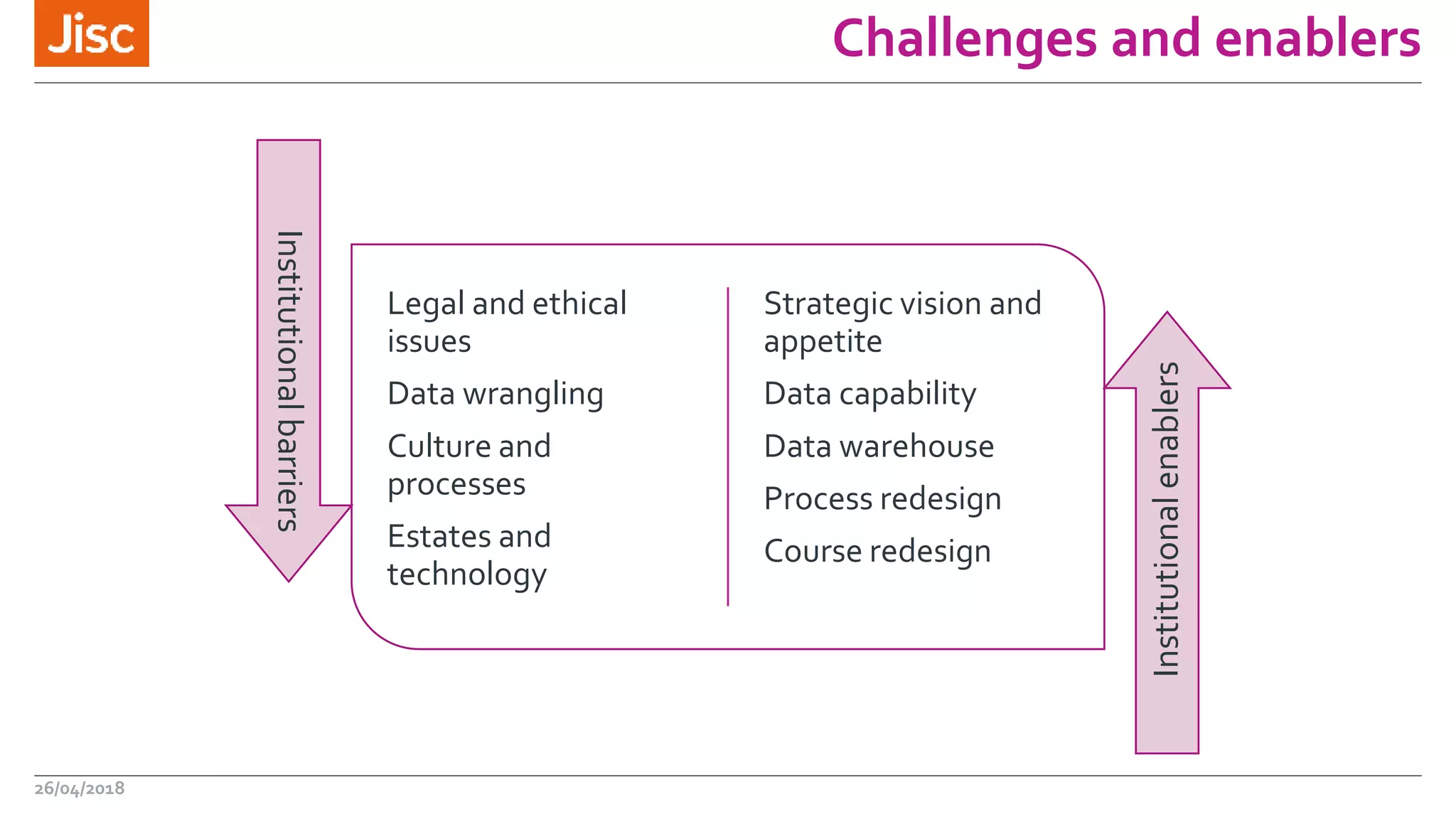

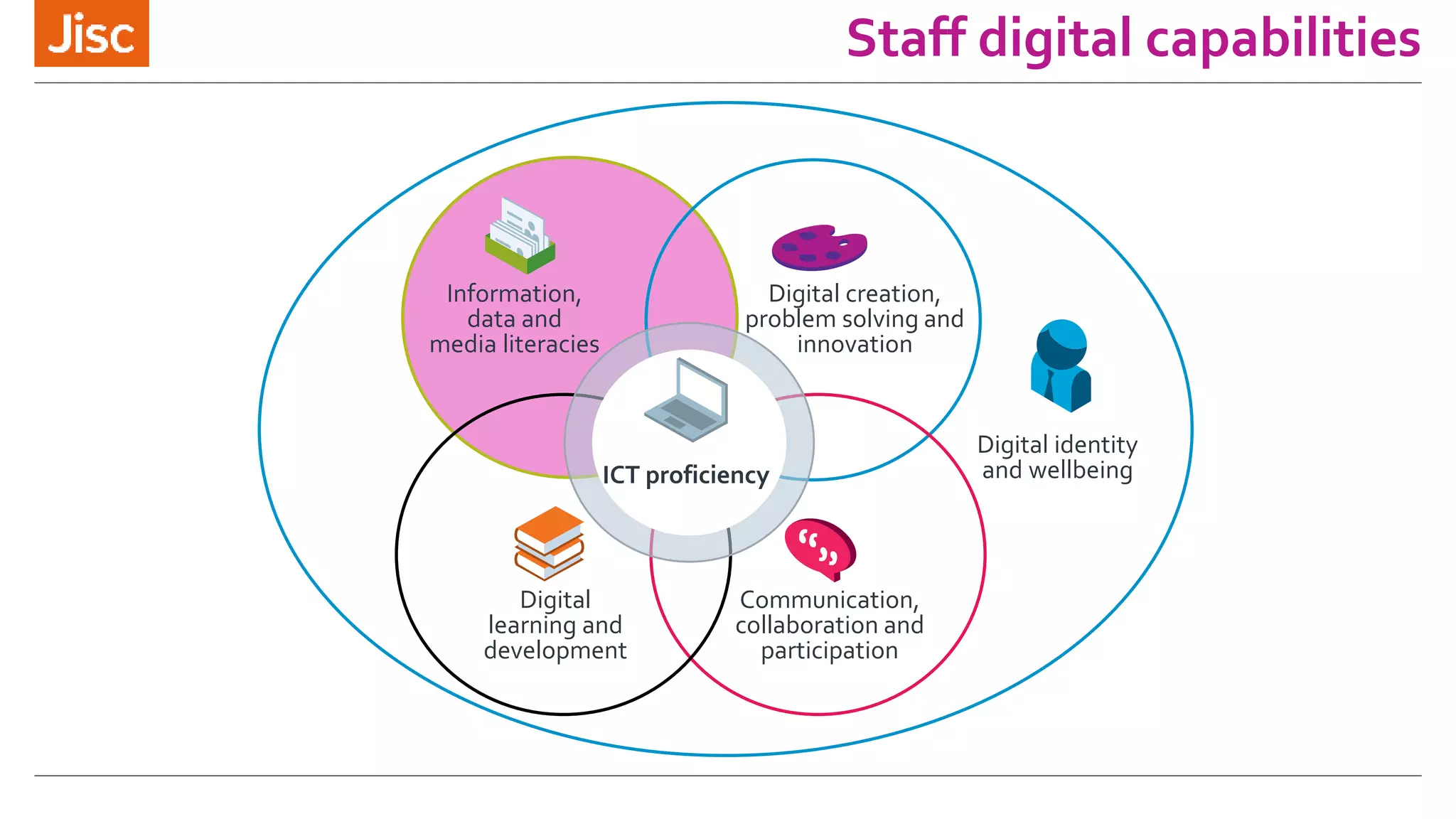

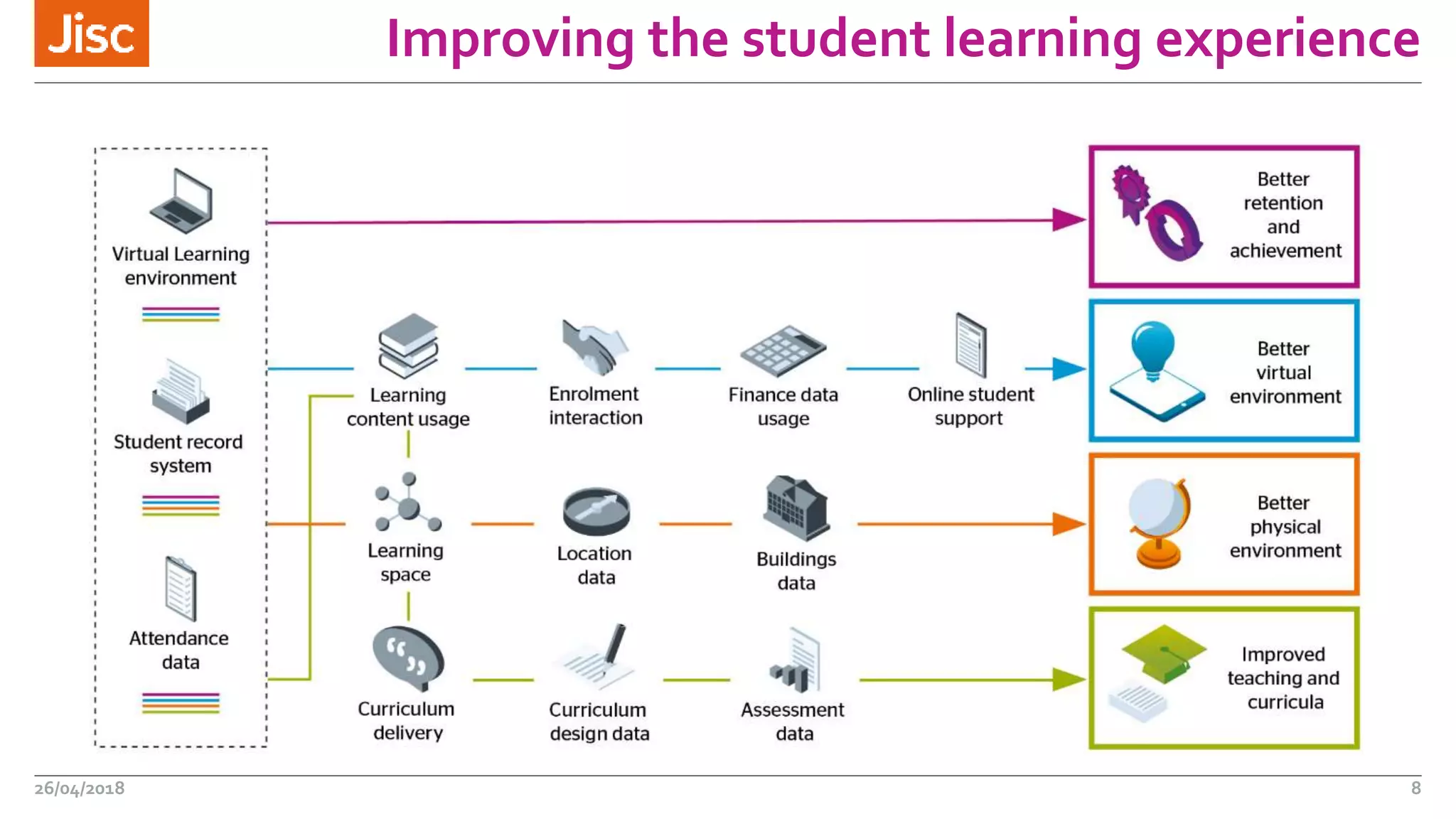

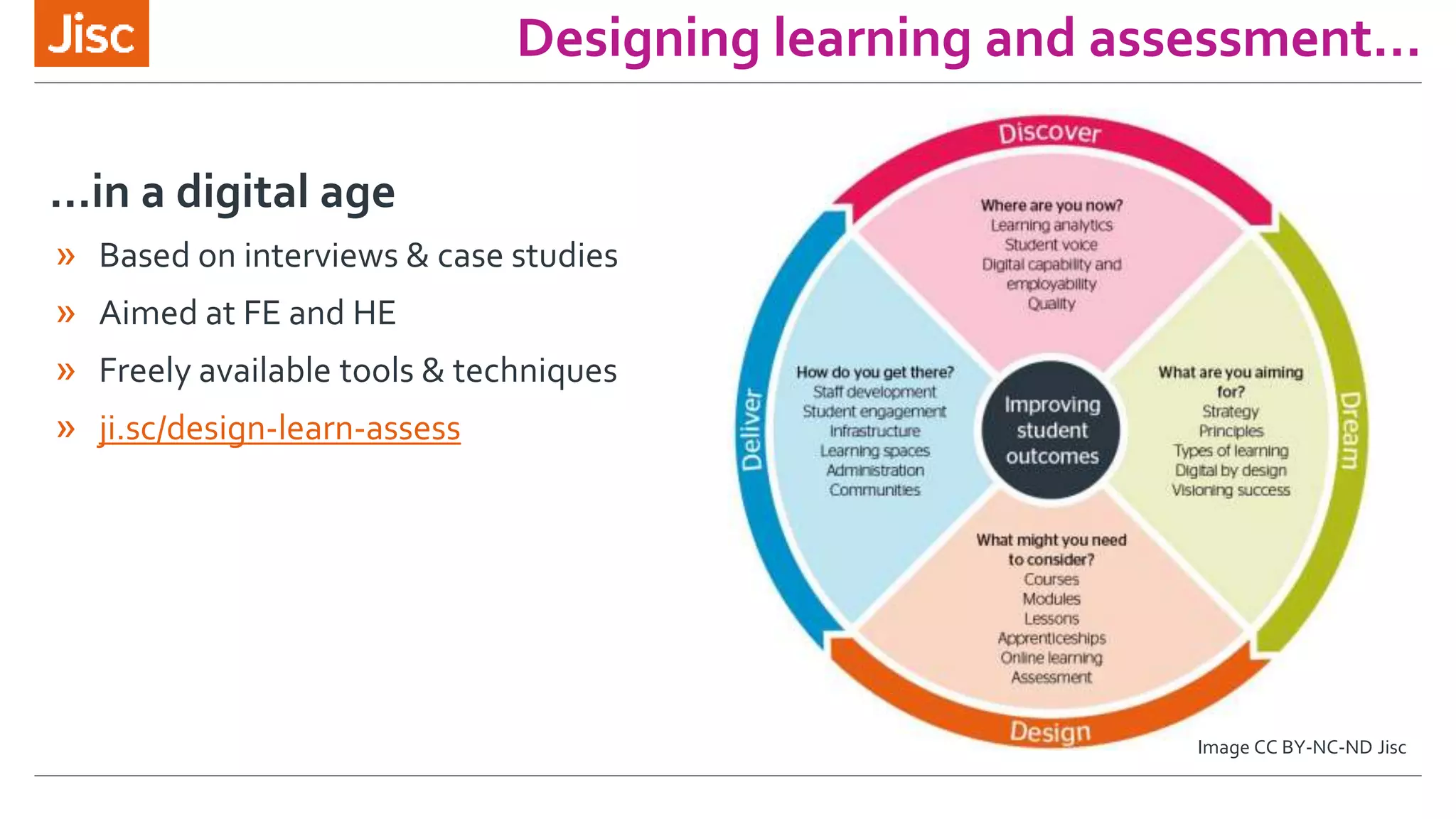

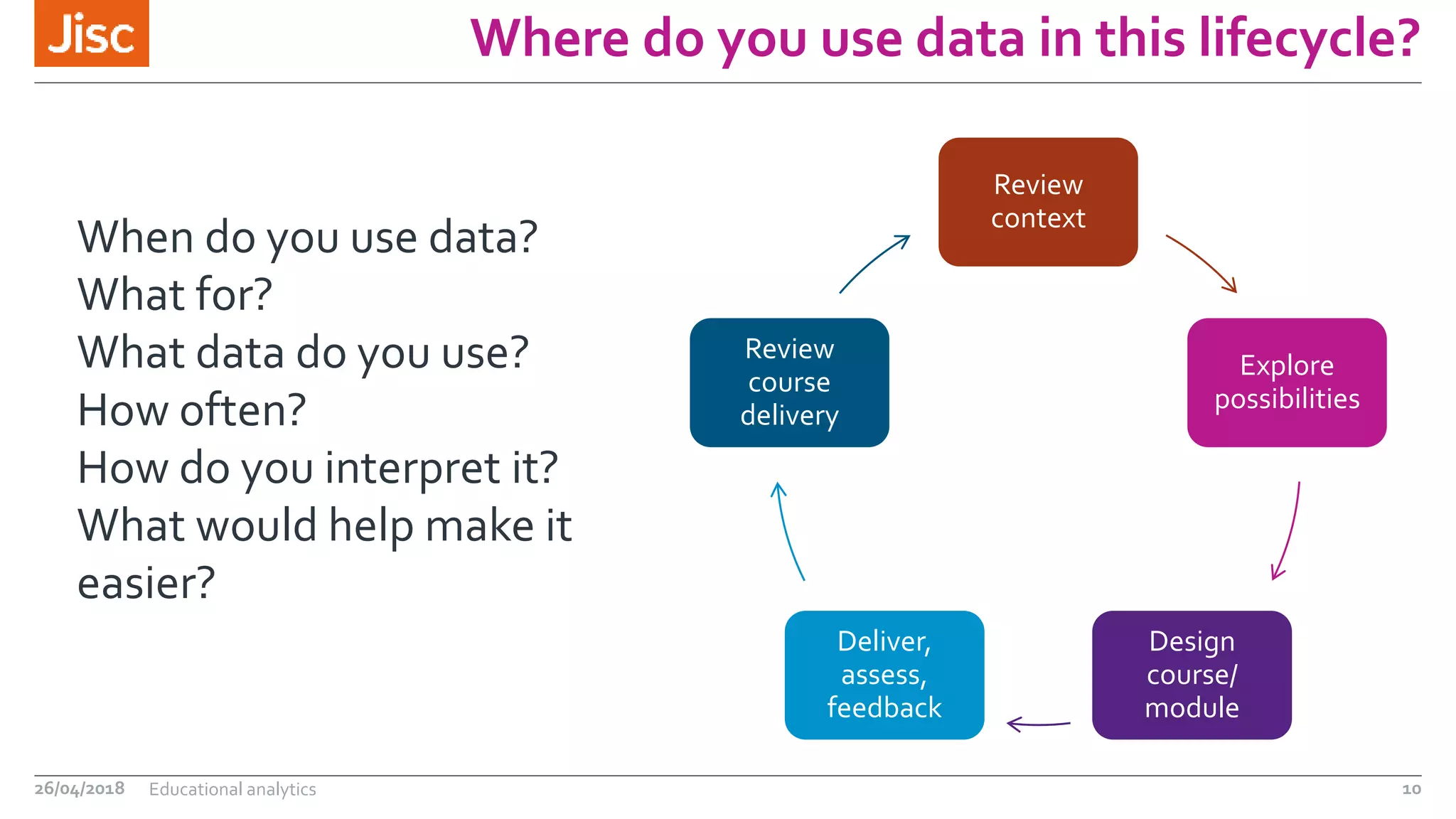

This document discusses using data and learning analytics to inform the design and delivery of blended learning. It describes learning design, curriculum design, and learning analytics, and how they interact. Potential uses of data include identifying which learning materials and activities students engage with most, understanding what students are learning, and tailoring learning design to the characteristics of incoming student cohorts. It also addresses challenges around legal and ethical issues, data wrangling, and institutional culture, and encourages discussion of current and potential uses of curriculum analytics within institutions.