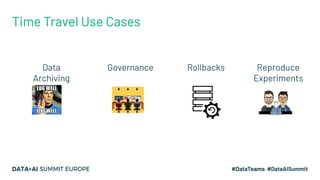

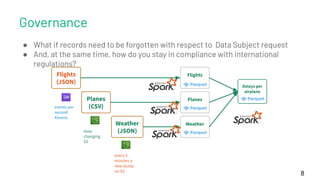

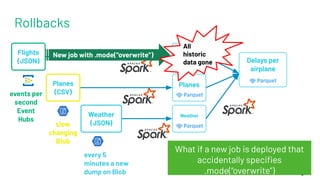

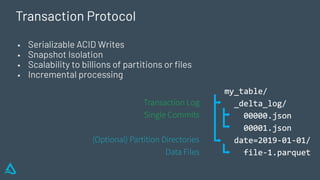

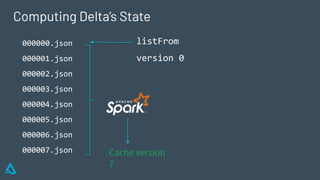

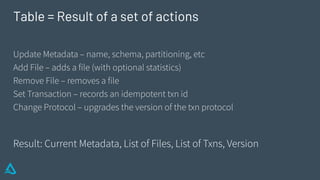

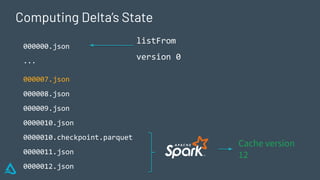

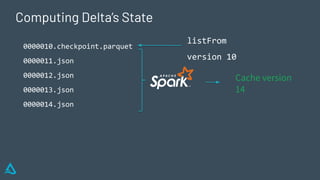

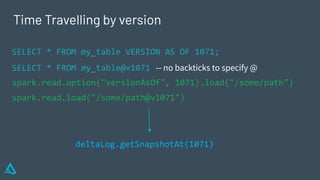

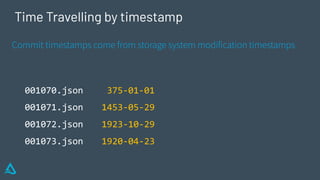

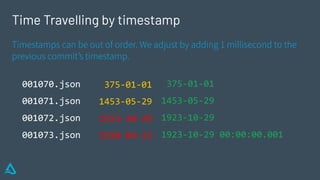

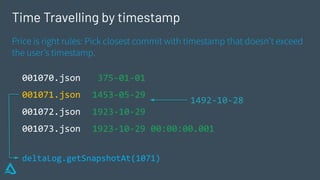

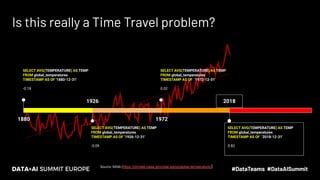

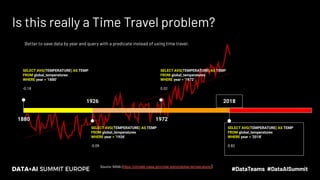

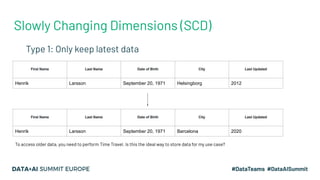

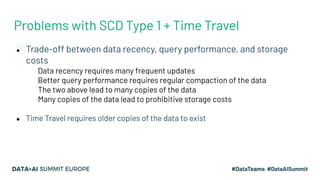

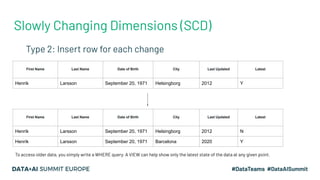

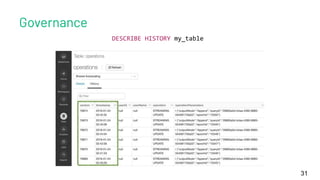

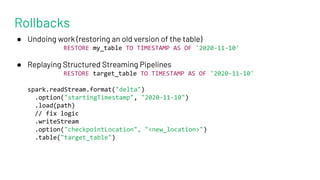

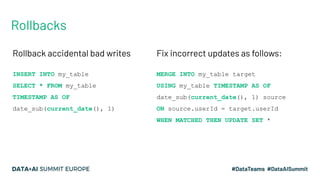

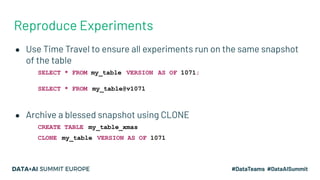

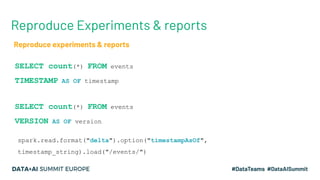

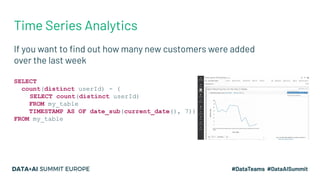

This document covers time travel in data management and its main use cases: data archiving, rollbacks, governance, and reproducing machine learning experiments. It describes how Delta Lake supports time travel, with practical examples such as querying historical data and managing changes to a dataset over time. It also addresses the challenges of data storage and recency, and the trade-offs involved in keeping historical data accessible through time travel.
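
To make the idea concrete, here is a minimal sketch of time travel as a versioned table. It is a toy model in plain Python, not Delta Lake's API or implementation: the `VersionedTable` class, its `write`, `read`, and `vacuum` methods, and the sample rows are all hypothetical names and data chosen for illustration. It assumes every commit stores a full snapshot, which keeps the code short and makes the storage trade-off easy to see.

```python
from datetime import datetime, timezone


class VersionedTable:
    """Toy table that keeps one full snapshot per commit (hypothetical, not Delta Lake)."""

    def __init__(self):
        self._versions = {}  # version number -> (commit_time, rows)
        self._next = 0       # version number the next write will receive

    def write(self, rows, commit_time):
        """Commit a new snapshot and return its version number."""
        version = self._next
        self._versions[version] = (commit_time, list(rows))
        self._next += 1
        return version

    def read(self, version=None, as_of=None):
        """Read the latest snapshot, a specific version, or the state as of a timestamp."""
        if version is None and as_of is None:
            version = self._next - 1
        elif version is None:
            # Pick the newest retained version committed at or before the timestamp.
            candidates = [v for v, (t, _) in self._versions.items() if t <= as_of]
            if not candidates:
                raise LookupError("no retained version at or before that timestamp")
            version = max(candidates)
        if version not in self._versions:
            raise LookupError(f"version {version} is not available")
        return list(self._versions[version][1])

    def vacuum(self, keep_last):
        """Drop all but the newest `keep_last` versions to reclaim storage."""
        cutoff = self._next - keep_last
        for v in [v for v in self._versions if v < cutoff]:
            del self._versions[v]


# Illustrative data only: two commits one day apart.
table = VersionedTable()
v0 = table.write([{"id": 1, "score": 0.5}], datetime(2024, 1, 1, tzinfo=timezone.utc))
v1 = table.write([{"id": 1, "score": 0.9}], datetime(2024, 1, 2, tzinfo=timezone.utc))

current = table.read()              # latest snapshot
original = table.read(version=v0)   # snapshot as first committed
```

Reading by version or timestamp is what makes rollbacks and reproducible experiments possible, since an earlier state can be fetched exactly as it was committed. The `vacuum` method shows the other side of the trade-off: dropping old snapshots saves storage, but any later read of a removed version fails.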