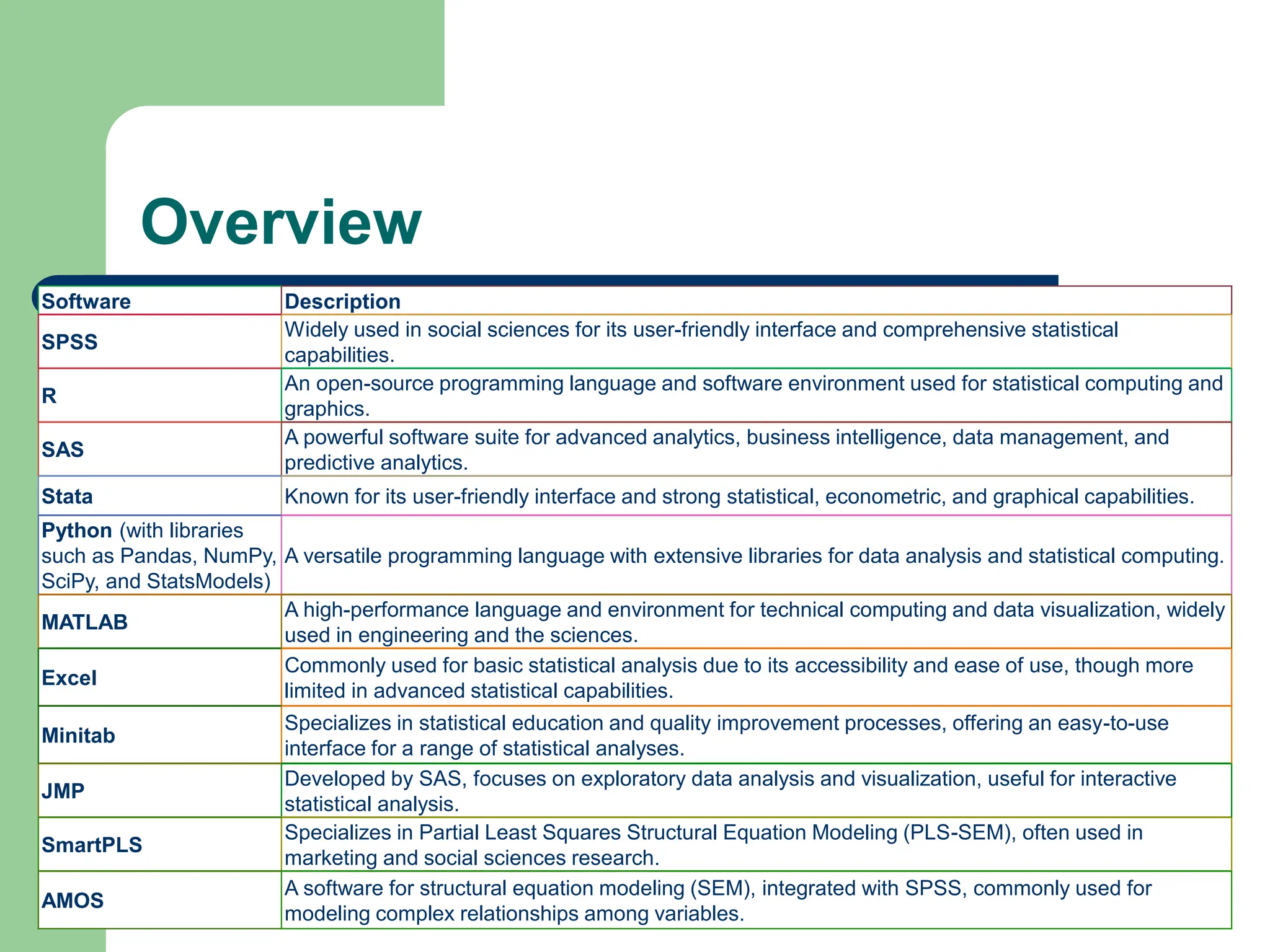

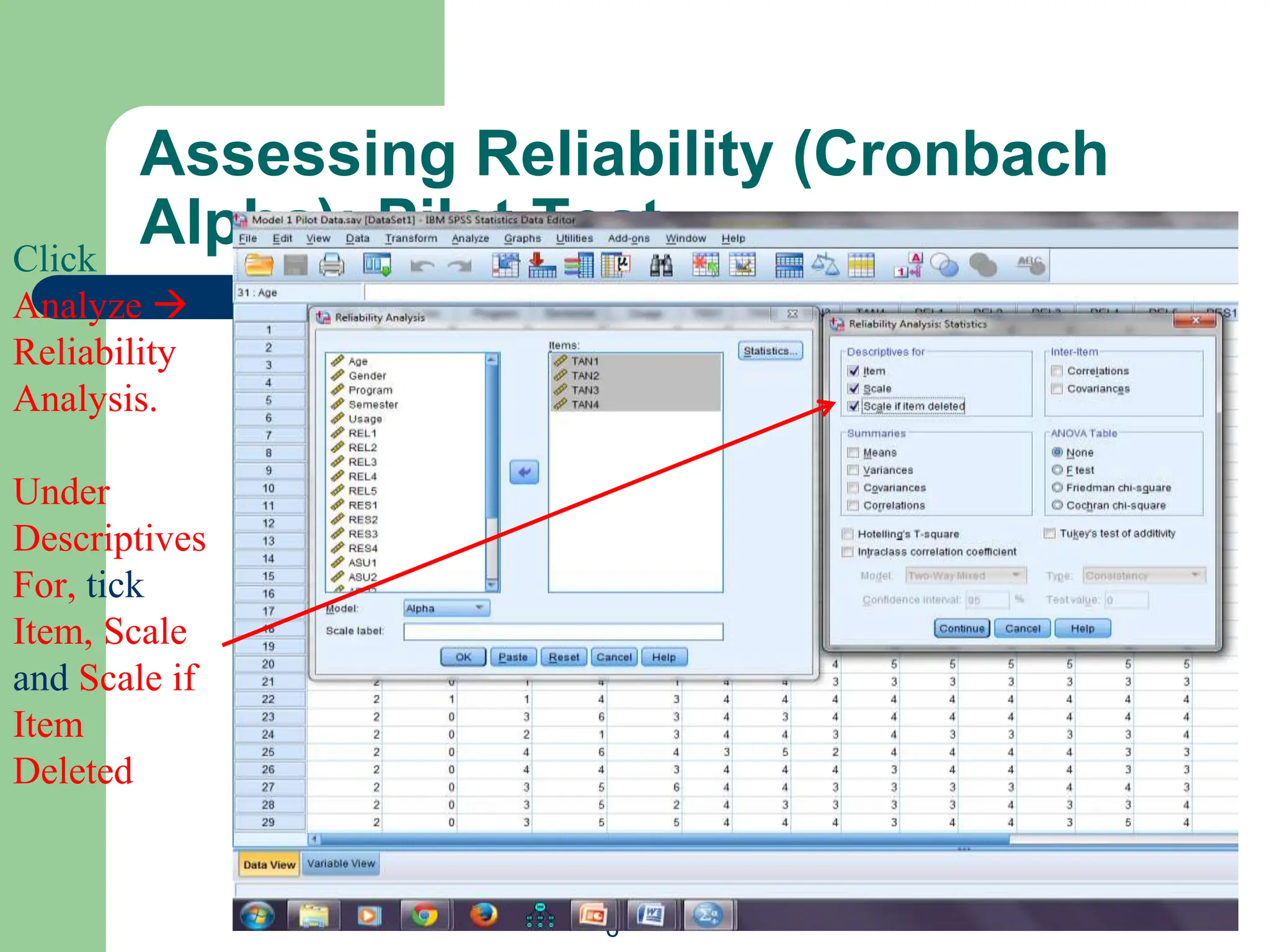

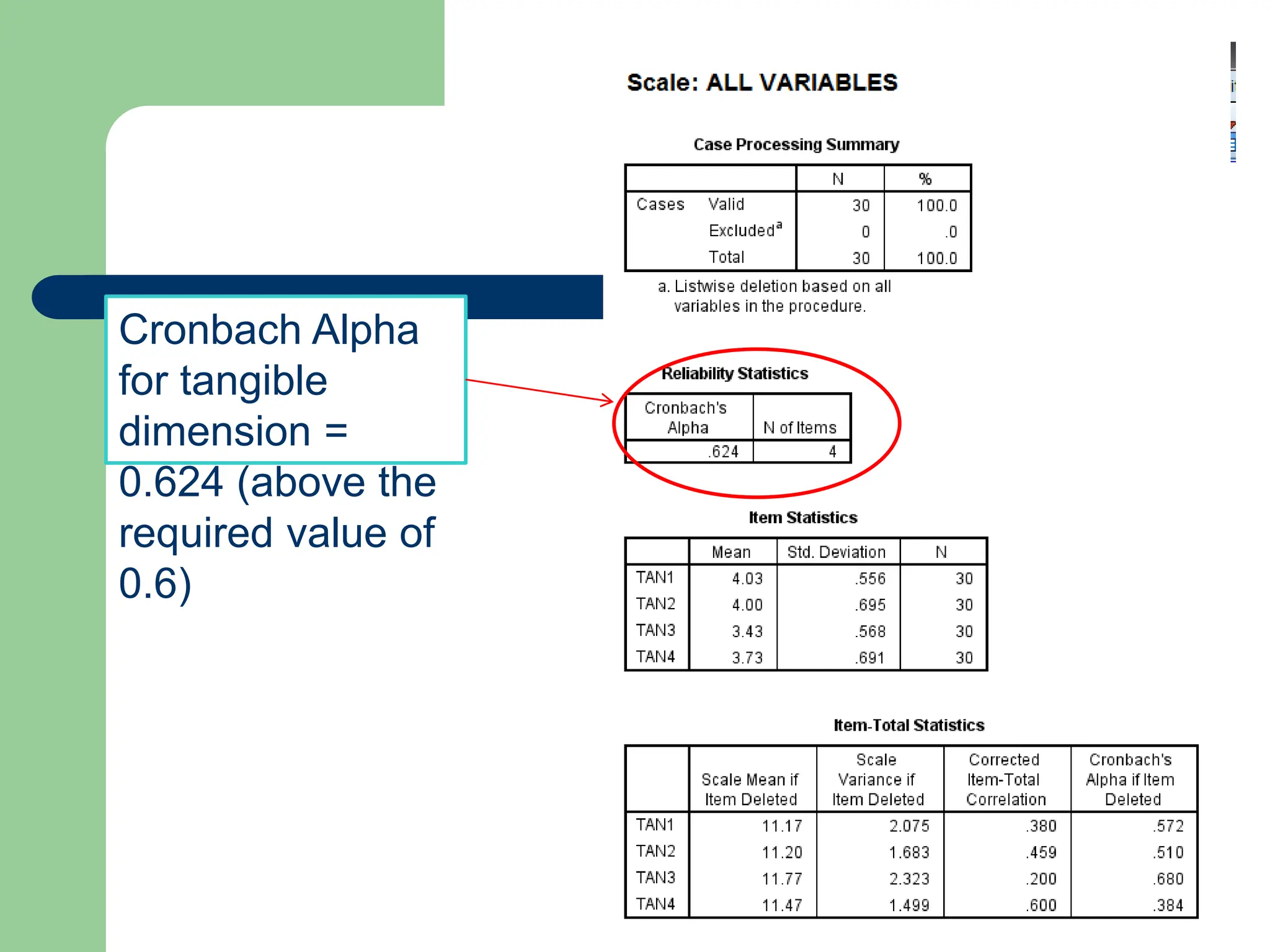

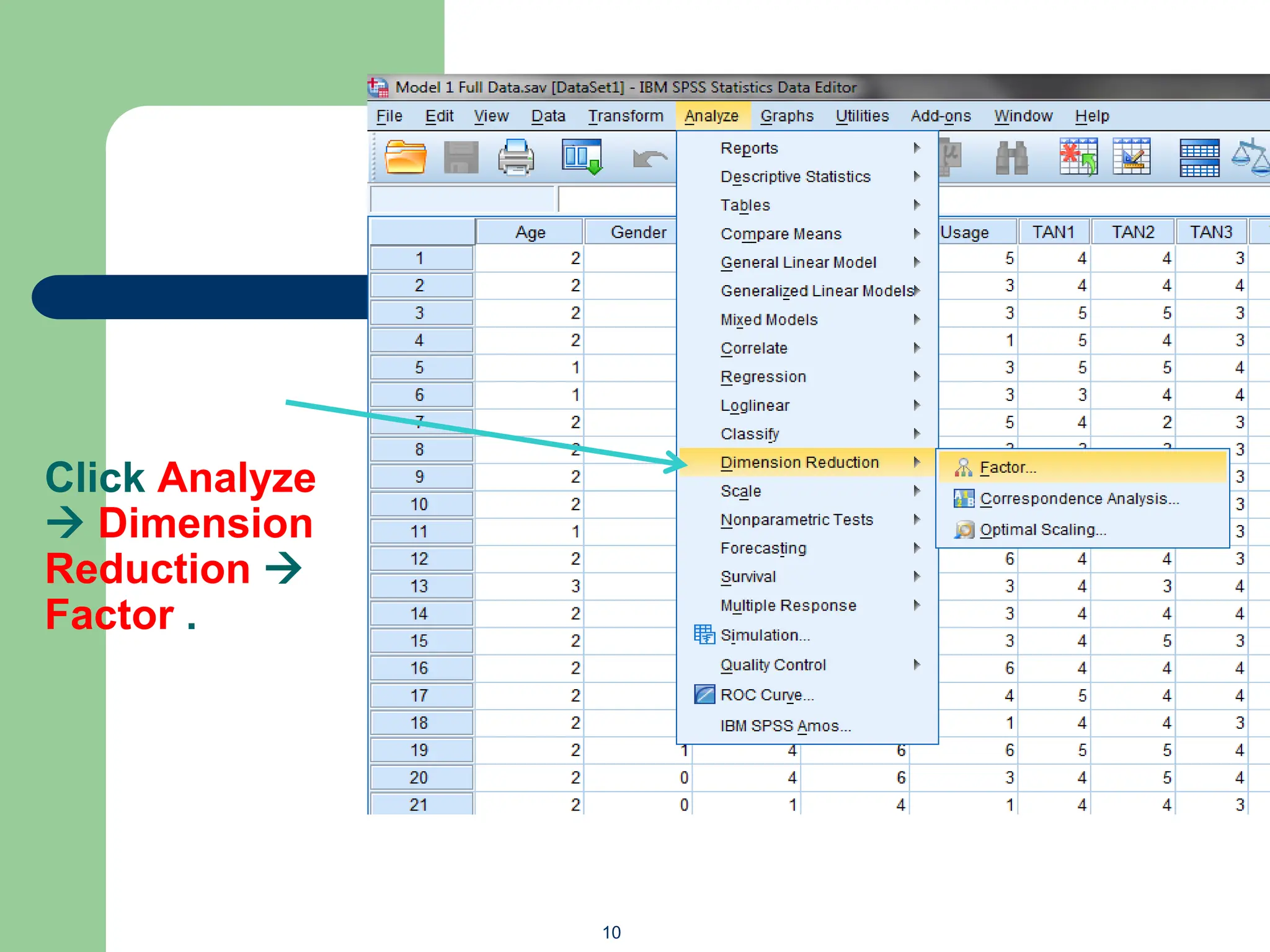

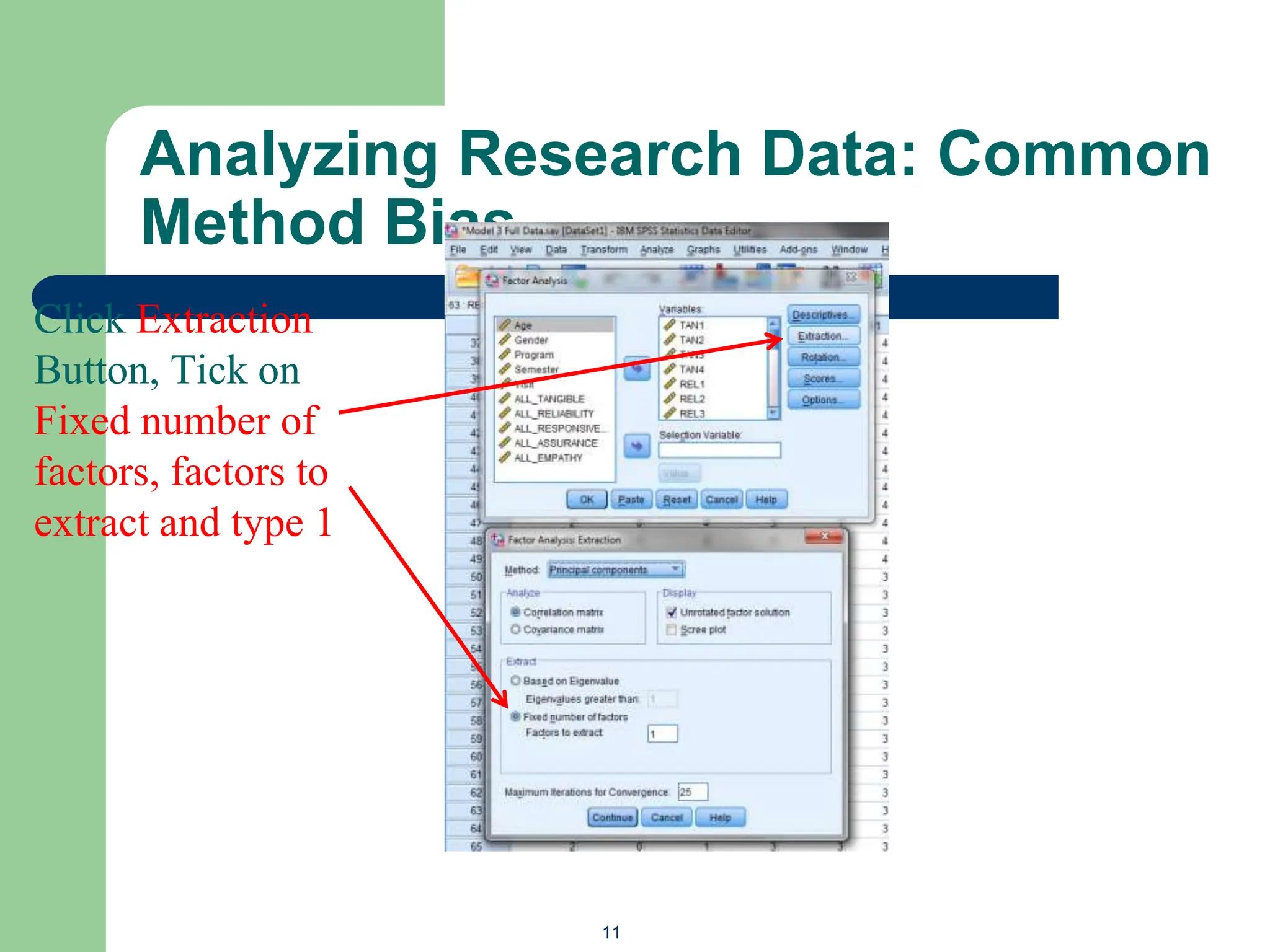

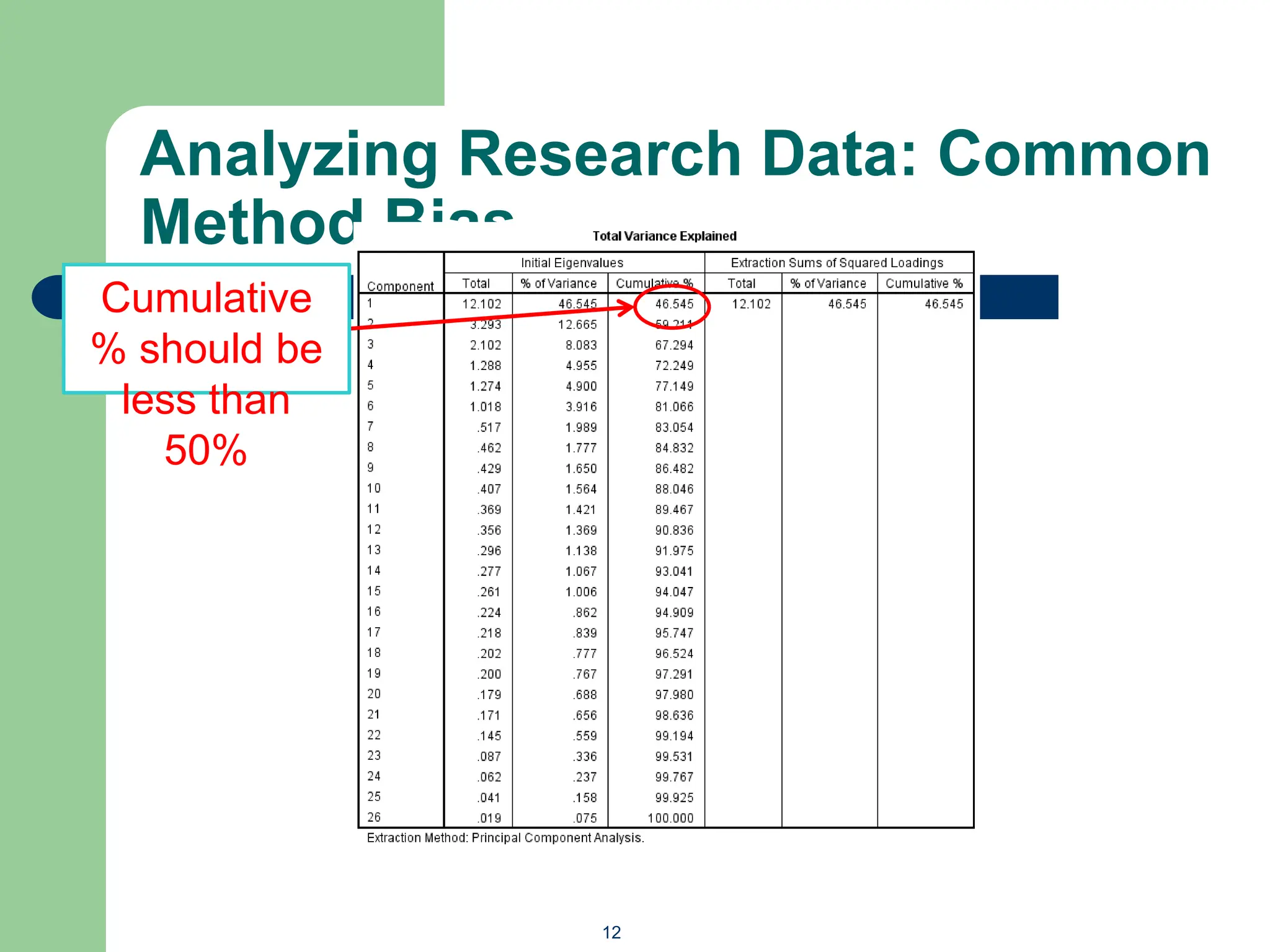

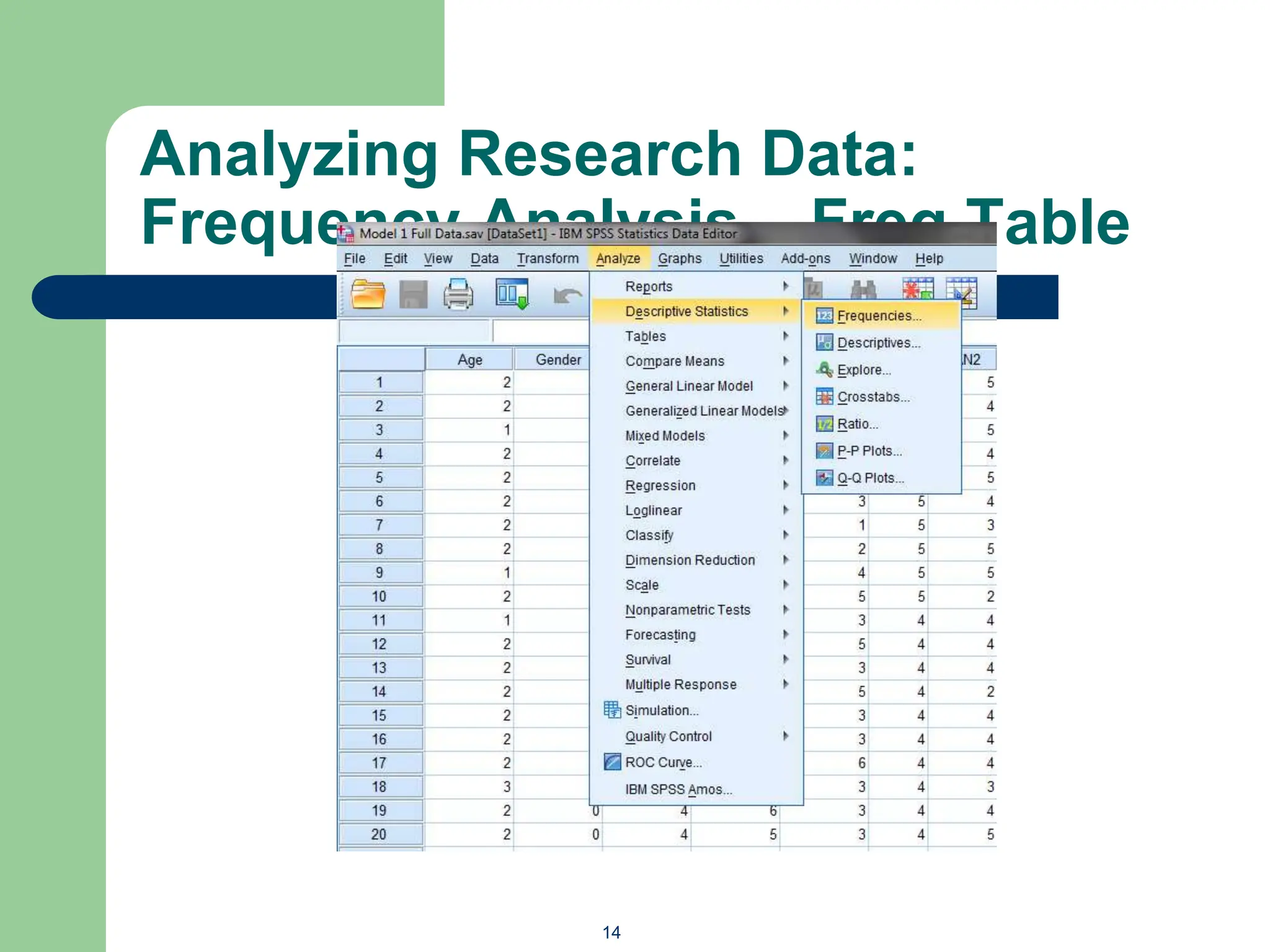

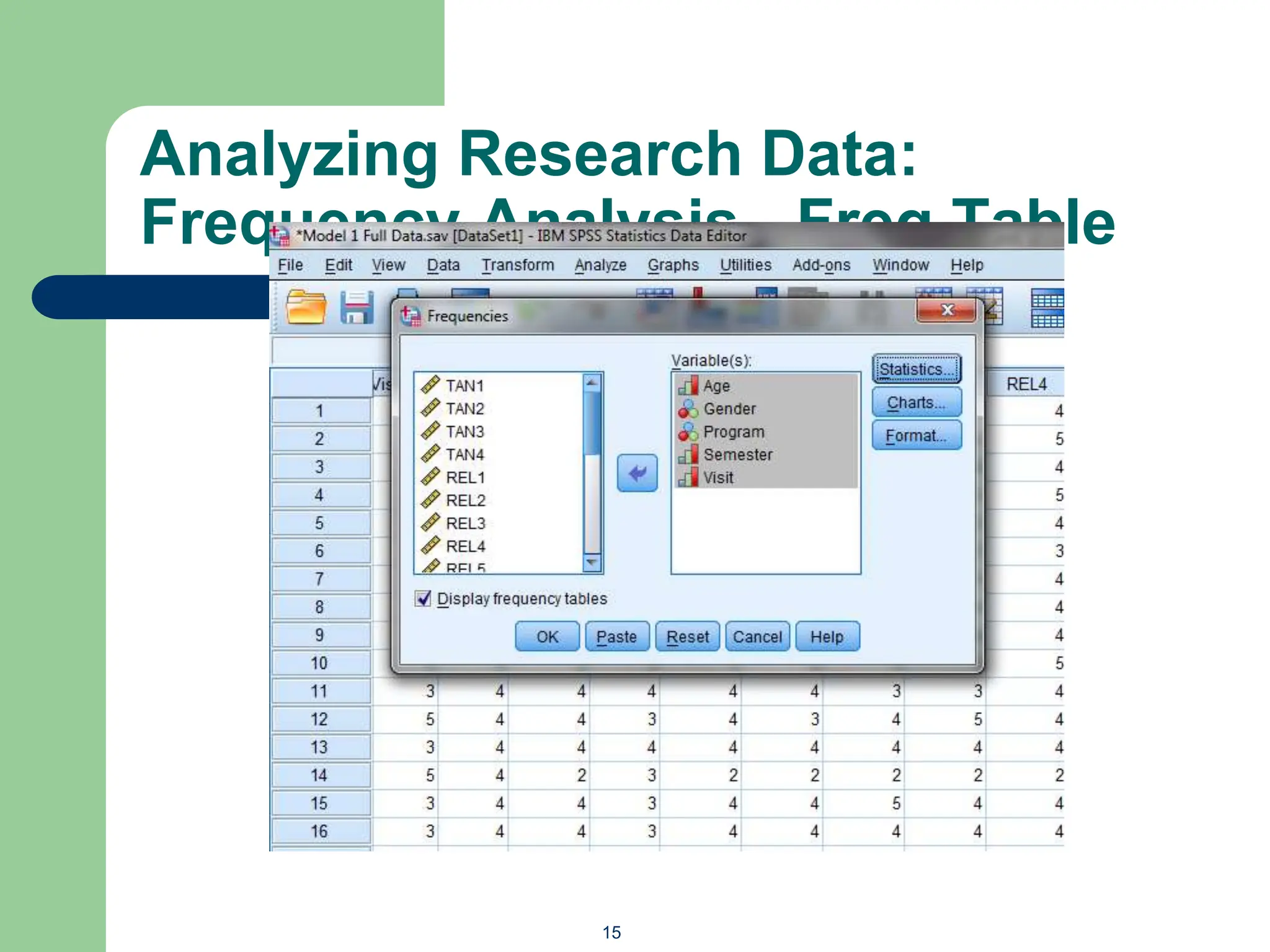

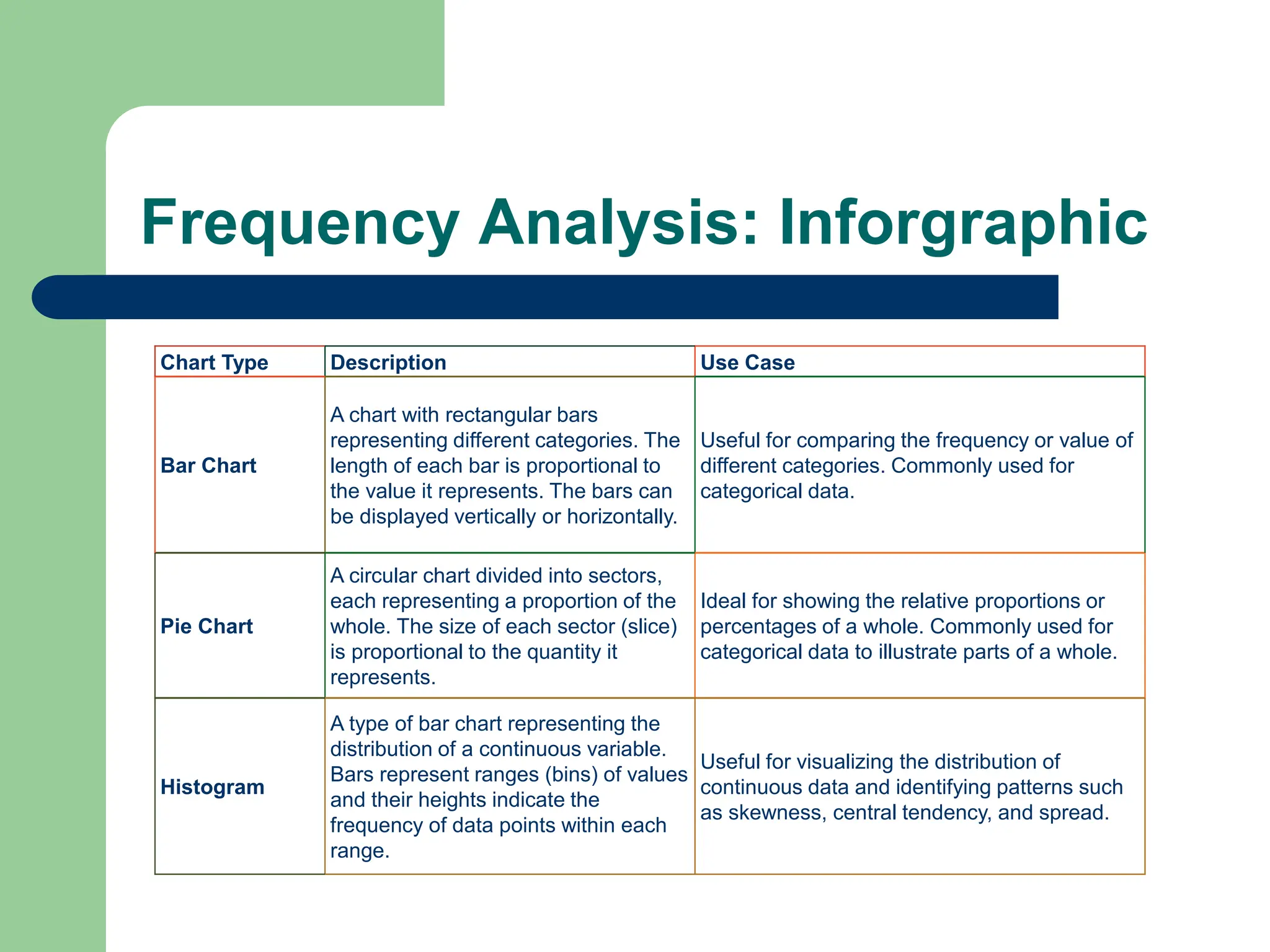

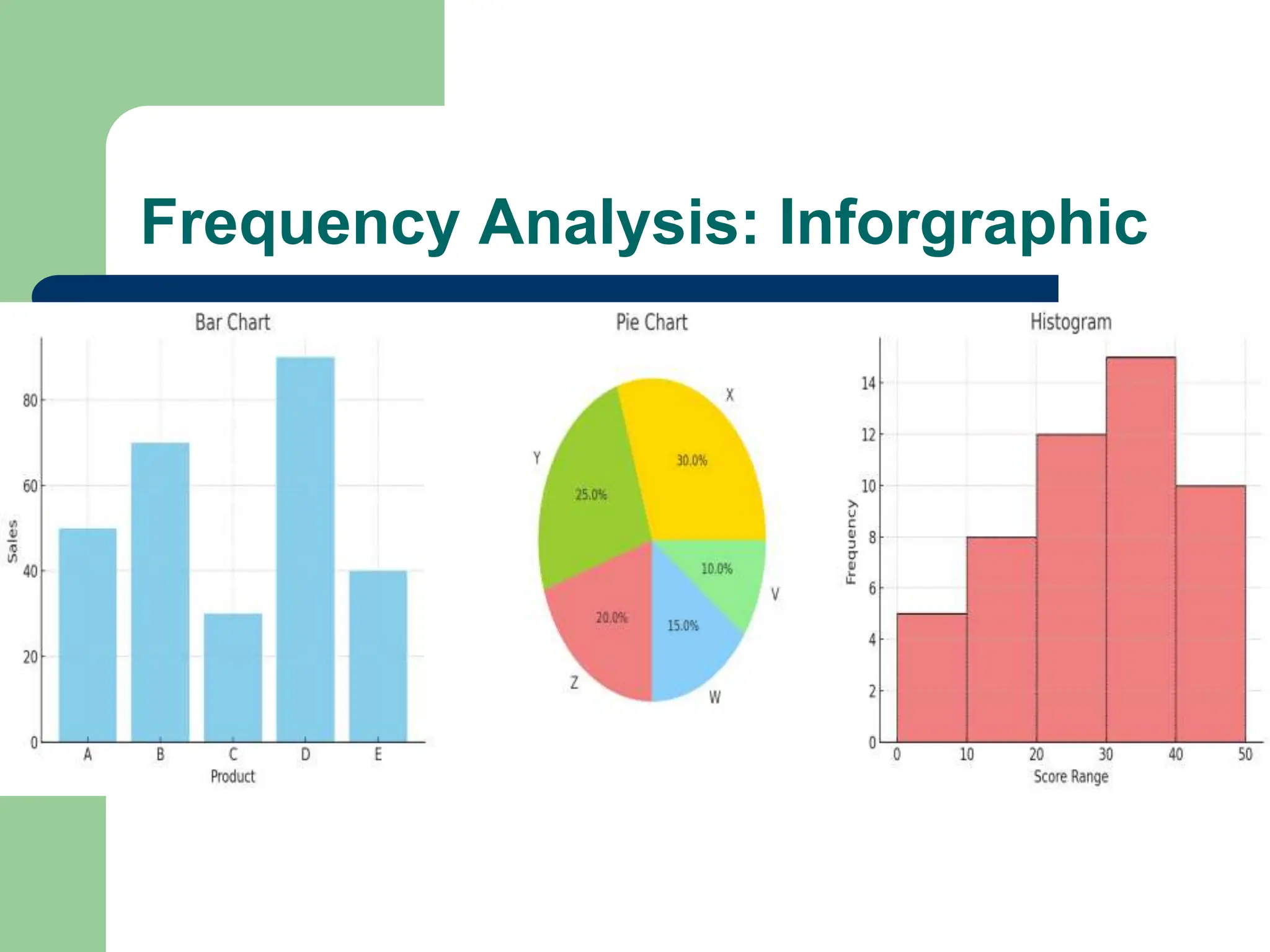

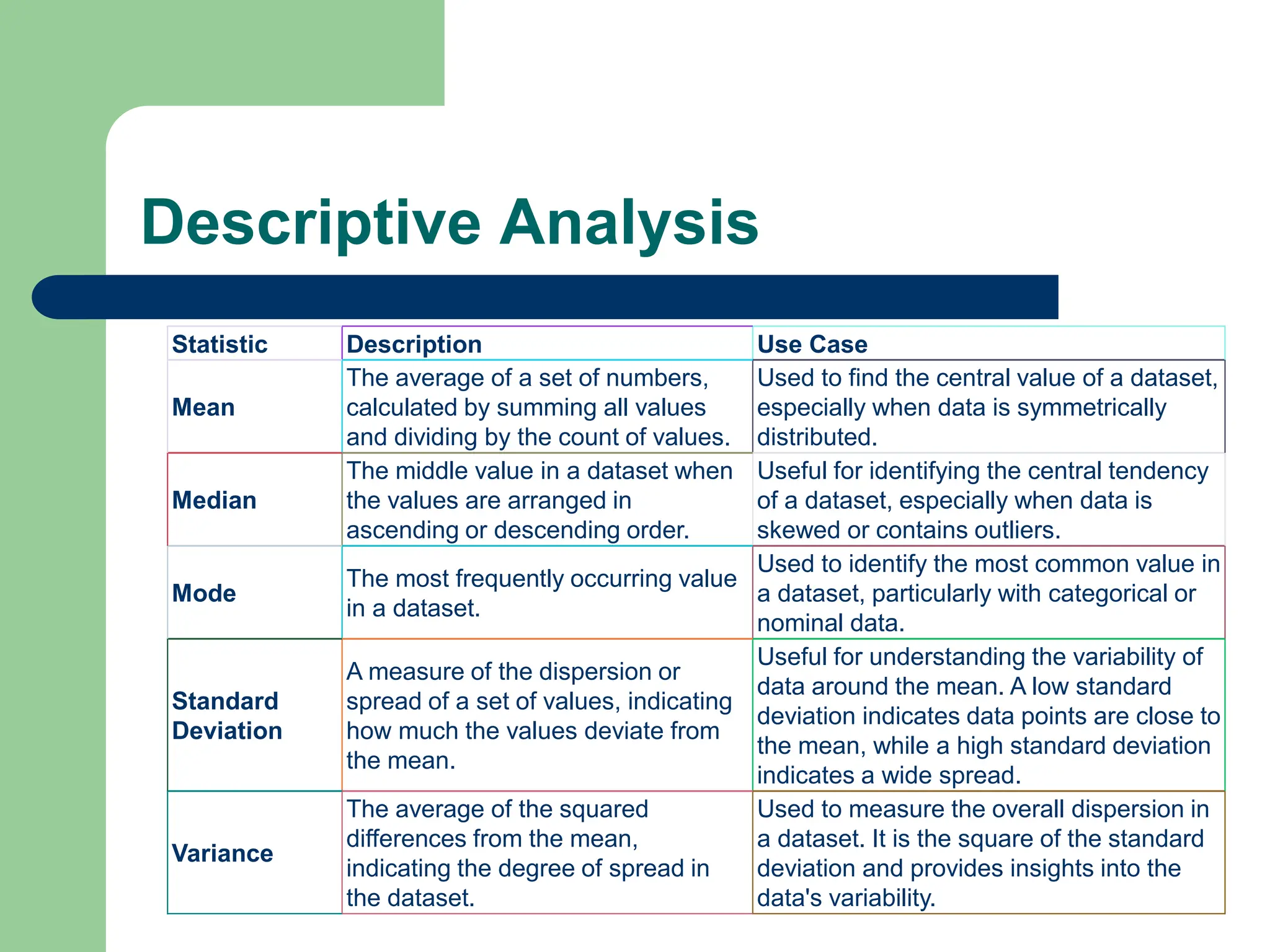

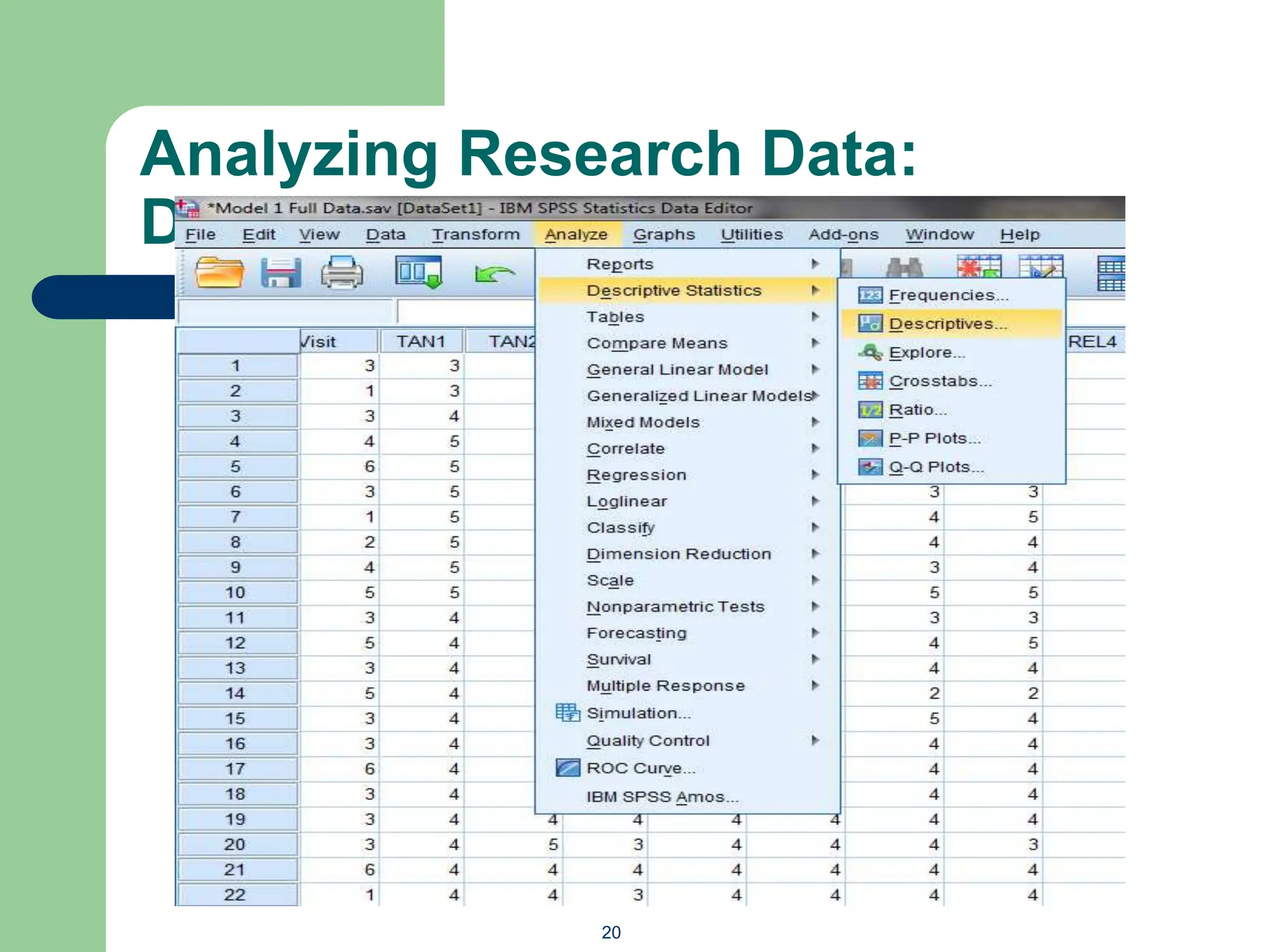

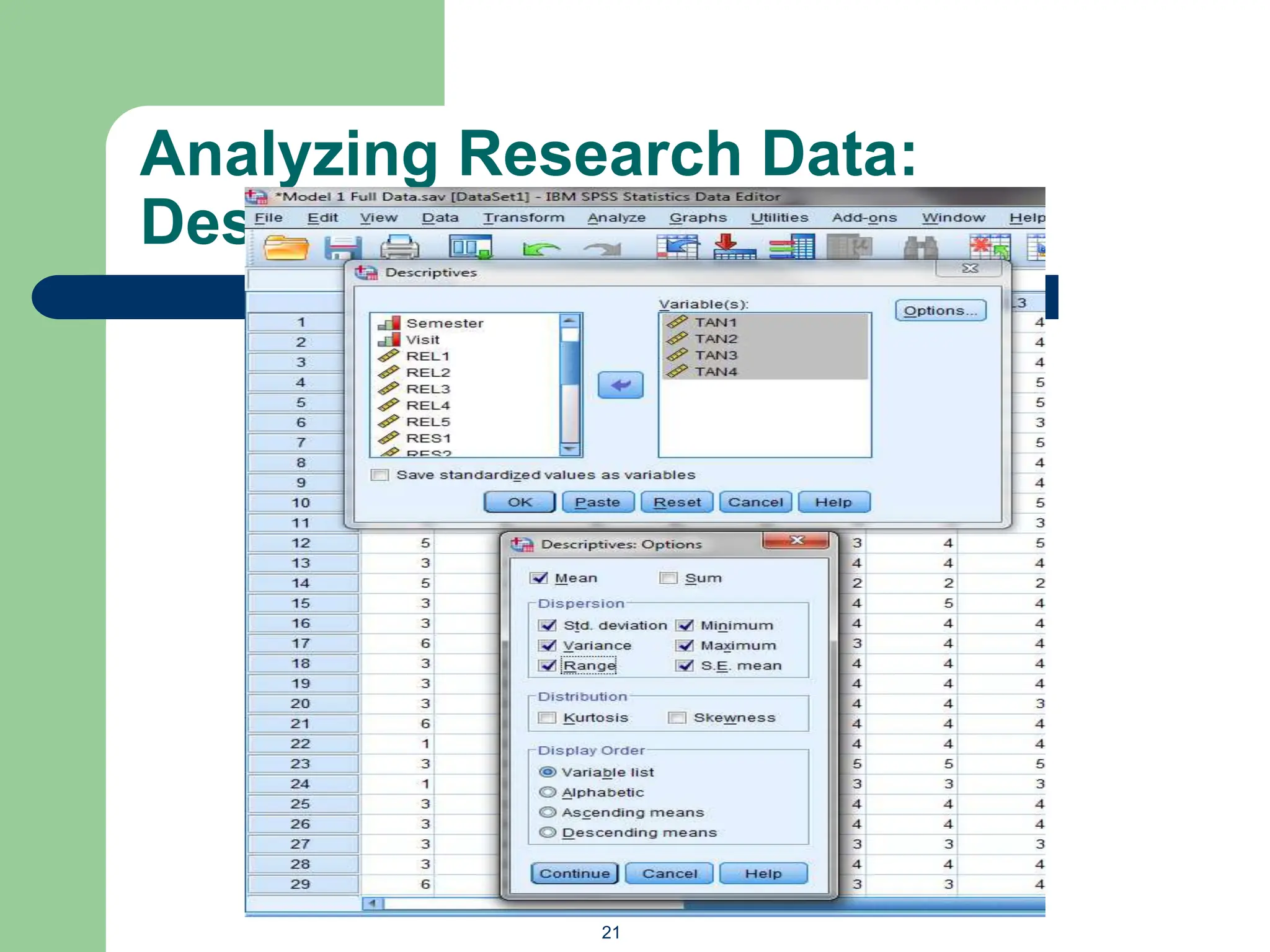

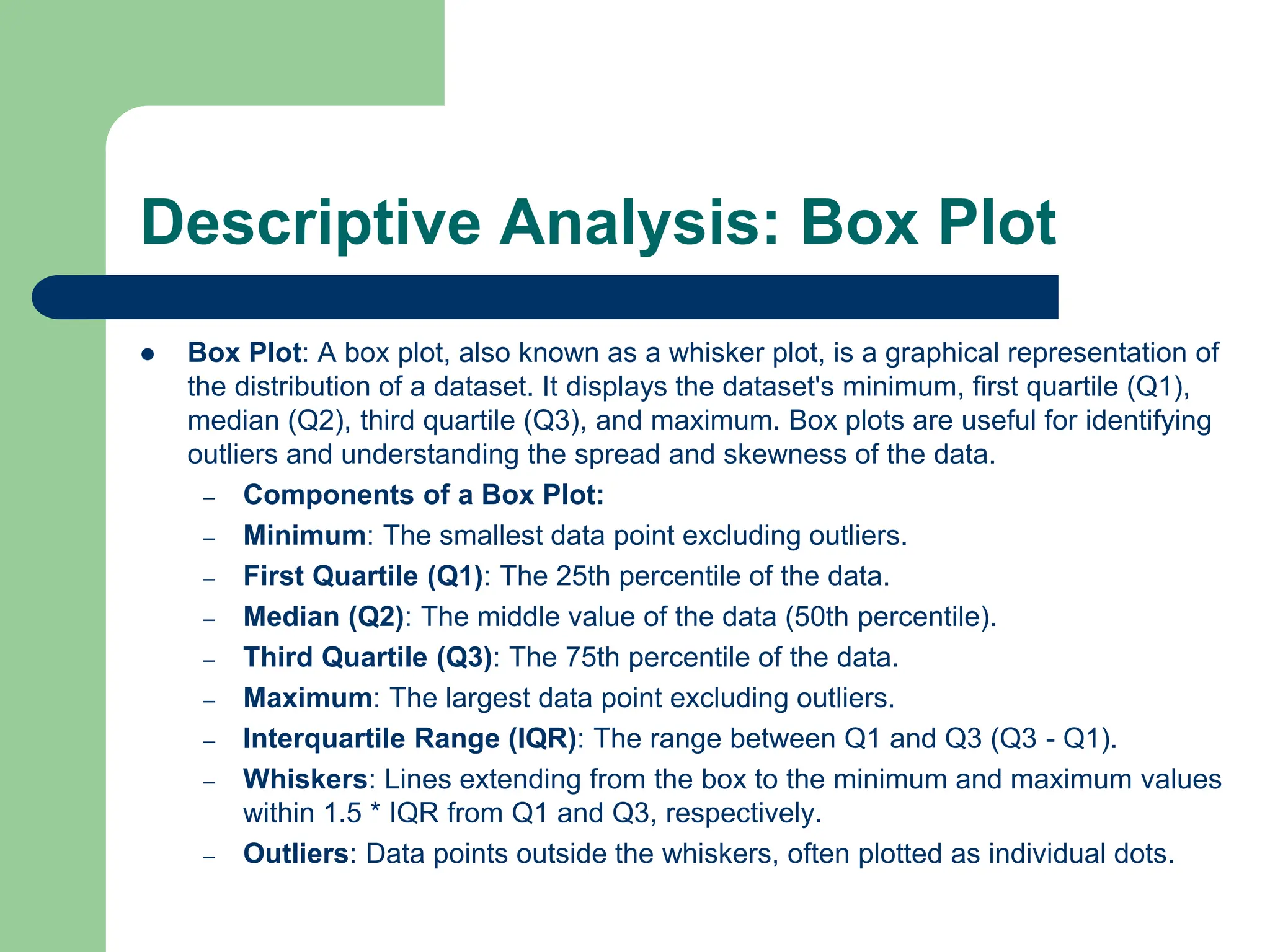

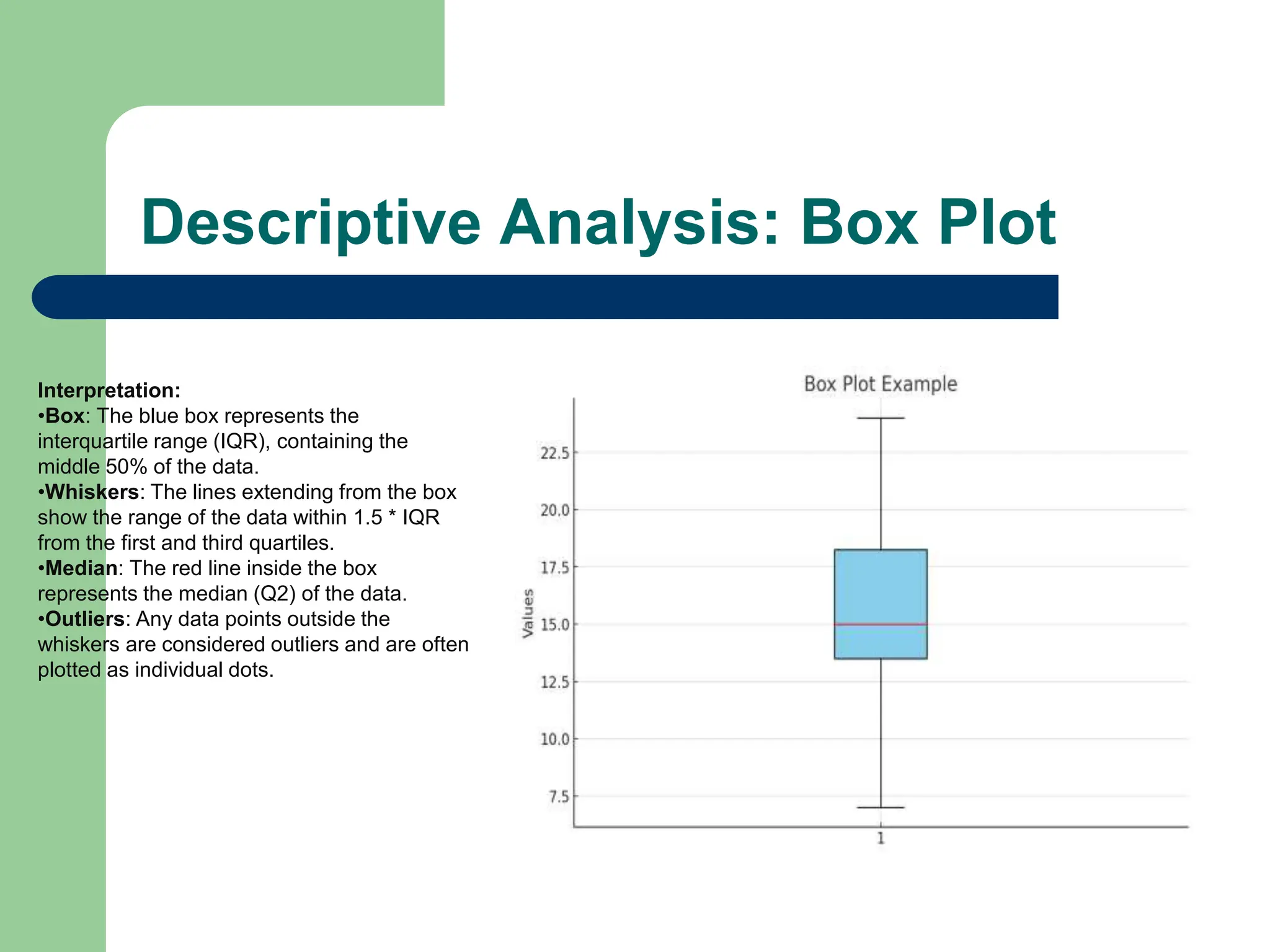

This document gives an overview of quantitative data analysis methods used in information science, focusing on reliability analysis and descriptive analysis. It covers statistical software such as SPSS, R, and Python; reliability assessment with Cronbach's alpha; detection of common method bias with Harman's single-factor test; and frequency analysis and descriptive statistics for summarizing and visualizing data.
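
As a rough illustration of two of the techniques named above, the following Python sketch computes Cronbach's alpha, a frequency table, and a few descriptive statistics using only the standard library. The survey data, the function names `cronbach_alpha` and `frequency_table`, and the choice of sample (n - 1) variance are assumptions made for this example and are not taken from the slides. Harman's single-factor test is not covered here, since it requires a factor analysis routine.

```python
from collections import Counter
from statistics import mean, median, stdev, variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])  # number of scale items
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


def frequency_table(values):
    """Map each distinct value to (count, proportion), sorted by value."""
    counts = Counter(values)
    n = len(values)
    return {value: (count, count / n) for value, count in sorted(counts.items())}


# Hypothetical data: 5 respondents answering 3 Likert-type items (1 to 5).
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

alpha = cronbach_alpha(responses)

# Frequency analysis and descriptive statistics for the first item.
item1 = [row[0] for row in responses]
freq = frequency_table(item1)
summary = {"mean": mean(item1), "median": median(item1), "sd": stdev(item1)}

print(f"Cronbach's alpha: {alpha:.3f}")
for value, (count, share) in freq.items():
    print(f"score {value}: n={count}, {share:.0%}")
print(summary)
```

Alpha values of about 0.7 or higher are commonly read as acceptable internal consistency, though the appropriate threshold depends on the instrument and the field. With a real dataset, the same calculations would typically be run in SPSS, R, or a Python data library rather than by hand.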