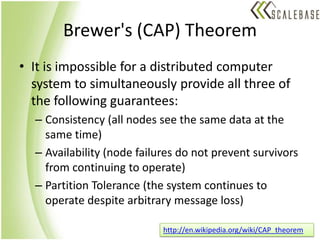

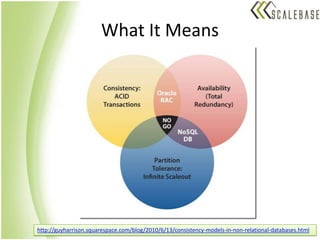

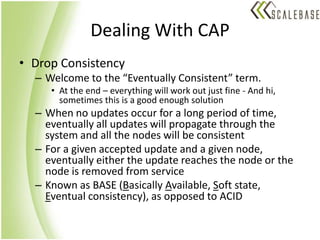

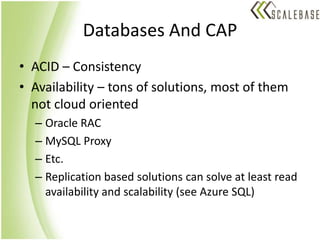

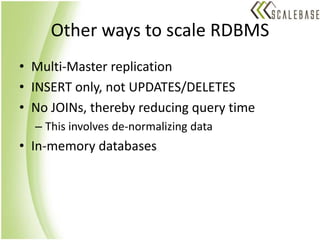

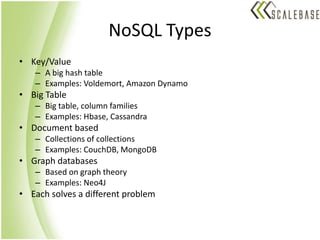

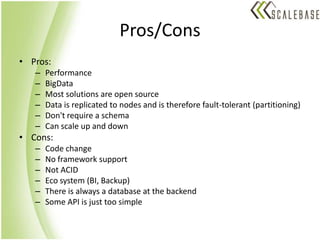

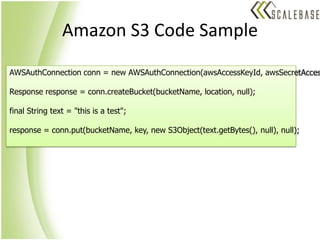

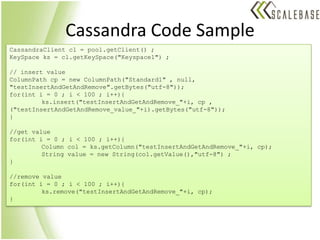

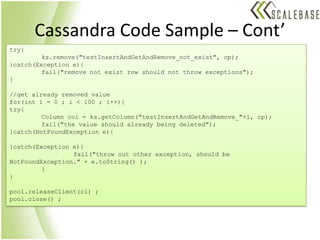

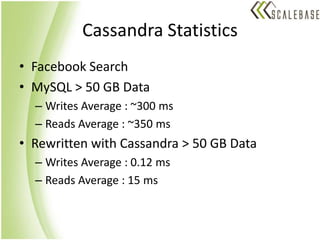

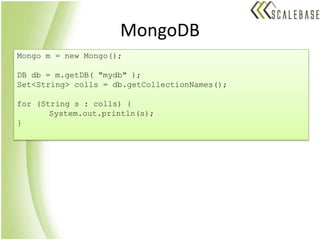

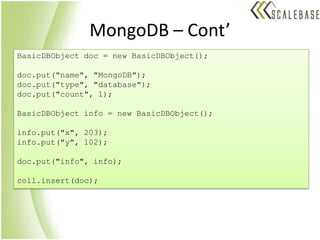

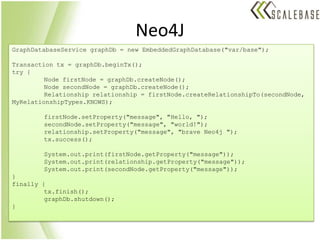

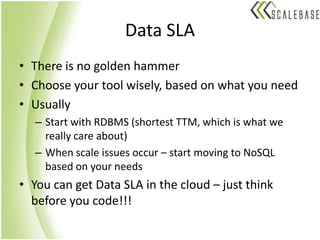

The document discusses data service level agreements (SLAs) in public cloud environments. It explains that meeting availability, consistency, and scalability targets at the same time is difficult because of Brewer's CAP theorem: a distributed data store cannot simultaneously guarantee consistency, availability, and partition tolerance, so when a network partition occurs it must give up either consistency or availability. It reviews how relational and NoSQL databases handle this tradeoff, for example by relaxing consistency or sacrificing availability depending on application needs. Code examples demonstrate typical operations for the Cassandra, MongoDB, and Neo4j NoSQL databases. The conclusion recommends choosing a solution based on requirements and migrating to NoSQL where needed to address scaling issues.
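To make the CAP tradeoff concrete, here is a minimal Python sketch that is not taken from the document. It models a hypothetical two-replica key-value store (`ToyReplicatedStore`, `Unavailable`, and the `mode` flag are illustrative names only). During a simulated network partition, the store either rejects writes to stay consistent (CP) or accepts them on one replica and lets the other serve stale reads (AP). It is a toy model under these stated assumptions, not a description of how Cassandra, MongoDB, or Neo4j actually replicate data.

```python
from dataclasses import dataclass, field


class Unavailable(Exception):
    """Raised when the hypothetical CP-mode store refuses a request during a partition."""


@dataclass
class ToyReplicatedStore:
    """Hypothetical two-replica key-value store, used only to illustrate CAP.

    mode="CP": refuse writes while replicas cannot communicate (stay consistent).
    mode="AP": accept writes on one replica anyway (stay available, may go stale).
    """
    mode: str
    partitioned: bool = False
    replicas: list = field(default_factory=lambda: [{}, {}])

    def __post_init__(self):
        if self.mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")

    def write(self, key, value, via=0):
        if self.partitioned:
            if self.mode == "CP":
                # Replication is impossible, so this store gives up availability.
                raise Unavailable("partition: write rejected")
            # AP: apply the write locally only; the other replica now diverges.
            self.replicas[via][key] = value
            return
        # No partition: replicate synchronously to every replica.
        for replica in self.replicas:
            replica[key] = value

    def read(self, key, via=0):
        # In this toy, reads are always served from the chosen local replica;
        # a stricter CP system might also refuse reads from an isolated replica.
        return self.replicas[via].get(key)


if __name__ == "__main__":
    ap = ToyReplicatedStore(mode="AP")
    ap.write("x", 1)
    ap.partitioned = True
    ap.write("x", 2, via=0)
    print("AP reads:", ap.read("x", via=0), ap.read("x", via=1))  # 2 1 (stale on replica 1)

    cp = ToyReplicatedStore(mode="CP")
    cp.write("x", 1)
    cp.partitioned = True
    try:
        cp.write("x", 2)
    except Unavailable as err:
        print("CP:", err)
    print("CP reads:", cp.read("x", via=0), cp.read("x", via=1))  # 1 1
```

The AP store keeps answering but returns different values from its two replicas, while the CP store stays consistent by refusing the write. This mirrors the choice between dropping consistency or availability that the document describes.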