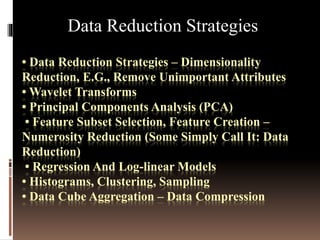

This document surveys techniques for data reduction: dimensionality reduction, sampling, binning and cardinality reduction, and parametric methods such as regression and log-linear models. Dimensionality reduction techniques, such as principal component analysis (PCA) and feature selection, reduce the number of attributes (variables). Sampling reduces the number of data instances. Binning and cardinality reduction map attribute values onto a smaller set of intervals or categories. Parametric methods fit a model to the data and store only the model parameters instead of the data itself.
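
To make the four families concrete, here is a minimal sketch in plain Python, one small function per family. The function names, the variance threshold, the bin count, and the choice of a straight-line fit are all illustrative assumptions, not methods taken from the document. Variance-based feature selection stands in for dimensionality reduction because PCA needs a linear algebra library.

```python
import random
import statistics


def drop_low_variance(rows, threshold):
    """Dimensionality reduction by feature selection (hypothetical helper):
    keep only the columns whose population variance exceeds `threshold`."""
    columns = list(zip(*rows))
    keep = [i for i, col in enumerate(columns)
            if statistics.pvariance(col) > threshold]
    reduced = [[row[i] for i in keep] for row in rows]
    return reduced, keep


def simple_random_sample(rows, n, seed=0):
    """Sampling: draw n instances without replacement."""
    rng = random.Random(seed)  # fixed seed only to make the sketch repeatable
    return rng.sample(rows, n)


def equal_width_bins(values, k):
    """Binning: replace each value with the index of one of k equal-width bins."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k or 1.0  # avoid a zero width when all values are equal
    # The maximum value would land in bin k, so clamp it into the last bin.
    return [min(int((v - lo) / width), k - 1) for v in values]


def fit_line(xs, ys):
    """Parametric reduction: fit y = slope * x + intercept by least squares
    and keep only the two parameters instead of the data points."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx
```

Each function shrinks a different axis of the data: `drop_low_variance` removes columns, `simple_random_sample` removes rows, `equal_width_bins` reduces the number of distinct values in a column, and `fit_line` replaces the points with two numbers.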