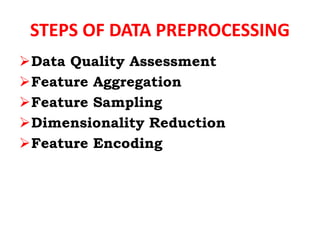

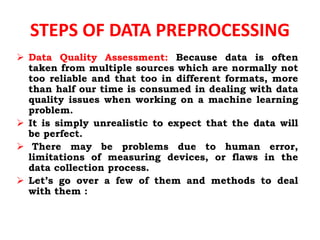

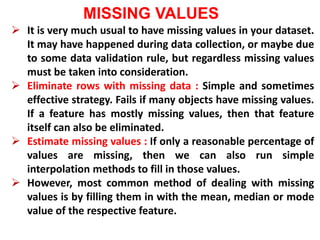

Data preprocessing transforms raw data into a format suitable for machine learning algorithms. It includes cleaning the data by handling missing values, outliers, and inconsistencies. Dimensionality reduction techniques such as principal component analysis (PCA) reduce the number of features by constructing new features that are linear combinations of the originals. Feature encoding converts categorical features into numeric values that algorithms can process, for example through one-hot encoding. The goal of preprocessing is to prepare data so that machine learning algorithms can more readily pick up the features and patterns in it.
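Two of the steps described above, filling in missing values and one-hot encoding, can be sketched in plain Python. This is a minimal illustration rather than a recommended pipeline: the helper names `impute_median` and `one_hot` and the toy data are hypothetical, and median imputation is only one of several possible strategies for missing values.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def one_hot(values):
    """Return the sorted category list and one 0/1 indicator row per value."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


# Hypothetical toy data: an age column with one missing entry
# and a categorical colour column.
ages = [22, None, 35, 41]
colors = ["red", "green", "red", "blue"]

clean_ages = impute_median(ages)        # missing age replaced by the median
categories, encoded = one_hot(colors)   # one indicator column per colour

print(clean_ages)
print(categories)
print(encoded)
```

In practice, libraries such as pandas and scikit-learn provide equivalents of these steps, along with PCA. The hand-written version is here only to make the transformations explicit.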