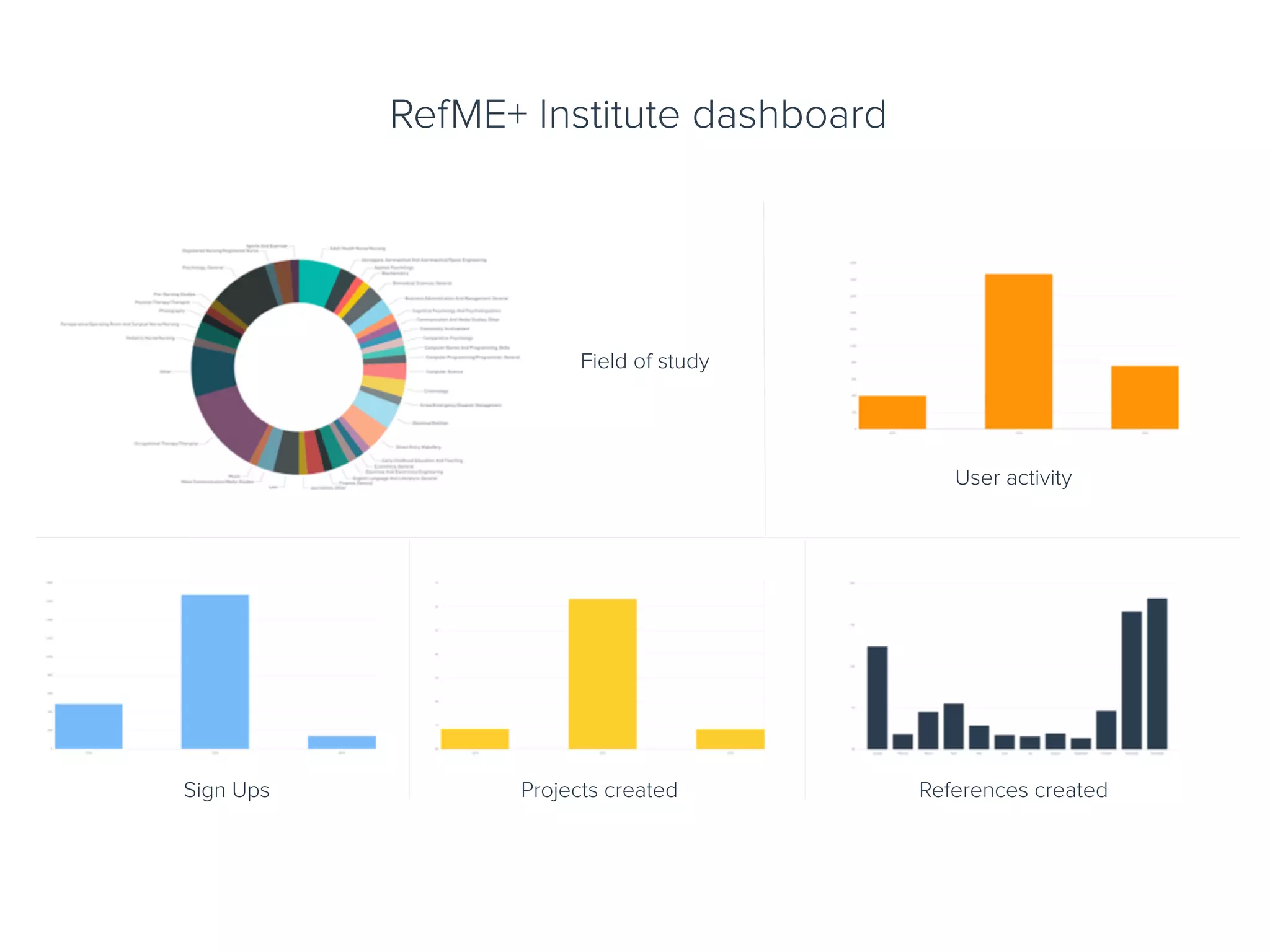

The document discusses using data and learning analytics to inform library investments and improve the student learning experience. It outlines a workshop on collecting and analyzing data points such as library usage, citations saved in reference managers, and quotes taken from readings. The goal is to move beyond typical metrics such as satisfaction surveys and attendance toward actual learning data that can better predict student outcomes and transform support. Privacy, transparency, and monitoring versus engagement are important ethical considerations to discuss.
